Method for realizing extrasensory experience by retrieving video content

A technology relating to video content, applied in the fields of super-sensing device mapping and video event retrieval, which achieves automation and improves retrieval efficiency.

Embodiment Construction

[0030] The present invention will be described in further detail below in conjunction with the accompanying drawings.

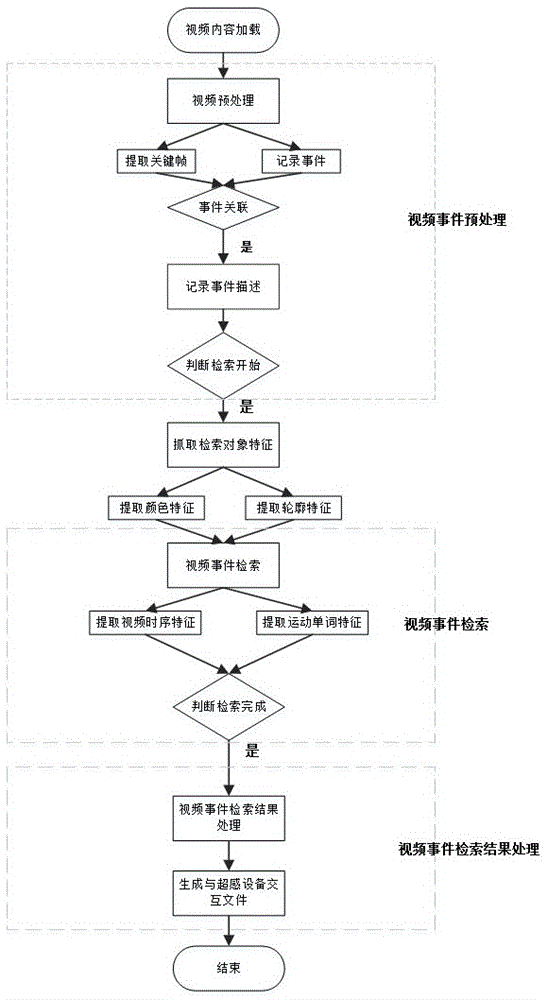

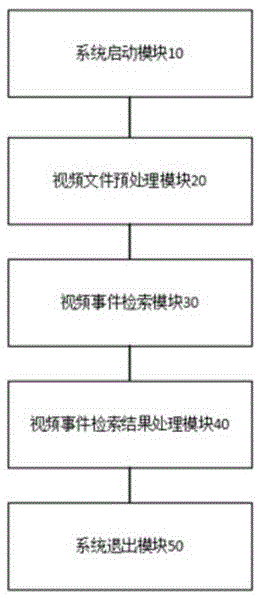

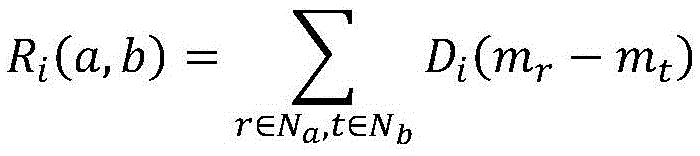

[0031] Referring to Figure 1, the present invention comprises three steps: video preprocessing, video event retrieval, and processing of the video event retrieval results. In video preprocessing, the video is divided into multiple analysis units with the video frame as the minimum unit; representative semantic features are extracted for each unit, its semantic information is derived from those features, and a semantic model is established from the resulting semantic information. The description of a video event mainly covers two aspects: the object involved in the event (Who) and the development process of the event (How). The method described in this application studies three aspects: the description and feature extraction of dynamic event information, the establishment of a motion dictionary, and resolving the influence that interactions between events have on each event. For the description...
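The three steps can be illustrated with a minimal Python sketch. It is not the patented method, only an illustration under stated assumptions: each frame is assumed to be already reduced to a numeric feature vector, fixed-length groups of frames serve as analysis units, the event object (Who) label for each unit is assumed to come from some external detector, and the motion dictionary is assumed to be a hypothetical set of named prototype motion vectors. Each unit's event process (How) is obtained by matching its mean frame-to-frame difference to the nearest dictionary entry (a simple nearest-codeword quantization swapped in for illustration). All function names and dictionary words are hypothetical.

```python
from dataclasses import dataclass
from math import dist
from typing import Dict, List, Sequence, Tuple

Frame = Sequence[float]  # hypothetical per-frame feature vector (assumption)


@dataclass
class Unit:
    start: int  # index of the first frame in the analysis unit
    end: int    # index one past the last frame
    who: str    # event object label (assumed to come from an external detector)
    how: str    # motion-dictionary word describing the event process


def motion_feature(frames: Sequence[Frame]) -> List[float]:
    """Mean frame-to-frame difference: a crude stand-in for dynamic event information."""
    dims = len(frames[0])
    if len(frames) < 2:
        return [0.0] * dims
    diffs = [[b[i] - a[i] for i in range(dims)] for a, b in zip(frames, frames[1:])]
    return [sum(d[i] for d in diffs) / len(diffs) for i in range(dims)]


def nearest_word(feature: List[float], dictionary: Dict[str, List[float]]) -> str:
    """Quantize a motion feature to the closest entry of the (hypothetical) motion dictionary."""
    return min(dictionary, key=lambda word: dist(feature, dictionary[word]))


def preprocess(frames: Sequence[Frame], who_labels: Sequence[str],
               dictionary: Dict[str, List[float]], unit_len: int = 4) -> List[Unit]:
    """Step 1: split the video into fixed-length analysis units and label each one."""
    units = []
    for start in range(0, len(frames), unit_len):
        end = min(start + unit_len, len(frames))
        how = nearest_word(motion_feature(frames[start:end]), dictionary)
        units.append(Unit(start, end, who_labels[start // unit_len], how))
    return units


def retrieve(units: List[Unit], who: str, how: str) -> List[Unit]:
    """Step 2: select the units whose Who/How labels match the query."""
    return [u for u in units if u.who == who and u.how == how]


def merge_results(hits: List[Unit]) -> List[Tuple[int, int]]:
    """Step 3: merge adjacent matching units into contiguous frame spans."""
    spans: List[Tuple[int, int]] = []
    for u in hits:
        if spans and spans[-1][1] == u.start:
            spans[-1] = (spans[-1][0], u.end)
        else:
            spans.append((u.start, u.end))
    return spans


# Usage with made-up data: 8 frames of rightward motion, then 4 stationary frames.
dictionary = {"still": [0.0, 0.0], "move_right": [1.0, 0.0], "move_up": [0.0, 1.0]}
frames = [[float(i), 0.0] for i in range(8)] + [[7.0, 0.0]] * 4
units = preprocess(frames, ["car", "car", "car"], dictionary)
print(merge_results(retrieve(units, "car", "move_right")))  # → [(0, 8)]
```

Merging adjacent hits in the last step reflects the idea that an event usually spans several consecutive analysis units; a real system would also need to handle interactions between overlapping events, which this sketch ignores.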