Human face comparison method and device

A face comparison and face image technology, applied in the field of face comparison, that addresses problems such as large data volumes, high cost, and the workload of repeated checks, and achieves accurate locking.



Examples

Embodiment 1

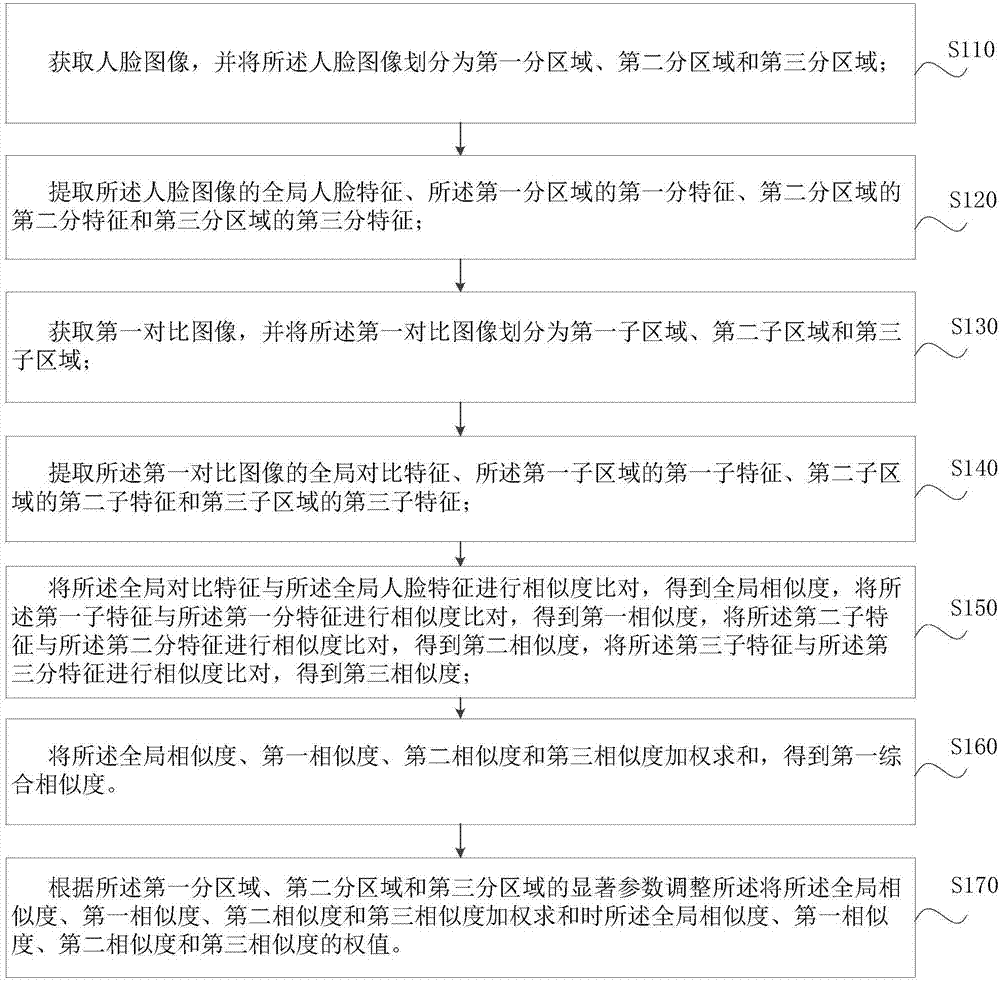

[0060] As shown in Figure 1, a method for face comparison comprises the following steps:

[0061] Step S110, acquiring a face image, and dividing the face image into a first sub-region, a second sub-region and a third sub-region.

[0062] Specifically, the first sub-region, the second sub-region and the third sub-region are each one or more human face regions such as the eye region, nose region, mouth region, ear region, cheek region or forehead region, and the first sub-region, the second sub-region and the third sub-region are different from each other. The first sub-region, the second sub-region and the third sub-region respectively correspond to the first sub-area, the second sub-area and the third sub-area. For example, the first sub-region and the first sub-area are the eye area, the second sub-region and the second sub-area are the nose area, and the third sub-region and the third sub-area are the face area including the cheek area and the ear area. Of course, they may also in...
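The division in step S110 can be illustrated with a minimal sketch. It assumes the bounding box of each sub-region is already known; the text does not say how the regions are located, so the `crop` and `divide_face` helpers, the box format and the toy image are all hypothetical.

```python
from typing import Dict, List, Tuple

Image = List[List[int]]          # grayscale image as rows of pixel values
Box = Tuple[int, int, int, int]  # (top, left, bottom, right), bottom/right exclusive


def crop(image: Image, box: Box) -> Image:
    """Return the pixels of `image` that fall inside `box`."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]


def divide_face(image: Image, boxes: Dict[str, Box]) -> Dict[str, Image]:
    """Split a face image into named sub-regions (step S110).

    `boxes` maps a sub-region name to its bounding box. The boxes are
    assumed inputs; locating them is outside the scope of this sketch.
    """
    # The sub-regions must differ from each other.
    if len(set(boxes.values())) != len(boxes):
        raise ValueError("sub-regions must be different from each other")
    return {name: crop(image, box) for name, box in boxes.items()}


# Toy 6x6 "face" whose pixel value encodes its position (row * 10 + column).
face = [[r * 10 + c for c in range(6)] for r in range(6)]
regions = divide_face(face, {
    "first": (0, 0, 2, 6),   # e.g. eye area
    "second": (2, 2, 4, 4),  # e.g. nose area
    "third": (2, 0, 6, 2),   # e.g. cheek and ear area
})
```

Keeping the sub-regions in a dictionary keyed by name makes it straightforward to pair each one with the matching sub-area of a comparison image later.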

Embodiment 2

[0093] As shown in Figure 5, the method for face comparison includes the following steps:

[0094] Step S210, acquiring a face image, and dividing the face image into a first sub-region, a second sub-region and a third sub-region.

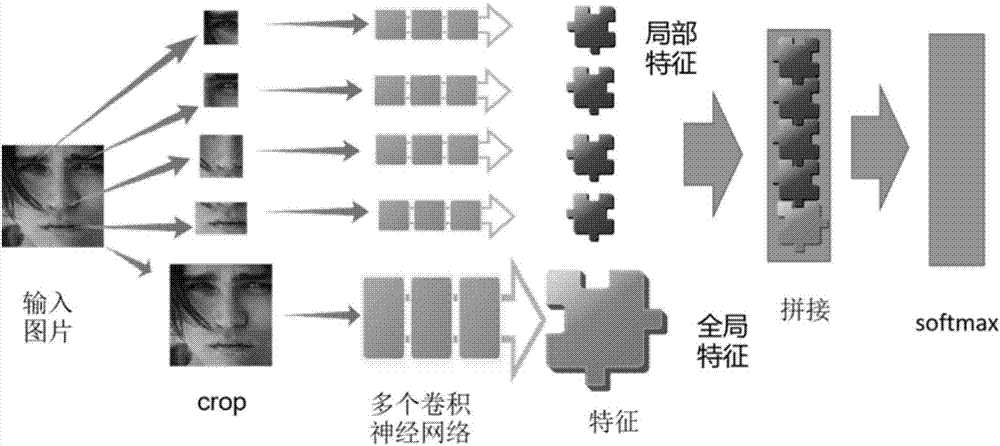

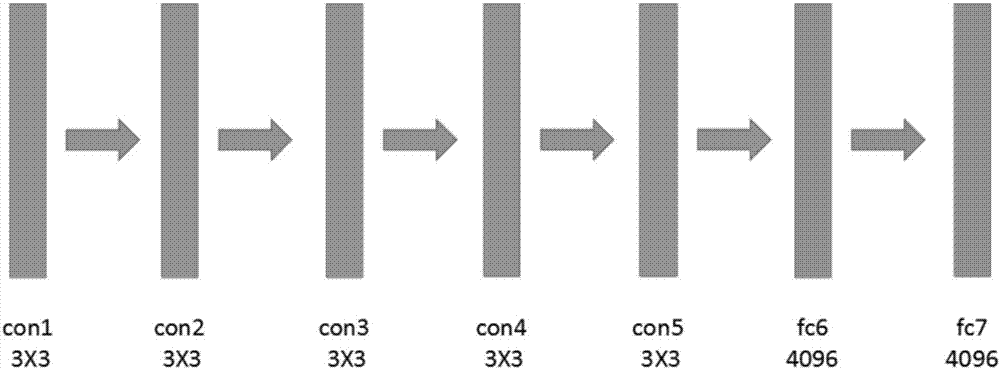

[0095] Step S220, extracting global face features of the face image, first sub-features of the first sub-region, second sub-features of the second sub-region, and third sub-features of the third sub-region.

[0096] Step S230, acquiring a first comparison image, and dividing the first comparison image into a first sub-region, a second sub-region and a third sub-region.

[0097] In this embodiment, the first comparison image is acquired from a comparison library that contains a plurality of comparison images. The comparison library is the aforementioned large library, which includes a large amount of face information, such as snapshots and ID photos of N individuals....
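Steps S220 and S230 can be sketched as follows. The text shown here names neither the feature extractor nor the similarity measure, so both are assumptions: a normalized intensity histogram stands in for the real features, and cosine similarity stands in for the comparison. All function names and the two-entry library are hypothetical, and the sub-regions are passed in already divided.

```python
import math
from typing import Dict, List

Image = List[List[int]]            # grayscale image as rows of 8-bit pixel values
Regions = Dict[str, Image]         # sub-region name -> cropped pixels
Features = Dict[str, List[float]]  # "global" or sub-region name -> feature vector


def histogram_feature(image: Image, bins: int = 4) -> List[float]:
    """Stand-in feature: normalized histogram of 8-bit pixel intensities."""
    pixels = [p for row in image for p in row]
    counts = [0] * bins
    for p in pixels:
        counts[min(p * bins // 256, bins - 1)] += 1
    total = len(pixels) or 1
    return [c / total for c in counts]


def extract_features(image: Image, regions: Regions) -> Features:
    """One global feature plus one sub-feature per sub-region (step S220)."""
    features = {"global": histogram_feature(image)}
    for name, region in regions.items():
        features[name] = histogram_feature(region)
    return features


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def compare_with_library(query: Features,
                         library: Dict[str, Features]) -> Dict[str, Dict[str, float]]:
    """Score the query against every comparison image, feature by feature."""
    return {
        image_id: {name: cosine(query[name], feats[name]) for name in query}
        for image_id, feats in library.items()
    }


# Query face image and its (already divided) sub-regions.
face = [[0, 64], [128, 255]]
face_regions = {"first": [[0, 64]], "second": [[128]], "third": [[255]]}
query = extract_features(face, face_regions)

# Hypothetical comparison library keyed by image id.
bright = [[255, 255], [255, 255]]
bright_regions = {"first": [[255, 255]], "second": [[255]], "third": [[255]]}
library = {
    "id_photo_1": extract_features(face, face_regions),
    "snapshot_2": extract_features(bright, bright_regions),
}
scores = compare_with_library(query, library)
```

Returning a separate score for the global feature and for each sub-feature, rather than a single number, leaves open how the scores are combined, which this passage does not specify.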

Embodiment 3

[0109] As shown in Figure 6, the device for face comparison includes:

[0110] The first acquiring module 110 is configured to acquire a human face image, and divide the human face image into a first sub-region, a second sub-region and a third sub-region;

[0111] The first extraction module 120 is configured to extract the global face feature of the human face image, the first sub-feature of the first sub-region, the second sub-feature of the second sub-region and the third sub-feature of the third sub-region;

[0112] The second acquisition module 130 is configured to acquire a first comparison image, and divide the first comparison image into a first sub-area, a second sub-area and a third sub-area;

[0113] The second extraction module 140 is configured to extract the global comparison feature of the first comparison image, the first sub-feature of the first sub-region, the second sub-feature of the second sub-region and the third sub-feature of the third sub-region ...
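The four modules listed above can be wired together as in the sketch below. It covers only modules 110 to 140; the class name, method names, and the toy `divide_rows` and `mean_features` helpers are hypothetical stand-ins, not the device's actual division or extraction logic.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Image = List[List[int]]
Regions = Dict[str, Image]
Features = Dict[str, List[float]]


@dataclass
class FaceComparisonDevice:
    divide: Callable[[Image], Regions]             # used by modules 110 and 130
    extract: Callable[[Image, Regions], Features]  # used by modules 120 and 140

    def first_acquiring_module(self, face_image: Image) -> Regions:
        """Module 110: divide the face image into three sub-regions."""
        return self.divide(face_image)

    def first_extraction_module(self, face_image: Image, regions: Regions) -> Features:
        """Module 120: global face feature plus three sub-features."""
        return self.extract(face_image, regions)

    def second_acquisition_module(self, comparison_image: Image) -> Regions:
        """Module 130: divide the first comparison image the same way."""
        return self.divide(comparison_image)

    def second_extraction_module(self, comparison_image: Image, regions: Regions) -> Features:
        """Module 140: global comparison feature plus three sub-features."""
        return self.extract(comparison_image, regions)

    def run(self, face_image: Image, comparison_image: Image) -> Tuple[Features, Features]:
        """Run modules 110 to 140 in order and return both feature sets."""
        face_regions = self.first_acquiring_module(face_image)
        face_features = self.first_extraction_module(face_image, face_regions)
        cmp_regions = self.second_acquisition_module(comparison_image)
        cmp_features = self.second_extraction_module(comparison_image, cmp_regions)
        return face_features, cmp_features


def divide_rows(image: Image) -> Regions:
    """Toy division (assumption): one row of pixels per sub-region."""
    return {"first": image[0:1], "second": image[1:2], "third": image[2:3]}


def mean_features(image: Image, regions: Regions) -> Features:
    """Toy extractor (assumption): mean pixel value as a one-element feature."""
    def mean(img: Image) -> float:
        pixels = [p for row in img for p in row]
        return sum(pixels) / len(pixels)
    features = {"global": [mean(image)]}
    features.update({name: [mean(region)] for name, region in regions.items()})
    return features


device = FaceComparisonDevice(divide=divide_rows, extract=mean_features)
face_features, comparison_features = device.run(
    [[1, 1], [2, 2], [3, 3]],
    [[3, 3], [2, 2], [1, 1]],
)
```

Injecting the division and extraction routines as callables keeps the module boundaries visible while allowing the same routine to serve both the face image and the comparison image.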
