RNN-based automatic picture description generation method

A technology for automatically generating picture descriptions, applicable to instruments, character and pattern recognition, computer components, and related fields. It addresses the problems of weak semantic expression and poor utilization, improving performance and reducing complexity.

Embodiment

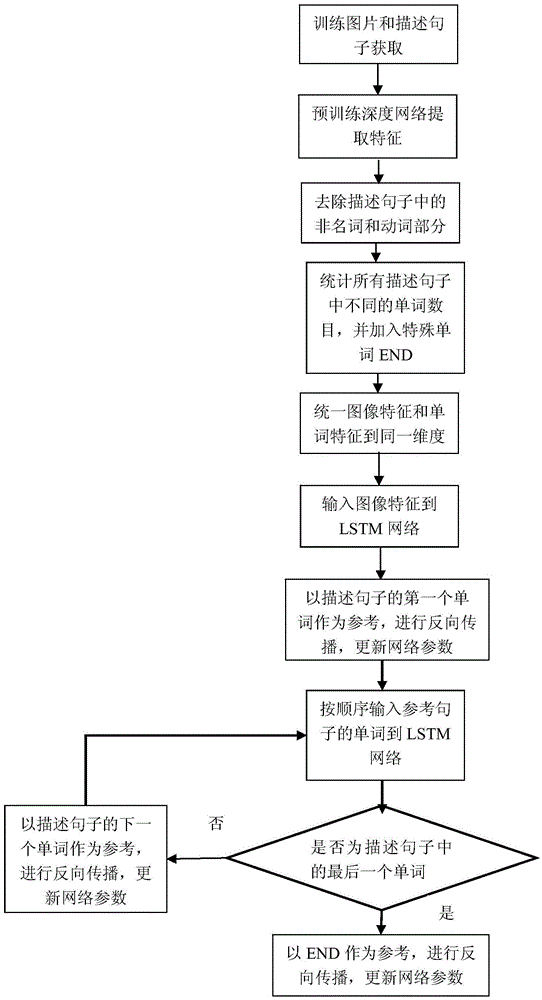

[0034] The RNN-based method for automatically generating image descriptions in this embodiment, as shown in Figure 1, includes the following steps:

[0035] S1 The training process is carried out on a computer:

[0036] S1.1 Collect the data set: download the MS COCO database from http://mscoco.org/. The database contains 300,000 pictures, each with 5 sentences describing the content of the image;
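As a rough illustration of step S1.1, the sketch below groups the description sentences by image. It assumes the annotations are available as a COCO-style captions dictionary with `images` and `annotations` lists; the function name `group_captions` and the sample data are illustrative and do not come from the patent.

```python
from collections import defaultdict


def group_captions(coco):
    """Map each image id to the list of its caption strings."""
    captions = defaultdict(list)
    for ann in coco["annotations"]:
        captions[ann["image_id"]].append(ann["caption"].strip())
    # Return a plain dict covering every listed image, even one without captions
    return {img["id"]: captions.get(img["id"], []) for img in coco["images"]}


# Tiny hand-made sample (hypothetical data in the assumed layout)
sample = {
    "images": [{"id": 1, "file_name": "a.jpg"}, {"id": 2, "file_name": "b.jpg"}],
    "annotations": [
        {"image_id": 1, "id": 10, "caption": "A dog runs on grass. "},
        {"image_id": 1, "id": 11, "caption": "A brown dog outdoors."},
        {"image_id": 2, "id": 12, "caption": "Two people ride bikes."},
    ],
}
by_image = group_captions(sample)
```

With the real data set, the dictionary would typically come from `json.load` on the downloaded annotation file, and each image would be expected to carry 5 sentences.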

[0037] S1.2 Use a deep learning network (see the paper "ImageNet Classification with Deep Convolutional Neural Networks", Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton, NIPS 2012) to extract image features from each picture in the training set. This embodiment takes the m = 4096-dimensional output vector $F_i \in \mathbb{R}^{4096}$ of the network's last fully connected layer as the feature vector of the i-th image;
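The bookkeeping around step S1.2 can be sketched as follows. This is not the network itself: the CNN is abstracted as a callable `extract_fn`, and `fake_extractor` is a stand-in that only produces vectors of the right size. Both names, and the helper `build_feature_table`, are assumptions made for illustration. The sketch shows one feature vector per image, each checked against m = 4096.

```python
import random

M = 4096  # feature dimension stated in S1.2


def build_feature_table(image_ids, extract_fn, m=M):
    """Run extract_fn on every image id and check each vector has m entries."""
    features = {}
    for image_id in image_ids:
        vec = list(extract_fn(image_id))
        if len(vec) != m:
            raise ValueError(f"image {image_id}: expected {m} dims, got {len(vec)}")
        features[image_id] = vec
    return features


def fake_extractor(image_id):
    """Stand-in for the CNN (assumption): deterministic non-negative values."""
    rng = random.Random(image_id)
    return [max(0.0, rng.gauss(0.0, 1.0)) for _ in range(M)]


features = build_feature_table([1, 2, 3], fake_extractor)
```

In practice `extract_fn` would wrap a forward pass through the trained network, returning the activations of the chosen fully connected layer.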

[0038] S1.3 Part-of-speech screening: collect the Level 4 and Level 6 English vocabulary lists, together with the part of speech of each word;
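One possible way to hold the vocabulary collected in S1.3 is a word-to-part-of-speech lookup, sketched below. The tab-separated `word<TAB>pos` input layout, the tag names, and the helpers `load_pos_lexicon` and `tag_caption` are all assumptions. The sketch only shows the lookup, not any particular screening rule.

```python
def load_pos_lexicon(lines):
    """Parse 'word<TAB>pos' lines into a lowercase word -> part-of-speech dict."""
    lexicon = {}
    for line in lines:
        line = line.strip()
        if not line or "\t" not in line:
            continue  # skip blank and malformed rows
        word, pos = line.split("\t", 1)
        lexicon[word.lower()] = pos.strip()
    return lexicon


def tag_caption(caption, lexicon):
    """Return (word, pos) pairs; words missing from the lexicon get None."""
    words = [w.strip(".,!?;:").lower() for w in caption.split()]
    return [(w, lexicon.get(w)) for w in words if w]


# Hypothetical vocabulary rows and caption
lexicon = load_pos_lexicon(["dog\tn", "run\tv", "brown\tadj", "", "bad row"])
tagged = tag_caption("A brown dog.", lexicon)
```

Here `tagged` pairs each caption word with its listed part of speech, so a later screening step can keep or drop words by tag.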

[0039] Perform part-of-speech screening f...
