Task and data scheduling method and device based on hybrid memory

A data scheduling technology for hybrid memory, applied in the field of data processing, which can solve the problem of high consumption

Examples

Embodiment 1

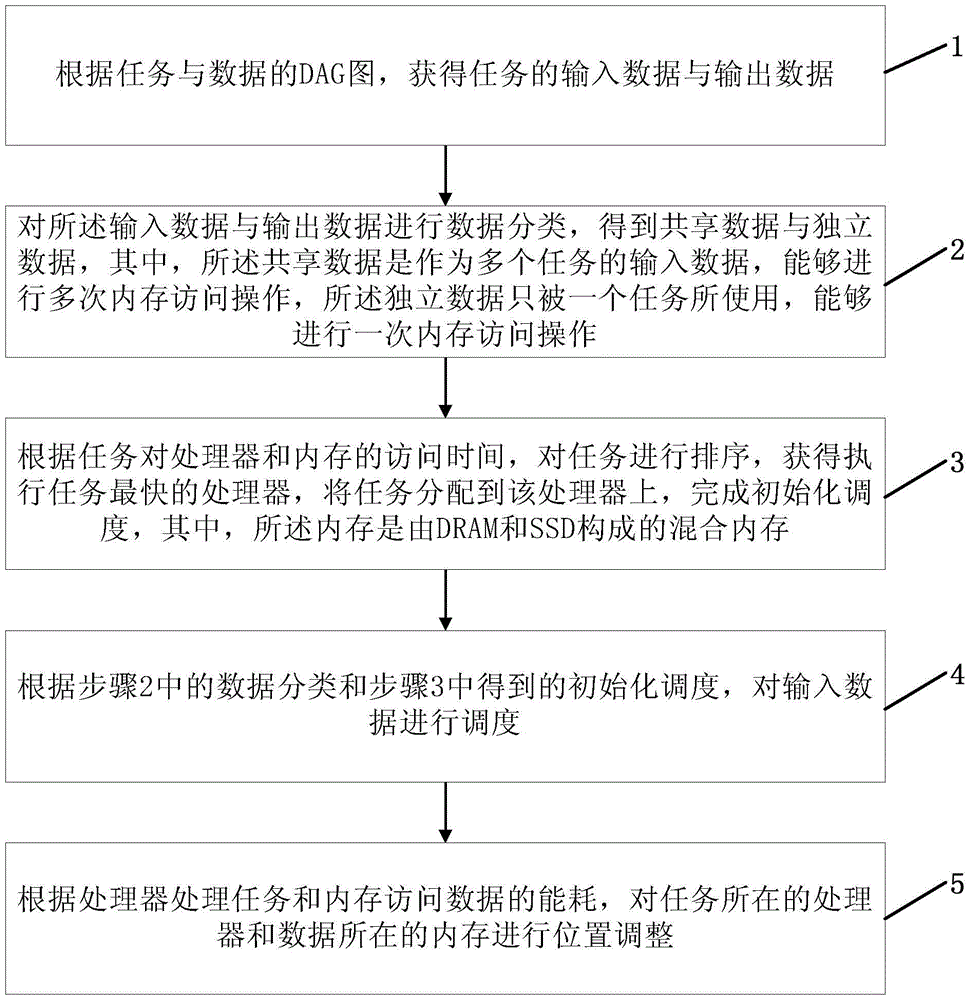

[0046] Figure 1 is an implementation flow chart of the hybrid memory-based task and data scheduling method provided by the embodiment of the present invention. As shown in Figure 1, the task and data scheduling method based on hybrid DRAM and SSD memory provided by the embodiment of the present invention includes the following process:

[0047] Step 1. Obtain the input data and output data of each task from the DAG of tasks and data.

[0048] Specifically, each task has its own data that it needs to read, some tasks also generate data, and some tasks use data generated by another task, so tasks and data have dependencies. A DAG (directed acyclic graph) can represent these dependencies between tasks. For example, for the edge v1->v2, if v1 produces data d1 and the input data of v2 is d1, then this dependency is the direction and c...
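The dependency rule in [0048] can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the patented method: the `tasks` table, the data names, and the helpers `build_dag` and `topological_order` are hypothetical. An edge producer->consumer is added whenever one task reads data that another task produces; Kahn's algorithm is then used only to confirm that the resulting graph is acyclic and to list the tasks in an order that respects the dependencies.

```python
from collections import defaultdict

# Hypothetical task table: the data each task reads (inputs) and produces (outputs).
tasks = {
    "v1": {"inputs": {"d0"}, "outputs": {"d1"}},
    "v2": {"inputs": {"d1"}, "outputs": {"d2"}},
    "v3": {"inputs": {"d0", "d1"}, "outputs": set()},
}


def build_dag(tasks):
    """Return sorted edges (producer, consumer, datum): consumer reads a datum producer writes."""
    producer = {}
    for name, io in tasks.items():
        for d in io["outputs"]:
            producer[d] = name
    edges = []
    for name, io in tasks.items():
        for d in sorted(io["inputs"]):
            src = producer.get(d)  # None for external input data such as d0
            if src is not None and src != name:
                edges.append((src, name, d))
    return sorted(edges)


def topological_order(tasks, edges):
    """Kahn's algorithm; raises ValueError if the dependencies form a cycle."""
    indeg = {t: 0 for t in tasks}
    succ = defaultdict(list)
    for src, dst, _ in edges:
        succ[src].append(dst)
        indeg[dst] += 1
    ready = sorted(t for t, k in indeg.items() if k == 0)
    order = []
    while ready:
        t = ready.pop(0)
        order.append(t)
        for nxt in succ[t]:
            indeg[nxt] -= 1
            if indeg[nxt] == 0:
                ready.append(nxt)
        ready.sort()
    if len(order) != len(tasks):
        raise ValueError("task dependencies contain a cycle")
    return order


edges = build_dag(tasks)
print(edges)
print(topological_order(tasks, edges))
```

Here v1 produces d1, which both v2 and v3 read, so the sketch yields the edges v1->v2 and v1->v3, matching the v1->v2 example in [0048].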

Embodiment 2

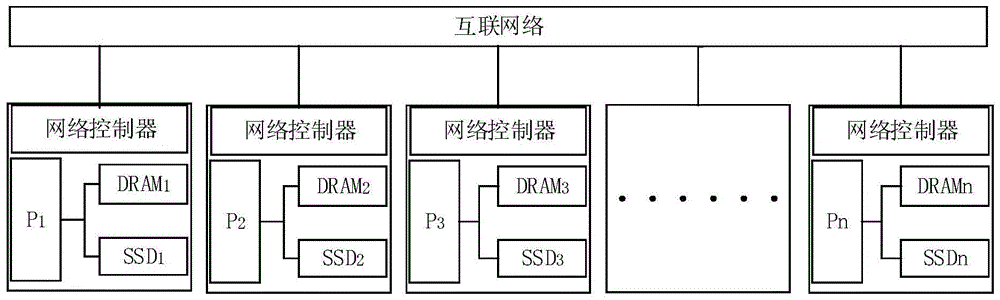

[0068] Figure 4 is a schematic structural diagram of a hybrid memory-based task and data scheduling device provided by an embodiment of the present invention. As shown in Figure 4, the task and data scheduling device based on hybrid DRAM and SSD memory provided by the embodiment of the present invention includes:

[0069] The data acquisition module is used to obtain the input data and output data of each task from the DAG of tasks and data;

[0070] The data classification module is used to classify the input data and output data into shared data and independent data, where shared data is the input data of multiple tasks and is subject to multiple memory access operations, while independent data is used by only one task and is subject to a single memory access operation;
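A minimal sketch of the classification rule in [0070], assuming the same hypothetical task table shape as above (sets of input and output data per task). Following the stated definition, a datum is treated as shared when it is the input of more than one task; every other datum, including output data that no task reads, is treated as independent. The function name `classify_data` is illustrative only.

```python
from collections import Counter

# Hypothetical task table: data read (inputs) and written (outputs) by each task.
tasks = {
    "t1": {"inputs": {"a", "b"}, "outputs": {"c"}},
    "t2": {"inputs": {"a"}, "outputs": {"d"}},
    "t3": {"inputs": {"c"}, "outputs": set()},
}


def classify_data(tasks):
    """Split all data into shared (input of 2+ tasks) and independent (everything else)."""
    readers = Counter()  # number of tasks that take each datum as input
    all_data = set()
    for io in tasks.values():
        readers.update(io["inputs"])
        all_data |= io["inputs"] | io["outputs"]
    shared = {d for d in all_data if readers[d] > 1}
    independent = all_data - shared
    return shared, independent


shared, independent = classify_data(tasks)
print(sorted(shared), sorted(independent))
```

In this example only `a` is read by two tasks, so it is the one shared datum; `b`, `c`, and `d` are each read by at most one task and are classified as independent.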

[0071] The initialization scheduling module is used to obtain, for each task, the processor on which the task executes fastest, according to the task's access time to the processor and the memory...
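Because [0071] is truncated, the exact cost model is not recoverable. The following is a hedged sketch under an assumed model: a task's estimated time on a processor is its compute time there plus, for each datum it uses, the time for that processor to access the memory (DRAM or SSD) holding the datum. All tables (`EXEC_TIME`, `ACCESS_TIME`, `PLACEMENT`, `TASK_DATA`) and numbers are hypothetical.

```python
# Hypothetical compute time of each task on each processor (arbitrary units).
EXEC_TIME = {
    "t1": {"p0": 5.0, "p1": 3.0},
    "t2": {"p0": 6.0, "p1": 1.0},
}
# Hypothetical time for a processor to access one datum held in a given memory.
ACCESS_TIME = {
    ("p0", "DRAM"): 1.0, ("p0", "SSD"): 4.0,
    ("p1", "DRAM"): 2.0, ("p1", "SSD"): 6.0,
}
# Hypothetical current placement of each datum in the hybrid memory.
PLACEMENT = {"a": "DRAM", "b": "SSD", "c": "DRAM"}
# Data each task accesses.
TASK_DATA = {"t1": ["a", "b"], "t2": ["a", "c"]}


def estimated_time(task: str, proc: str) -> float:
    """Assumed model: compute time plus the access time of every datum the task uses."""
    access = sum(ACCESS_TIME[(proc, PLACEMENT[d])] for d in TASK_DATA[task])
    return EXEC_TIME[task][proc] + access


def initial_schedule() -> dict[str, str]:
    """Map each task to the processor with the smallest estimated time (first wins ties)."""
    return {
        task: min(procs, key=lambda p: estimated_time(task, p))
        for task, procs in EXEC_TIME.items()
    }


print(initial_schedule())
```

Under these numbers t1 costs 10 on p0 versus 11 on p1, and t2 costs 8 on p0 versus 5 on p1, so the sketch assigns t1 to p0 and t2 to p1. Making the access time depend on the (processor, memory) pair is what lets data placement influence the choice; a model where access time depends only on the memory would reduce to picking the fastest compute time.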
