Method for generating image convolution features based on top-layer weights

A convolution and image technology, applied in the field of automatically obtaining region weights for image content, which solves problems such as poor image quality, heavy surrounding noise, and distortion of clothing and objects, and achieves the effects of ensuring accuracy, ensuring robustness, and improving performance.
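As a minimal sketch of how a region weight map could be applied to a top-layer convolutional feature map, the Python below collapses a C×H×W map into a C-dimensional descriptor by weighted averaging over spatial positions. The function name `region_weighted_pool` and the nested-list layout are illustrative assumptions, not taken from the patent, and the sketch leaves open how the weight map itself is obtained.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # [channel][row][col]
WeightMap = List[List[float]]         # [row][col]


def region_weighted_pool(features: FeatureMap, weights: WeightMap) -> List[float]:
    """Hypothetical helper: collapse a C x H x W feature map into a C-dim
    vector, weighting each spatial position by weights[row][col]."""
    height, width = len(weights), len(weights[0])
    for channel in features:
        if len(channel) != height or any(len(row) != width for row in channel):
            raise ValueError("feature map and weight map must share H x W")

    total = sum(sum(row) for row in weights)
    if total <= 0:
        raise ValueError("weight map must have a positive sum")

    pooled = []
    for channel in features:
        acc = 0.0
        for r, row in enumerate(channel):
            for c, value in enumerate(row):
                acc += weights[r][c] * value
        # Normalise by the total weight so the result is a weighted mean.
        pooled.append(acc / total)
    return pooled
```

With a uniform weight map this reduces to ordinary global average pooling; concentrating all the weight on one cell reads out only that position's activations.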


Detailed Description of the Embodiments

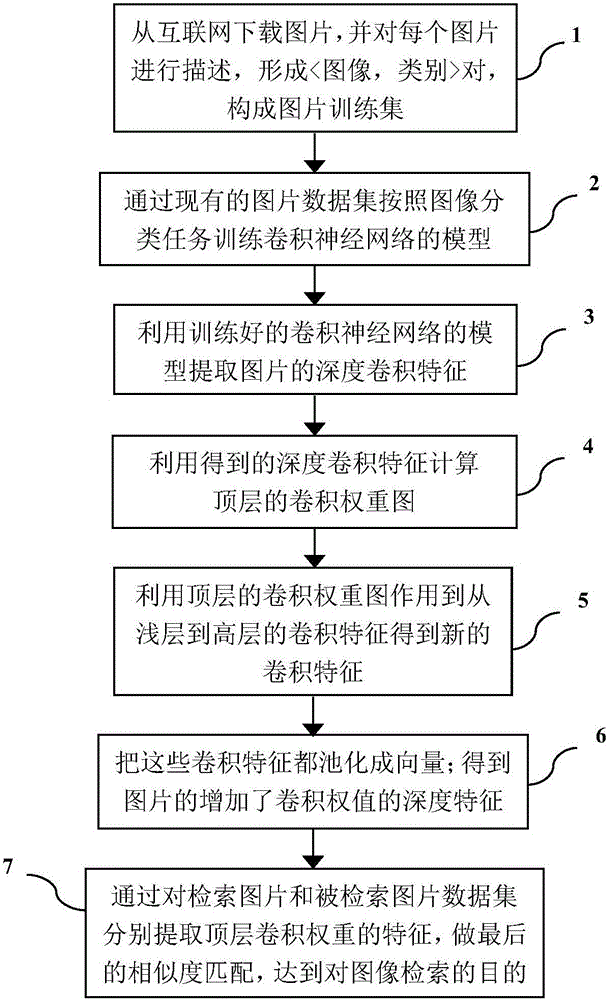

[0043] The present invention is described in further detail below with reference to the accompanying drawings:

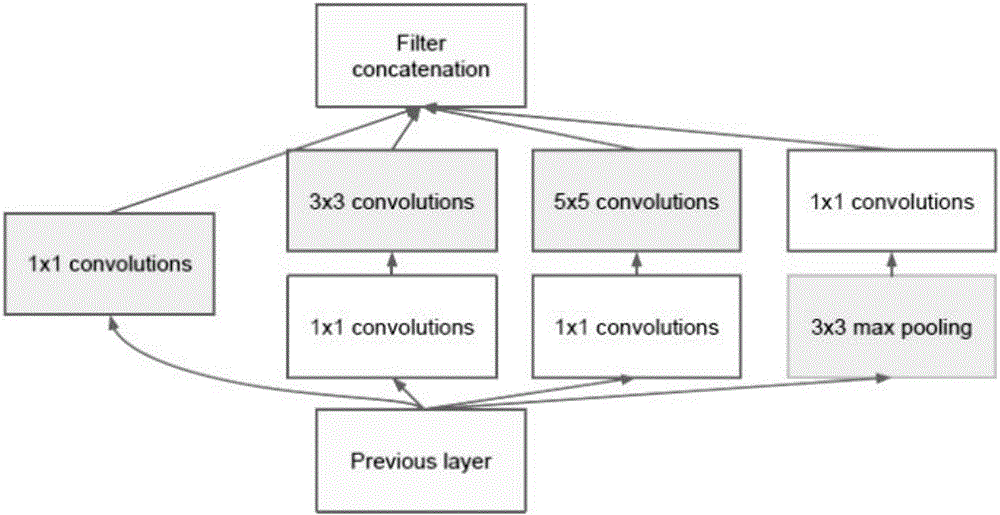

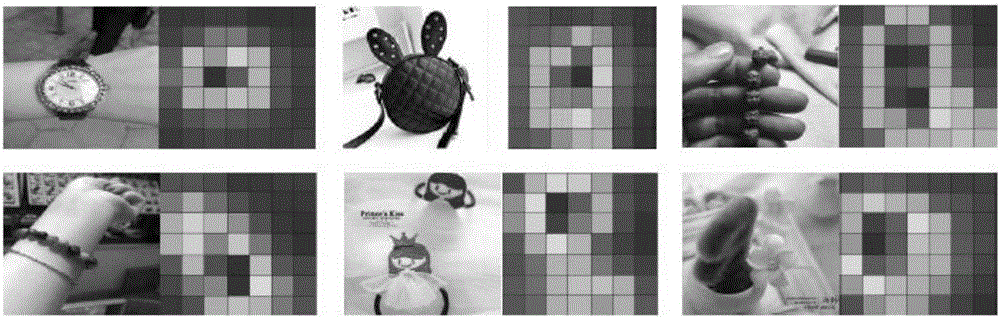

[0044] The top-layer convolution weights proposed by the present invention are not derived from manual prior knowledge; they are learned automatically by the convolutional neural network. As a result, the method applies not only when the product lies in the central region of the image but also when it appears at any position. In the present invention, the deep convolutional network GoogLeNet is structured as a sequence of layers running from the input layer to the loss layer. The unit nodes in the network are divided into four types, each unit node representing one network layer: the first type represents the input layer and the loss layer; the second type represents the convolutional layer (conv layer) and the fully connected layer; the third type of node represents the...
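The contrast drawn above, between a hand-crafted prior that assumes the product is centred and weights derived from the network itself, can be illustrated with a small sketch. The paragraph is truncated before it explains how the weights are learned, so the feature-driven scheme below (a 1×1 channel projection followed by a spatial softmax) is a hypothetical stand-in, not the patent's method; `center_prior_weights`, `feature_driven_weights`, `argmax_2d`, and `channel_weights` are all illustrative names, and `channel_weights` is set by hand here rather than trained.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]  # [row][col]


def center_prior_weights(height: int, width: int, sigma: float = 1.0) -> Grid:
    """Hand-crafted baseline: a Gaussian that peaks at the image centre,
    i.e. the 'product is in the middle' prior."""
    cy, cx = (height - 1) / 2, (width - 1) / 2
    return [[math.exp(-((r - cy) ** 2 + (c - cx) ** 2) / (2 * sigma ** 2))
             for c in range(width)] for r in range(height)]


def feature_driven_weights(features: List[Grid], channel_weights: List[float]) -> Grid:
    """Hypothetical data-driven variant: score each position with a 1x1
    projection over channels, then normalise with a spatial softmax."""
    height, width = len(features[0]), len(features[0][0])
    scores = [[sum(w * fmap[r][c] for w, fmap in zip(channel_weights, features))
               for c in range(width)] for r in range(height)]
    peak = max(max(row) for row in scores)  # subtract max for numerical stability
    exps = [[math.exp(s - peak) for s in row] for row in scores]
    total = sum(sum(row) for row in exps)
    return [[e / total for e in row] for row in exps]


def argmax_2d(grid: Grid) -> Tuple[int, int]:
    """Return (row, col) of the largest value (ties go to the later cell)."""
    _, r, c = max((v, r, c) for r, row in enumerate(grid) for c, v in enumerate(row))
    return r, c
```

On a 3×3 map whose only activation sits in the top-left corner, the centre prior still peaks at (1, 1) while the feature-driven map peaks at (0, 0); this is the position independence the paragraph attributes to learned weights.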
