A Nonlinear Unmixing Method for Hyperspectral Imagery Based on Bilinear Mixture Model

A hyperspectral image and mixture model technique, applied in character and pattern recognition, instruments, scene recognition, and related fields. It addresses the problems of over-fitted results, local minima, and complex calculations, and achieves good robustness, reduced algorithm complexity, and shorter computation time.


Embodiment Construction

[0066] In the following, simulation data and actual remote sensing image data are used as examples to illustrate the specific implementation of the present invention.

[0067] The nonlinear unmixing algorithm of the present invention for hyperspectral remote sensing images, which is based on the bilinear mixture model, is denoted GEAB-FCLS.

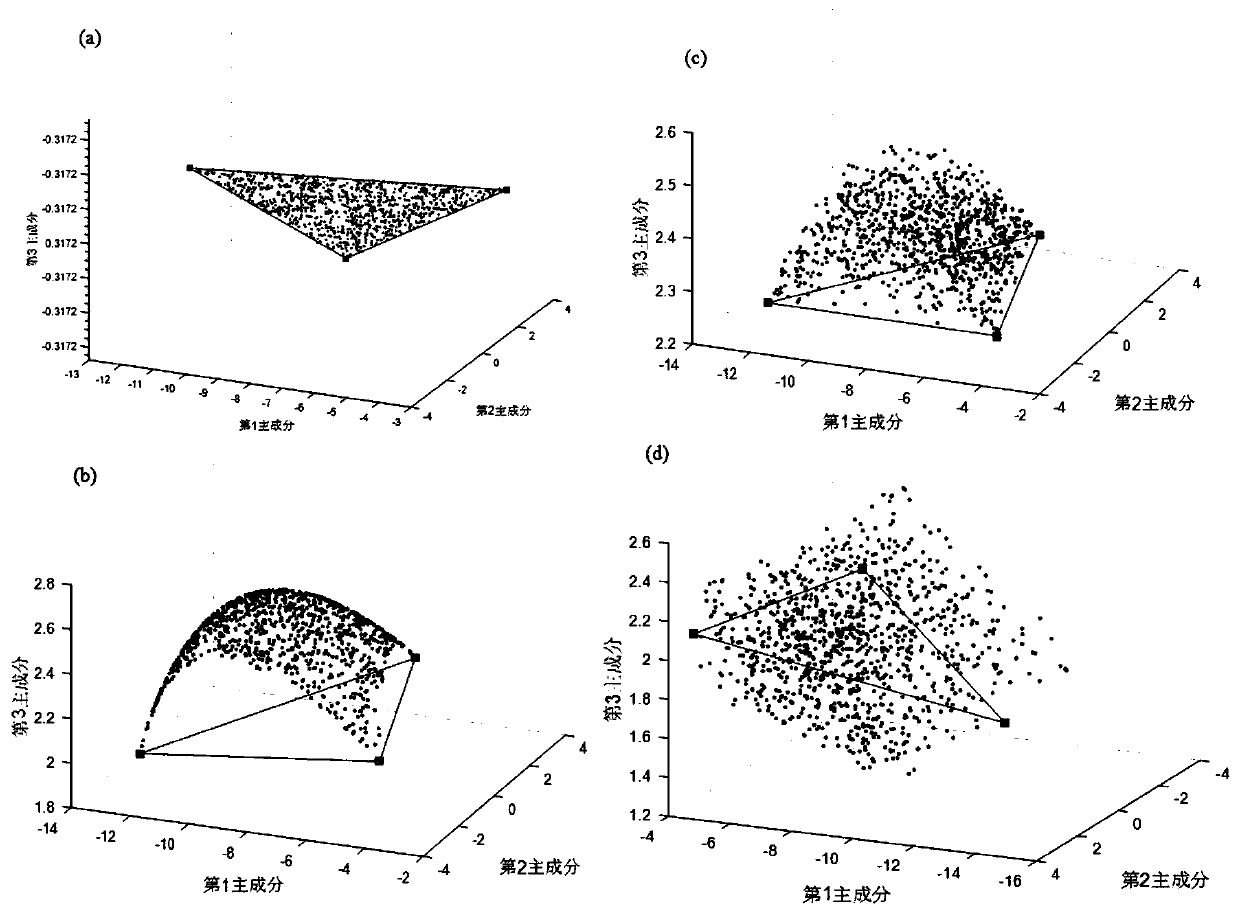

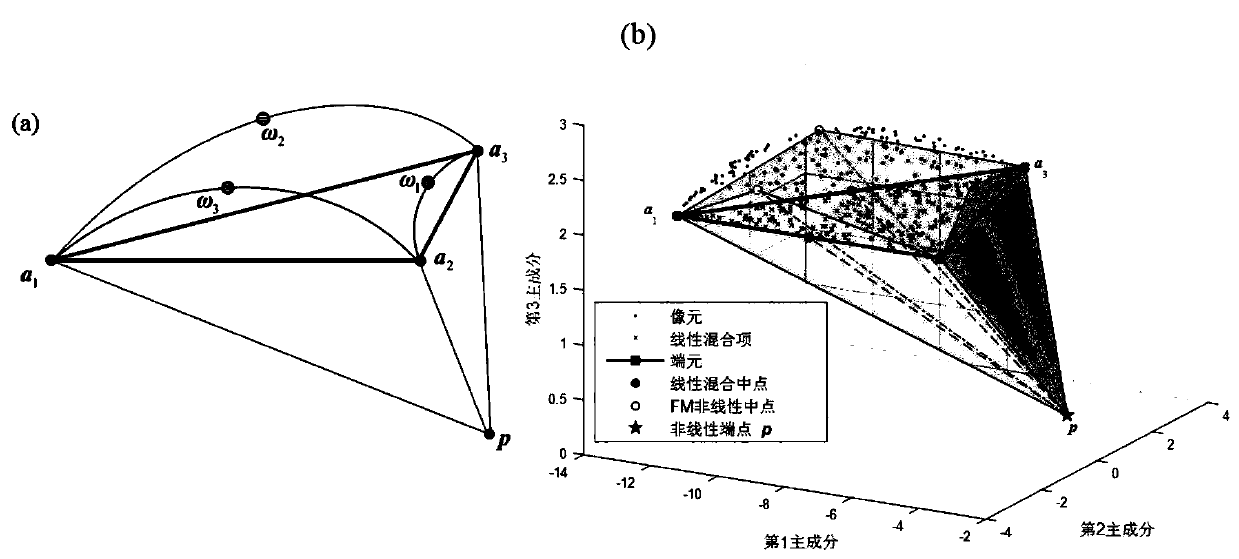

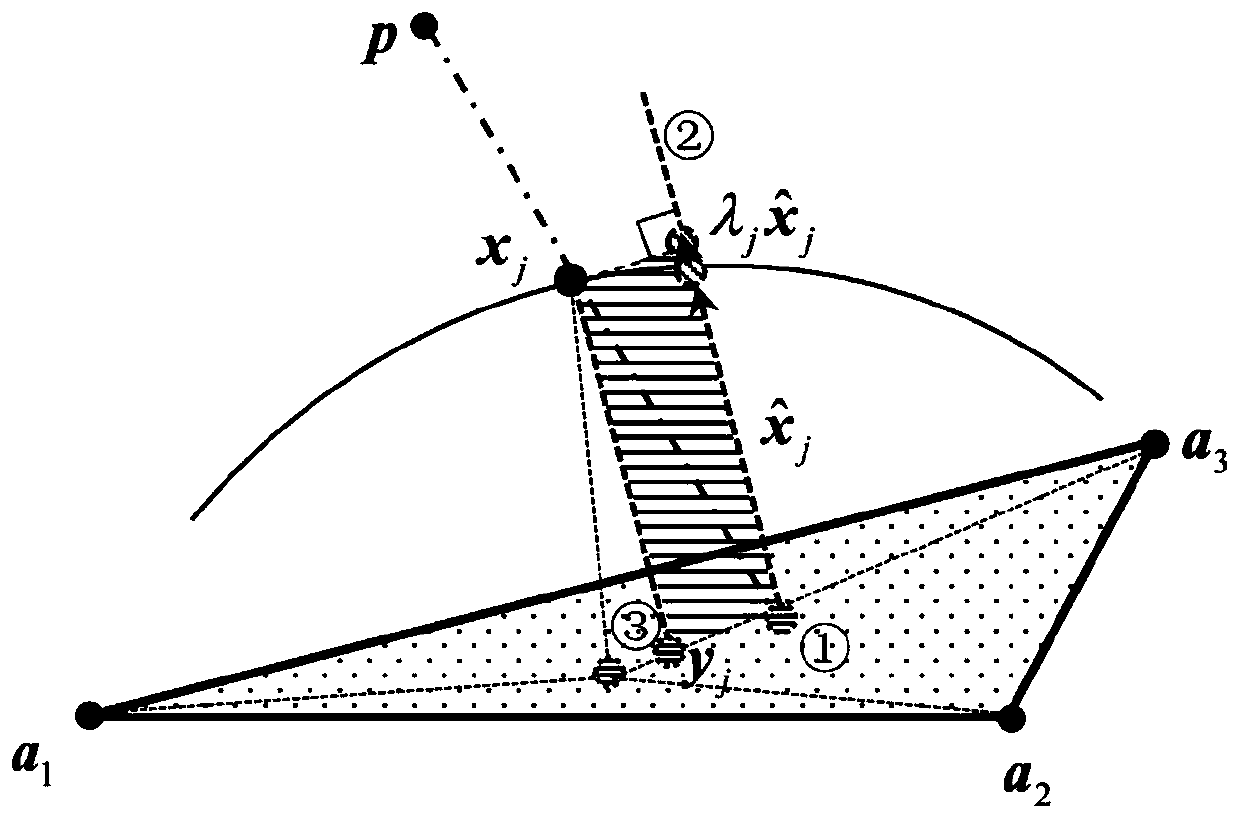

[0068] 1. Simulation data experiment

[0069] In this section, the performance of the GAEB-FCLS algorithm is compared with that of the linear abundance estimation algorithm FCLS [10]; the data-driven nonlinear unmixing method KFCLS [12], which is based on a Gaussian kernel (the kernel parameter is selected by cross-validation over the range 0.01-300); and the traditional solving algorithms corresponding to the three models FM, GBM and PPNM: Fan-FCLS [6], GBM-GDA [7] and PPNM-GDA [8]. Two metrics, the root mean square error (RMSE) of the abundances and the image reconstruction error (RE), are used to evaluate the accuracy of the abund...
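The two evaluation metrics named above can be illustrated with a minimal sketch. The text available here is truncated before it gives the exact formulas, so the definitions below are assumptions based on the forms commonly used in the unmixing literature: both metrics are taken as the square root of the mean squared element-wise difference, computed over pixels and endmembers for RMSE and over pixels and spectral bands for RE. The function names `rms_difference`, `abundance_rmse` and `reconstruction_error` are hypothetical, and plain nested lists are used only to keep the example self-contained.

```python
import math


def rms_difference(reference, estimate):
    """Square root of the mean squared element-wise difference.

    Both arguments are 2-D nested lists with one row per pixel.
    Assumed normalisation: the total number of elements (rows * columns).
    """
    if len(reference) != len(estimate):
        raise ValueError("inputs must have the same number of pixels")
    total = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        if len(ref_row) != len(est_row):
            raise ValueError("rows must have the same length")
        for ref_value, est_value in zip(ref_row, est_row):
            total += (ref_value - est_value) ** 2
            count += 1
    if count == 0:
        raise ValueError("inputs must not be empty")
    return math.sqrt(total / count)


def abundance_rmse(true_abundances, estimated_abundances):
    """Assumed RMSE: rows are pixels, columns are endmember abundances."""
    return rms_difference(true_abundances, estimated_abundances)


def reconstruction_error(observed_pixels, reconstructed_pixels):
    """Assumed RE: rows are pixels, columns are spectral bands."""
    return rms_difference(observed_pixels, reconstructed_pixels)


# Illustrative values only (two pixels, two endmembers); not data from the patent.
true_abundances = [[0.5, 0.5], [1.0, 0.0]]
estimated_abundances = [[0.5, 0.5], [0.8, 0.2]]
rmse = abundance_rmse(true_abundances, estimated_abundances)
```

RMSE requires ground-truth abundances, so under these assumptions it applies to the simulated data only, whereas RE compares each observed spectrum with the spectrum rebuilt from the estimated abundances and can also be computed on real imagery.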
