Kinect depth reconstruction algorithm based on camera motion and image brightness

A technology based on camera motion and image brightness, applied in image enhancement, image analysis, image data processing, etc., that addresses the problem of insufficient depth detection accuracy.

Embodiment Construction

[0084] The present invention will be further described below in conjunction with specific examples.

[0085] The Kinect depth reconstruction algorithm based on camera motion and image brightness described in this embodiment comprises the following steps:

[0086] 1) After the Kinect depth camera and the RGB camera have been calibrated and aligned, the data collected by the Kinect is uploaded to the computer through a third-party interface.
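Step 1 presupposes that the depth and RGB images are already aligned. As a minimal sketch of what that alignment involves, assuming a pinhole model for both cameras and hypothetical calibration values (the text gives none), a depth pixel can be mapped into the RGB image by back-projecting it with its depth, applying the depth-to-RGB extrinsic transform `(R, t)`, and re-projecting with the RGB intrinsics. All names below are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole intrinsics (the values used below are hypothetical)."""
    fx: float
    fy: float
    cx: float
    cy: float


def back_project(u, v, z, K):
    """Pixel (u, v) with metric depth z -> 3-D point in that camera's frame."""
    return ((u - K.cx) * z / K.fx, (v - K.cy) * z / K.fy, z)


def rigid_transform(p, R, t):
    """Apply X' = R X + t, with R given as a 3x3 nested list."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(p, K):
    """3-D point -> pixel coordinates (assumes p[2] > 0)."""
    x, y, z = p
    return (K.fx * x / z + K.cx, K.fy * y / z + K.cy)


def register_depth_pixel(u, v, z, K_depth, K_rgb, R, t):
    """Map a depth-image pixel into RGB-image coordinates."""
    return project(rigid_transform(back_project(u, v, z, K_depth), R, t), K_rgb)


if __name__ == "__main__":
    K = Intrinsics(525.0, 525.0, 319.5, 239.5)  # hypothetical calibration
    I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    # Hypothetical 2.5 cm horizontal offset between the two cameras.
    print(register_depth_pixel(100, 50, 2.0, K, K, I3, [0.025, 0.0, 0.0]))
```

A full implementation would apply this to every valid depth pixel and resolve cases where several depth pixels land on the same RGB pixel.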

[0087] 2.1) When the system is initialized, an RGB image is read and used as a key frame, and a depth map is bound to the key frame. The depth map has the same dimensions as the grayscale image; the depth map is traversed and each pixel position is assigned a random value;

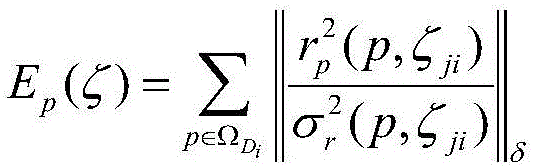
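Step 2.1 can be sketched as follows. The grayscale conversion (ITU-R BT.601 luma weights) and the uniform sampling range `d_min`/`d_max` are assumptions, since the text states neither how the grayscale image is obtained nor which distribution the random values are drawn from; `KeyFrame` and `init_keyframe` are hypothetical names.

```python
import random
from dataclasses import dataclass


@dataclass
class KeyFrame:
    gray: list   # H x W grayscale intensities
    depth: list  # H x W depth hypotheses, same dimensions as gray


def rgb_to_gray(rgb):
    """H x W image of (r, g, b) tuples -> H x W luma values (BT.601 weights, assumed)."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in rgb]


def init_keyframe(rgb, d_min=0.5, d_max=4.0, seed=None):
    """Make the first RGB frame a key frame and bind a randomly initialised depth map."""
    gray = rgb_to_gray(rgb)
    rng = random.Random(seed)
    # Traverse every pixel position and assign a random depth value (range assumed).
    depth = [[rng.uniform(d_min, d_max) for _ in row] for row in gray]
    return KeyFrame(gray=gray, depth=depth)


if __name__ == "__main__":
    frame = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (128, 128, 128)]]
    kf = init_keyframe(frame, seed=42)
    print(kf.gray)
    print(kf.depth)
```

Seeding the generator makes runs reproducible. Some direct methods initialise inverse depth rather than depth; the text does not say which is used here.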

[0088] 2.2) Every time an RGB image is read, the following cost function is constructed:

[0089]

[0090] where $\|\cdot\|_\delta$ is the Huber operator and $r_p$ represents the error; the cost function also uses the variance of this error.

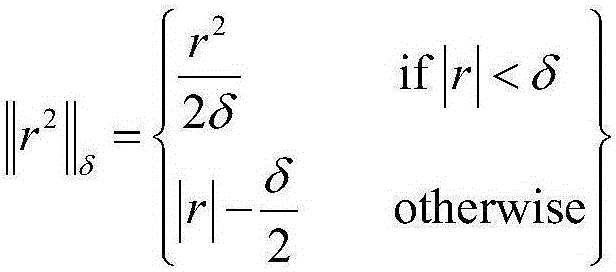
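The surviving text does not define how $r_p$ is computed. Assuming it is the usual photometric (brightness) error of direct methods, that is, the difference between a key-frame pixel's intensity and the intensity at the location where that pixel lands in the current frame once the camera motion `(R, t)` is applied using the key frame's depth, it could be computed roughly as below. The sign convention, nearest-neighbour sampling, and all function names are illustrative assumptions.

```python
def sample_nearest(img, u, v):
    """Nearest-neighbour intensity lookup; None if (u, v) falls outside the image."""
    ui, vi = int(round(u)), int(round(v))
    if 0 <= vi < len(img) and 0 <= ui < len(img[0]):
        return img[vi][ui]
    return None


def photometric_residual(u, v, key_gray, key_depth, cur_gray, K, R, t):
    """r_p = I_key(p) - I_cur(w(p)) for key-frame pixel p = (u, v).

    K is (fx, fy, cx, cy); (R, t) maps key-frame coordinates into the current
    camera frame. Returns None if the warped point is behind the camera or
    outside the current image.
    """
    fx, fy, cx, cy = K
    z = key_depth[v][u]
    # Back-project p using its current depth hypothesis.
    X = ((u - cx) * z / fx, (v - cy) * z / fy, z)
    # Apply the camera motion.
    Xc = [sum(R[i][j] * X[j] for j in range(3)) + t[i] for i in range(3)]
    if Xc[2] <= 0:
        return None
    # Re-project into the current image and compare brightness.
    u2 = fx * Xc[0] / Xc[2] + cx
    v2 = fy * Xc[1] / Xc[2] + cy
    i_cur = sample_nearest(cur_gray, u2, v2)
    if i_cur is None:
        return None
    return key_gray[v][u] - i_cur


if __name__ == "__main__":
    key = [[10, 20, 30], [40, 50, 60], [70, 80, 90]]
    cur = [[20, 30, 0], [50, 60, 0], [80, 90, 0]]  # key shifted one column left
    depth = [[1.0] * 3 for _ in range(3)]
    I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
    K = (1.0, 1.0, 1.0, 1.0)
    print(photometric_residual(1, 1, key, depth, cur, K, I3, [0, 0, 0]))     # prints -10
    print(photometric_residual(1, 1, key, depth, cur, K, I3, [-1.0, 0, 0]))  # prints 0
```

With the correct motion the warped pixel lands on the same scene point and the residual vanishes; with the wrong motion (here, no motion) it does not, which is what the cost function in step 2.2 exploits.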

[0091] The Huber operator is defined as follows:

[0092] ...
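The definition of the Huber operator is truncated in the source. A minimal sketch, assuming the form commonly used in direct visual SLAM, $\|x\|_\delta = x^2/(2\delta)$ for $|x| \le \delta$ and $|x| - \delta/2$ otherwise, and assuming the operator is applied to each error normalised by its standard deviation (the surviving text confirms neither detail), accumulates the cost over all pixels as follows; `huber` and `robust_cost` are hypothetical names.

```python
import math


def huber(x, delta):
    """Huber norm: quadratic for |x| <= delta, linear beyond (continuous at delta)."""
    a = abs(x)
    if a <= delta:
        return x * x / (2.0 * delta)
    return a - delta / 2.0


def robust_cost(residuals, variances, delta=1.0):
    """Sum of Huber norms of variance-normalised errors r_p / sqrt(var_p)."""
    if len(residuals) != len(variances):
        raise ValueError("need one variance per residual")
    return sum(huber(r / math.sqrt(var), delta) for r, var in zip(residuals, variances))


if __name__ == "__main__":
    print(huber(0.5, 1.0), huber(3.0, 1.0))                    # prints 0.125 2.5
    print(robust_cost([2.0, -6.0], [4.0, 4.0], delta=1.0))     # prints 3.0
```

Because the penalty grows only linearly for large errors, pixels whose brightness changes for reasons other than camera motion (occlusions, specular highlights) pull less on the estimate than they would under a plain squared-error cost.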
