Video description generation method based on deep learning and probabilistic graphical model

A video description technology based on a probabilistic graphical model, applied in character and pattern recognition, special-purpose data processing applications, instruments, etc. It addresses problems such as insufficient additional information and achieves accurate video descriptions.


Embodiment Construction

[0037] In order to make the purpose, technical solution and advantages of the present invention clearer, the implementation of the present invention will be described in detail below in conjunction with the drawings and examples.

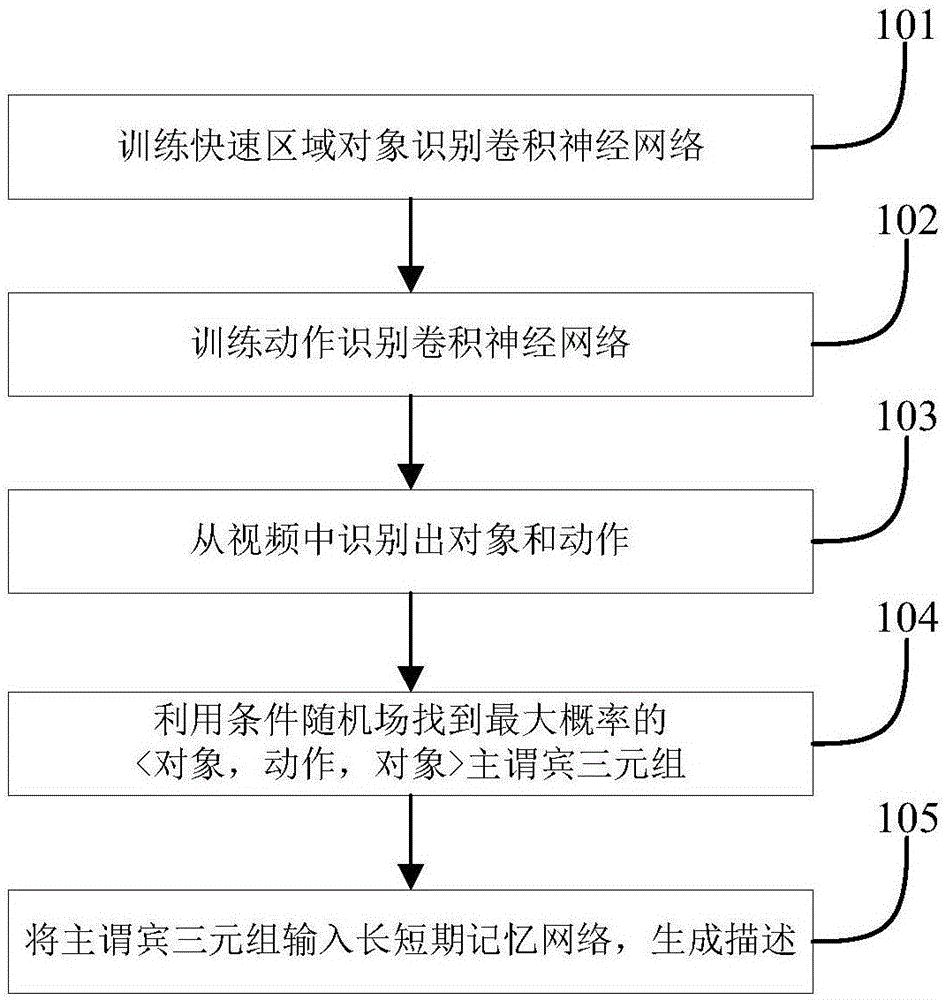

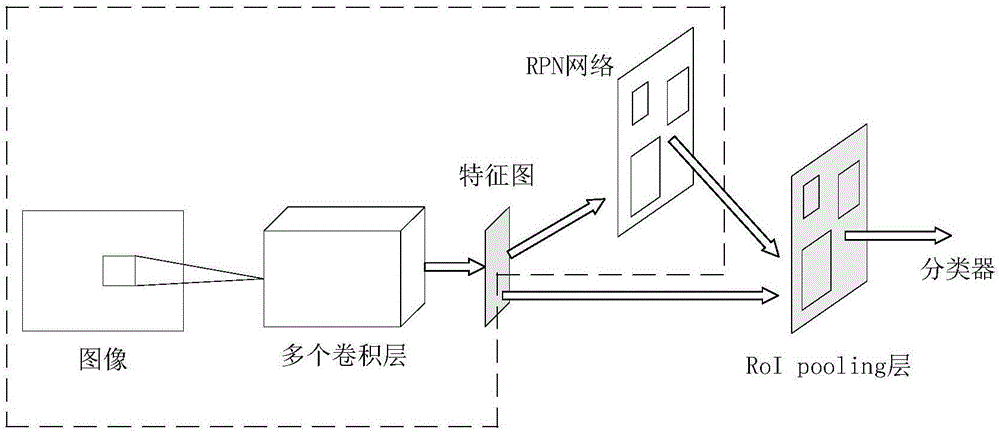

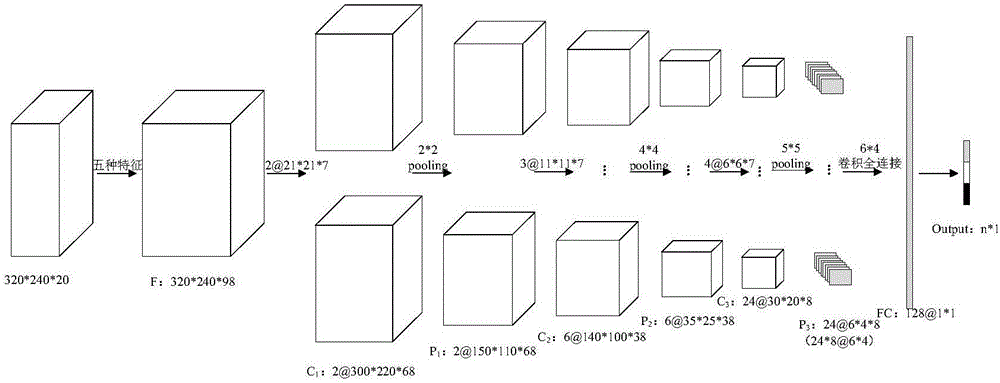

[0038] The invention recognizes the actions and objects in the video with a fast region-based convolutional neural network and an action-recognition convolutional neural network, which gives an initial understanding of the information contained in the video. It then uses a conditional random field to find the subject-verb-object triple with the highest probability, removing noisy objects and actions that would otherwise distort the result. Finally, a long short-term memory network converts the subject-verb-object triple into a description.
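The passage does not specify the structure or potentials of the conditional random field. As an illustration only, the sketch below assumes a three-node CRF over the subject, verb and object slots, with unary log-potentials taken from detector confidences and pairwise log-potentials scoring subject-verb and verb-object compatibility. Because each slot has only a few candidates, exact MAP inference can be done by enumerating every triple. The function names (`map_triple`, `triple_log_score`) and all scores are hypothetical and are not taken from the patent.

```python
import itertools
import math
from typing import Dict, Tuple

# A candidate triple: (subject, verb, object).
Triple = Tuple[str, str, str]


def triple_log_score(
    triple: Triple,
    unary: Dict[str, Dict[str, float]],
    pair_sv: Dict[Tuple[str, str], float],
    pair_vo: Dict[Tuple[str, str], float],
) -> float:
    """Unnormalized log-potential of one (subject, verb, object) assignment."""
    s, v, o = triple
    return (
        unary["subject"][s]
        + unary["verb"][v]
        + unary["object"][o]
        + pair_sv.get((s, v), 0.0)  # pairs not listed are treated as neutral
        + pair_vo.get((v, o), 0.0)
    )


def map_triple(unary, pair_sv, pair_vo) -> Tuple[Triple, float]:
    """Exhaustive MAP inference: return the best triple and its posterior probability."""
    candidates = itertools.product(unary["subject"], unary["verb"], unary["object"])
    scores = {t: triple_log_score(t, unary, pair_sv, pair_vo) for t in candidates}
    best = max(scores, key=scores.get)
    # Log partition function via log-sum-exp for numerical stability.
    m = max(scores.values())
    log_z = m + math.log(sum(math.exp(x - m) for x in scores.values()))
    return best, math.exp(scores[best] - log_z)


# Hypothetical detector confidences (log-probabilities); "dog" stands in for a noisy detection.
unary = {
    "subject": {"person": math.log(0.7), "dog": math.log(0.3)},
    "verb": {"ride": math.log(0.5), "eat": math.log(0.5)},
    "object": {"bicycle": math.log(0.6), "bowl": math.log(0.4)},
}
# Hypothetical compatibility log-potentials between adjacent slots.
pair_sv = {("person", "ride"): 1.0, ("dog", "eat"): 1.0, ("dog", "ride"): -2.0}
pair_vo = {("ride", "bicycle"): 1.5, ("eat", "bowl"): 1.5,
           ("ride", "bowl"): -2.0, ("eat", "bicycle"): -2.0}

best, prob = map_triple(unary, pair_sv, pair_vo)
print(best, round(prob, 3))
```

Running the script prints `('person', 'ride', 'bicycle')` followed by its normalized probability. The low-confidence "dog" detection is discarded because no triple containing it scores as well, which mirrors the noise-removal role described above. Enumeration cost grows with the product of the candidate counts; since the pairwise factors form a subject-verb-object chain, max-product (Viterbi-style) dynamic programming would give the same exact answer more cheaply for larger vocabularies.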

[0039] A video description generation method based on deep learning and a probabilistic graphical model is shown in Figure 1. The method includes the following steps:

[0040] 101: Utilize existing image datasets to train a ...
