Feature fusion method based on stacked autoencoder

A stacked autoencoder (SAE) feature fusion technique in the field of SAE-based feature fusion. It addresses three problems of existing approaches: the fused features gain little in discriminability, the fusion network structure is complex, and real-time requirements cannot be met. The method simplifies the SAE structure, reduces network training and testing time, and improves fusion efficiency.
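The excerpt does not give the network details, so the following is only a generic sketch of SAE-based feature fusion, not the patented structure. It assumes that per-image feature vectors (for example a texture histogram and some second descriptor) are concatenated, scaled to [0, 1], and passed through sigmoid autoencoders trained greedily layer by layer, with the last hidden layer taken as the fused feature. The function names `train_autoencoder` and `sae_fuse`, the layer sizes, learning rate and epoch count are all hypothetical, and no supervised fine-tuning stage is shown.

```python
import numpy as np


def _sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_autoencoder(x, n_hidden, epochs=200, lr=0.5, seed=0):
    """Train one sigmoid autoencoder layer on x (rows = samples, values in [0, 1])
    by full-batch gradient descent on 0.5 * squared reconstruction error,
    averaged over samples. Returns only the encoder weights (w1, b1)."""
    rng = np.random.default_rng(seed)
    n, d = x.shape
    w1 = rng.normal(0.0, 0.1, (d, n_hidden))
    b1 = np.zeros(n_hidden)
    w2 = rng.normal(0.0, 0.1, (n_hidden, d))
    b2 = np.zeros(d)
    for _ in range(epochs):
        h = _sigmoid(x @ w1 + b1)  # encode
        y = _sigmoid(h @ w2 + b2)  # decode (reconstruction)
        # Backpropagate the reconstruction error through both sigmoid layers.
        dy = (y - x) * y * (1.0 - y) / n
        dh = (dy @ w2.T) * h * (1.0 - h)
        w2 -= lr * (h.T @ dy)
        b2 -= lr * dy.sum(axis=0)
        w1 -= lr * (x.T @ dh)
        b1 -= lr * dh.sum(axis=0)
    return w1, b1


def sae_fuse(feature_sets, layer_sizes=(64, 32), **train_kw):
    """Concatenate per-sample features, min-max scale each column to [0, 1],
    train autoencoders layer by layer, and return the last hidden code."""
    x = np.hstack([np.asarray(f, dtype=np.float64) for f in feature_sets])
    lo, hi = x.min(axis=0), x.max(axis=0)
    x = (x - lo) / np.where(hi > lo, hi - lo, 1.0)  # avoid division by zero
    for size in layer_sizes:
        w, b = train_autoencoder(x, size, **train_kw)
        x = _sigmoid(x @ w + b)  # hidden code becomes the next layer's input
    return x


# Usage with random stand-in features (not MSTAR data):
rng = np.random.default_rng(0)
texture = rng.random((50, 256))  # e.g. a 256-bin texture histogram per image
other = rng.random((50, 20))     # a hypothetical second descriptor
fused = sae_fuse([texture, other])
print(fused.shape)  # (50, 32)
```

Greedy layer-wise training keeps each optimisation problem small; a smaller `layer_sizes` tuple is the obvious lever if training and testing time matter more than capacity.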

Embodiment Construction

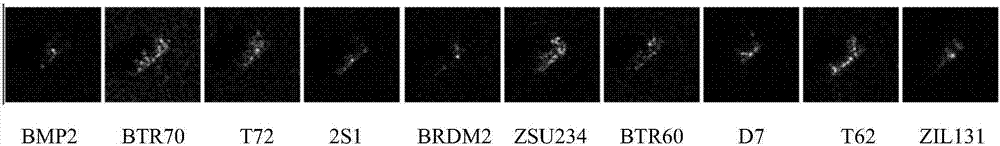

[0016] The experimental data of the present invention come from the MSTAR data set, which contains SAR (synthetic aperture radar) image slices of 10 classes of military targets: BMP2, BRDM2, BTR60, BTR70, D7, T62, T72, ZIL131, ZSU234 and 2S1. Figure 1 shows example slices of the 10 target classes. All slices are uniformly cropped to 128×128 pixels.
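The text says only that slices are cropped to 128×128 pixels, not where the crop window sits. A minimal sketch, assuming a centre crop around the target (a common choice, not confirmed by the source), using NumPy and a hypothetical `center_crop` helper:

```python
import numpy as np


def center_crop(chip: np.ndarray, size: int = 128) -> np.ndarray:
    """Crop a 2-D SAR magnitude slice to size x size around its centre.

    Assumes the slice is at least `size` pixels in each dimension.
    """
    h, w = chip.shape
    if h < size or w < size:
        raise ValueError(f"slice {chip.shape} is smaller than {size}x{size}")
    top = (h - size) // 2
    left = (w - size) // 2
    return chip[top:top + size, left:left + size]


# Example with a synthetic 158x158 slice (random values, not real MSTAR pixels).
chip = np.random.default_rng(0).random((158, 158))
print(center_crop(chip).shape)  # (128, 128)
```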

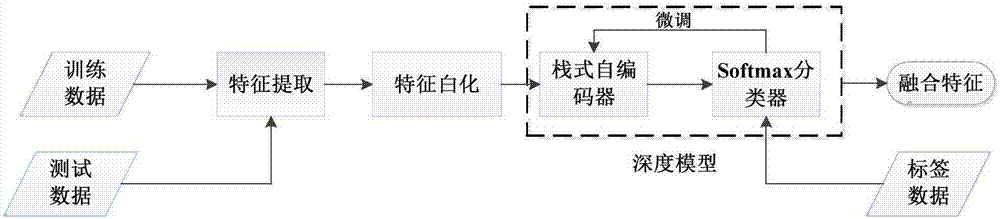

[0017] Figure 2 is the flow chart of the present invention. Taking one experiment of the present invention as an example, the specific implementation steps are as follows:

[0018] The first step is to extract the TPLBP (Three-Patch Local Binary Patterns) texture feature of the image. The LBP (Local Binary Patterns) operator is applied to the original image to obtain its LBP code values; the TPLBP code values are then obtained by comparing the LBP values between image patches, and the histogram vector is obtained by counting the TPLBP code va...
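The description of this step is cut off, so the exact operator settings are unknown. The sketch below assumes the usual three-patch LBP construction, applied to the LBP-coded image as the text describes: around each pixel, S patches of size w×w are placed on a ring of radius r, and bit i is set when the central patch differs from patch i more than from patch i+α by at least a threshold τ. The basic 3×3 LBP, the squared-difference patch distance, the parameter values (S=8, w=3, r=2, α=1, τ=0.01) and all function names are assumptions for illustration.

```python
import numpy as np

# Neighbour offsets (dy, dx) for the basic 3x3 LBP, clockwise from top-left.
_LBP_OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_map(img):
    """Basic 8-neighbour LBP: bit k is 1 when neighbour k >= centre pixel.
    Returns codes in [0, 255] for the interior (one-pixel border dropped)."""
    img = np.asarray(img, dtype=np.float64)
    h, w = img.shape
    centre = img[1:h - 1, 1:w - 1]
    code = np.zeros(centre.shape, dtype=np.int64)
    for k, (dy, dx) in enumerate(_LBP_OFFSETS):
        nb = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        code |= (nb >= centre).astype(np.int64) << k
    return code


def _patch_dist(m, off, half, margin):
    """Sum of squared differences between the patch centred at each valid
    pixel p and the patch centred at p + off (patch side = 2 * half + 1)."""
    rows, cols = m.shape
    dy, dx = off
    total = np.zeros((rows - 2 * margin, cols - 2 * margin))
    for qy in range(-half, half + 1):
        for qx in range(-half, half + 1):
            a = m[margin + qy:rows - margin + qy, margin + qx:cols - margin + qx]
            b = m[margin + qy + dy:rows - margin + qy + dy,
                  margin + qx + dx:cols - margin + qx + dx]
            total += (a - b) ** 2
    return total


def tplbp_map(m, S=8, w=3, r=2, alpha=1, tau=0.01):
    """Three-patch LBP code for every pixel far enough from the border."""
    m = np.asarray(m, dtype=np.float64)
    half = w // 2
    margin = r + half
    # Centres of the S ring patches, rounded to the pixel grid.
    offs = [(int(round(r * np.sin(2 * np.pi * i / S))),
             int(round(r * np.cos(2 * np.pi * i / S)))) for i in range(S)]
    dist = [_patch_dist(m, o, half, margin) for o in offs]
    code = np.zeros(dist[0].shape, dtype=np.int64)
    for i in range(S):
        bit = (dist[i] - dist[(i + alpha) % S]) >= tau
        code |= bit.astype(np.int64) << i
    return code


def tplbp_histogram(img, S=8, **kw):
    """LBP on the image, TPLBP on the LBP map, then a normalised 2**S-bin histogram."""
    code = tplbp_map(lbp_map(img), S=S, **kw)
    hist = np.bincount(code.ravel(), minlength=2 ** S).astype(np.float64)
    return hist / hist.sum()


# Usage on a random stand-in for a 128x128 SAR slice:
slice_ = np.random.default_rng(0).random((128, 128))
feature = tplbp_histogram(slice_)
print(feature.shape)  # (256,)
```

With S=8 each pixel yields an 8-bit code, so the histogram has 256 bins; normalising it makes slices of different sizes comparable.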
