Human-Computer Interaction System and Working Method Based on Personality and Interpersonal Recognition

A technology for interpersonal relationship recognition and human-computer interaction, applied in the field of computer vision, which addresses problems such as integration.

Embodiment

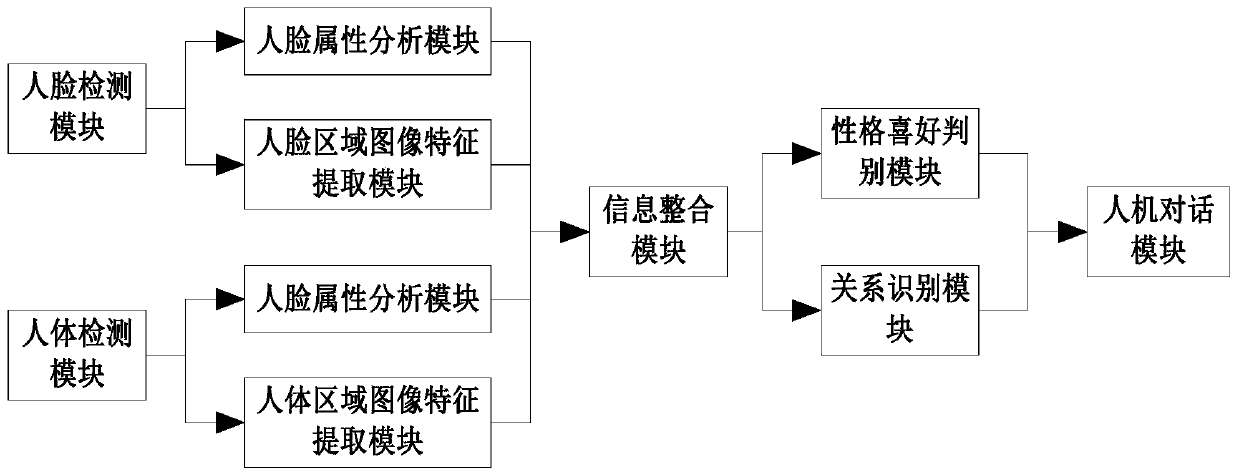

[0040] This embodiment provides a human-computer interaction system based on personality and interpersonal relationship recognition, as shown in Figures 1 to 6, comprising:

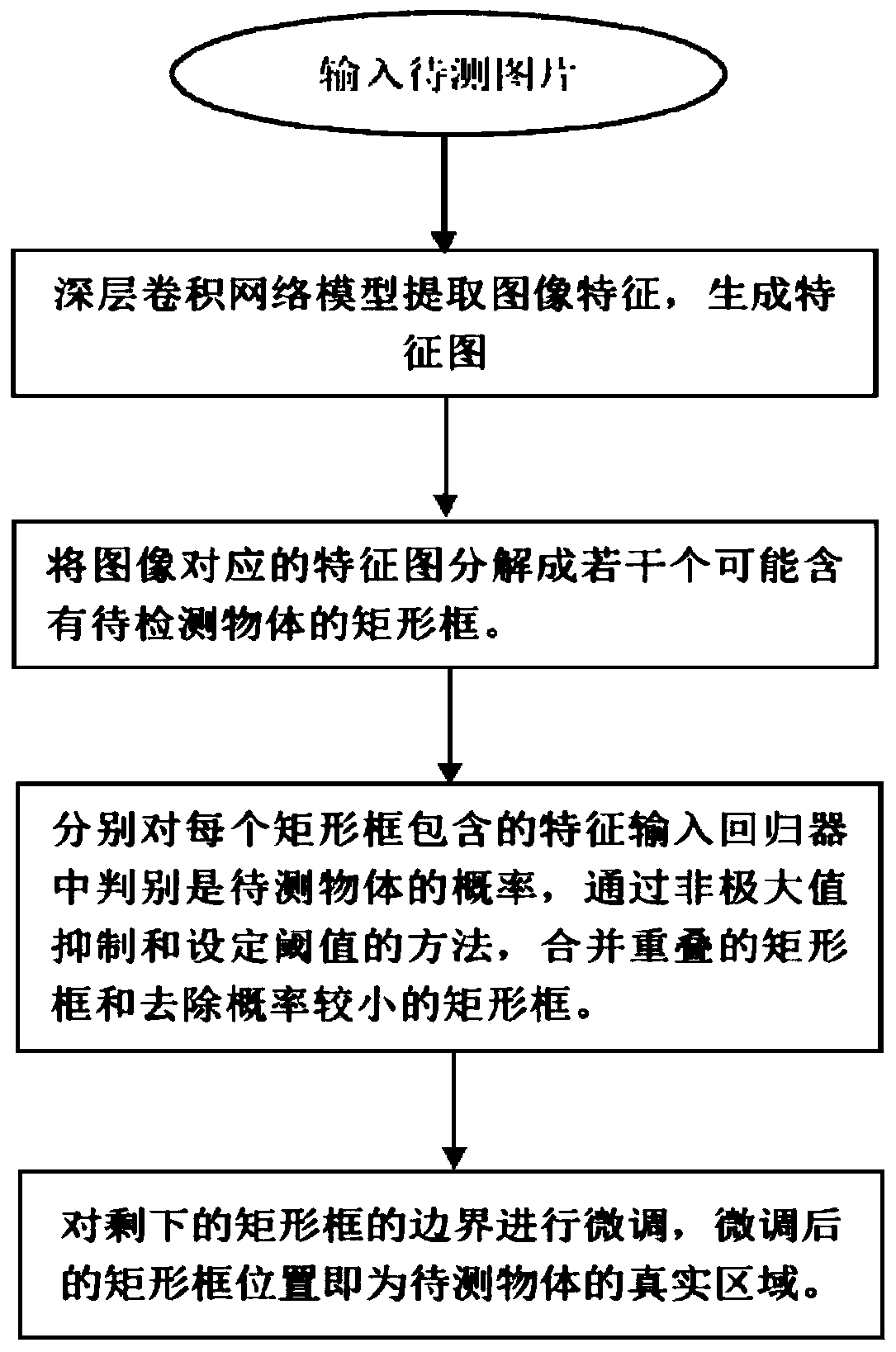

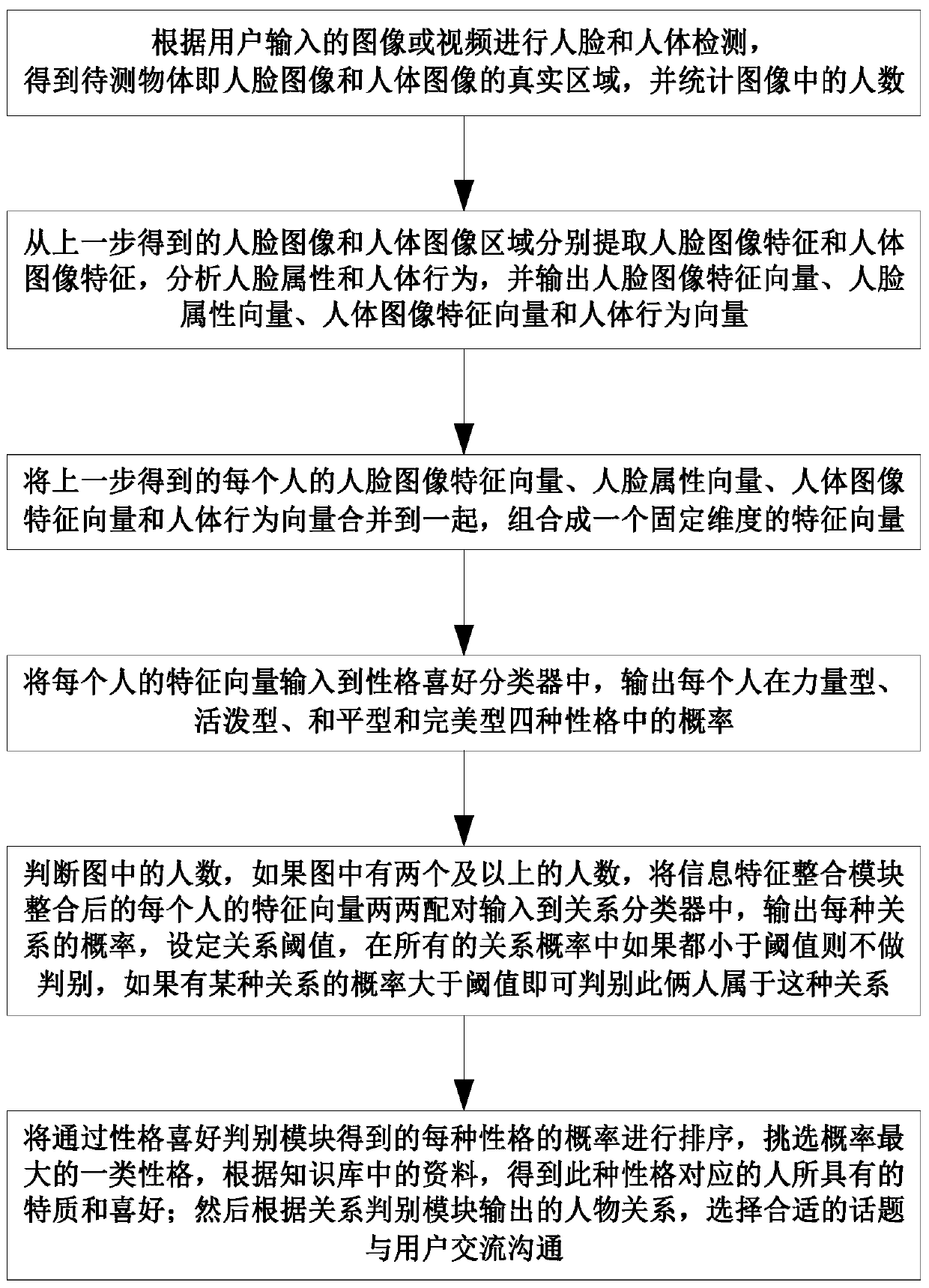

[0041] Detection module: performs face and human body detection on the image or video input by the user, obtains the actual regions of the objects to be detected (that is, the face image and the human body image), and counts the number of people in the image;

[0042] Information feature extraction module: extracts face image features and human body image features from the face and human body regions obtained by the detection module, and analyzes face attributes and human body behaviors;

[0043] Information feature integration module: merges the face and body information of each person output by the information feature extraction module into a fixed-dimensional feature vector;

[0044] Personality preference discrimination module: inputs the feature vector of each person integrated by the infor...
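The step between detection and classification can be sketched as grouping detections into people and concatenating each person's information into fixed-size blocks. This is a minimal sketch, assuming the face and body detectors (not shown) already return bounding boxes and the extraction module already returns feature lists and attribute values. `Box`, `group_people`, `integrate`, the label sets, and the block sizes are hypothetical names and choices for illustration, not the patent's implementation. The person count is simply `len(group_people(...))`.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional, Sequence, Tuple

@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box: (x1, y1) top-left, (x2, y2) bottom-right, in pixels."""
    x1: float
    y1: float
    x2: float
    y2: float

    def center(self) -> Tuple[float, float]:
        return ((self.x1 + self.x2) / 2, (self.y1 + self.y2) / 2)

    def contains(self, point: Tuple[float, float]) -> bool:
        x, y = point
        return self.x1 <= x <= self.x2 and self.y1 <= y <= self.y2

Person = Tuple[Optional[Box], Optional[Box]]  # (face box, body box); either may be missing

def group_people(faces: Sequence[Box], bodies: Sequence[Box]) -> List[Person]:
    """Hypothetical detection post-processing: pair each face with the first unused
    body box containing the face centre. Unmatched faces or bodies still count as a
    person with one region missing."""
    used = set()
    people: List[Person] = []
    for face in faces:
        match = next((i for i, body in enumerate(bodies)
                      if i not in used and body.contains(face.center())), None)
        if match is None:
            people.append((face, None))
        else:
            used.add(match)
            people.append((face, bodies[match]))
    people.extend((None, body) for i, body in enumerate(bodies) if i not in used)
    return people

# Hypothetical label sets; the behaviour names are the ones listed in Example 2.
GENDERS = ("female", "male")
EXPRESSIONS = ("neutral", "happy", "sad", "angry")
BEHAVIOURS = ("hugging", "holding_hands", "approaching", "staying_away")

def one_hot(label: str, vocabulary: Sequence[str]) -> List[float]:
    return [1.0 if label == item else 0.0 for item in vocabulary]

def fit_length(values: Sequence[float], length: int) -> List[float]:
    """Truncate or zero-pad a feature so that its block always has the same size."""
    clipped = list(values)[:length]
    return clipped + [0.0] * (length - len(clipped))

def integrate(face_feat: Optional[Sequence[float]], body_feat: Optional[Sequence[float]],
              attrs: Dict[str, Any], behaviours: Sequence[str],
              face_dim: int = 4, body_dim: int = 4) -> List[float]:
    """Concatenate one person's face and body information into a vector of fixed
    length face_dim + body_dim + 12 (20 with the defaults)."""
    face_block = fit_length(face_feat or [], face_dim)   # missing face -> zeros
    body_block = fit_length(body_feat or [], body_dim)   # missing body -> zeros
    age = [min(float(attrs.get("age", 0)), 100.0) / 100.0]  # scaled to [0, 1]
    gender = one_hot(str(attrs.get("gender", "")), GENDERS)
    attractiveness = [float(attrs.get("attractiveness", 0.0))]  # assumed already in [0, 1]
    expression = one_hot(str(attrs.get("expression", "")), EXPRESSIONS)
    behaviour = [1.0 if name in behaviours else 0.0 for name in BEHAVIOURS]
    return face_block + body_block + age + gender + attractiveness + expression + behaviour
```

Zero-filling a missing face or body keeps the vector length constant regardless of what was detected, which is what a downstream classifier with a fixed input size requires.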

Example 1

[0067] Example 1: When a boy is chatting with a robot, he inputs a selfie. The system first performs face and human body detection and finds that there is only one person. It then performs face attribute analysis, human body key point localization, and behavior analysis on this person, extracts the image features of the face and human body, integrates this information, and inputs it into the personality preference discrimination module to determine whether he belongs to the strong type (strong, decisive, and conceited), the lively type (enthusiastic, lively, and changeable), the peaceful type (easy-going, friendly, and reticent), or the perfect type (meticulous, sensitive, and pessimistic). Based on this personality information, the system matches it against the preferences and chat styles stored in advance for each personality type in the database, and the chatbot chooses appropriate topics and ways to communicate with him.
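Example 1 can be sketched as a classification step followed by a database lookup. This is a minimal sketch, assuming the personality preference discrimination module behaves like a linear softmax classifier over the integrated feature vector; the text does not state which model is used, and in practice the weights would be learned from labeled data. `classify_personality`, `choose_reply_plan`, and every entry in `CHAT_STYLE_DB` are hypothetical placeholders; the short labels are shorthand for the four types described above.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Shorthand labels for the four personality types named in Example 1.
PERSONALITY_TYPES = ("strong", "lively", "peaceful", "perfect")

# Hypothetical database entries (tone, candidate topics), loosely derived from the
# trait words in Example 1; a real system would populate this from its own data.
CHAT_STYLE_DB: Dict[str, Tuple[str, Tuple[str, ...]]] = {
    "strong": ("direct and concise", ("goals", "achievements", "news")),
    "lively": ("playful and energetic", ("travel", "parties", "music")),
    "peaceful": ("gentle and low-pressure", ("daily life", "pets", "films")),
    "perfect": ("careful and detailed", ("books", "planning", "art")),
}

def softmax(scores: Sequence[float]) -> List[float]:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify_personality(feature: Sequence[float], weights: Sequence[Sequence[float]],
                         bias: Sequence[float]) -> Tuple[str, List[float]]:
    """Stand-in classifier: a linear layer followed by softmax over the four types."""
    if len(weights) != len(PERSONALITY_TYPES) or len(bias) != len(PERSONALITY_TYPES):
        raise ValueError("need one weight row and one bias per personality type")
    if any(len(row) != len(feature) for row in weights):
        raise ValueError("each weight row must match the feature length")
    scores = [sum(w * x for w, x in zip(row, feature)) + b for row, b in zip(weights, bias)]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return PERSONALITY_TYPES[best], probs

def choose_reply_plan(personality: str, recent_topics: Sequence[str] = ()) -> Tuple[str, str]:
    """Look up the chat style for a personality; prefer a topic not used recently."""
    tone, topics = CHAT_STYLE_DB[personality]
    for topic in topics:
        if topic not in recent_topics:
            return tone, topic
    return tone, topics[0]  # every topic was used recently, so start over
```

Returning the probabilities alongside the label lets the chatbot fall back to a neutral style when no type is clearly dominant, a policy choice this sketch leaves to the caller.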

Example 2

[0068] Example 2: When a girl is chatting with a chatbot, she uploads a selfie of herself holding hands with her boyfriend. The system first performs face and body detection on the photo to obtain the locations of the face and body regions and the number of people. Next, it analyzes the age, gender, expression, and attractiveness ("face value") of each person's face, as well as the body key points and behaviors such as hugging, holding hands, approaching, and staying away. These results are combined with the extracted face and body image features and integrated into a fixed-length feature vector, which is input into the relationship discrimination module; the module determines that the two people in the image are a couple. Finally, based on this relationship, the chatbot proactively greets the user, for example: "Is the boy next to you your boyfriend? He's so handsome."
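The relationship discrimination step in Example 2 can be sketched as pairwise classification. This is a minimal sketch, assuming the relationship discrimination module scores one pair of people at a time from their integrated vectors plus the detected interactions. The classifier is passed in as a function because the text does not specify its model; `pair_feature`, `discriminate_relationships`, `greet`, and the greeting template are hypothetical.

```python
from itertools import combinations
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]

# Interaction names taken from Example 2; the flag encoding itself is an assumption.
INTERACTIONS = ("hugging", "holding_hands", "approaching", "staying_away")

# Hypothetical greeting templates, keyed by relationship label.
GREETINGS = {"couple": "Is the person next to you your partner? You look great together."}

def pair_feature(a: Sequence[float], b: Sequence[float], interactions: Sequence[str]) -> Vector:
    """Order-independent pair vector: element-wise sum and absolute difference of the
    two people's integrated vectors, followed by one flag per interaction."""
    if len(a) != len(b):
        raise ValueError("both people must have vectors of the same fixed length")
    summed = [x + y for x, y in zip(a, b)]
    diff = [abs(x - y) for x, y in zip(a, b)]
    flags = [1.0 if name in interactions else 0.0 for name in INTERACTIONS]
    return summed + diff + flags

def discriminate_relationships(
    people: Sequence[Sequence[float]],
    interactions: Dict[Tuple[int, int], Sequence[str]],  # keys are (i, j) with i < j
    classify: Callable[[Vector], str],
) -> Dict[Tuple[int, int], str]:
    """Run the (learned, here injected) relationship classifier on every pair of people."""
    return {
        (i, j): classify(pair_feature(people[i], people[j], interactions.get((i, j), ())))
        for i, j in combinations(range(len(people)), 2)
    }

def greet(relationship: str) -> str:
    """Pick an opening line for the chatbot from the detected relationship."""
    return GREETINGS.get(relationship, "Who is in the photo with you?")
```

Using the element-wise sum and absolute difference makes the pair vector identical whichever person is listed first, so the classifier cannot give different answers for (A, B) and (B, A). With n people in the image there are n(n-1)/2 pairs to score.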
