Target tracking method and device

A target tracking technology, applied in the field of target tracking methods and devices, that can solve problems such as the inability to identify task differences.

Examples

Embodiment 1

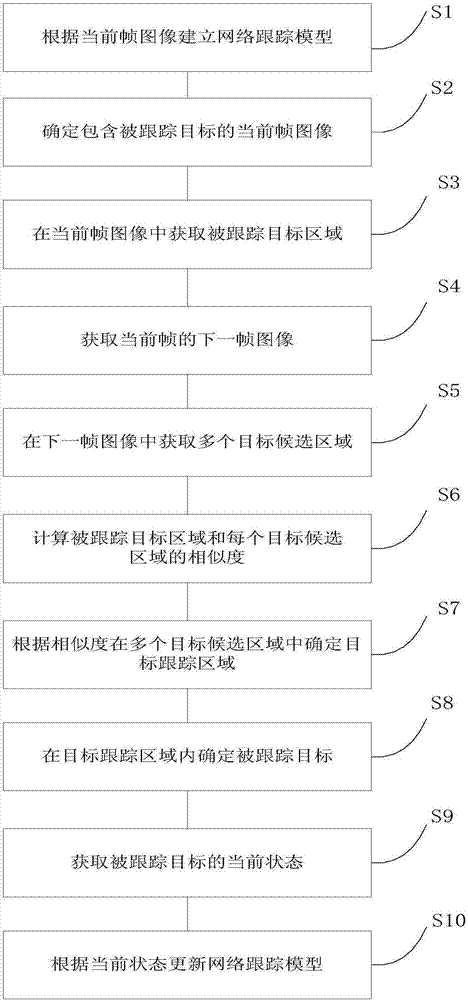

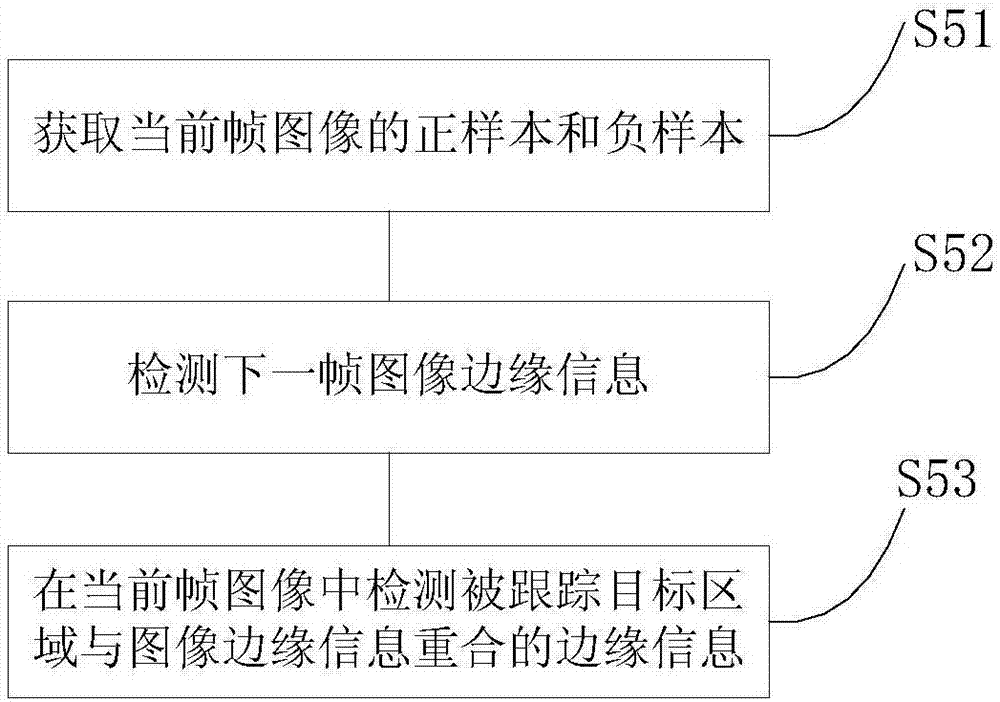

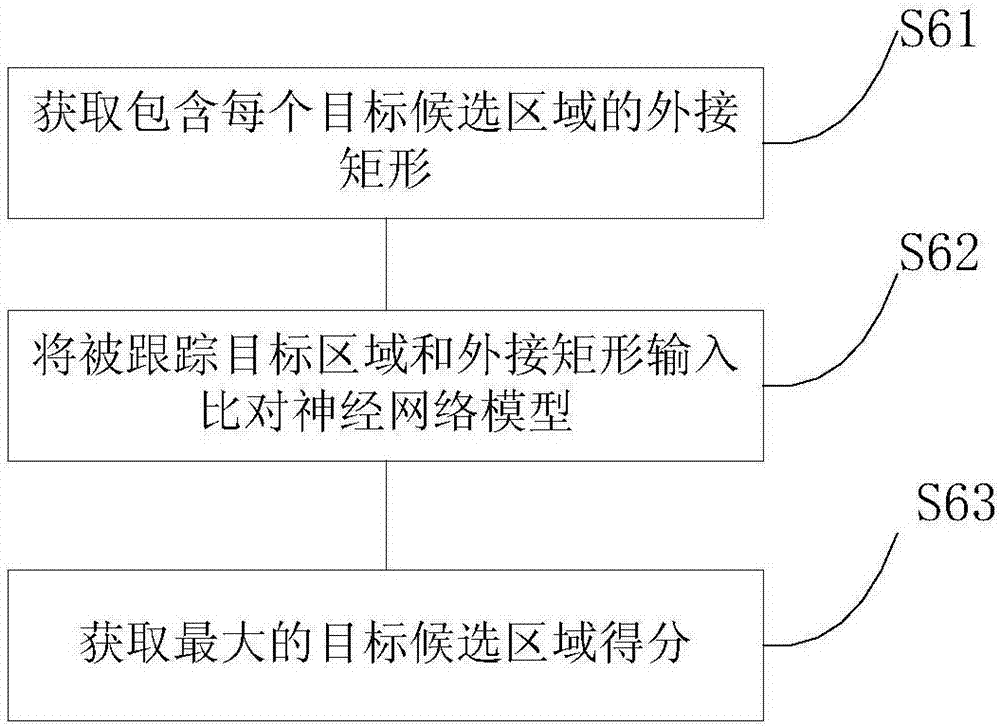

[0070] This embodiment provides a target tracking method which, as shown in Figure 1, includes the following steps:

[0071] S1. Establish a network tracking model based on the current frame image. The network tracking model is combined with time-series video data and trained to obtain an individual-level network. Object tracking usually means that, given the initial state of the object in the first frame of the tracking video, the state of the object in subsequent frames is estimated automatically. The human eye can easily follow a specific target for a period of time, but for a machine this task is not easy: during tracking, the target may change drastically, be occluded by other targets, or suffer interference from similar objects, among other complex situations. The current frame mentioned above is the image information input from the video stream, which may be the initial frame, or the previous or next frame relative to the current moment, and the inform...
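The formulation in [0071] (the state in the first frame is given, and every later state is estimated automatically) can be sketched as a simple propagation loop in which the current frame and the next frame roll forward one position at a time. This is a minimal sketch, not the patent's implementation: `track`, the `(x, y, w, h)` box convention, and the `step` callback are hypothetical, and `step` merely stands in for whatever the trained network tracking model computes for one pair of frames.

```python
from typing import Callable, Sequence, TypeVar

Frame = TypeVar("Frame")
Box = tuple[int, int, int, int]  # hypothetical convention: (x, y, width, height)


def track(frames: Sequence[Frame], init_box: Box,
          step: Callable[[Frame, Box, Frame], Box]) -> list[Box]:
    """Propagate the target state from the first frame through the rest of the video.

    `step` is a hypothetical stand-in for the tracking model: it receives the
    current frame, the target box in that frame, and the next frame, and returns
    the estimated box in the next frame.
    """
    if not frames:
        return []
    boxes = [init_box]  # the first-frame state is given, not estimated
    # Pair each frame with its successor: (frame 0, frame 1), (frame 1, frame 2), ...
    for current, nxt in zip(frames, frames[1:]):
        boxes.append(step(current, boxes[-1], nxt))
    return boxes
```

Keeping the estimator as a parameter separates the frame-to-frame bookkeeping from the model itself, so a learned tracker or a simple matcher can be plugged in without changing the loop.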

Embodiment 2

[0096] This embodiment provides a target tracking device corresponding to the target tracking method of Embodiment 1. As shown in Figure 5, it includes the following units:

[0097] An establishing unit 511, configured to establish a network tracking model according to the current frame image;

[0098] A first determining unit 512, configured to determine the current frame image containing the tracked target;

[0099] A first acquiring unit 513, configured to acquire the tracked target area in the current frame image;

[0100] A second acquiring unit 514, configured to acquire the next frame image after the current frame;

[0101] A third acquiring unit 515, configured to acquire a plurality of target candidate areas in the next frame image;

[0102] A calculating unit 516, configured to calculate the similarity between the tracked target area and each target candidate area;

[0103] A second determining unit 517, configured to determine a t...
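Units 513 to 517 describe one tracking step: take the tracked target area in the current frame, generate candidate areas in the next frame, score each candidate by similarity, and decide on the result. The sketch below shows one way such a step could look, under stated assumptions. Candidates are the previous box shifted by up to `radius` pixels; similarity is zero-mean normalized cross-correlation of raw pixels, swapped in here because this excerpt does not give the patent's own similarity measure; and the highest-scoring candidate is taken as the new target area. Since the text of [0103] is truncated, that final selection rule is also an assumption, and every function name is hypothetical.

```python
import numpy as np

Box = tuple[int, int, int, int]  # hypothetical convention: (x, y, width, height)


def crop(frame: np.ndarray, box: Box) -> np.ndarray:
    """Cut the area covered by `box` out of a 2-D frame."""
    x, y, w, h = box
    return frame[y:y + h, x:x + w]


def candidate_regions(frame_shape, prev_box: Box, radius: int = 4) -> list[Box]:
    """Hypothetical candidate generator: every shift of prev_box within +/-radius
    pixels that still lies fully inside the frame."""
    height, width = frame_shape[:2]
    x, y, w, h = prev_box
    boxes = []
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            nx, ny = x + dx, y + dy
            if nx >= 0 and ny >= 0 and nx + w <= width and ny + h <= height:
                boxes.append((nx, ny, w, h))
    return boxes


def similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Zero-mean normalized cross-correlation of two equally sized patches.
    Returns 0.0 when either patch is constant (no texture to compare)."""
    a = a.astype(np.float64).ravel()
    b = b.astype(np.float64).ravel()
    a -= a.mean()
    b -= b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def track_step(cur_frame: np.ndarray, target_box: Box,
               next_frame: np.ndarray, radius: int = 4) -> tuple[Box, float]:
    """One step: score all candidates against the current target area and
    keep the best one (assumed selection rule)."""
    template = crop(cur_frame, target_box)
    candidates = candidate_regions(next_frame.shape, target_box, radius)
    scores = [similarity(template, crop(next_frame, c)) for c in candidates]
    best = int(np.argmax(scores))
    return candidates[best], scores[best]


if __name__ == "__main__":
    # Synthetic example: a bright 6x6 square moves 2 px right and 1 px down.
    cur = np.zeros((40, 40))
    cur[10:16, 10:16] = 1.0
    nxt = np.zeros((40, 40))
    nxt[11:17, 12:18] = 1.0
    box, score = track_step(cur, (8, 8, 10, 10), nxt)
    print(box)  # prints (10, 9, 10, 10)
```

The target box is deliberately larger than the square so that the template contains both object and background; a patch of uniform intensity has no variance, and normalized cross-correlation is undefined for it, which is why `similarity` returns 0.0 in that case.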

