A large-scale scene 3D modeling method and device based on a depth camera

A depth-camera-based modeling method, applied in the field of 3D modeling, that addresses the problems of huge data volume, high difficulty, and poor flexibility, and achieves low storage requirements, a wide range of applications, and flexible use.

Embodiment Construction

[0035] The present invention will be described in detail below with reference to the accompanying drawings and in combination with embodiments.

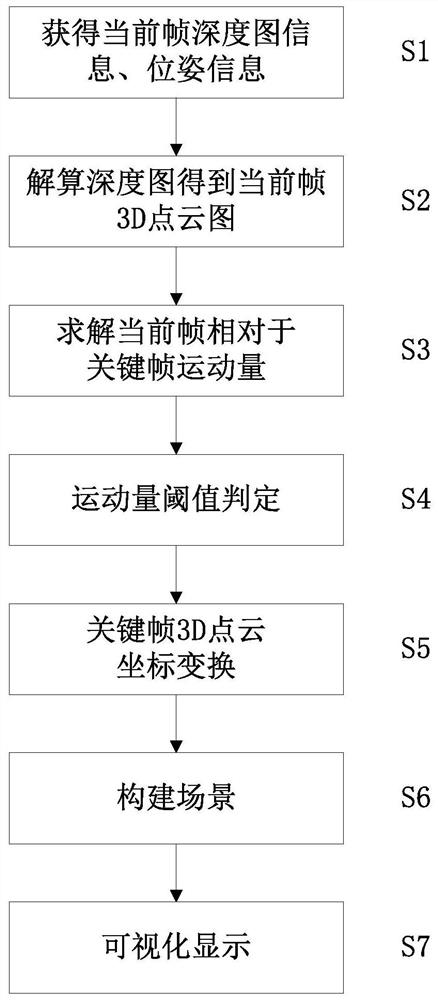

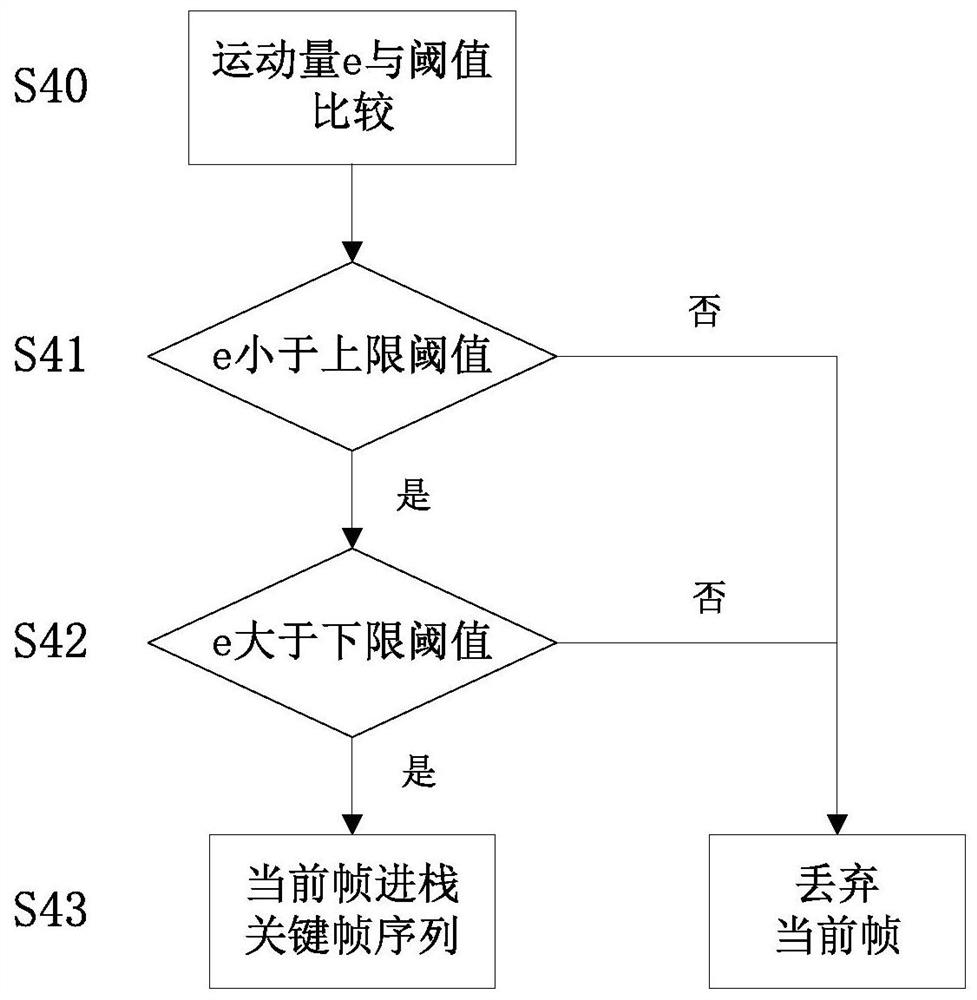

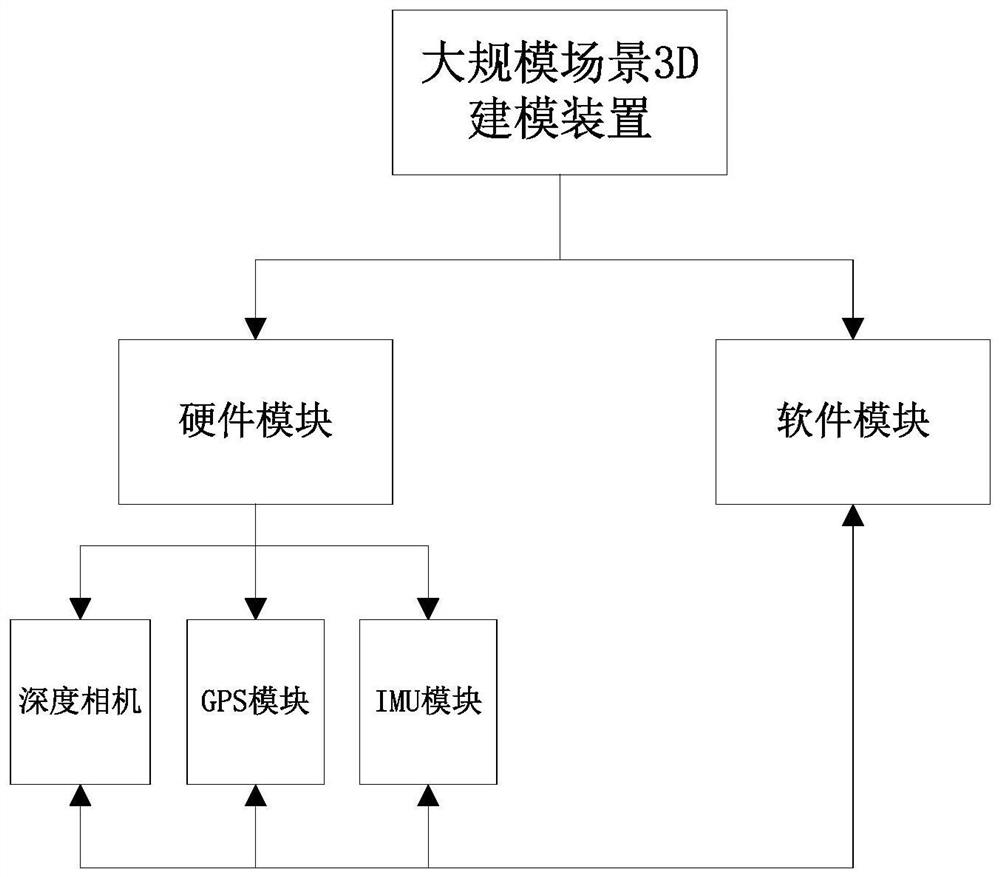

[0036] Referring to Figures 1-4, a large-scale scene 3D modeling method based on a depth camera, as shown in Figure 1, includes the following steps:

[0037] S1. Obtain the depth map information and pose information of the current frame. The depth camera captures the depth map of the current frame at the current position. The pose information includes position information and attitude information. In an outdoor environment, the pose is acquired by combining differential GPS with an IMU (Inertial Measurement Unit) sensor; in an indoor environment, the pose calculated from the depth image is fused with the IMU sensor information.
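As an illustration of what the pose information in S1 might look like in software, the following is a minimal sketch of a pose record that stores position and attitude and converts them to a 4x4 homogeneous transform. The `Pose` class, the use of metres and radians, and the Z-Y-X (yaw, pitch, roll) Euler convention are assumptions made for this example; the source does not state how attitude is represented or how the sensors are fused.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    """Hypothetical pose record: position plus attitude (not from the source)."""
    # Position of the camera in a fixed reference frame (metres, assumed).
    x: float
    y: float
    z: float
    # Attitude as Z-Y-X Euler angles in radians (assumed convention).
    roll: float
    pitch: float
    yaw: float

    def rotation(self):
        """Return the 3x3 rotation matrix R = Rz(yaw) * Ry(pitch) * Rx(roll)."""
        cr, sr = math.cos(self.roll), math.sin(self.roll)
        cp, sp = math.cos(self.pitch), math.sin(self.pitch)
        cy, sy = math.cos(self.yaw), math.sin(self.yaw)
        return [
            [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
            [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
            [-sp, cp * sr, cp * cr],
        ]

    def matrix(self):
        """Return the 4x4 homogeneous camera-to-reference transform."""
        r = self.rotation()
        t = [self.x, self.y, self.z]
        return [r[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]
```

Whichever sensor combination produces the pose (differential GPS with IMU outdoors, depth-image pose with IMU indoors), a record of this shape would let later steps treat both cases uniformly.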

[0038] S2. Compute the 3D point cloud of the current frame from the depth map, and use coordinate transformation to uniformly convert the depth...
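The two operations named in S2 can be sketched as follows: back-projecting a depth map into a camera-frame point cloud, then applying a rigid coordinate transformation. This is a sketch under stated assumptions, not the patented procedure: it assumes a pinhole camera model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy`, depth values in metres with non-positive values meaning "no measurement", and a pose supplied as a rotation matrix and translation vector. The function names are illustrative, and the target frame of the transformation is not specified in the text above.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map (rows of depth values) into camera-frame points.

    Uses the pinhole model: X = (u - cx) * Z / fx, Y = (v - cy) * Z / fy.
    Pixels with depth <= 0 are treated as invalid and skipped (an assumption).
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue
            points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def transform_points(points, rotation, translation):
    """Apply the rigid transform p' = R p + t to every point."""
    out = []
    for p in points:
        out.append(tuple(
            sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
            for i in range(3)
        ))
    return out


# Usage: a 2x2 depth map with one invalid pixel, moved 1 m along x.
depth = [[0.0, 2.0],
         [2.0, 2.0]]
cloud = depth_to_points(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
moved = transform_points(cloud, identity, (1.0, 0.0, 0.0))
```

Expressing every frame's points through the same rigid transform is what would allow clouds captured at different positions to be accumulated in one coordinate system.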
