Method and system for generating reality-fused three-dimensional dynamic image

A three-dimensional dynamic image generation technology, applied in the field of image processing, that achieves diverse forms of expression, easy updating and interaction, and high reproducibility.

Embodiment Construction

[0043] The following examples are used to illustrate the present invention, but are not intended to limit the scope of the present invention.

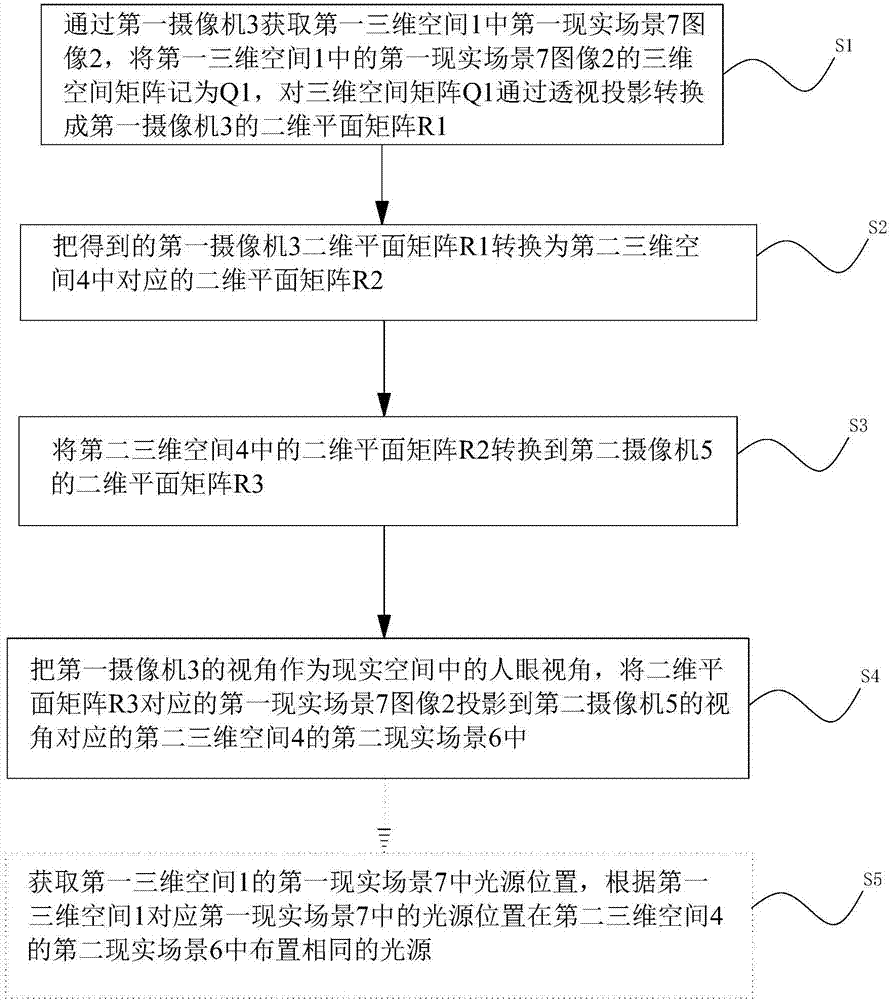

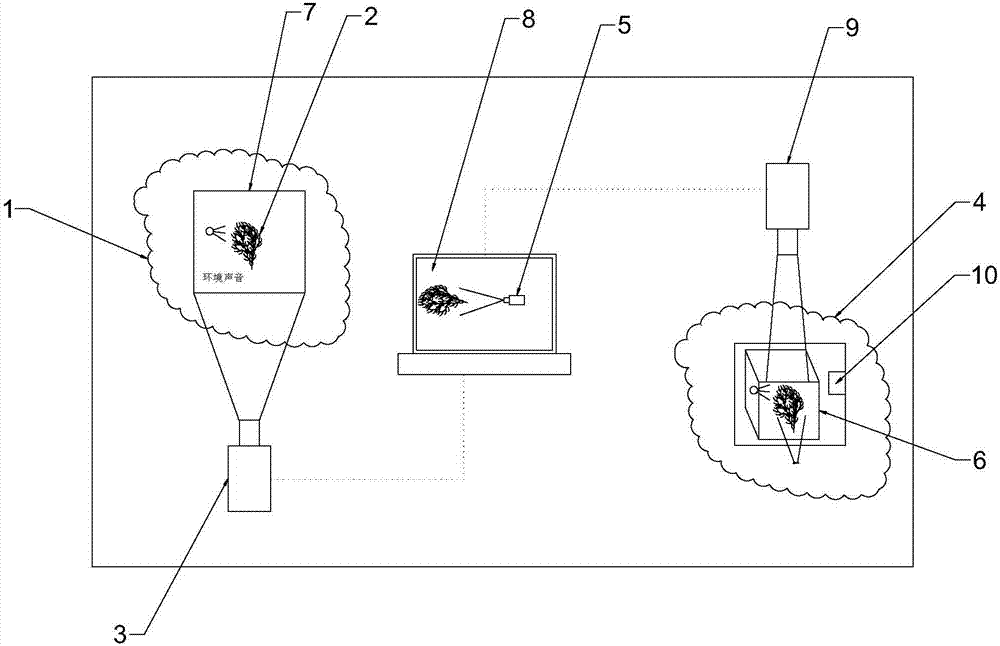

[0044] Referring to figure 1, and further to figure 2, a reality-fused 3D dynamic image generation method comprises the following steps:

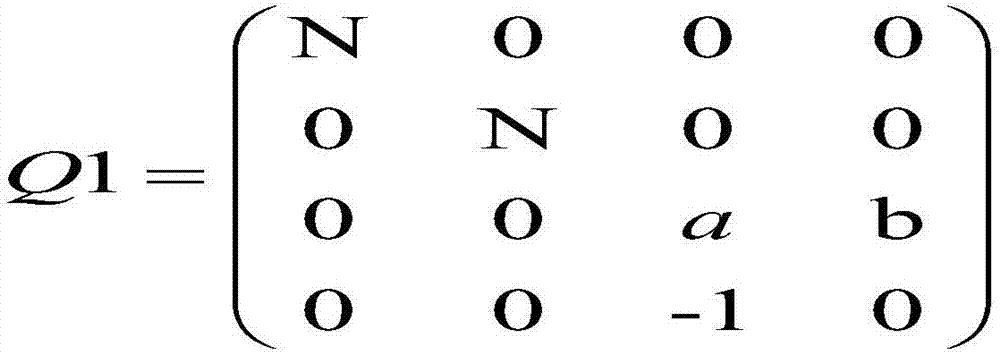

[0045] S1: Obtain the image 2 of the first real scene 7 in the first three-dimensional space 1 through the first camera 3, denote the three-dimensional space matrix of the image 2 of the first real scene 7 in the first three-dimensional space 1 as Q1, and convert the three-dimensional space matrix Q1 into a two-dimensional plane matrix R1 of the first camera 3 through perspective projection;

[0046] S2: Convert the obtained two-dimensional plane matrix R1 of the first camera 3 into a corresponding two-dimensional plane matrix R2 in the second three-dimensional space 4;

[0047] S3: Convert the two-dimensional plane matrix R2 in the second three-dimensio...
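
The perspective projection named in step S1 can be illustrated with a minimal sketch. It assumes a simple pinhole camera model with the points of Q1 already expressed in the first camera's coordinate frame; the patent text shown here does not specify the projection matrix, so the function name `project_points`, the `focal_length` parameter, and the sample coordinates are all hypothetical and chosen only for illustration.

```python
def project_points(points_3d, focal_length=1.0):
    """Project 3D camera-frame points (x, y, z) onto a 2D image plane.

    Assumption: ideal pinhole model, camera at the origin looking along +z,
    image plane at distance `focal_length`. This is an illustrative stand-in,
    not the projection defined by the patent.
    """
    projected = []
    for x, y, z in points_3d:
        if z <= 0:
            # Points at or behind the camera have no valid projection.
            raise ValueError("point must lie in front of the camera (z > 0)")
        # Perspective divide: coordinates shrink in proportion to depth.
        projected.append((focal_length * x / z, focal_length * y / z))
    return projected


# Hypothetical sample of Q1: 3D points of the first real scene.
Q1 = [(1.0, 2.0, 4.0), (-2.0, 1.0, 2.0), (0.0, 0.0, 5.0)]

# R1: the corresponding 2D points on the first camera's image plane.
R1 = project_points(Q1, focal_length=2.0)
print(R1)
```

The key property shown is the division by depth `z`: two points with the same `x` and `y` but different depths land at different positions in R1, which is what distinguishes perspective projection from an orthographic one.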
