Lane marking recognition device

Lane line recognition technology, applied in character and pattern recognition, vehicle components, transportation and packaging, and related fields, addresses problems such as increased deviation, reduced driving controllability, and low recognition accuracy.


Examples

First embodiment

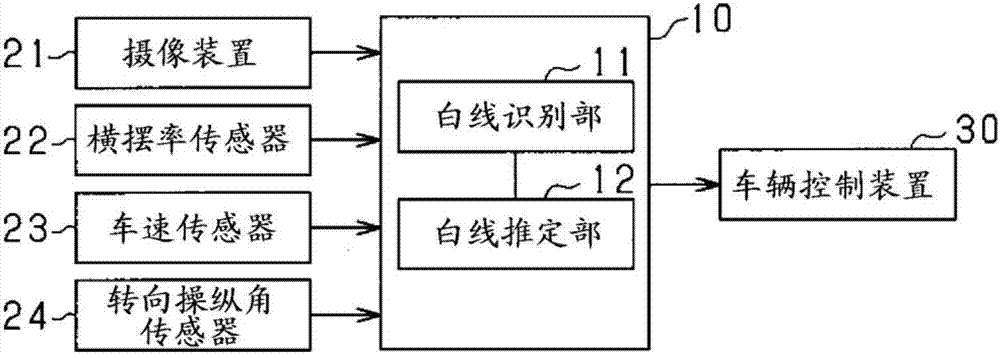

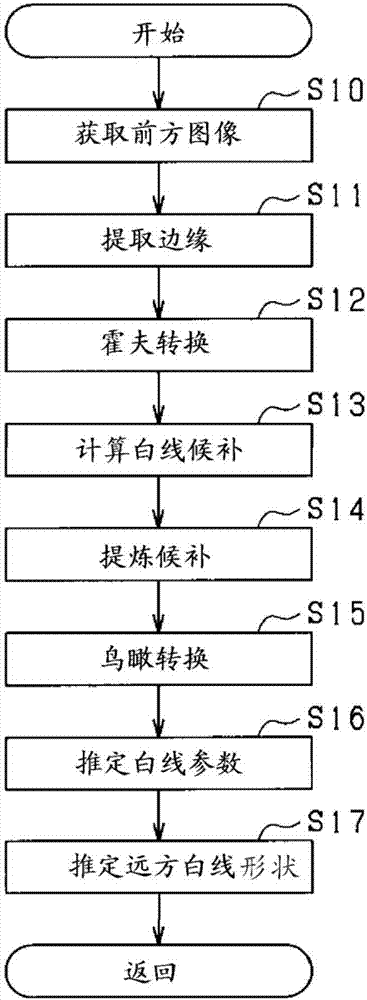

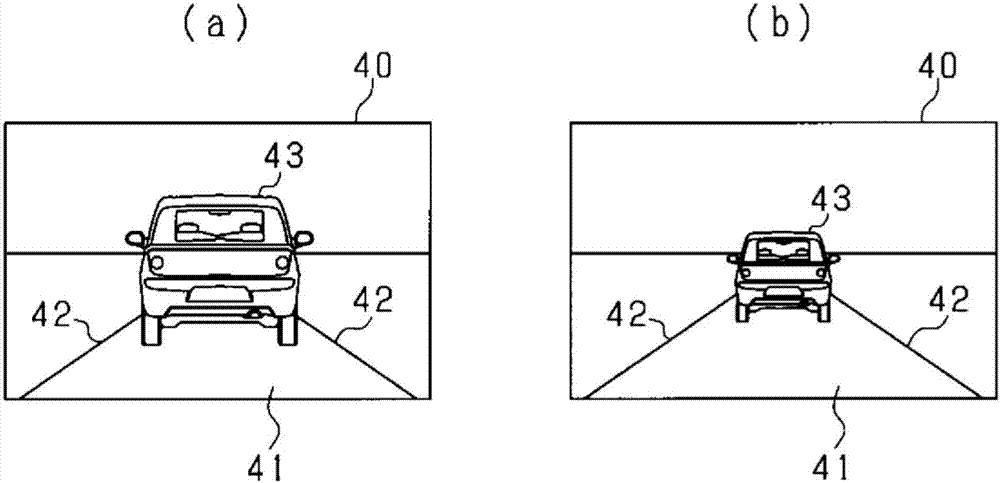

[0016] Hereinafter, the lane marking recognition device according to the present embodiment will be described with reference to the drawings. The lane marking recognition device of this embodiment is mounted on a vehicle. It recognizes white lines, which are the lane markings that define the driving lane of the vehicle. Information about the white lines recognized by the device (for example, white line information) is used for driving assistance control. Examples include adaptive cruise control, in which the own vehicle follows the preceding vehicle, that is, the vehicle traveling in the same lane as the own vehicle among the vehicles driving ahead of it, and lane keeping assist, which controls the driving of the vehicle so that it does not deviate from its driving lane. First, using Figure 1, a schematic configuration of the lane marking recognition device according to this embodiment will be descri...
