Intelligent voice interaction method and device

An intelligent voice interaction method and device, applied in voice analysis, voice recognition, instruments, and related fields, which addresses problems such as recognition errors and the difficulty of ensuring high accuracy.

Embodiment Construction

[0091] In order to enable those skilled in the art to better understand the solutions of the embodiments of the present invention, the embodiments of the present invention will be further described in detail below in conjunction with the drawings and implementations.

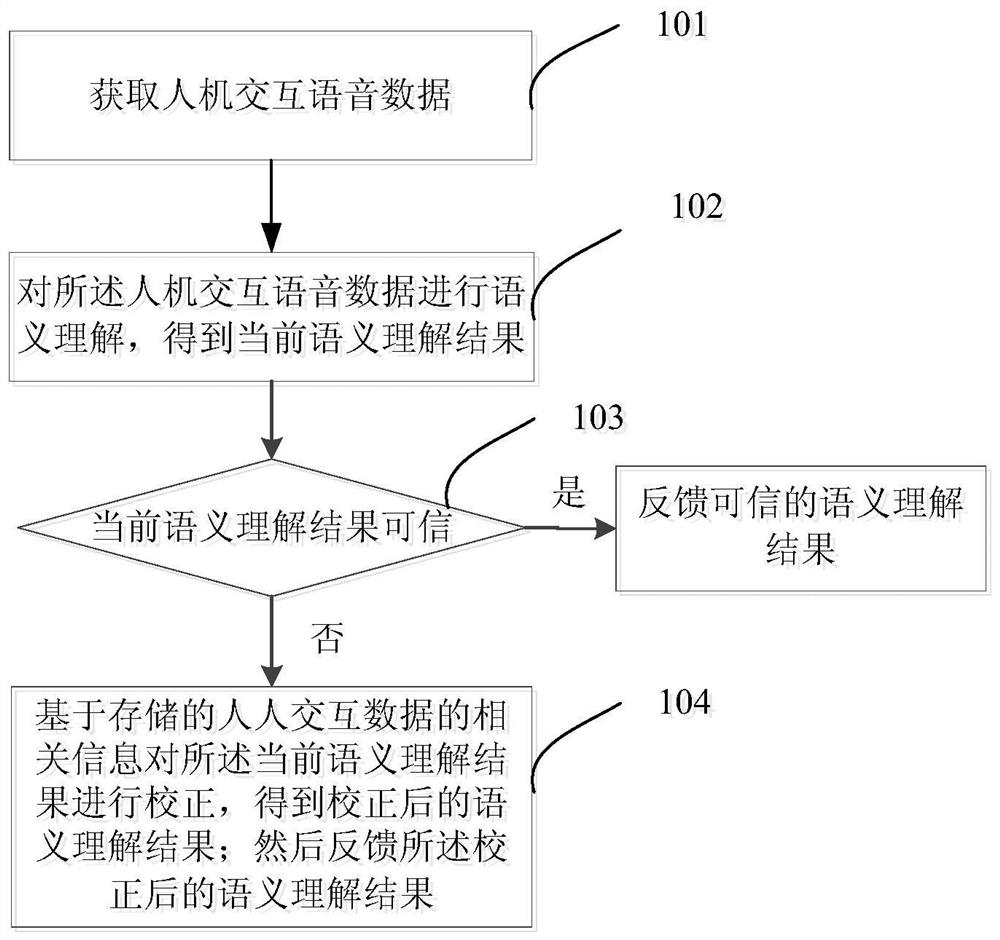

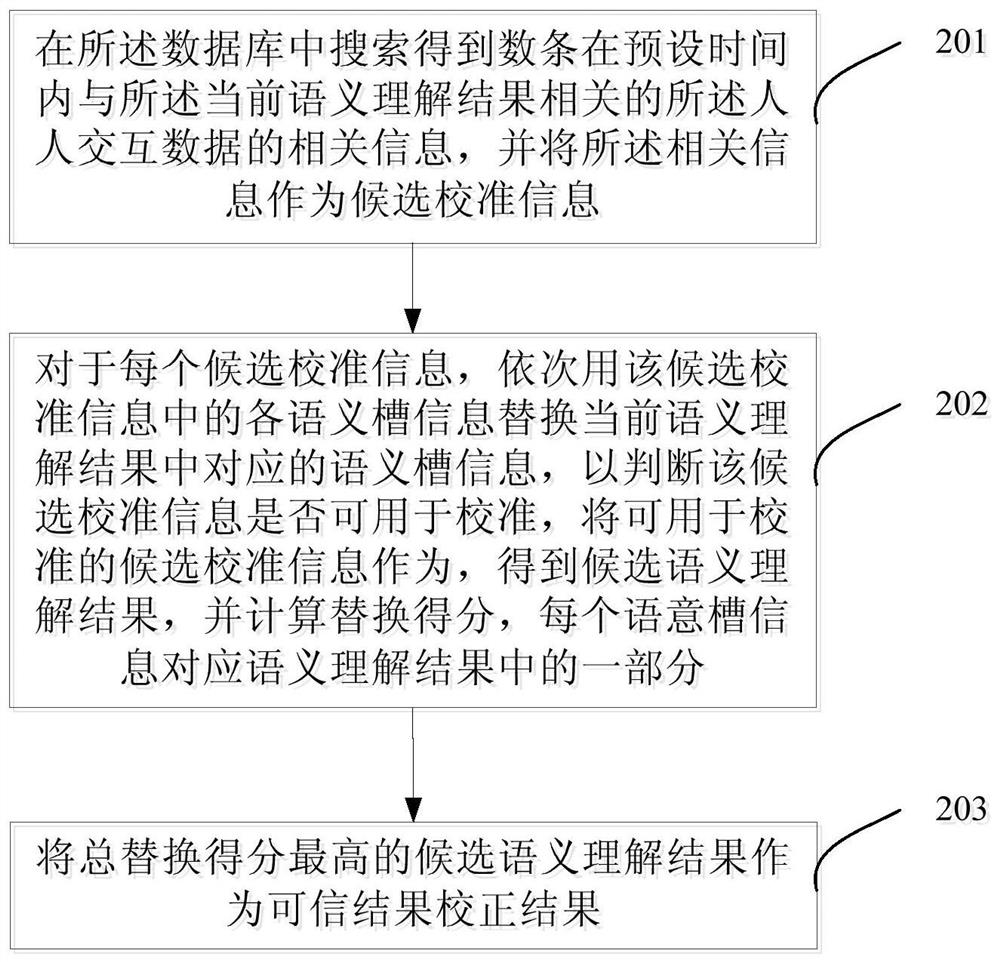

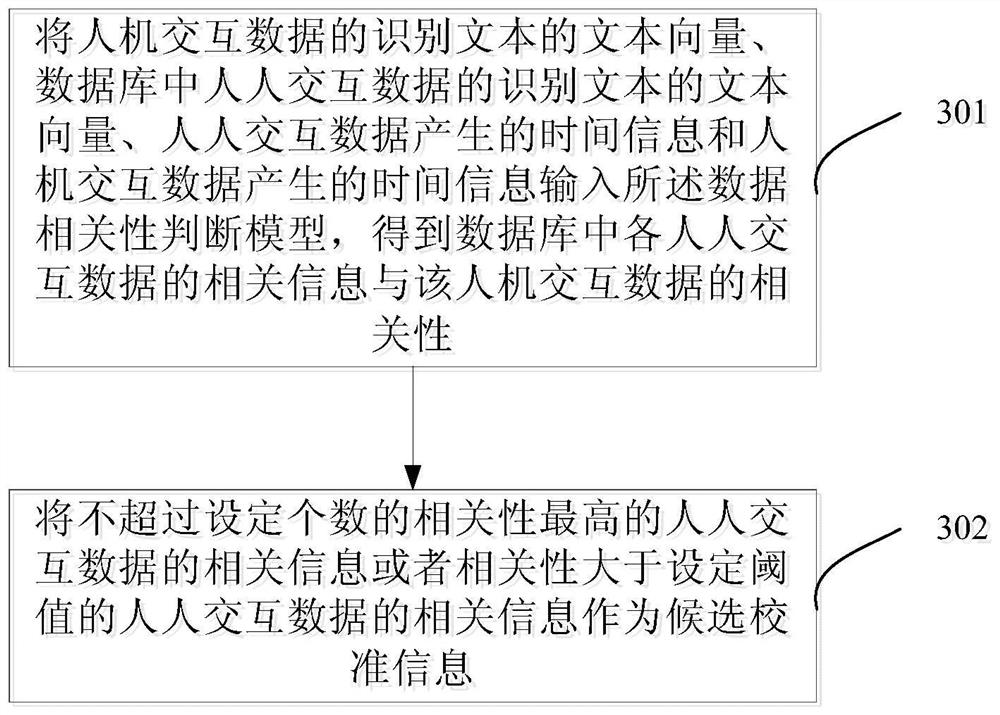

[0092] Existing intelligent interaction methods in the vehicle environment generally perform semantic understanding only on the current round of interaction. However, in some human-computer interaction environments there is other speech as well, such as users in the car talking to other passengers or making phone calls with others. This speech usually contains information related to the content of the human-computer interaction, and much of the information related to in-vehicle services is implied in it. This information is of great help in improving intent understanding in human-computer interaction. To this end, the e...
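
The idea in [0092], that speech around a command can help resolve the command's intent, can be illustrated with a toy sketch. This is not the method of the embodiment, whose description is truncated here. The `ContextBuffer` class, the `understand` function, and the `KNOWN_PLACES` keyword list are all hypothetical names chosen for illustration, and simple keyword matching stands in for real semantic understanding.

```python
from collections import deque
from dataclasses import dataclass, field

# Hypothetical lexicon: place words the toy parser can recognize.
KNOWN_PLACES = {"airport", "hospital", "supermarket"}


@dataclass
class ContextBuffer:
    """Keeps the most recent background utterances heard in the cabin."""
    max_items: int = 5
    utterances: deque = field(default_factory=deque)

    def add(self, text: str) -> None:
        # Store lowercased text and drop the oldest entries beyond max_items.
        self.utterances.append(text.lower())
        while len(self.utterances) > self.max_items:
            self.utterances.popleft()

    def latest_place(self):
        # Scan from the newest utterance to the oldest for a known place word.
        for text in reversed(self.utterances):
            for word in text.replace(",", " ").replace(".", " ").split():
                if word in KNOWN_PLACES:
                    return word
        return None


def understand(command: str, context: ContextBuffer) -> dict:
    """Toy intent parser: fills a missing destination from background speech."""
    words = command.lower().split()
    if "navigate" in words:
        place = next((w for w in words if w in KNOWN_PLACES), None)
        source = "command"
        if place is None:
            # The command alone is ambiguous, so fall back to the context.
            place = context.latest_place()
            source = "context" if place else "none"
        return {"intent": "navigate", "destination": place, "source": source}
    return {"intent": "unknown", "destination": None, "source": "none"}


if __name__ == "__main__":
    ctx = ContextBuffer()
    ctx.add("We need to pick up grandma at the airport, right?")
    print(understand("navigate there", ctx))
```

In this sketch, a command such as "navigate there" carries no destination by itself, so the parser looks it up in the buffered conversation. A method that considers only the current round of interaction would have nothing to fill that slot with.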
