A Visual Perceptual Coding Method Based on Multi-Domain JND Model

A visual perceptual coding technology, applied in the field of video information processing, that addresses several problems: the underlying theory is immature, existing models cannot adequately explain the characteristics of the human eye, and current coding standards do not take human visual characteristics into account.

Embodiment Construction

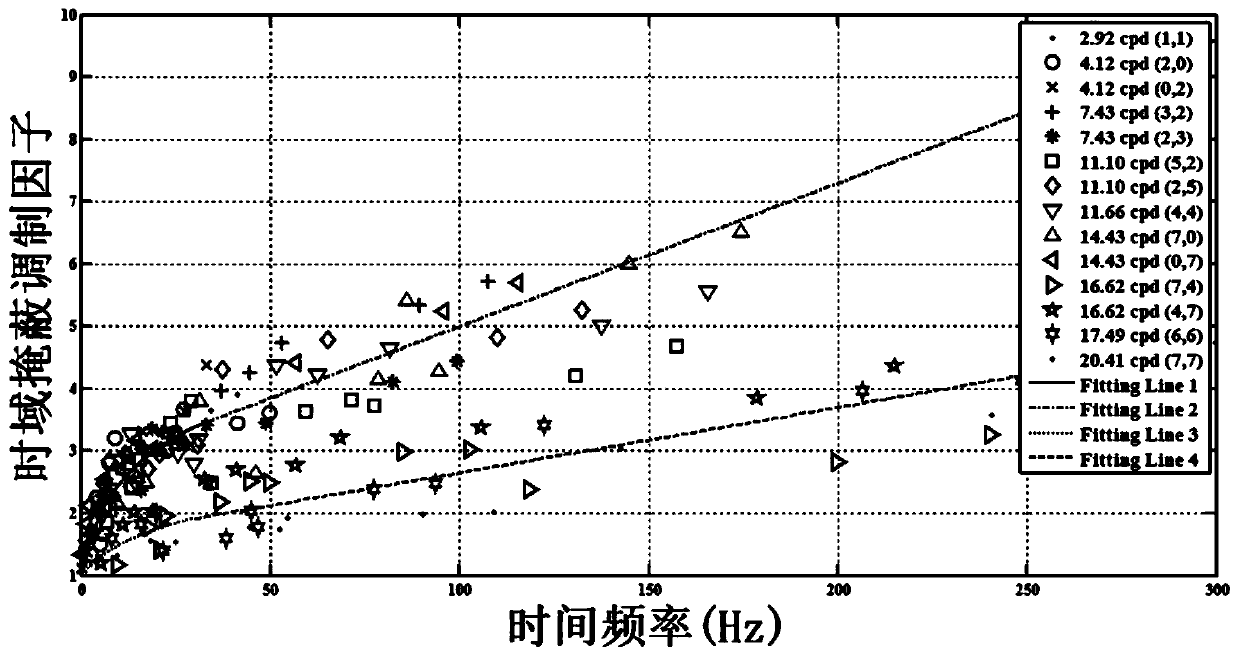

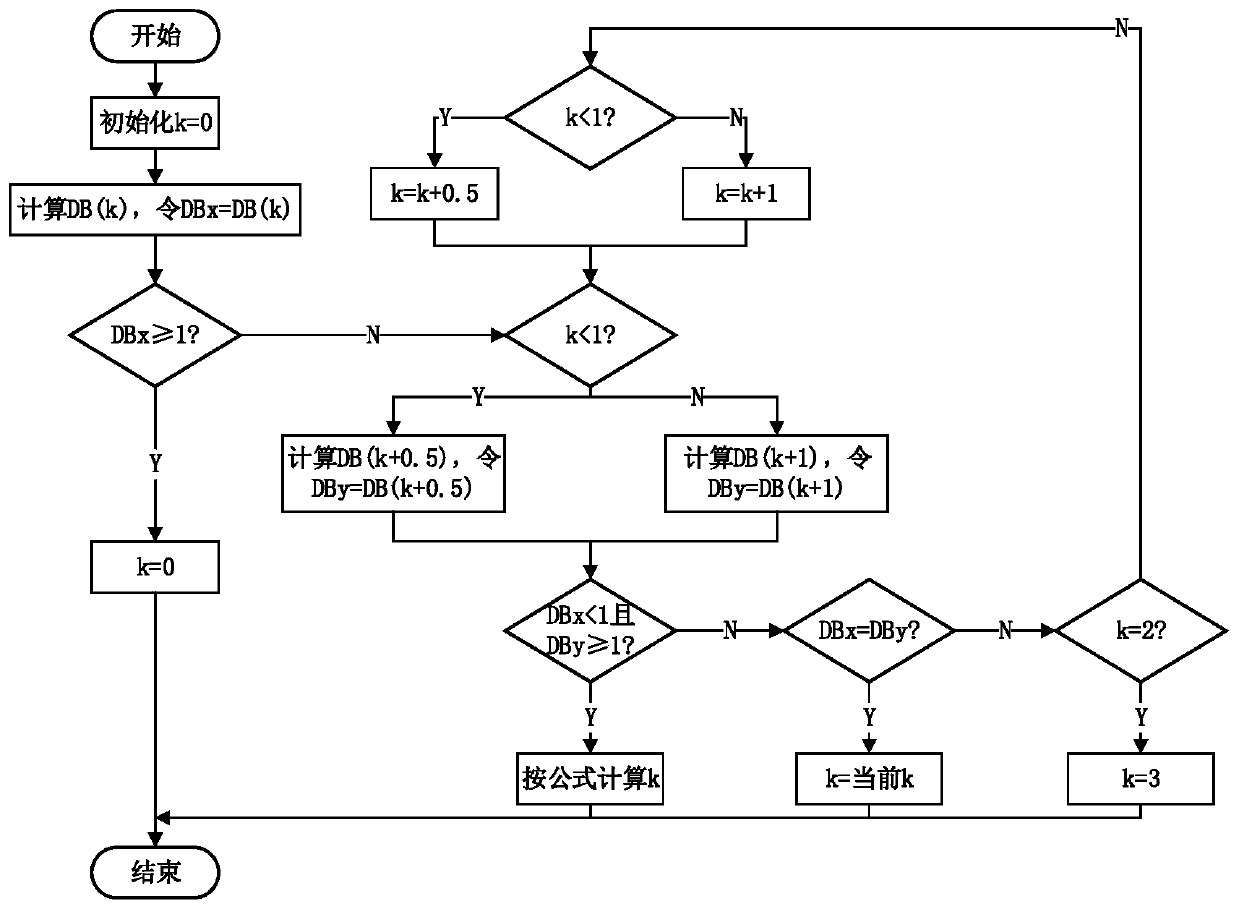

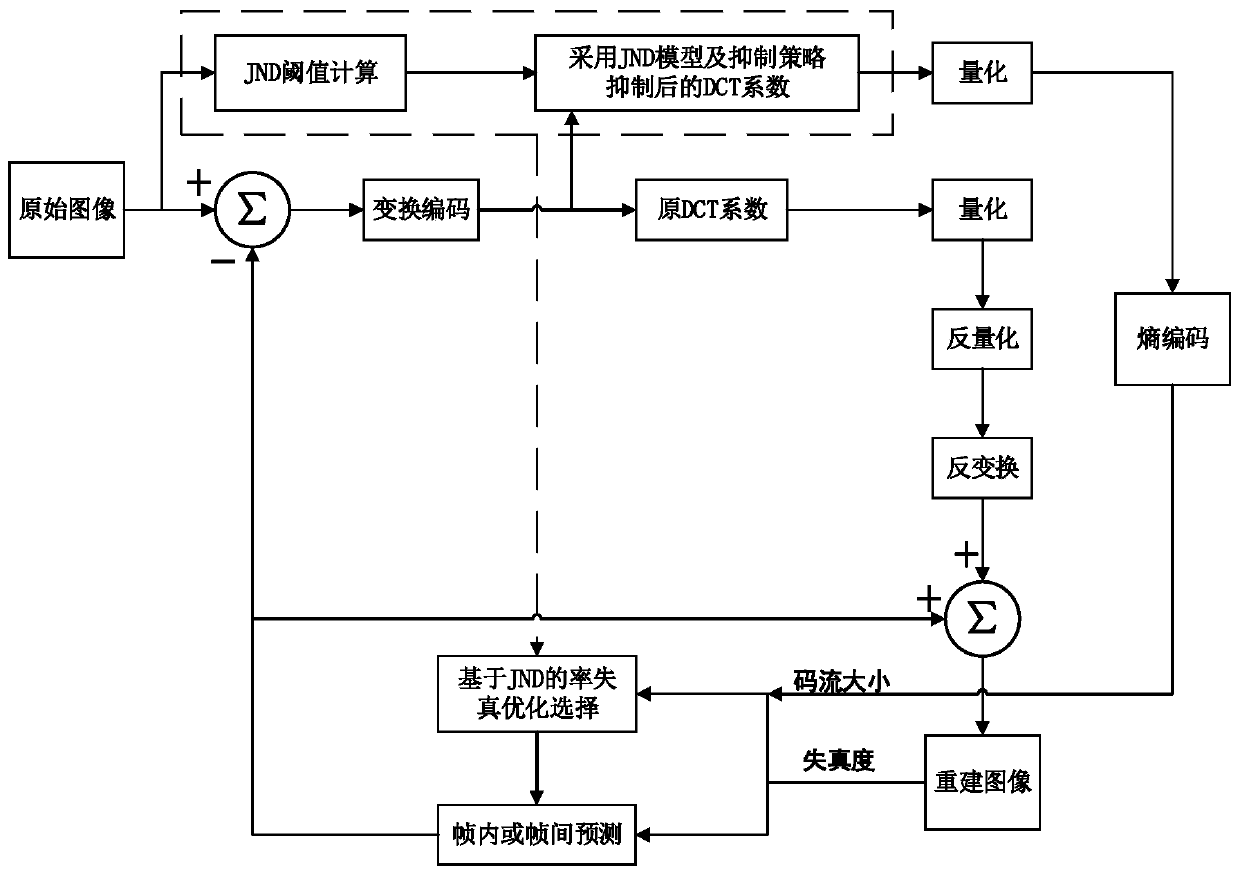

[0084] The invention provides a visual perceptual coding algorithm based on a multi-domain JND model. The algorithm has two parts: a multi-domain JND model and a coding suppression strategy. The multi-domain JND model itself has three components: spatial-domain, temporal-domain, and frequency-domain. The frequency-domain model depends only on the spatial frequency and viewing angle associated with each coefficient position in the transform block, and it is used to calculate the base JND threshold. The spatial-domain model has two parts, a luminance masking modulation factor and a contrast masking modulation factor. The luminance masking modulation factor depends on the average luminance and spatial frequency of the transform block and corrects for the human eye's differing sensitivity to distortion at different luminance levels. The contrast masking modulation factor depends on the average texture intensity and spatial frequency of the transform block and is used to correct the...
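The paragraph describes a multiplicative structure: a frequency-domain base threshold for each coefficient position, scaled by a luminance masking factor and a contrast masking factor. The Python sketch that follows illustrates that structure for an n×n DCT block under stated assumptions. The excerpt gives no formulas, so the base-threshold expression (an exponential contrast-sensitivity curve with an oblique-orientation term), the constants `A`, `B`, `C`, `S`, `R`, and the functions `luminance_factor`, `contrast_factor`, and `suppress` are hypothetical stand-ins, not the patented method. For simplicity the luminance factor here depends only on mean luminance, `suppress` zeroes coefficients below their JND (one common reading of a coding suppression strategy, assumed here), and the temporal-domain factor is omitted because the excerpt is truncated before describing it.

```python
import math

# Illustrative constants for the base-threshold curve (assumed, not from the patent).
A, B, C, S, R = 1.33, 0.11, 0.18, 0.25, 0.6


def visual_angle_per_pixel(view_ratio: float, image_height: int) -> float:
    """Visual angle in degrees covered by one pixel, with the viewing distance
    given as a multiple of the image height."""
    return 2.0 * math.degrees(math.atan(1.0 / (2.0 * view_ratio * image_height)))


def spatial_frequency(i: int, j: int, n: int, theta: float) -> float:
    """Spatial frequency (cycles/degree) of DCT coefficient (i, j) in an n x n block."""
    return math.sqrt((i / theta) ** 2 + (j / theta) ** 2) / (2.0 * n)


def base_threshold(i: int, j: int, n: int, theta: float) -> float:
    """Frequency-domain base JND: depends only on the coefficient position
    (through its spatial frequency) and the viewing geometry."""
    w = spatial_frequency(i, j, n, theta)
    # DCT normalization factors for the row and column indices.
    phi_i = math.sqrt((1.0 if i == 0 else 2.0) / n)
    phi_j = math.sqrt((1.0 if j == 0 else 2.0) / n)
    # Oblique effect: diagonal orientations are less visible, so the last
    # divisor term shrinks toward R and the threshold rises.
    sin_phi = 0.0
    if w > 0.0:
        w_i0 = spatial_frequency(i, 0, n, theta)
        w_0j = spatial_frequency(0, j, n, theta)
        sin_phi = min(1.0, 2.0 * w_i0 * w_0j / (w * w))
    cos2 = 1.0 - sin_phi ** 2
    return S * math.exp(C * w) / ((A + B * w) * phi_i * phi_j * (R + (1.0 - R) * cos2))


def luminance_factor(mean_lum: float) -> float:
    """Hypothetical luminance masking: more distortion is tolerated in very
    dark or very bright blocks (8-bit mean luminance)."""
    if mean_lum <= 60:
        return (60 - mean_lum) / 150 + 1.0
    if mean_lum >= 170:
        return (mean_lum - 170) / 425 + 1.0
    return 1.0


def contrast_factor(texture: float, w: float, w_max: float) -> float:
    """Hypothetical contrast masking: stronger texture (0..1) raises the
    threshold, more so at higher spatial frequencies; DC (w = 0) is unaffected."""
    return 1.0 + texture * (w / w_max)


def jnd_block(n: int, theta: float, mean_lum: float, texture: float) -> list[list[float]]:
    """JND per coefficient = base threshold x luminance factor x contrast factor."""
    w_max = spatial_frequency(n - 1, n - 1, n, theta)
    f_lum = luminance_factor(mean_lum)
    return [
        [
            base_threshold(i, j, n, theta) * f_lum
            * contrast_factor(texture, spatial_frequency(i, j, n, theta), w_max)
            for j in range(n)
        ]
        for i in range(n)
    ]


def suppress(coeffs: list[list[float]], jnd: list[list[float]]) -> list[list[float]]:
    """Assumed suppression rule: zero every coefficient whose magnitude is below its JND."""
    return [
        [0.0 if abs(c) < t else c for c, t in zip(c_row, t_row)]
        for c_row, t_row in zip(coeffs, jnd)
    ]
```

For example, `jnd_block(8, visual_angle_per_pixel(3.0, 1080), mean_lum=120, texture=0.5)` yields thresholds that grow with spatial frequency, so high-frequency residuals are the first to be suppressed. Keeping each factor in its own function makes it straightforward to swap in the actual modulation functions of the multi-domain model.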
