299 results about "Space time domain" patented technology

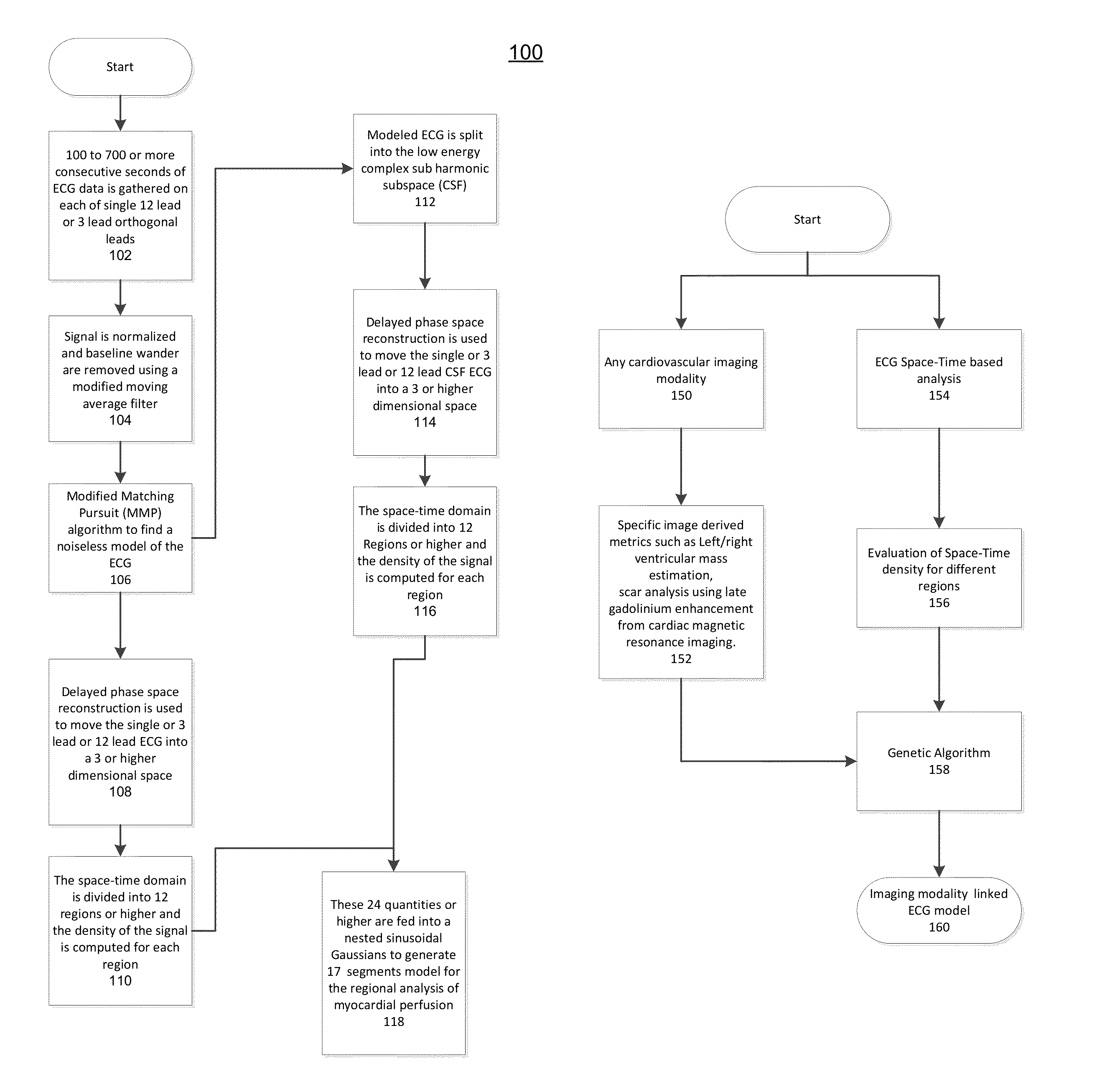

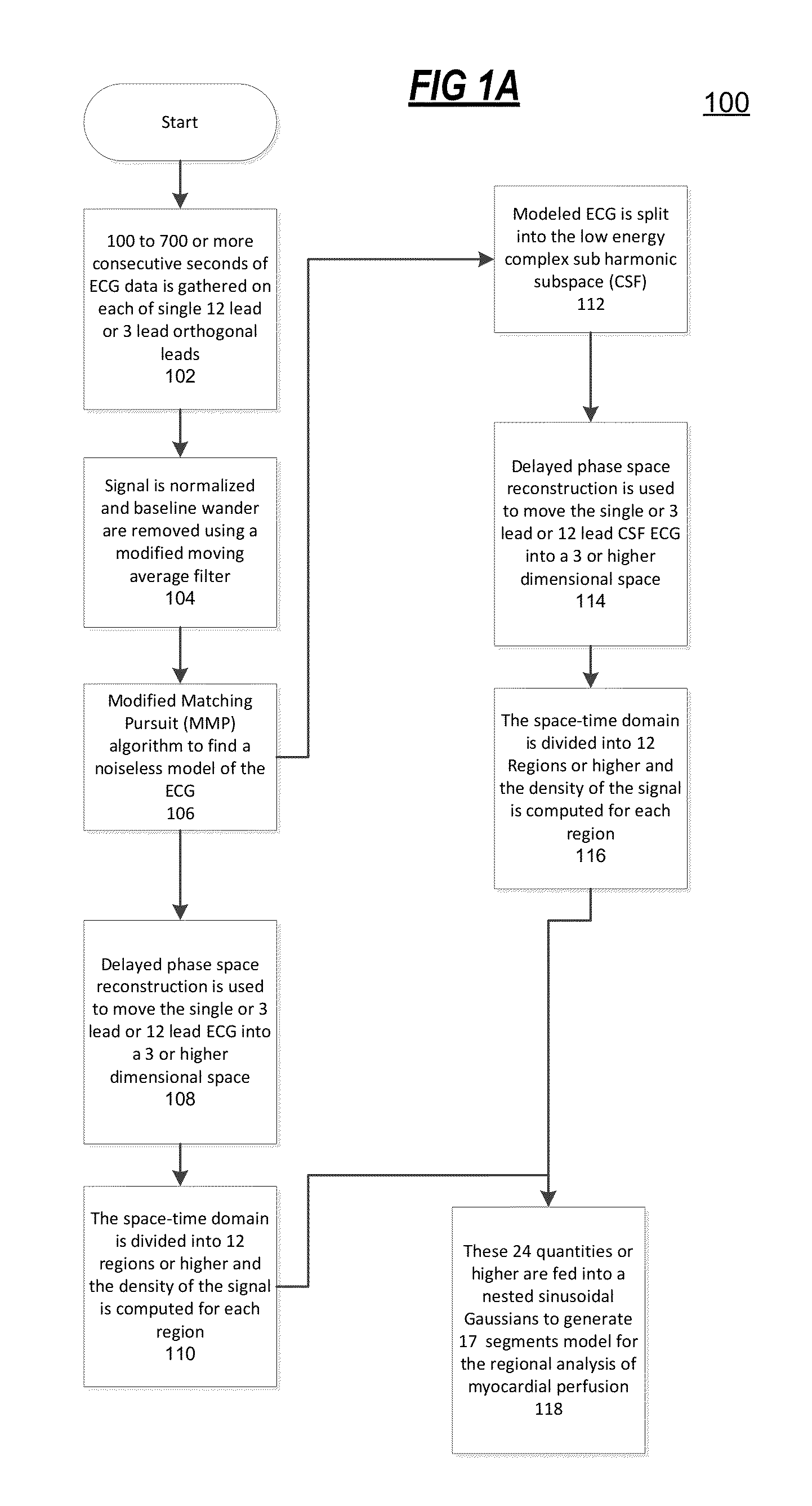

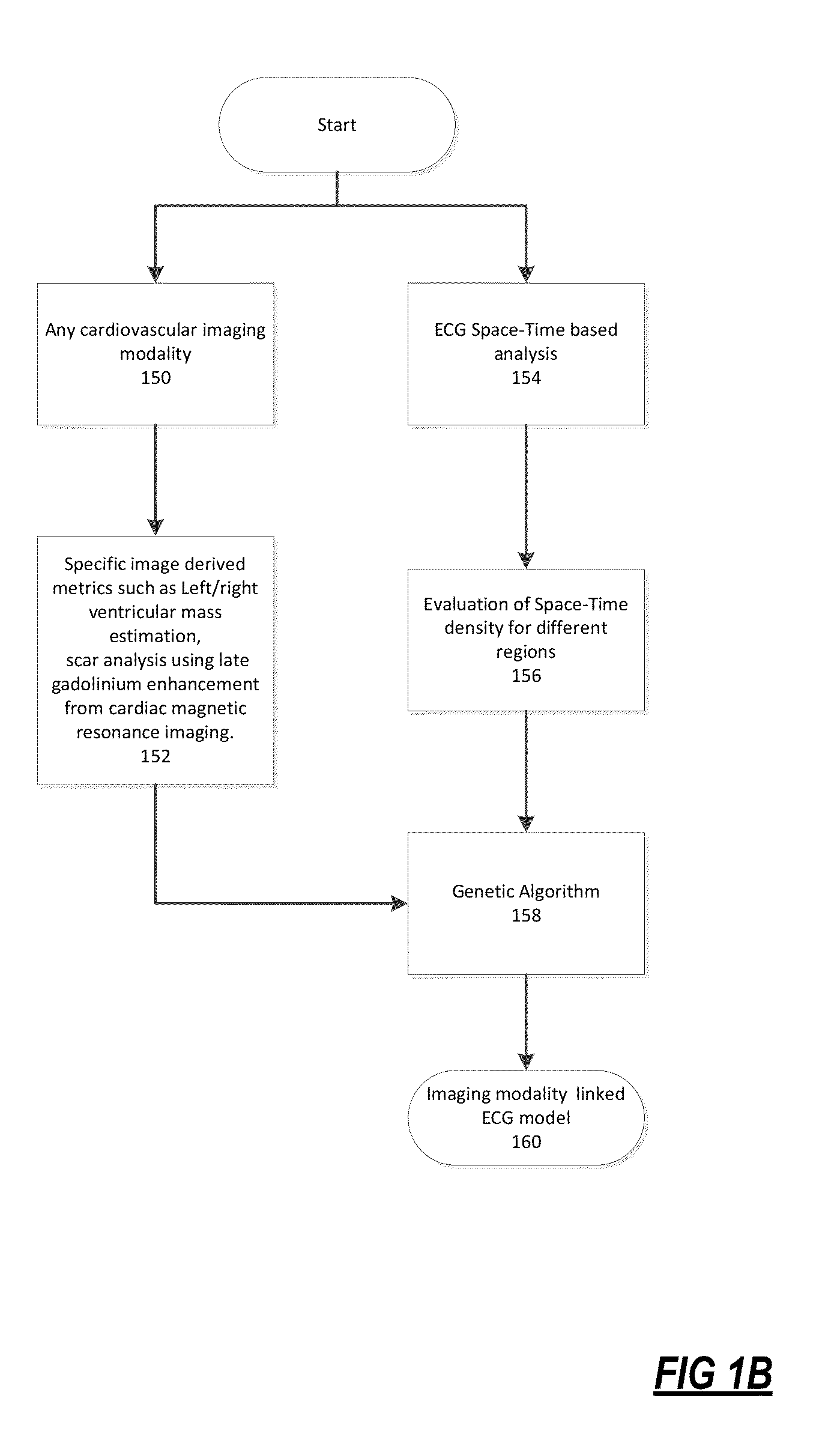

Non-invasive method and system for characterizing cardiovascular systems

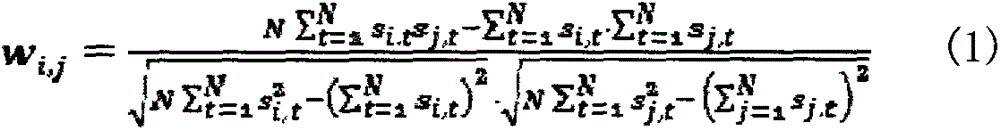

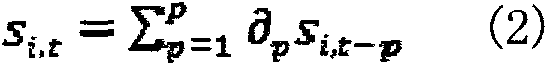

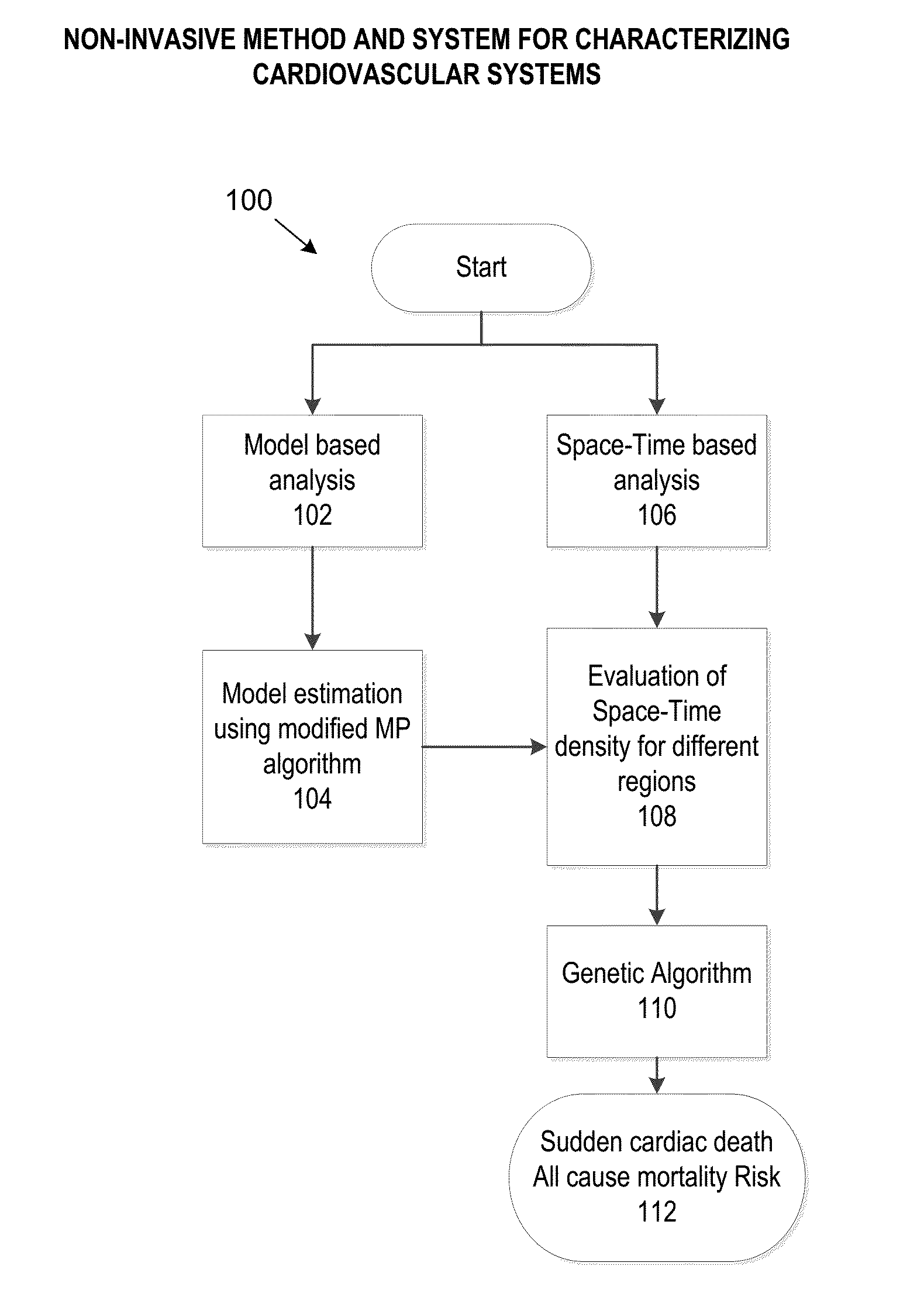

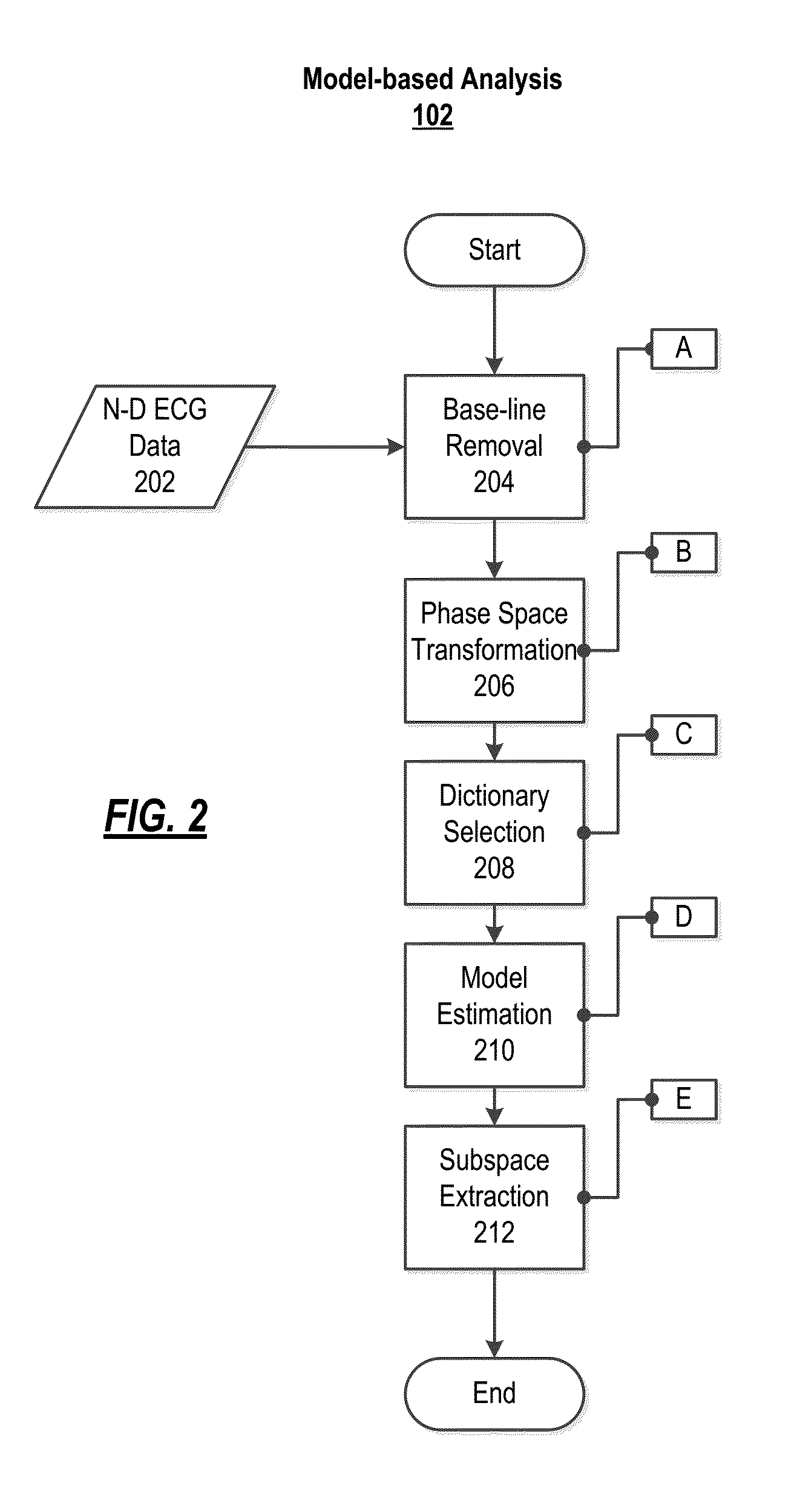

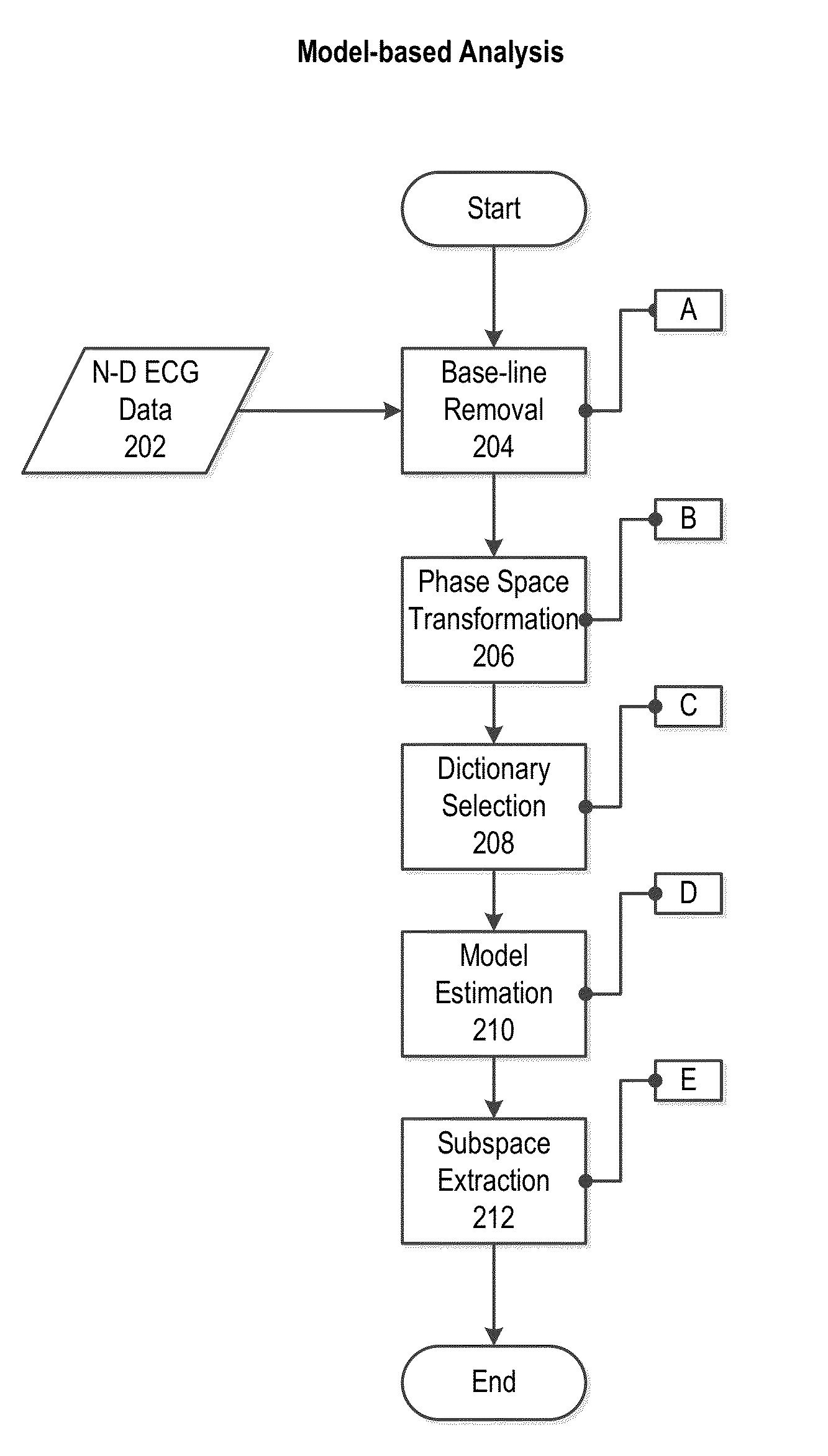

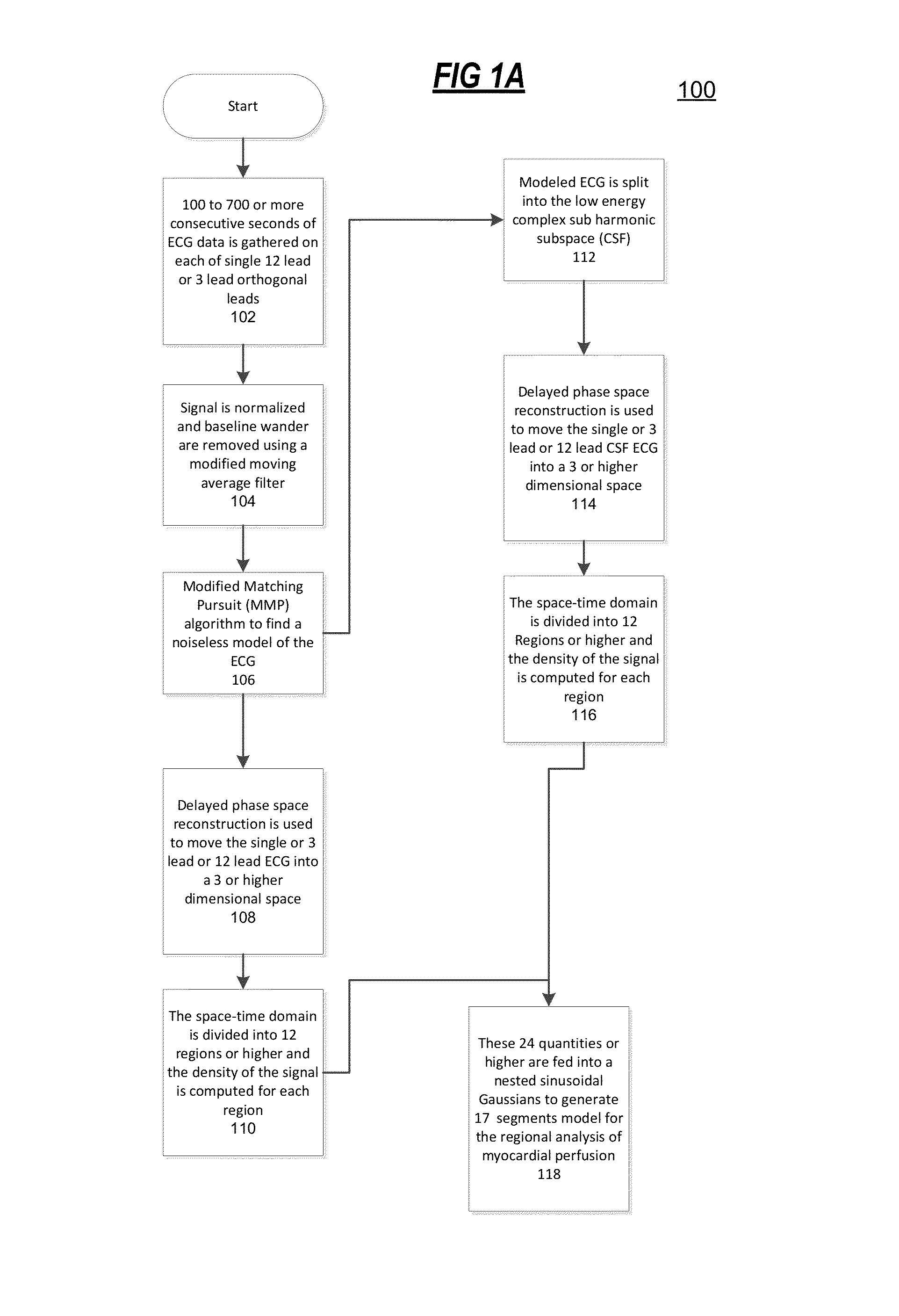

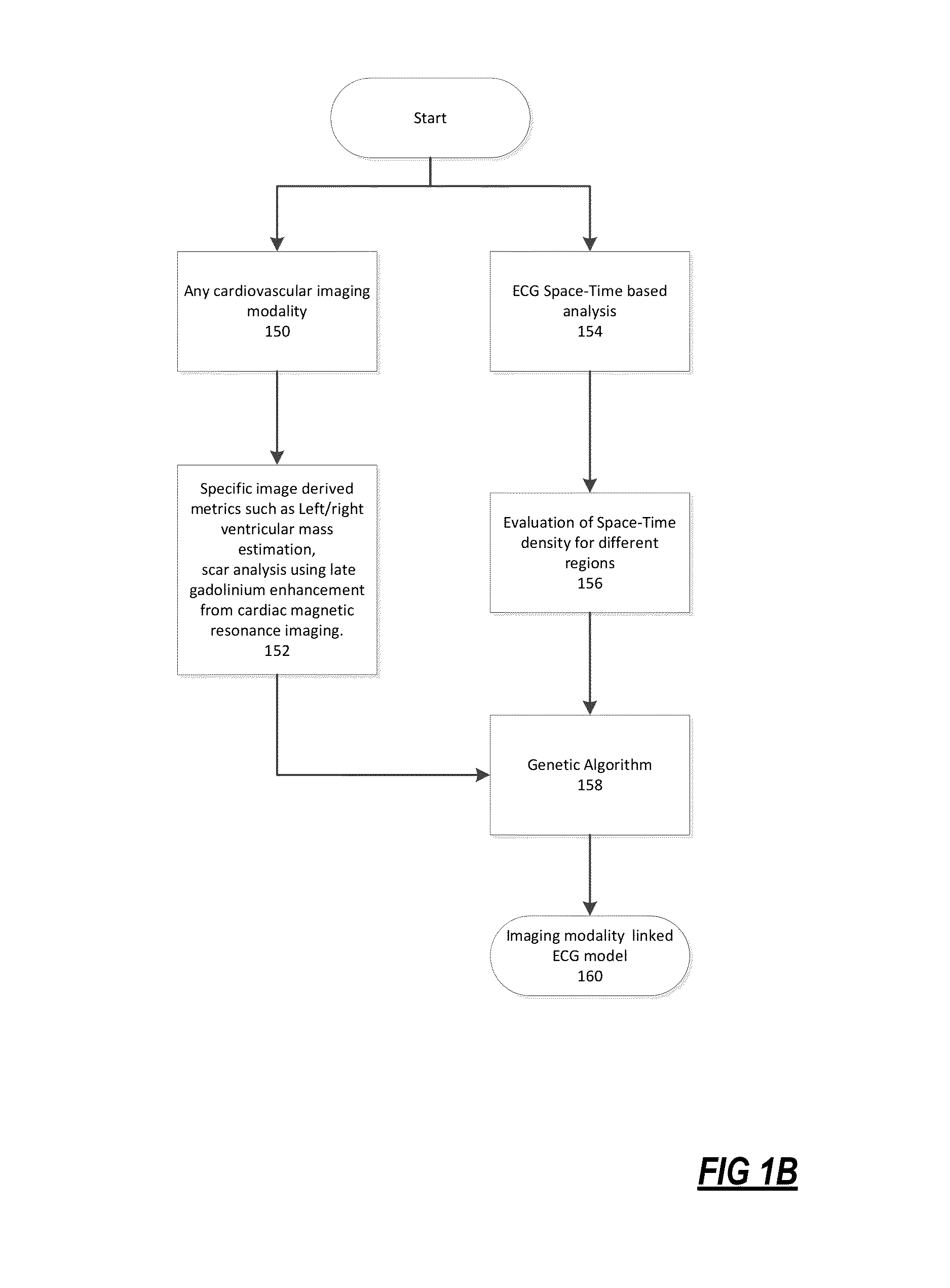

The present disclosure uses physiological data, with ECG signals as an example, to evaluate cardiac structure and function in mammals. Two approaches are presented: a model-based analysis and a space-time analysis. The first method uses a modified Matching Pursuit (MMP) algorithm to find a noiseless model of the ECG data that is sparse and does not assume periodicity of the signal. After the model is derived, various metrics and subspaces are extracted to image and characterize cardiovascular tissues using complex-sub-harmonic-frequencies (CSF) quasi-periodic and other mathematical methods. In the second method, the space-time domain is divided into a number of regions, and the density of the ECG signal is computed in each region and input into a learning algorithm to image and characterize the tissues.

Owner:ANALYTICS FOR LIFE
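The second (space-time) approach in the abstract above can be illustrated with a minimal sketch. It assumes that the "space" axis is simply the signal amplitude and that regions form a regular grid; neither detail is stated in the abstract. The function name `density_grid` and the synthetic sine trace are hypothetical stand-ins, not the patent's method or data.

```python
import math

def density_grid(signal, n_time_bins, n_amp_bins):
    """Return the fraction of samples that fall in each (time, amplitude) cell."""
    n = len(signal)
    lo, hi = min(signal), max(signal)
    span = (hi - lo) or 1.0  # avoid division by zero for a flat signal
    counts = [[0] * n_amp_bins for _ in range(n_time_bins)]
    for i, value in enumerate(signal):
        t = i * n_time_bins // n  # time bin of sample i
        a = min(int((value - lo) / span * n_amp_bins), n_amp_bins - 1)
        counts[t][a] += 1
    return [[c / n for c in row] for row in counts]

# Synthetic stand-in for an ECG trace (assumption: not real physiological data).
signal = [math.sin(2 * math.pi * k / 50) for k in range(200)]

# Flatten the grid into a feature vector that a learning algorithm could consume.
features = [d for row in density_grid(signal, 4, 3) for d in row]
```

The flattened densities sum to one, so the feature vector does not depend on the recording length.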

Method and system for the efficient calculation of unsteady processes on arbitrary space-time domains

Inactive | US20080300835A1 | Computation using non-denominational number representation | Design optimisation/simulation | Time domain | Design improvement

Owner:HIXON TECH

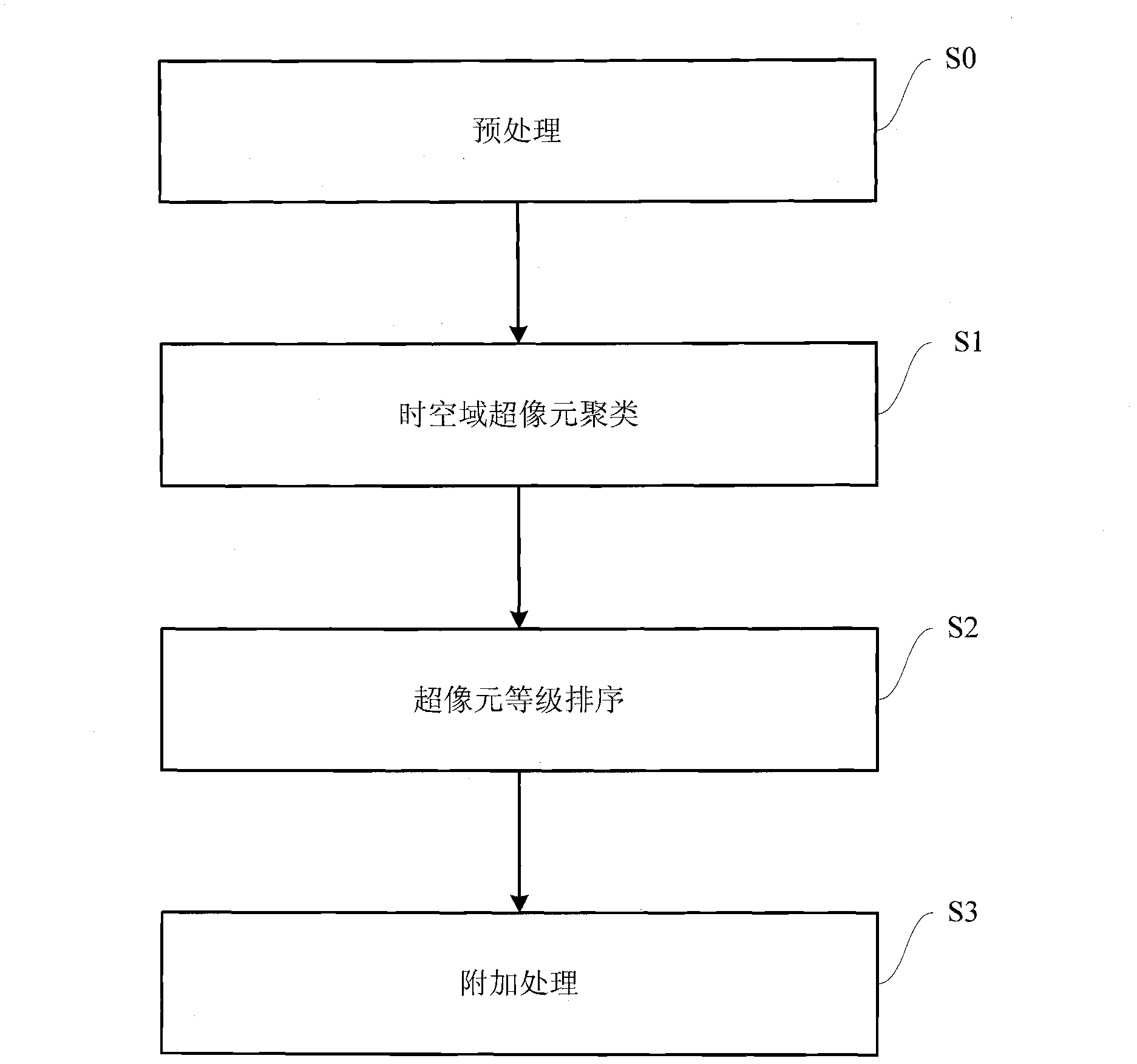

Method for partial reference evaluation of wireless videos based on space-time domain feature extraction

Inactive | CN101742355A | Increased disgust | Reflects the degree of spatial distortion | Television systems | Digital video signal modification | Computation complexity | Objective quality

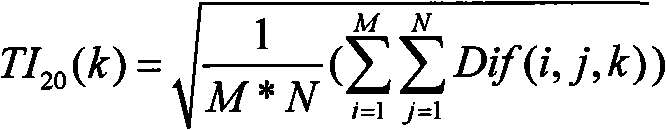

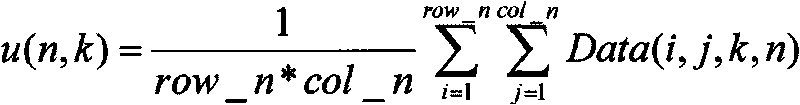

The invention discloses a method for partial reference evaluation of wireless videos based on space-time domain feature extraction, which relates to video quality evaluation. Building on a detailed analysis of existing objective video quality evaluation models and on the visual characteristics of the human eye, the invention improves the conventional SSIM model. The method takes the fluency of the video in the time domain and its structural similarity and definition in the space domain as the main evaluation indexes. While maintaining evaluation accuracy, it extracts characteristic parameters of the ST domains (the space domain and the time domain), establishes a new evaluation model, reduces the reference data needed for the evaluation and lowers the computational complexity, so that the model is suitable for evaluating the quality of wirelessly transmitted videos in real time.

Owner:XIAMEN UNIV +1

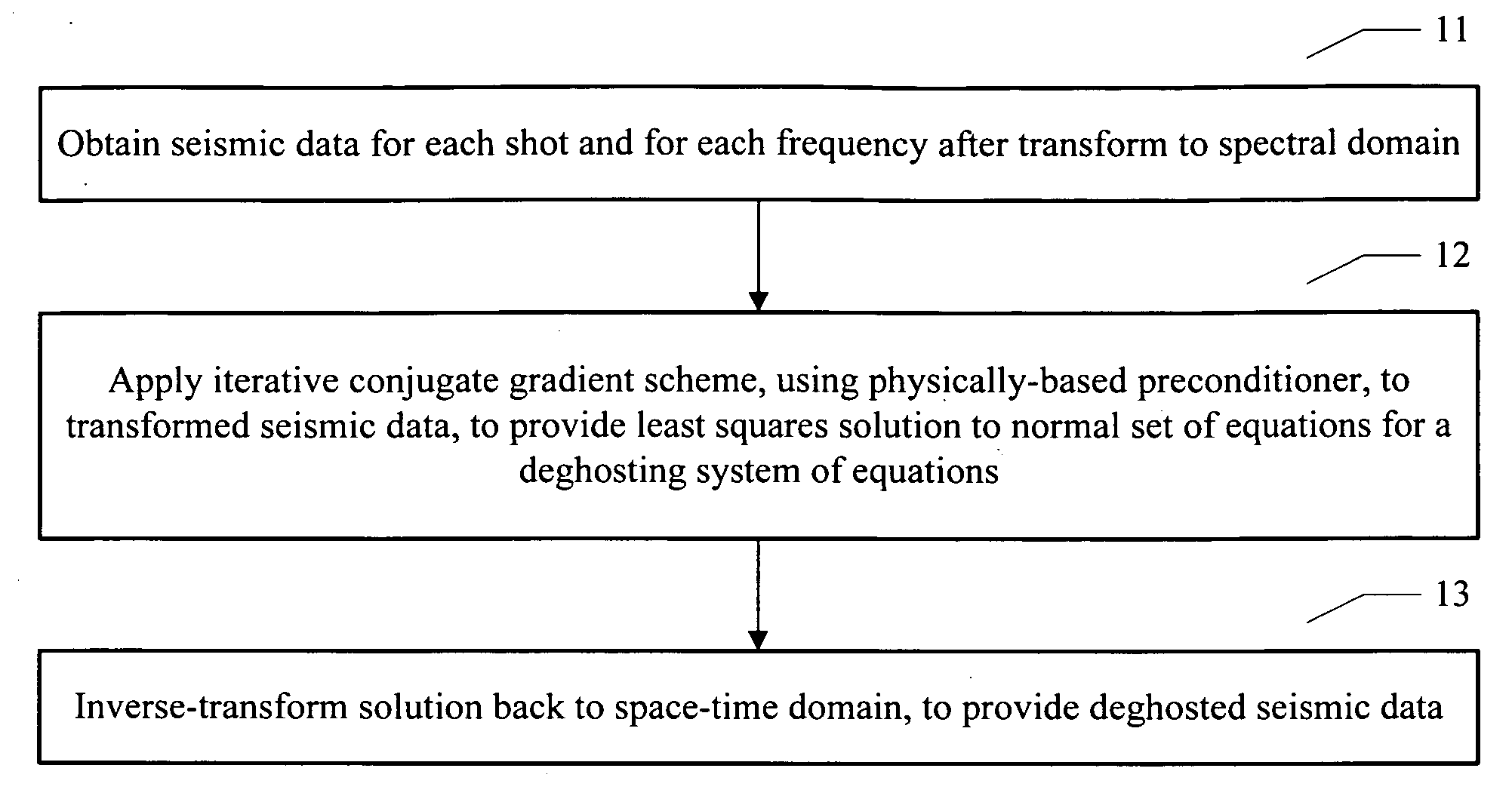

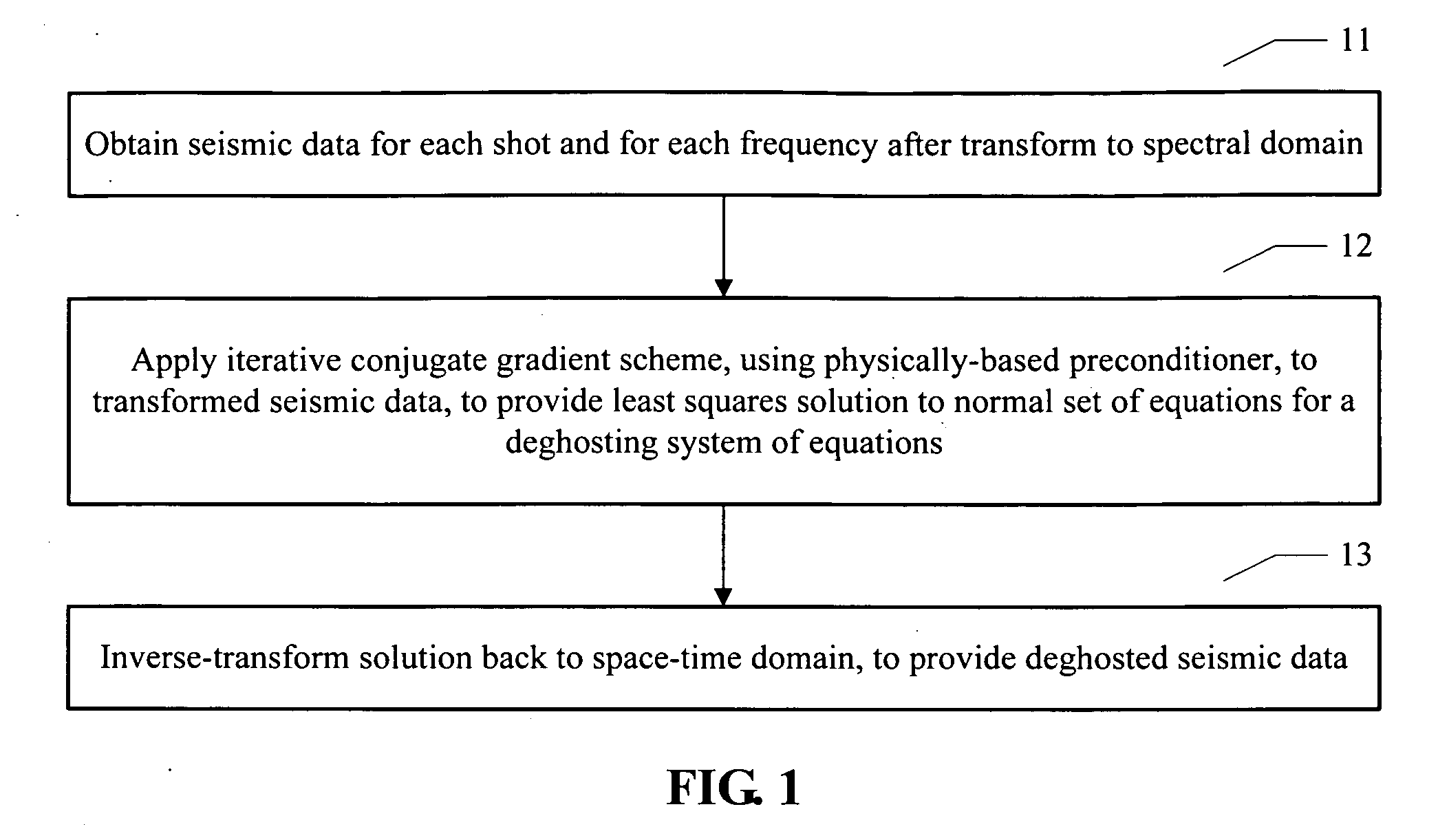

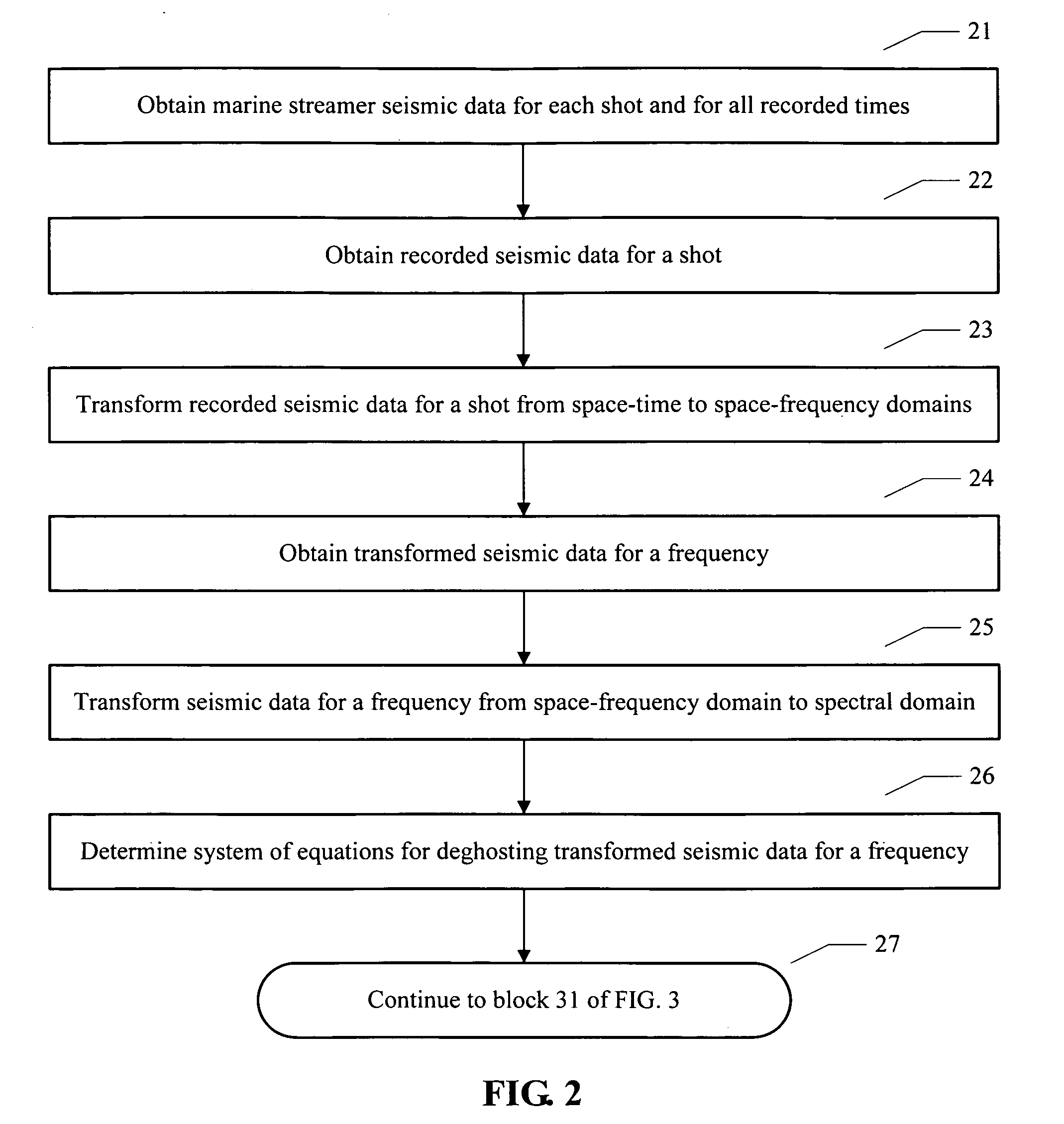

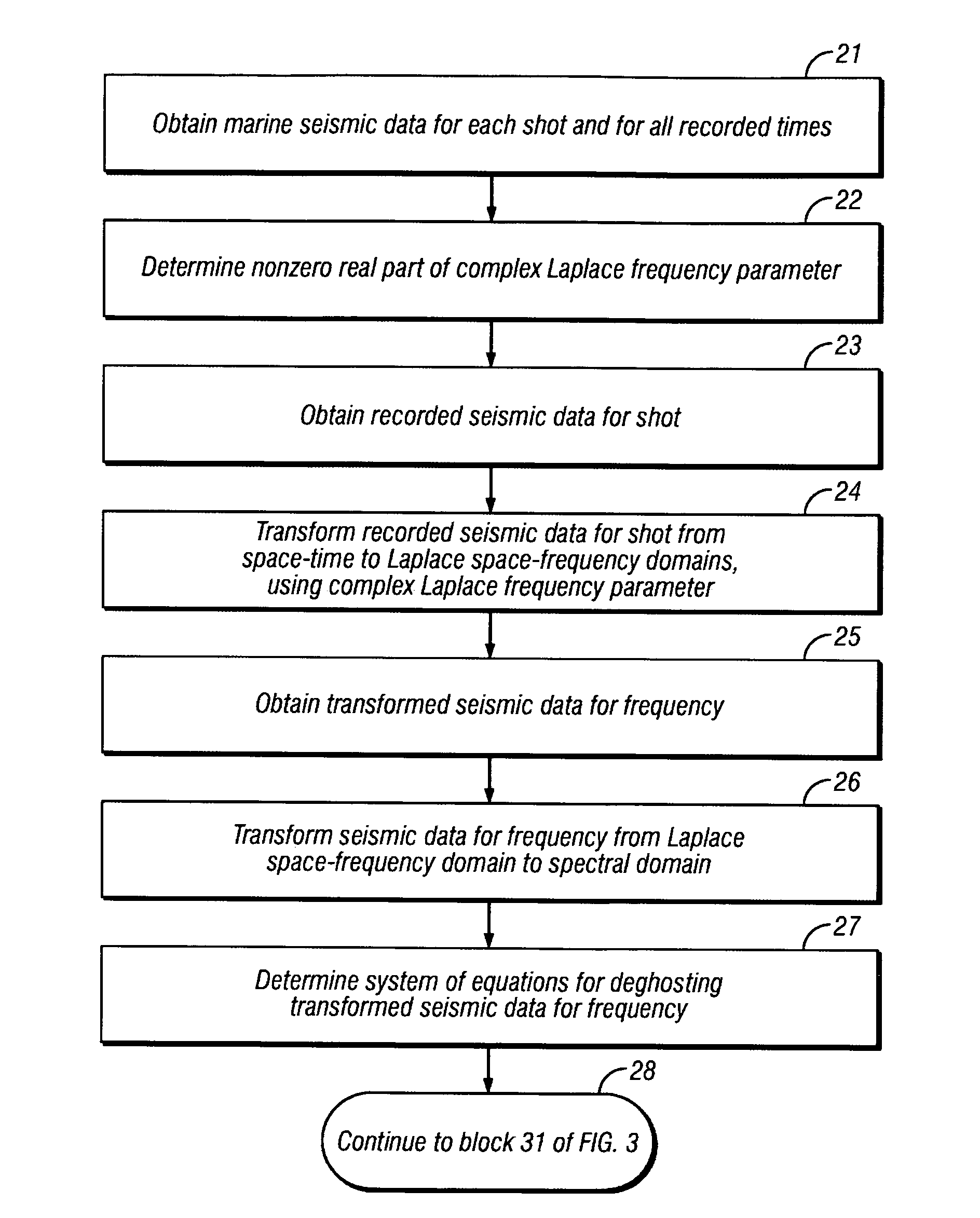

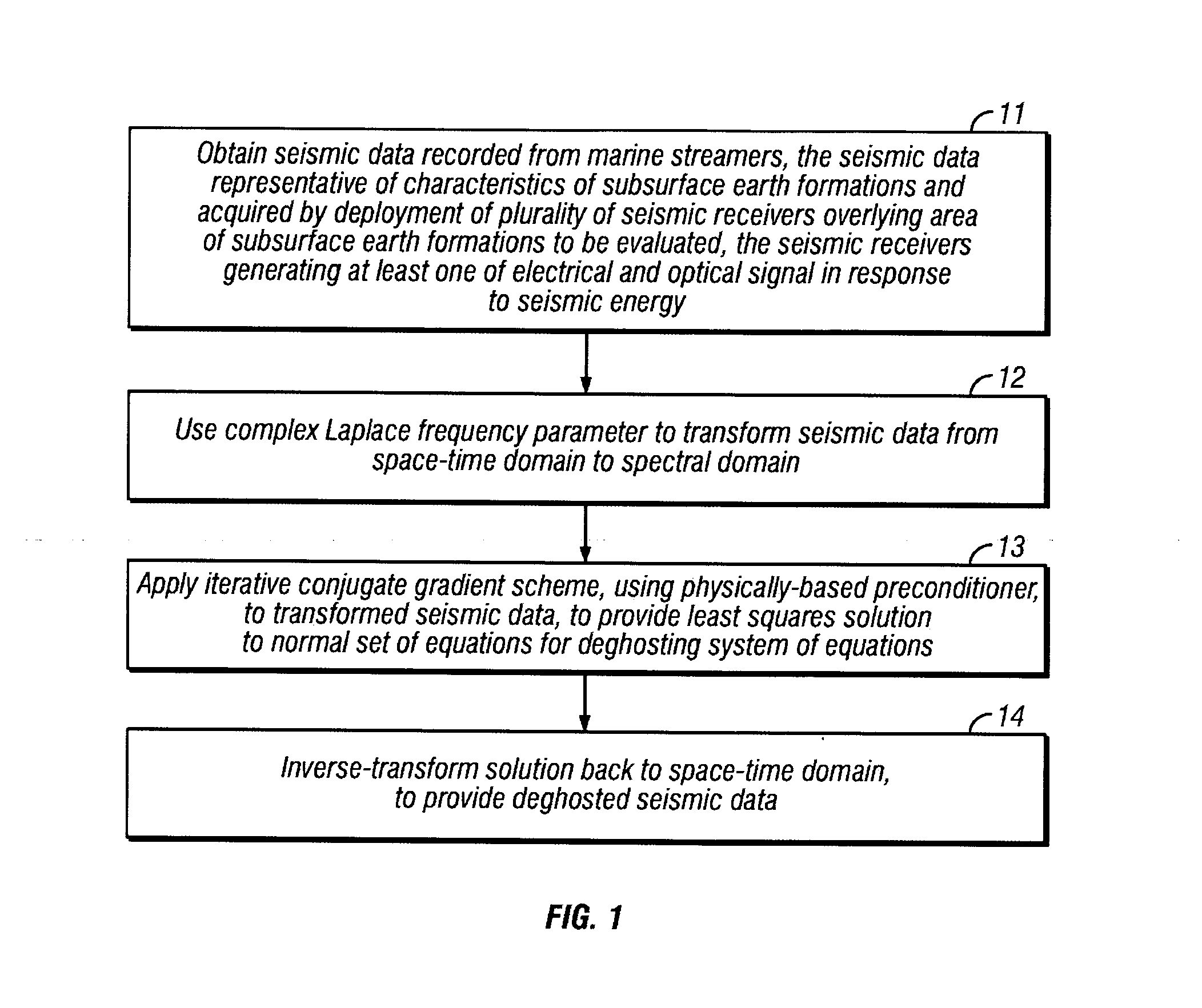

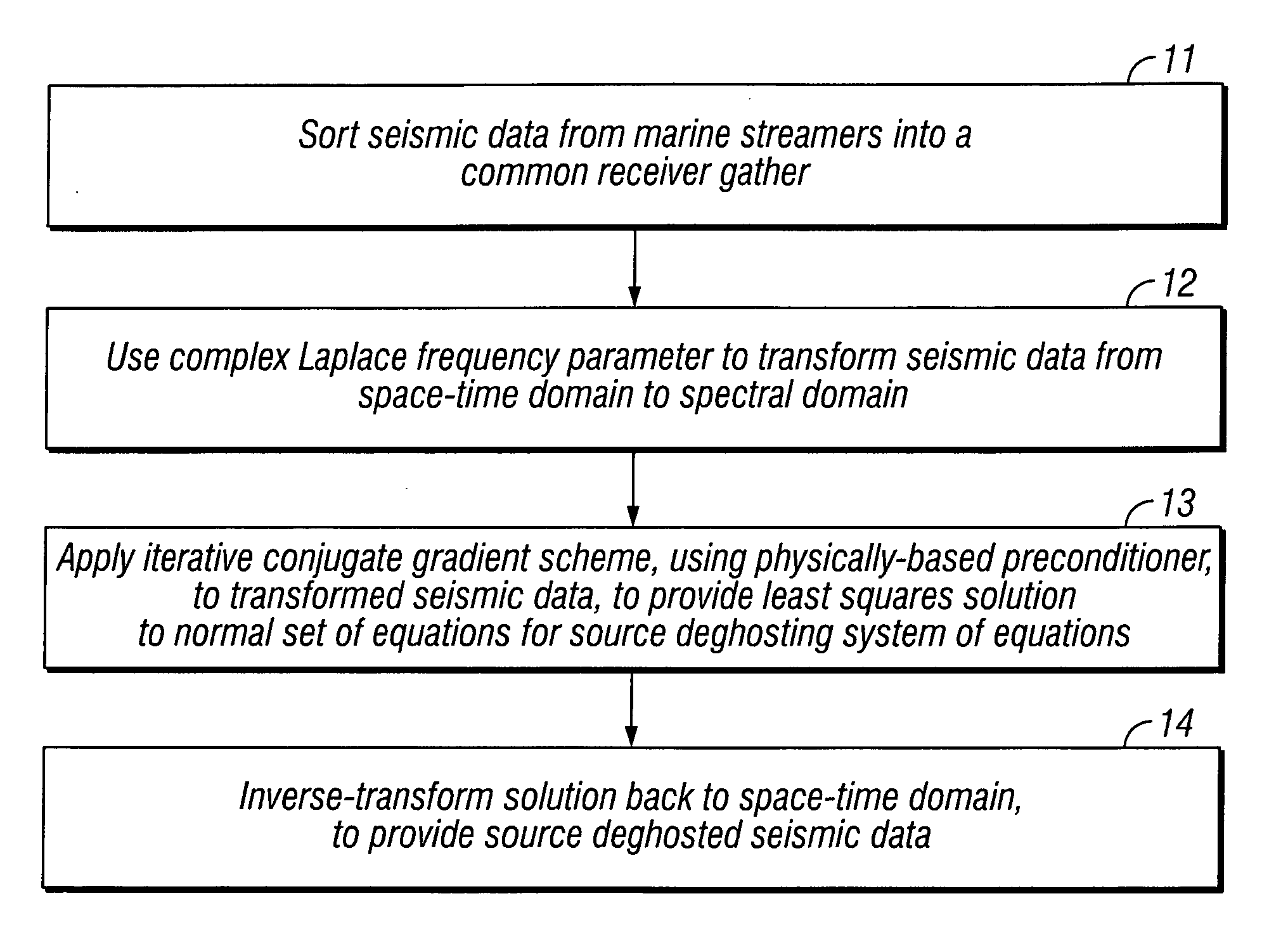

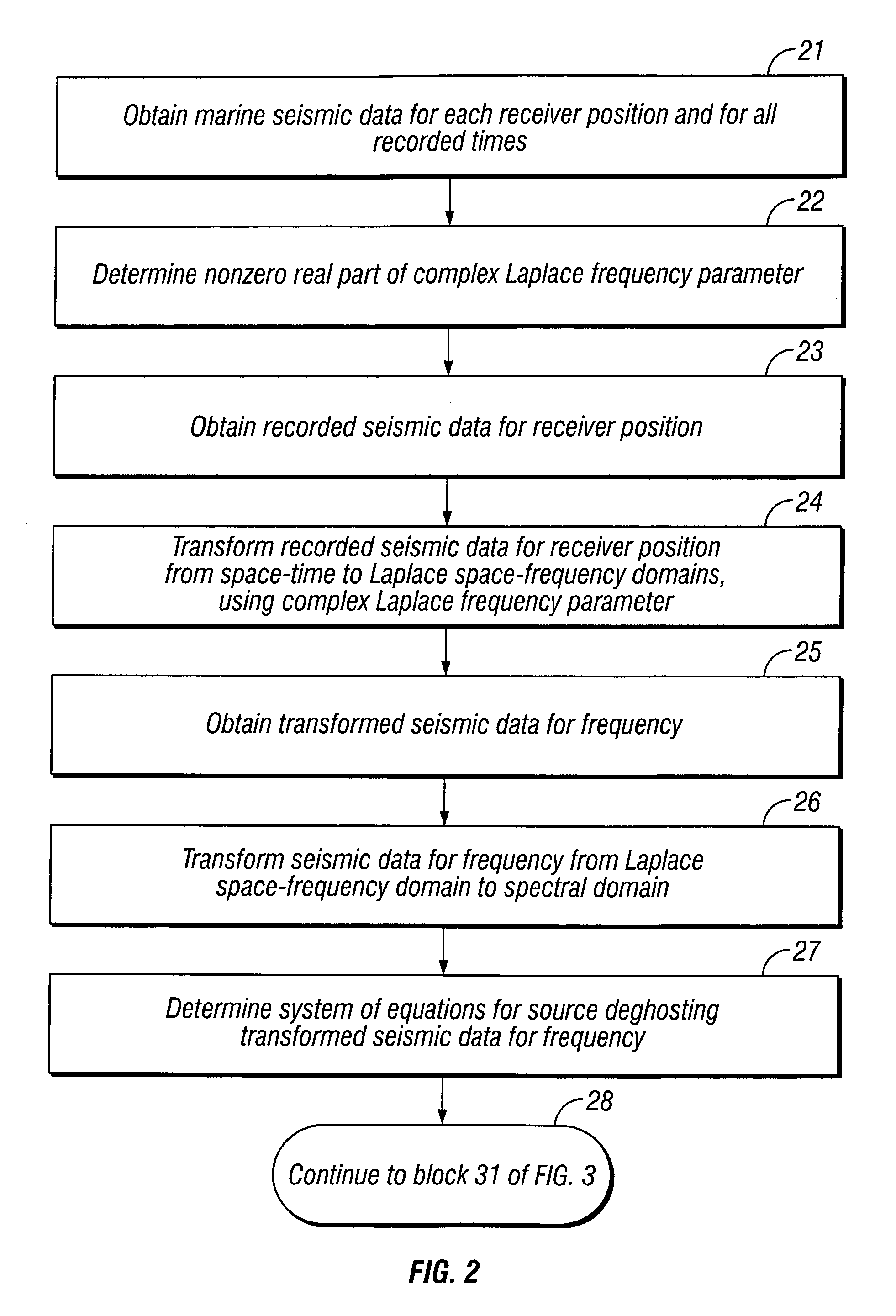

Method for deghosting marine seismic streamer data with irregular receiver positions

Inactive | US20090251992A1 | Seismic signal receivers | Seismic signal processing | Space time domain | Spectral domain

Seismic data are obtained for each seismic source activation in a marine streamer and for each frequency, after being transformed to a spectral domain. An iterative conjugate gradient scheme, using a physically-based preconditioner, is applied to the transformed seismic data, to provide a least squares solution to a normal set of equations for a deghosting system of equations. The solution is inverse-transformed back to a space-time domain to provide deghosted seismic data.

Owner:PGS GEOPHYSICAL AS +1
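The deghosting abstract above relies on a preconditioned conjugate gradient iteration applied to the normal equations of a least squares problem. The sketch below shows that numerical pattern on a tiny real-valued system. The Jacobi (diagonal) preconditioner is a generic stand-in, not the physically-based preconditioner of the patent, and the matrix `A` is a made-up example rather than a deghosting operator.

```python
def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def pcg_normal_equations(A, b, iters=50, tol=1e-12):
    """Minimise ||Ax - b||^2 by preconditioned CG on A^T A x = A^T b."""
    At = transpose(A)
    n = len(At)
    # Jacobi preconditioner: inverse of the diagonal of A^T A (assumed stand-in).
    m_inv = [1.0 / dot(col, col) for col in At]
    x = [0.0] * n
    r = matvec(At, b)  # residual of the normal equations at x = 0
    z = [mi * ri for mi, ri in zip(m_inv, r)]
    p = z[:]
    rz = dot(r, z)
    for _ in range(iters):
        if dot(r, r) < tol:
            break
        Ap = matvec(At, matvec(A, p))
        alpha = rz / dot(p, Ap)
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * qi for ri, qi in zip(r, Ap)]
        z = [mi * ri for mi, ri in zip(m_inv, r)]
        rz_new = dot(r, z)
        p = [zi + (rz_new / rz) * pi for zi, pi in zip(z, p)]
        rz = rz_new
    return x

# Hypothetical overdetermined but consistent system with known solution.
A = [[2.0, 0.0], [1.0, 3.0], [0.0, 1.0]]
x_true = [1.0, -2.0]
b = matvec(A, x_true)
x = pcg_normal_equations(A, b)
```

In the patented workflow this solve would run once per frequency on complex-valued spectral data, followed by an inverse transform back to the space-time domain; those steps are omitted here.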

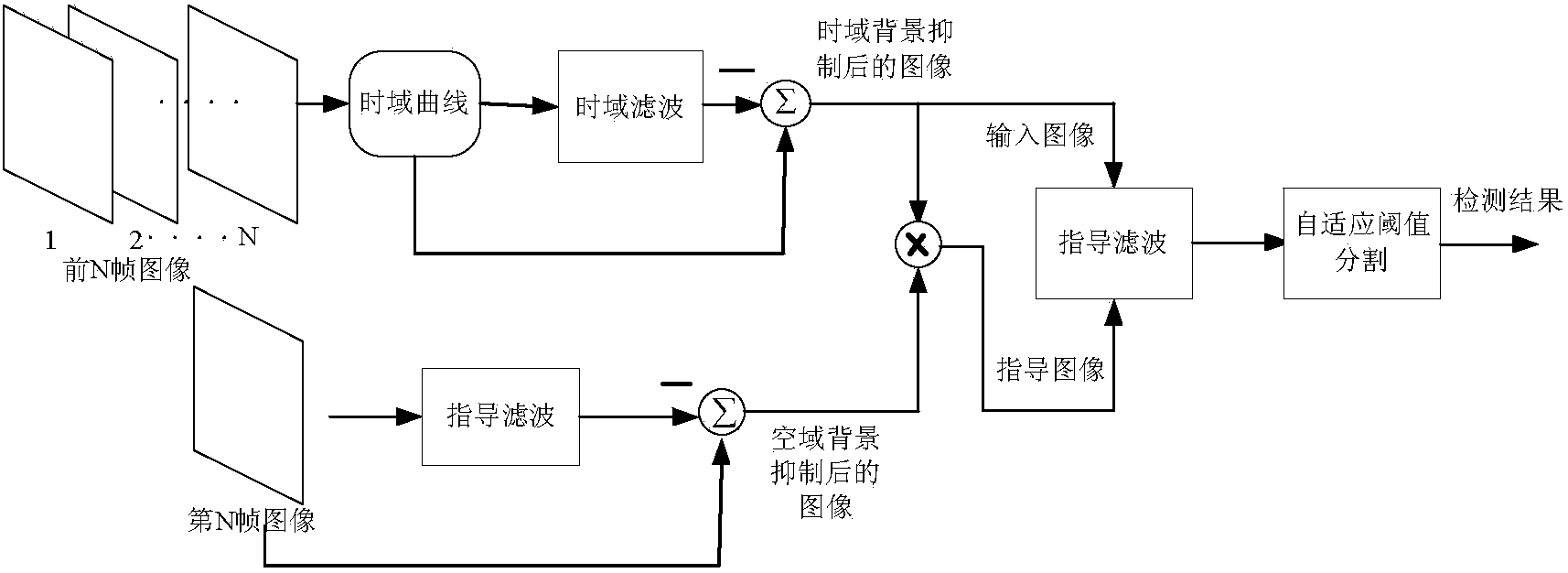

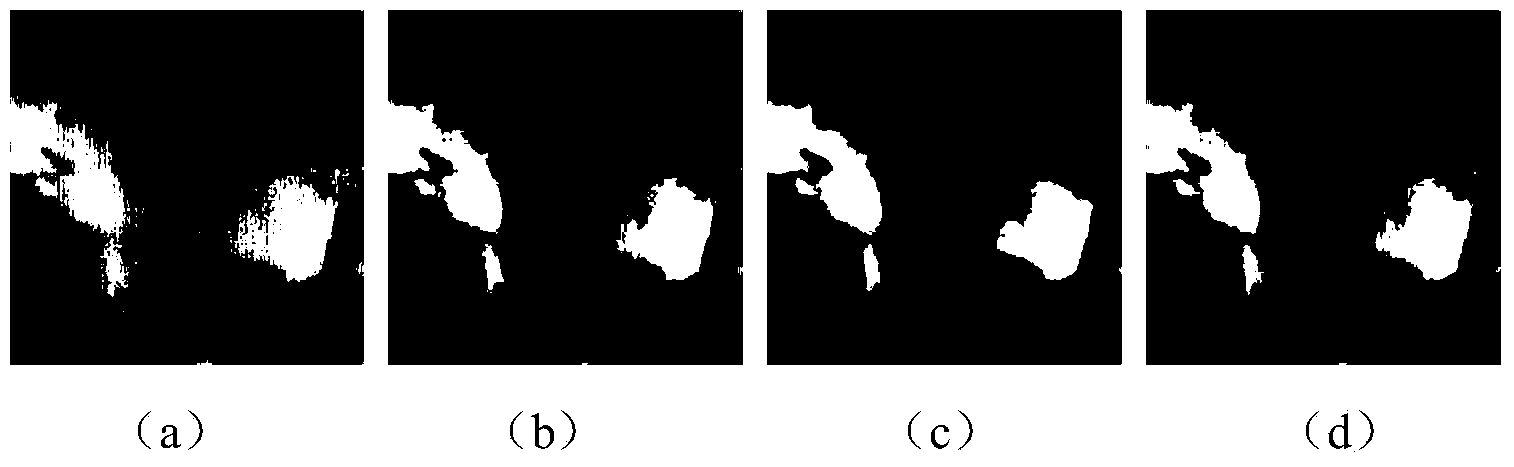

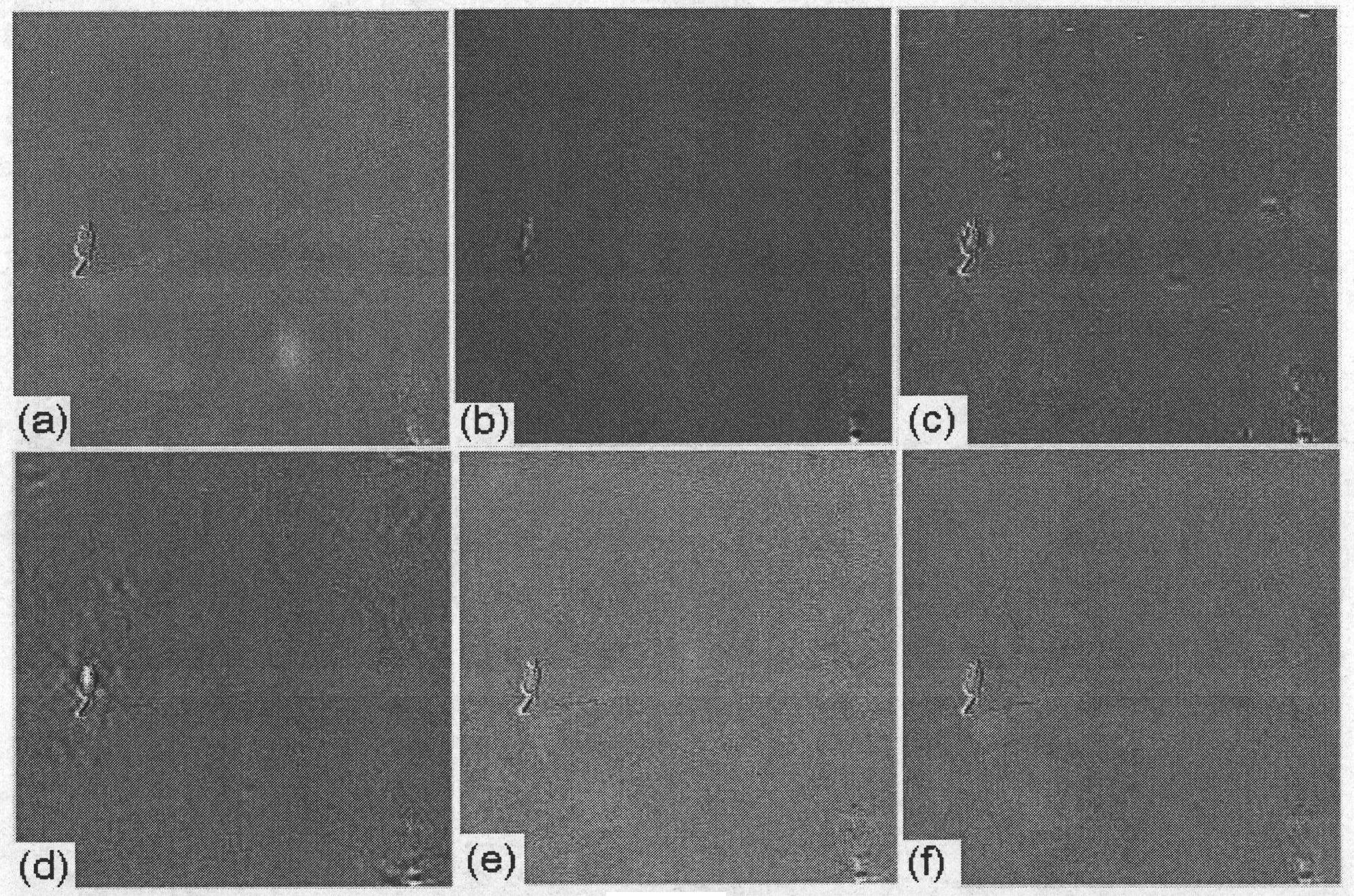

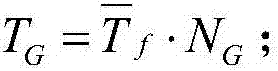

Infrared weak and small target detection method based on time-space domain background suppression

Active | CN104299229A | Real-time detection | Reduce false alarm rate | Image enhancement | Image analysis | Time domain | Space time domain

The invention belongs to the field of infrared image processing and relates to an infrared weak and small target detection method based on time-space domain background suppression. The method detects moving weak and small infrared targets against a complicated background in four steps: first, stable background clutter in the space domain is suppressed through guided filtering; second, slowly changing backgrounds in the time domain are suppressed with a gradient-weighted filtering method that uses target movement information in the infrared image sequence; third, the time domain and space domain background suppression results are fused to obtain a background-suppressed weak and small target image; finally, the image is segmented with an adaptive threshold, and the weak and small target is detected. During detection the method uses both the spatial grey-level information of the target and its time domain movement information, so background clutter is suppressed in both domains and detection performance for moving weak and small targets in a complex background is greatly improved.

Owner:SHANGHAI RONGJUN TECH
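The four-step pipeline of the infrared detection abstract above (spatial suppression, temporal suppression, fusion, adaptive threshold) can be sketched as follows. This is a simplified illustration under stated assumptions: a 3x3 mean subtraction stands in for guided filtering, a plain frame difference stands in for the gradient-weighted temporal filter, fusion is a pixel-wise product, and the threshold is mean plus `k` standard deviations. All function names are hypothetical.

```python
from statistics import mean, pstdev

def spatial_residual(frame):
    """Pixel minus its 3x3 neighbourhood mean (stand-in for guided filtering)."""
    h, w = len(frame), len(frame[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            nb = [frame[j][i]
                  for j in range(max(0, y - 1), min(h, y + 2))
                  for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = max(frame[y][x] - mean(nb), 0.0)
    return out

def temporal_residual(prev, cur):
    """Positive frame difference (stand-in for gradient-weighted temporal filtering)."""
    return [[max(c - p, 0.0) for p, c in zip(rp, rc)] for rp, rc in zip(prev, cur)]

def detect(prev, cur, k=3.0):
    """Fuse both residuals and keep pixels above an adaptive threshold."""
    s = spatial_residual(cur)
    t = temporal_residual(prev, cur)
    fused = [[a * b for a, b in zip(rs, rt)] for rs, rt in zip(s, t)]
    flat = [v for row in fused for v in row]
    threshold = mean(flat) + k * pstdev(flat)
    return [(y, x) for y, row in enumerate(fused)
            for x, v in enumerate(row) if v > threshold]

# Synthetic 7x7 scene: flat background, one bright pixel appears in the current frame.
prev = [[10.0] * 7 for _ in range(7)]
cur = [row[:] for row in prev]
cur[3][4] = 60.0
targets = detect(prev, cur)
```

A pixel survives only if it stands out from its neighbours and also changed between frames, which is the reason for fusing the two suppression results.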

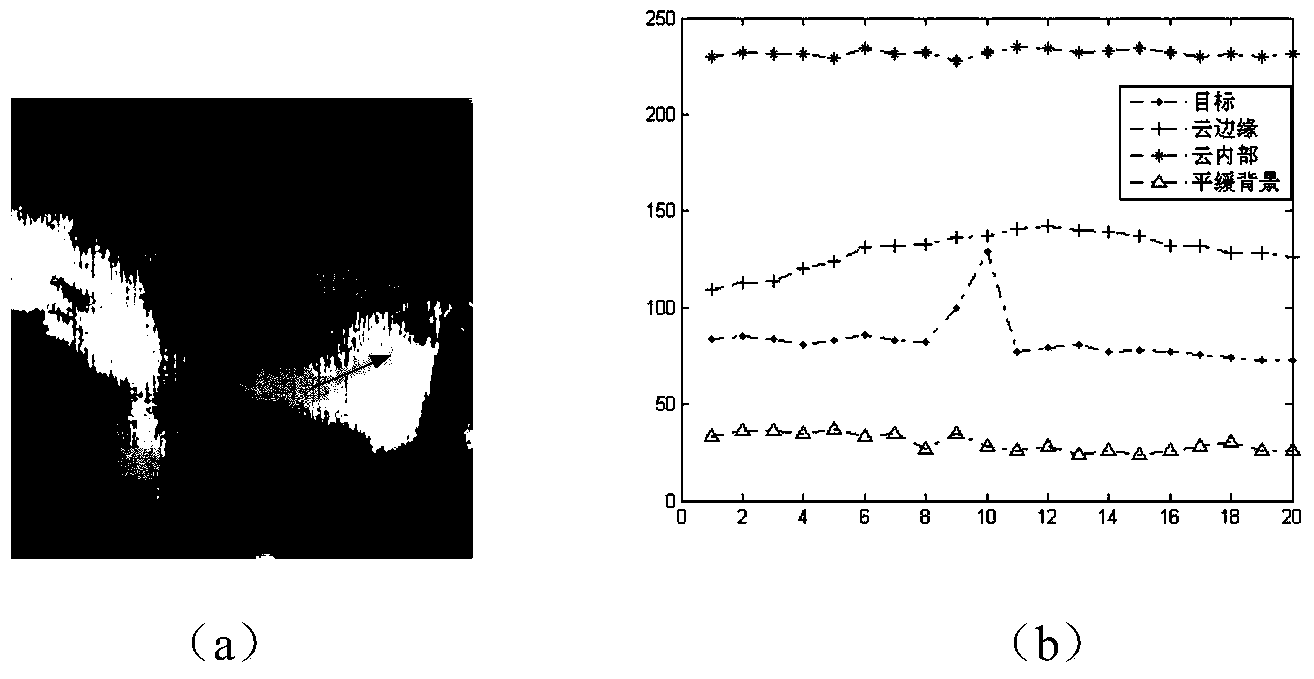

Urban road traffic condition prediction method based on spatial-temporal data

The invention discloses an urban road traffic condition prediction method based on spatial-temporal data. The method comprises the following steps: calculating the parameters of a spatial-temporal correlation model using historical traffic data; abstracting an urban road network in the form of undirected graph; calculating the weight of the undirected graph using historical data; building a time domain correlation model; building a spatial-temporal correlation model; and predicting the traffic condition of a road section through use of real-time traffic data and based on a time-space domain model. A more accurate urban road traffic condition prediction method is provided.

Owner:HANGZHOU NORMAL UNIVERSITY +1

Method and system for characterizing cardiovascular systems from single channel data

Active | US20150216426A1 | Cumbersome to execute | Broaden application | Medical simulation | Electrocardiography | Disease | Cardiac defects

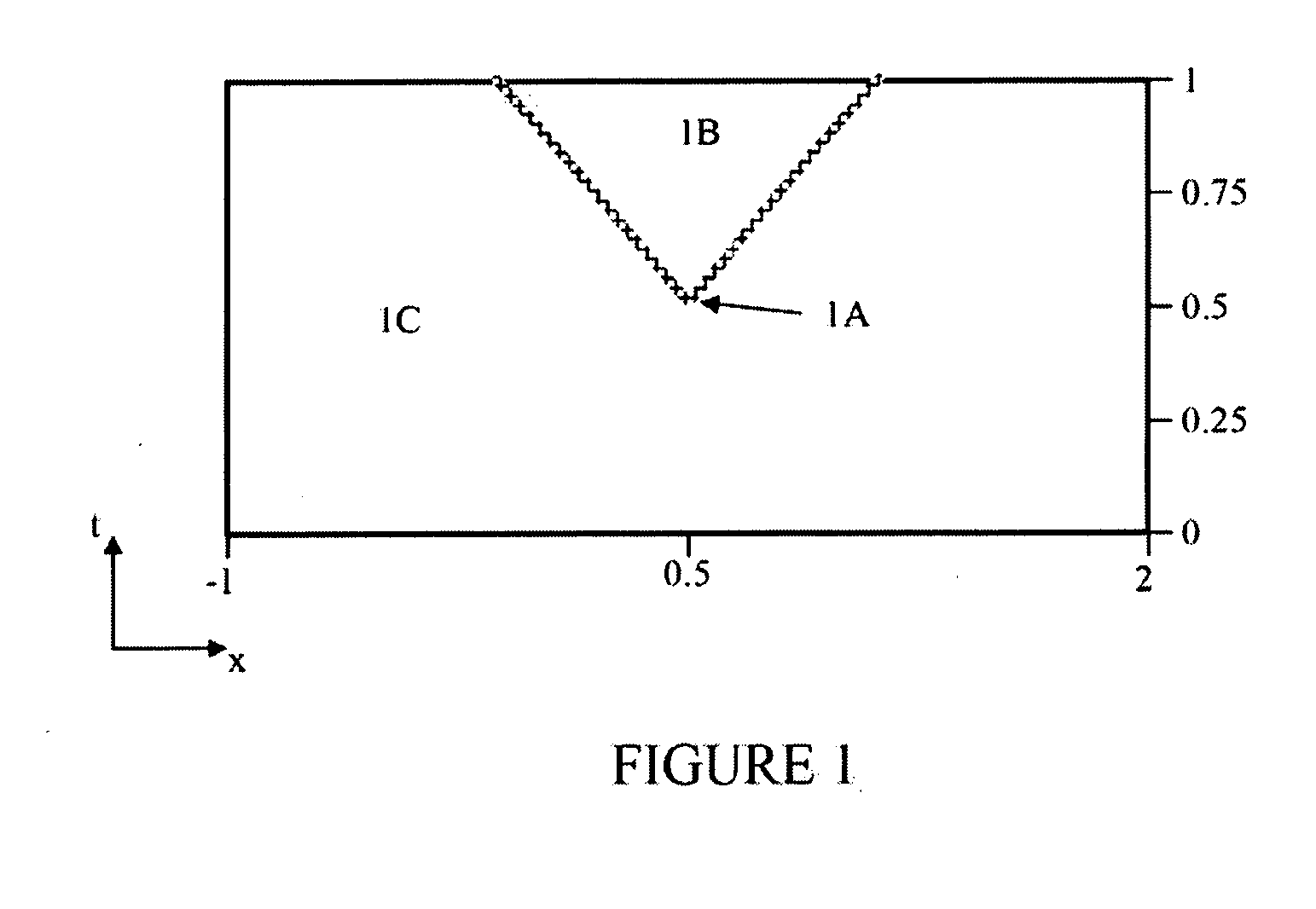

Methods are disclosed to identify and risk stratify disease states, cardiac structural defects, functional cardiac deficiencies induced by teratogens and other toxic agents, pathological substrates, conduction delays and defects, and ejection fraction using single channel biological data obtained from the subject. A modified Matching Pursuit (MP) algorithm may be used to find a noiseless model of the data that is sparse and does not assume periodicity of the signal. After the model is derived, various metrics and subspaces are extracted to characterize the cardiac system. In another method, the space-time domain is divided into a number of regions (the number is largely determined by the signal length), and the density of the signal is computed in each region and input to a learning algorithm to associate the densities with the desired cardiac dysfunction indicator target.

Owner:ANALYTICS FOR LIFE

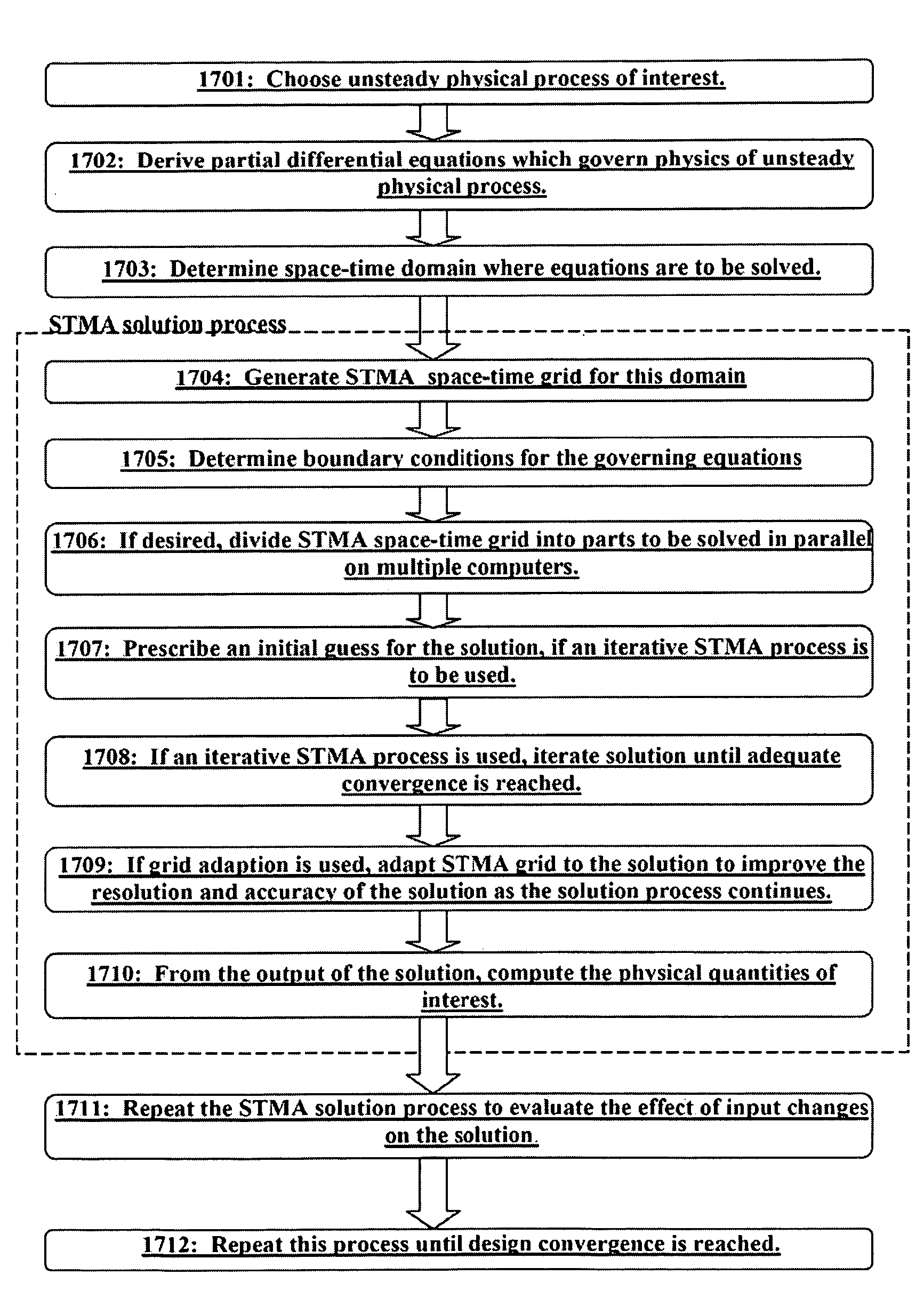

Method and system for the efficient calculation of unsteady processes on arbitrary space-time domains

Active | US7359841B1 | Well formed | Analogue computers for chemical processes | Computation using non-denominational number representation | Time domain | Space time domain

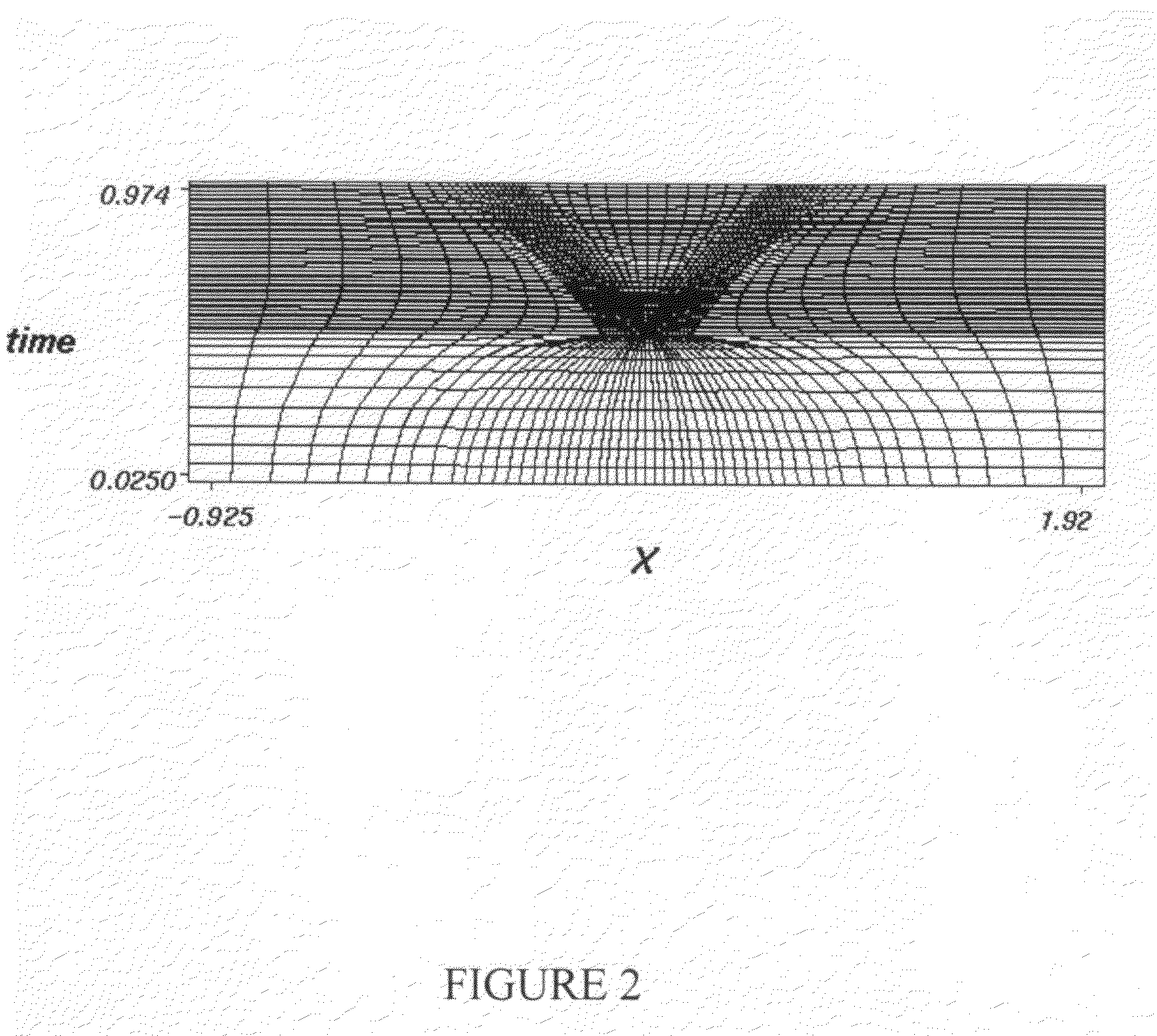

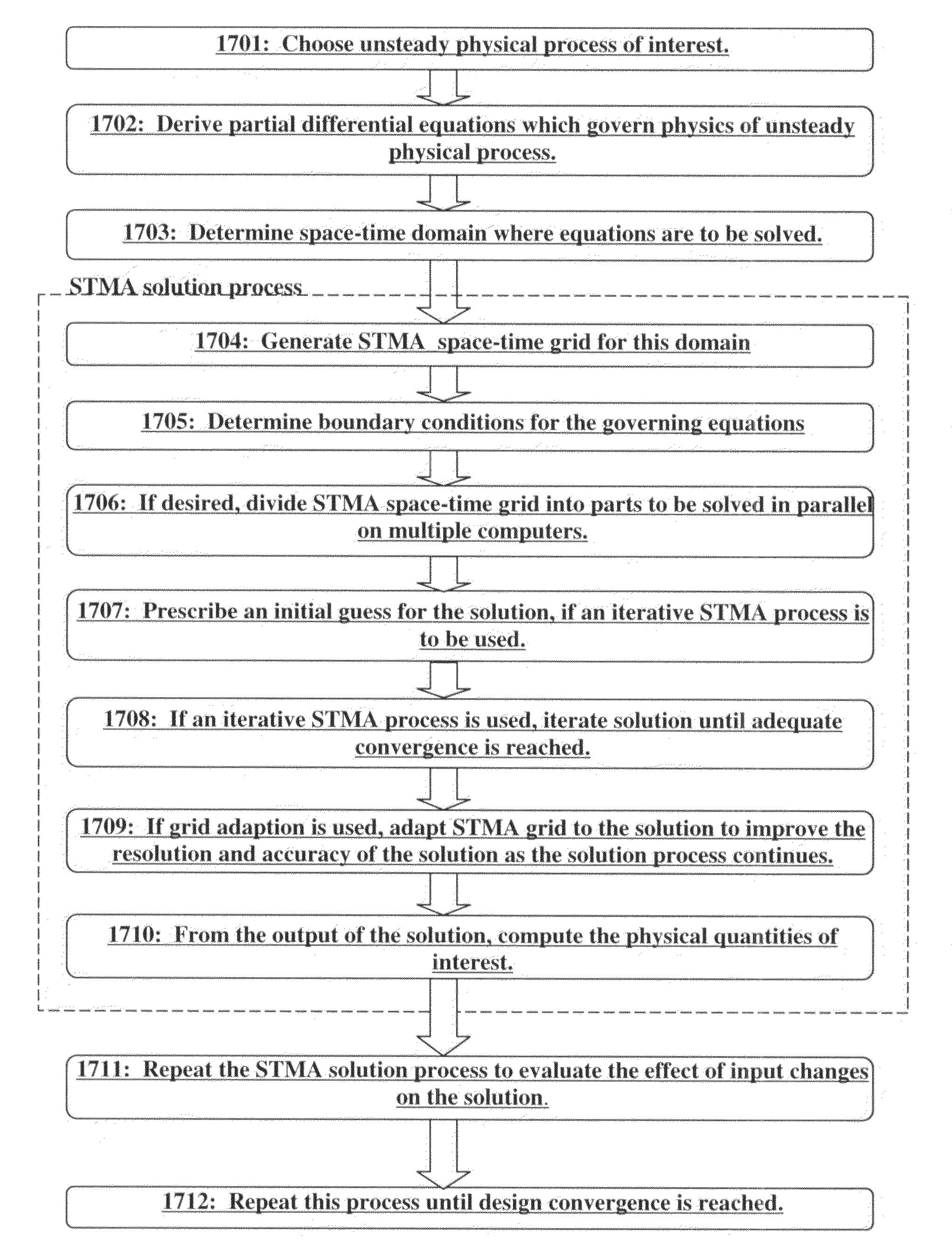

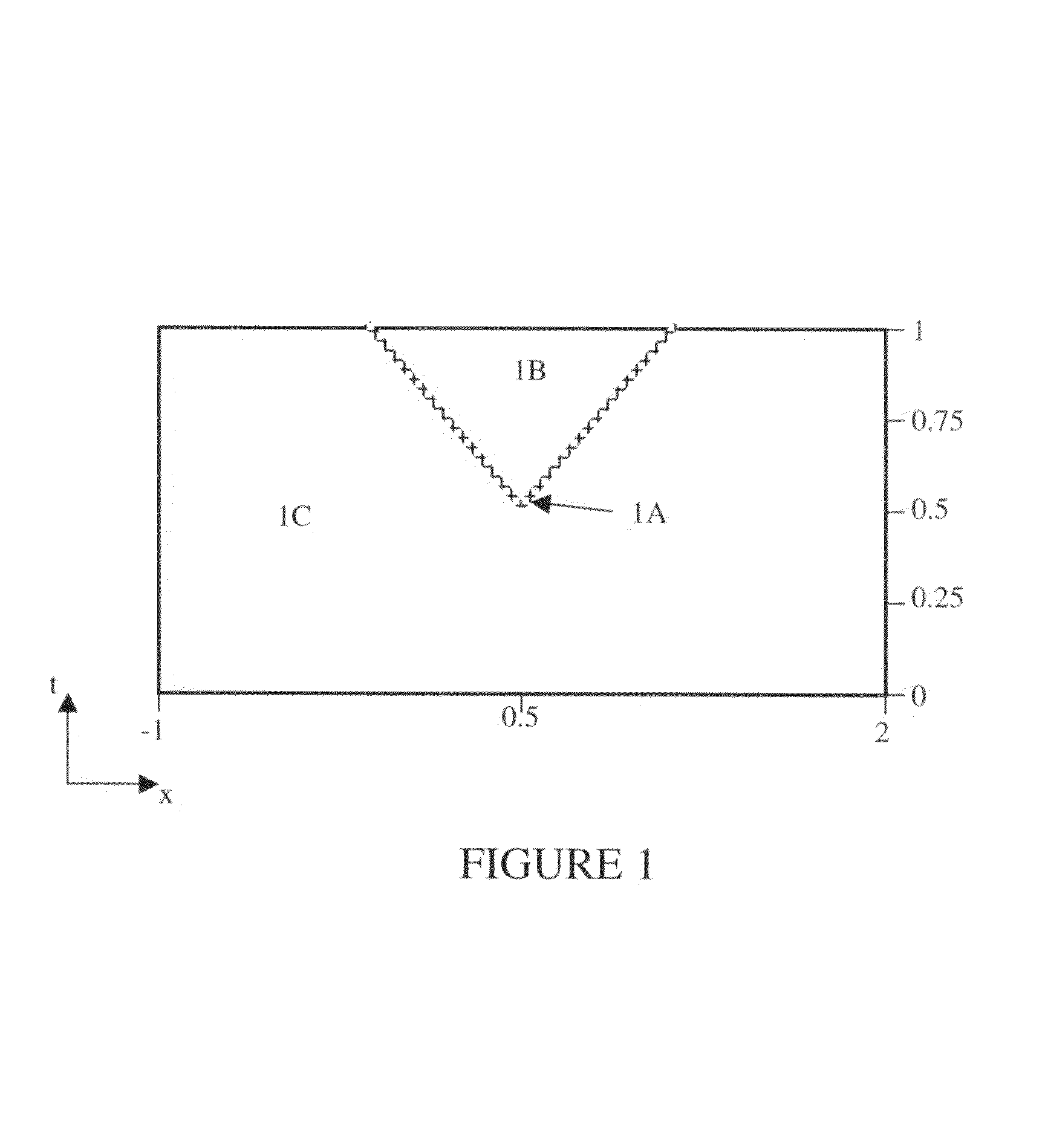

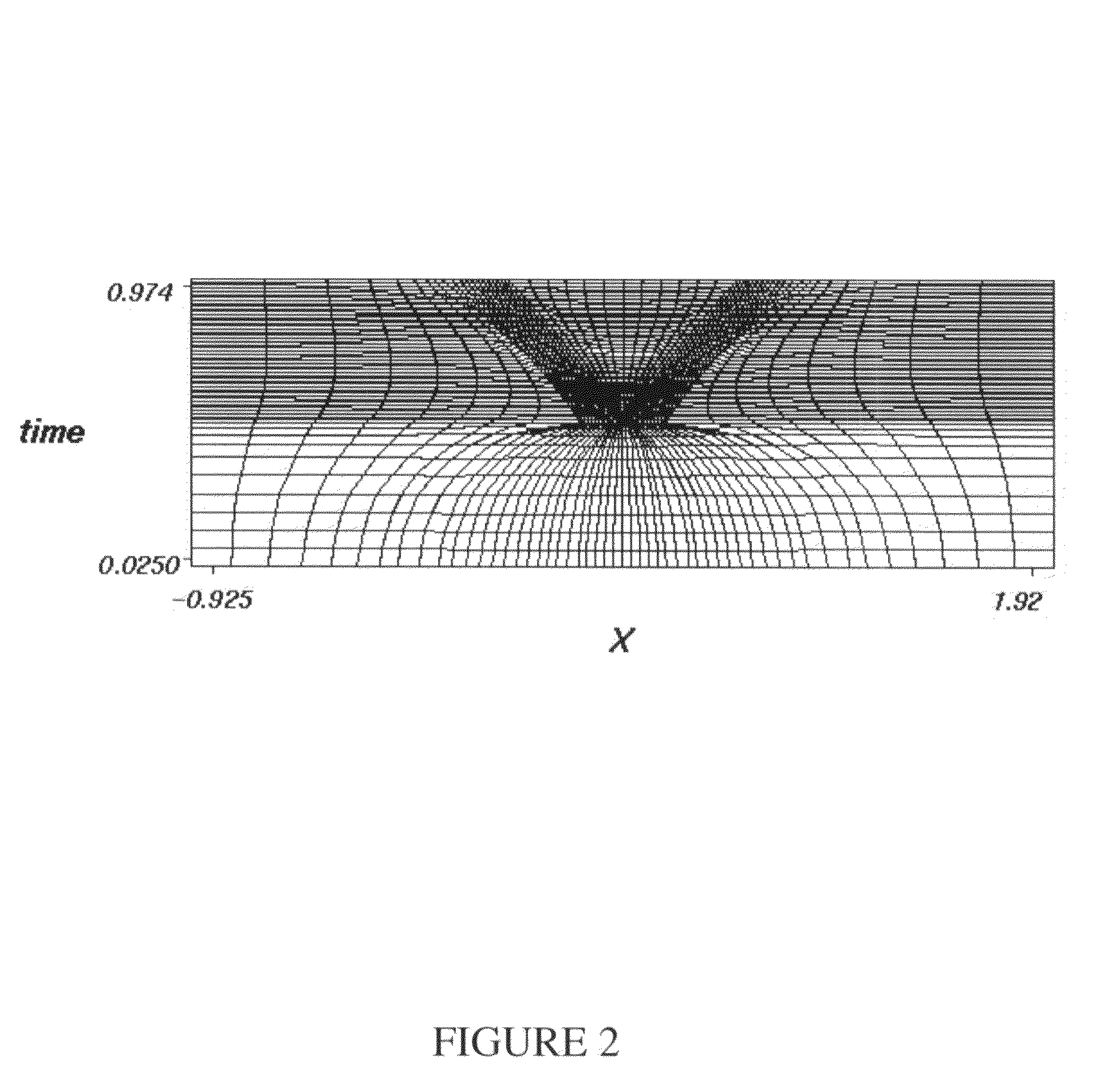

The Space-Time Mapping Analysis (STMA) method and system provides an engineering method and/or system for modeling and/or analyzing and/or designing and/or building and/or operating complex physical processes, components, devices, and phenomena. STMA can be used in a way for modifying and/or improving the design of many different products, components, processes, and devices, for example. Any physical system, whether existing or proposed, which exhibits, for example, unsteady flow phenomena, might be modeled by the STMA. Thus, STMA can be implemented as a part of an engineering system for design improvements and/or modifications and/or evaluations. The STMA system and/or method uses a space-time mapping technique wherein the space and time directions are treated in an equivalent way, such that, rather than solving a three-dimensional unsteady problem by sweeping in the time direction from an initial point in time to a final point in time, the problem is solved as a four-dimensional problem in space-time.

Owner:HIXON TECH

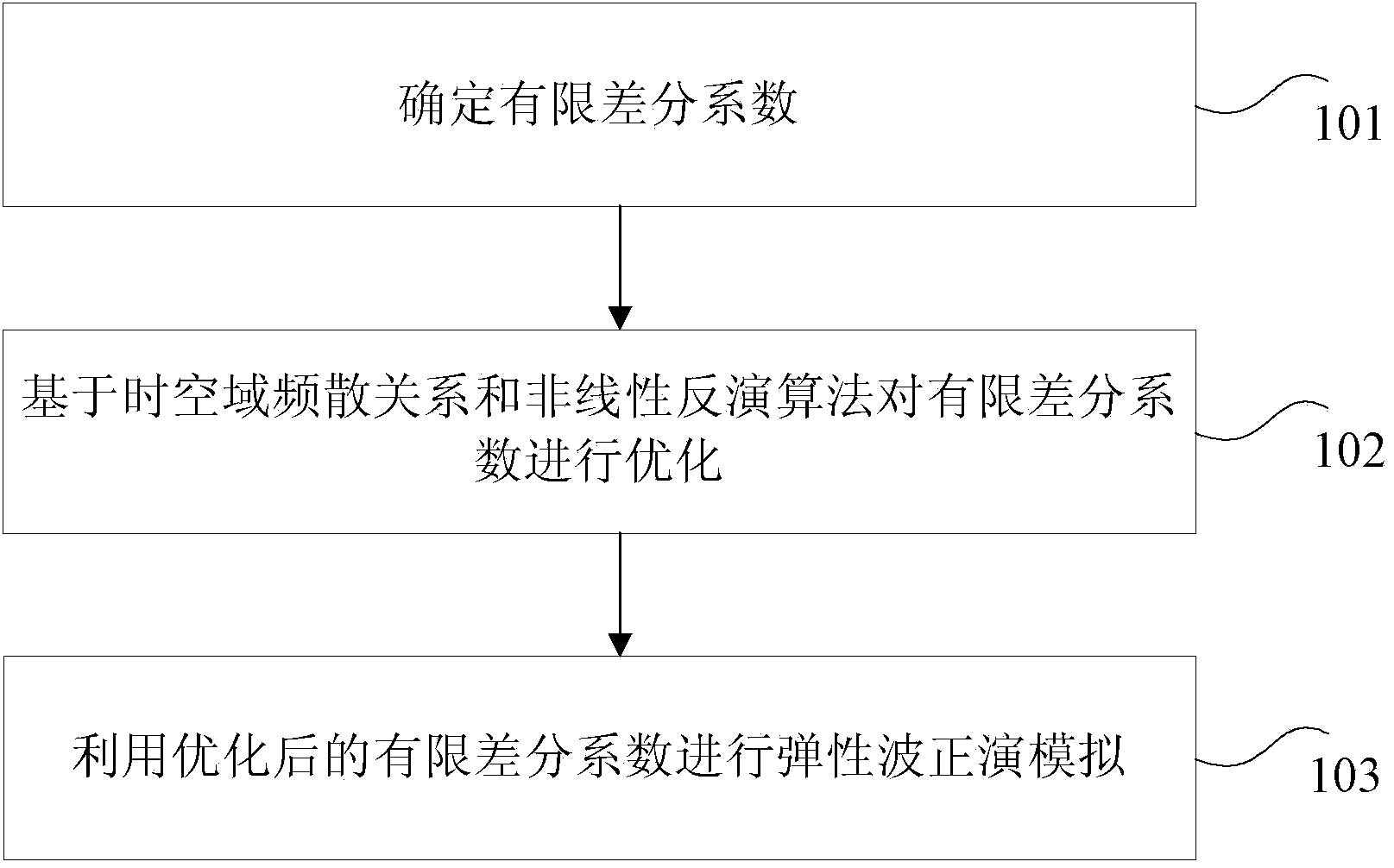

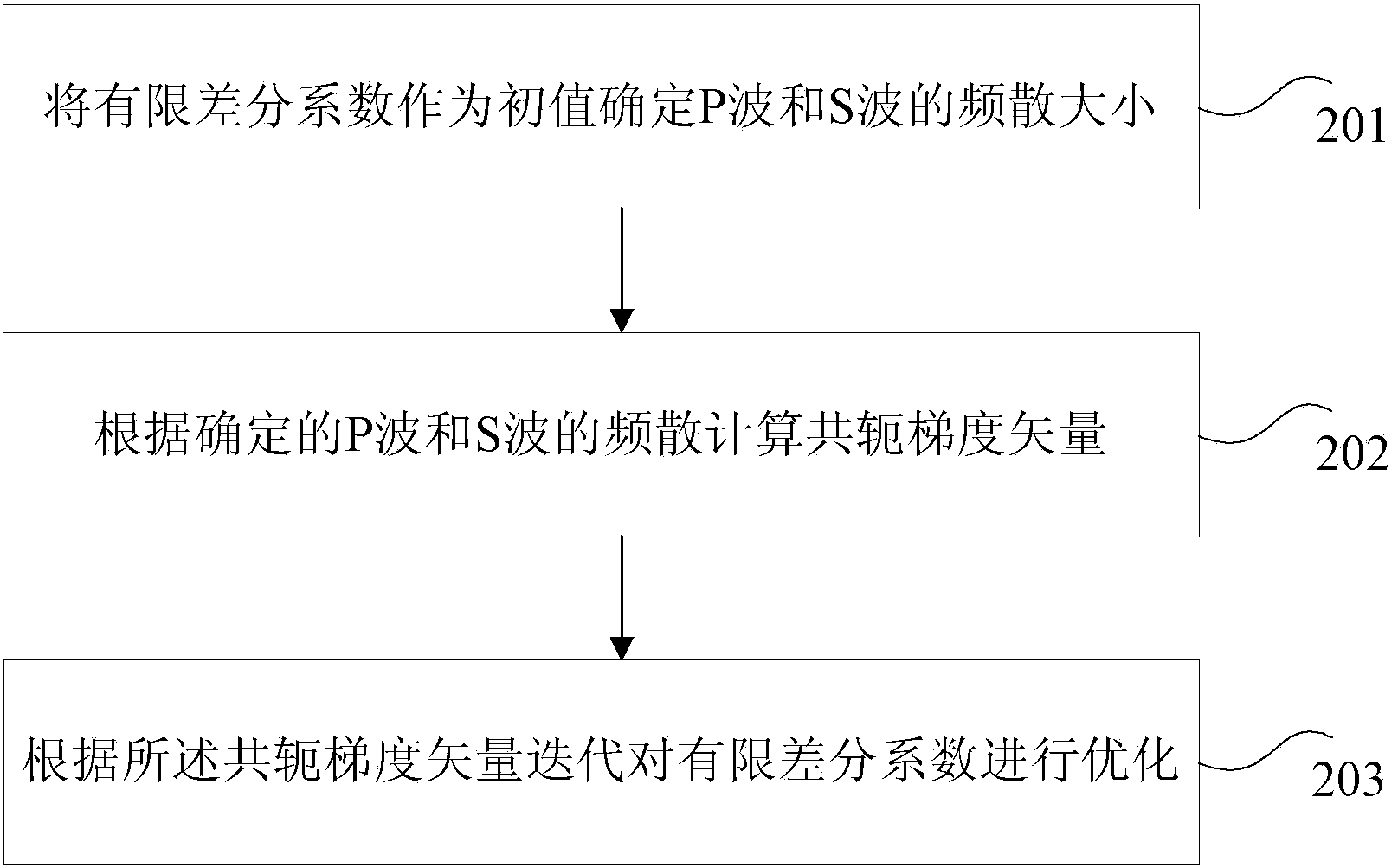

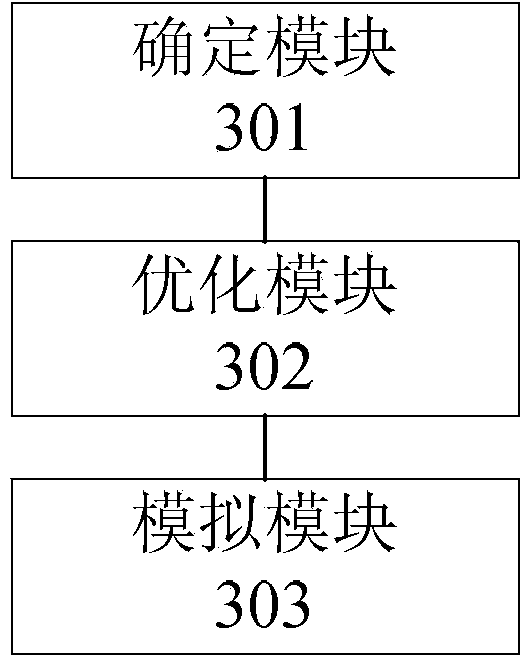

Nonlinear optimization based time-space domain staggered grid finite difference method and device

Active | CN103630933A | Low dispersion | Improve simulation accuracy | Seismic signal processing | Space time domain | Nonlinear inversion

The invention provides a nonlinear optimization based time-space domain staggered grid finite difference method and device. The method includes: determining finite difference coefficients; optimizing the finite difference coefficients on the basis of the time-space domain dispersion relation and a nonlinear inversion algorithm; and using the optimized finite difference coefficients to perform elastic wave forward modeling. The method and device address the high middle- and high-frequency dispersion and low simulation precision that arise when the finite difference coefficients for elastic wave forward modeling are obtained from Taylor series expansion and the space domain dispersion relation; middle- and high-frequency dispersion is reduced and simulation precision is improved.

Owner:BC P INC CHINA NAT PETROLEUM CORP +1
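For context on the finite difference abstract above, the sketch below computes the conventional Taylor-series staggered-grid coefficients, that is, the baseline the patent sets out to improve, not its optimized coefficients. It assumes the standard first-derivative stencil df/dx ≈ (1/h) Σ c_m [f(x + (2m-1)h/2) - f(x - (2m-1)h/2)] with m = 1..M; the function name is hypothetical.

```python
from fractions import Fraction

def staggered_taylor_coefficients(M):
    """Taylor-series staggered-grid coefficients c_1..c_M for a first derivative."""
    coeffs = []
    for m in range(1, M + 1):
        prod = Fraction(1)
        for n in range(1, M + 1):
            if n != m:
                prod *= Fraction((2 * n - 1) ** 2,
                                 abs((2 * n - 1) ** 2 - (2 * m - 1) ** 2))
        coeffs.append(Fraction((-1) ** (m + 1), 2 * m - 1) * prod)
    return coeffs

c4 = staggered_taylor_coefficients(2)  # fourth-order stencil
c6 = staggered_taylor_coefficients(3)  # sixth-order stencil
```

A nonlinear optimization such as the one the abstract describes would start from coefficients like these and adjust them against a time-space domain dispersion relation; that step is not reproduced here.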

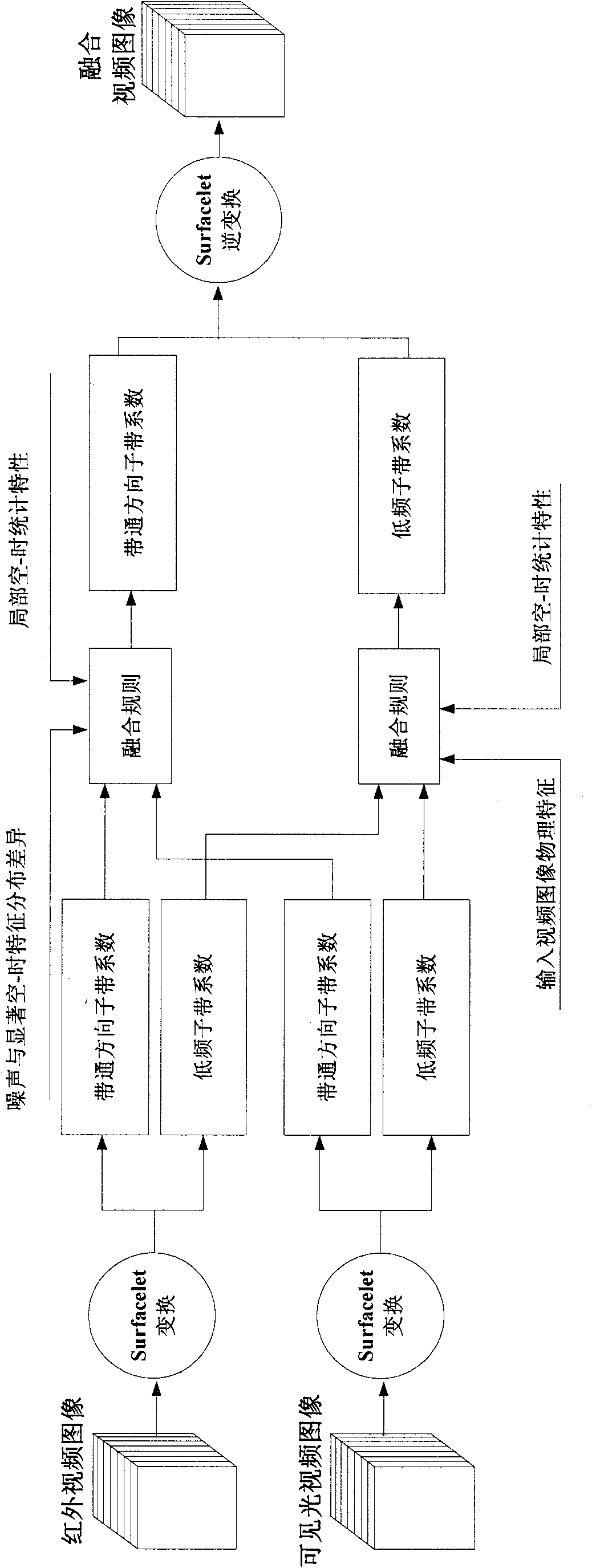

Infrared and visible light video image fusion method based on Surfacelet conversion

Inactive | CN101873440A | Improve consistency | Improve stability | Image enhancement | Television system details | Low noise | Space time domain

The invention discloses an infrared and visible light video image fusion method based on Surfacelet conversion, which mainly solves the problem of poor time consistency and stability of a fusion video image in the prior art. The method comprises the following steps of: firstly, carrying out multi-scale and multidirectional decomposition on an input video image by adopting Surfacelet conversion to obtain subband coefficients with different frequency domains; then, combining a low frequency subband coefficient with a band-pass direction subband coefficient of the input video image by using a fusion method of combining selection and weighted average based on three-dimensional partial space-time domain energy matching and a fusion method of combining energy and direction vector standard variance based on a three-dimensional partial space-time domain to obtain the low frequency subband coefficient and the band-pass direction subband coefficient of the fusion video image; and finally, carrying out Surfacelet conversion on each subband coefficient obtained by combination to obtain the fusion video image. The invention has the advantages of good fusion effect, high time consistency and stability and low noise sensitivity and can be used for field safety monitoring.

Owner:XIDIAN UNIV

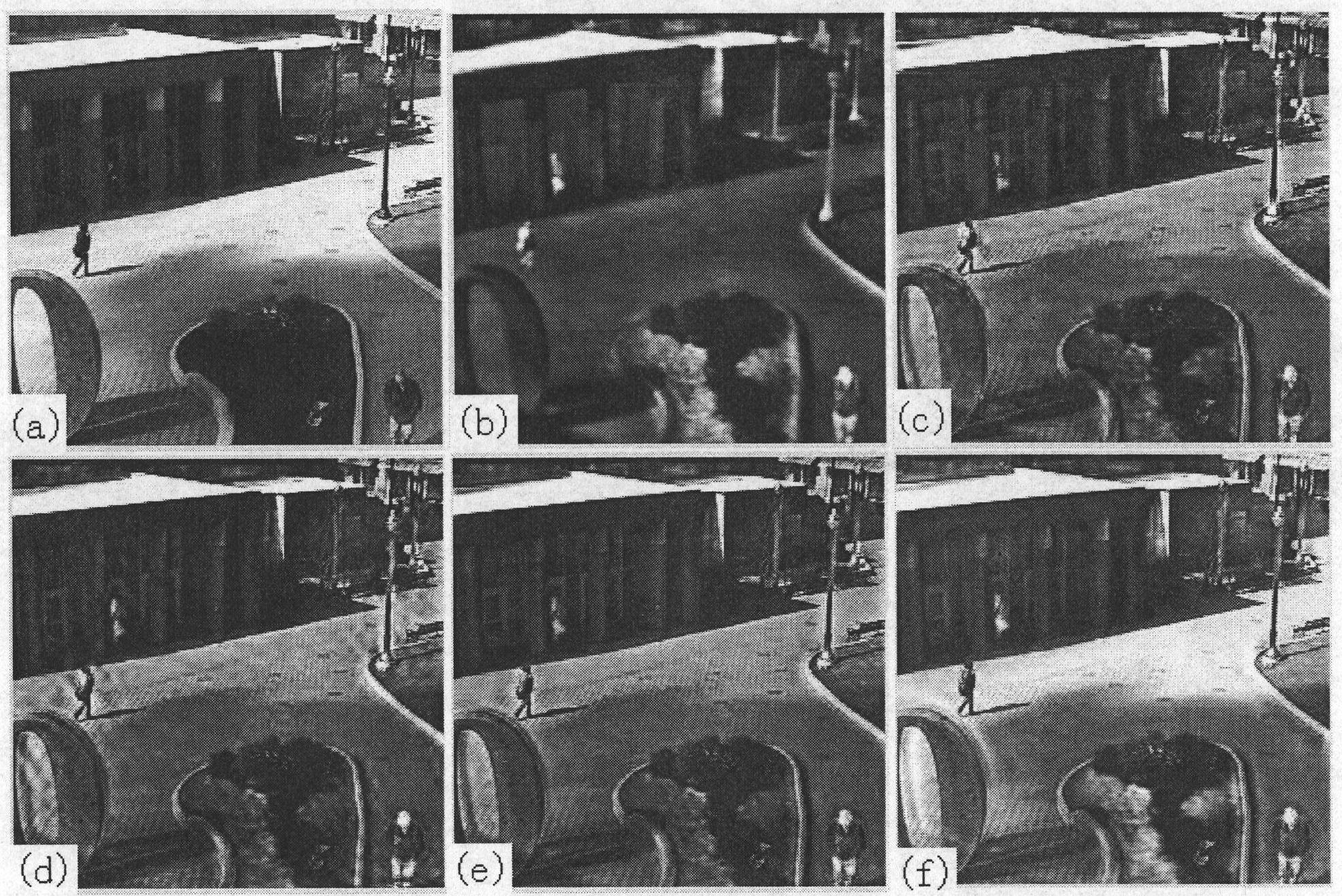

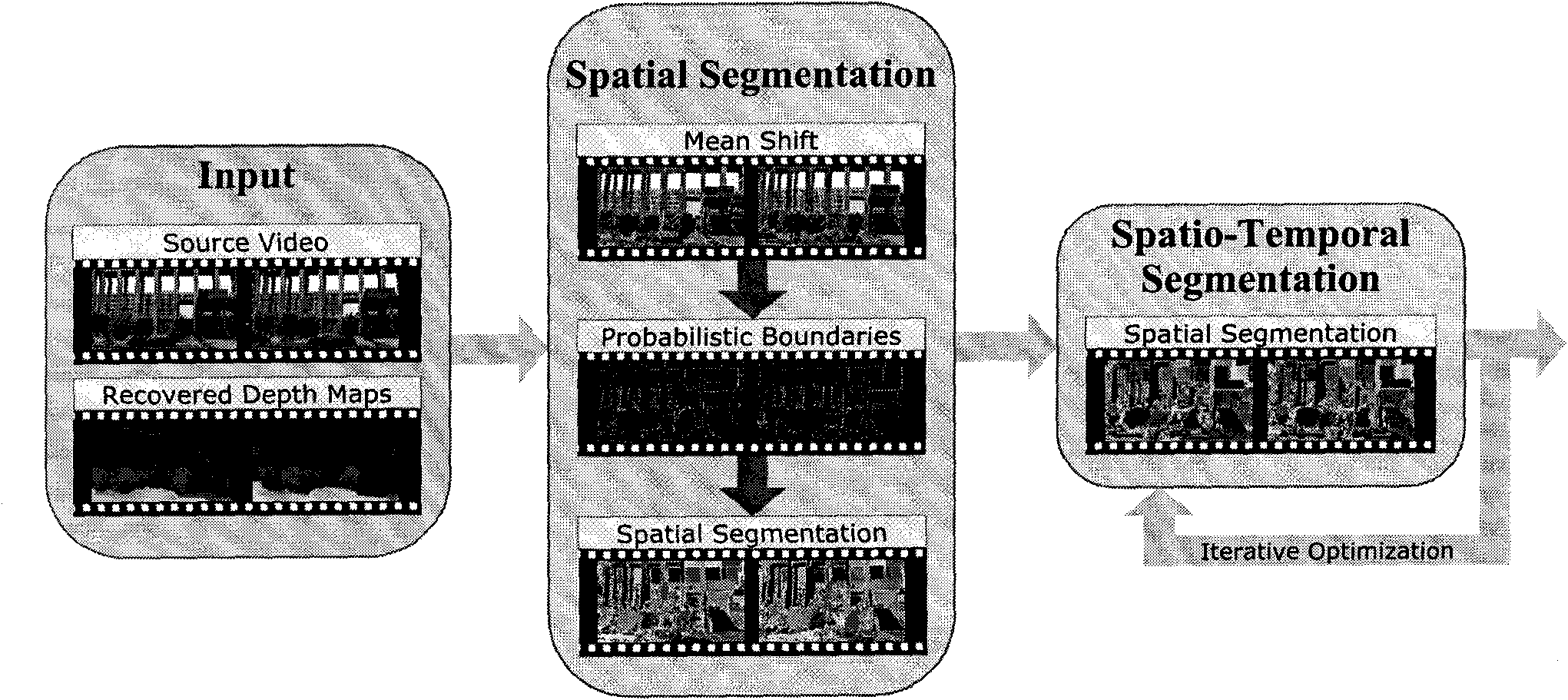

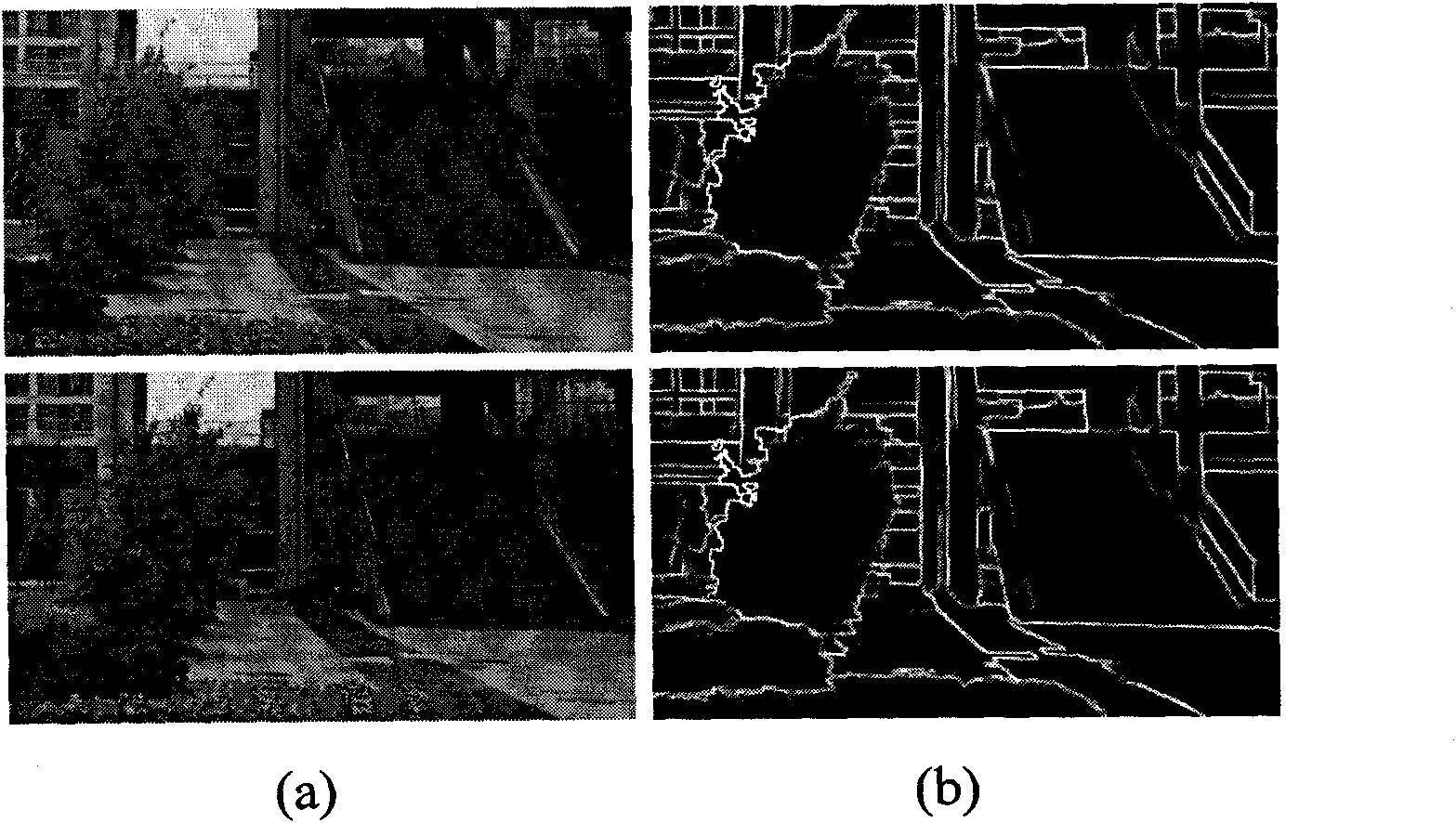

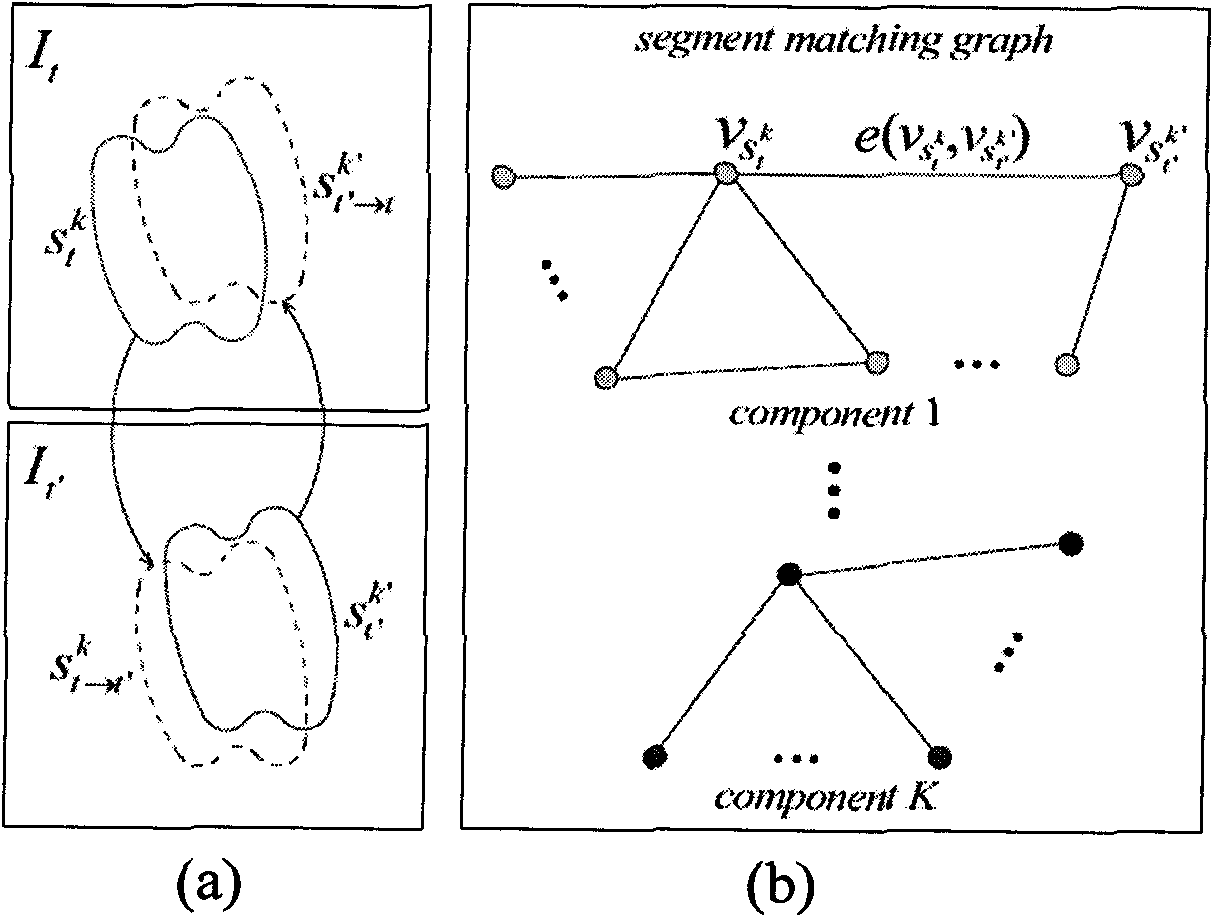

Segmentation method for space-time consistency of video sequence of parameter and depth information of known video camera

Active | CN101789124A | Keep boundaries | Ensure consistency | Television system details | Image analysis | Space time domain | Mean shift segmentation

The invention discloses a segmentation method for the space-time consistency of a video sequence of the parameter and depth information of a known video camera, comprising the following steps of: (1) segmenting videos by using a Mean-shift method; (2) counting Mean-shift segmentation boundaries according to the parameter and depth information of the video camera, and computing a probability boundary diagram of each frame; (3) segmenting the probability boundary diagram by utilizing a Watershed and an energy optimization method to obtain a more consistent segmentation result; (4) matching and connecting segmentation blocks among different frames to generate segmentation blocks in a space-time domain in regard to initialized segmentation obtained by utilizing the Watershed and the energy optimization method; (5) counting the probability of each pixel belonging to each space-time domain segmentation block by utilizing the parameters and the depth of the video camera, and iteratively optimizing frame by frame by using the energy optimization method to obtain a space-time consistent video segmentation result. The segmentation result can well keep object boundaries and the high space-time consistency of the segmentation blocks among multiple frames of the videos without flashing and skipping.

Owner:ZHEJIANG SENSETIME TECH DEV CO LTD

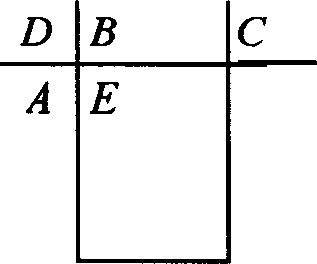

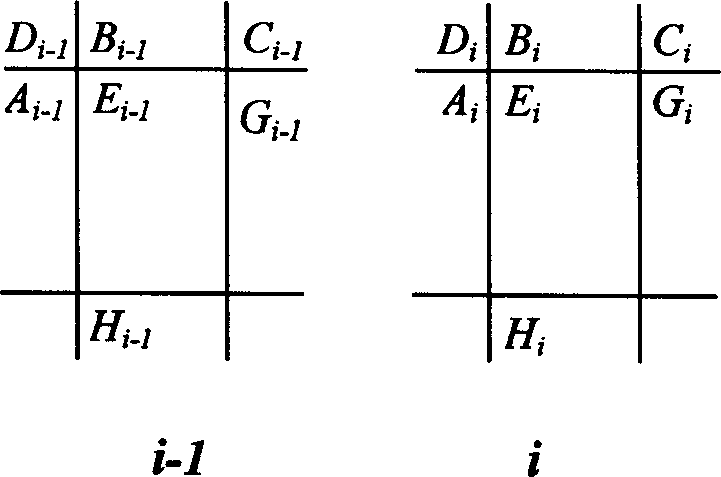

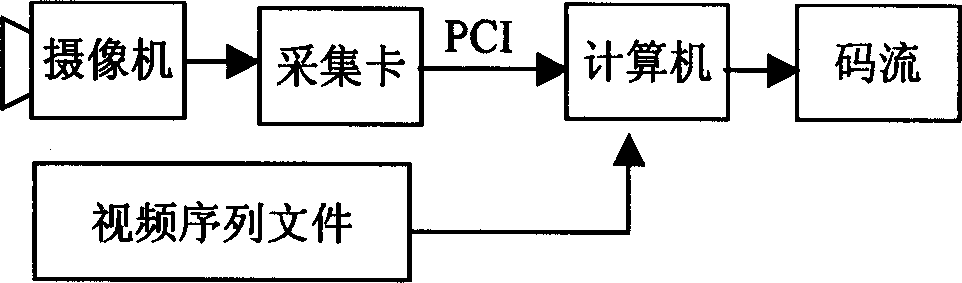

Video encoding method based on prediction time and space domain coherent movement vectors

Inactive | CN1457196A | Improve forecast accuracy | Television system details | Color television details | Digital video | Time domain

The invention puts forward a prediction method that combines the correlation of motion vectors between the time domain and the space domain, together with a method for describing the movement correlation between adjacent macroblocks. The method includes the following steps. A video camera converts the state of a target object into a video signal. An acquisition card converts the video signal into a digital video sequence, which is stored in a flash memory and used as the system input for further compression. The computer runs a motion compensation/DCT hybrid coding subroutine on the original video frames to carry out video compression and create a code stream file. The main point of the invention is the subroutine for predicting motion vectors.

Owner:BEIJING UNIV OF TECH
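The abstract above does not spell out how the temporal and spatial motion vectors are combined. One common way to use both, shown here purely as an assumed illustration, is a component-wise median over the left and top neighbouring macroblocks of the current frame and the co-located macroblock of the previous frame. The candidate set and function names are hypothetical.

```python
from statistics import median

def predict_mv(left, top, colocated):
    """Component-wise median of two spatial and one temporal candidate vector."""
    candidates = [left, top, colocated]
    return (median(mv[0] for mv in candidates),
            median(mv[1] for mv in candidates))

def mv_residual(actual, predicted):
    """Difference that an encoder would write to the bitstream."""
    return (actual[0] - predicted[0], actual[1] - predicted[1])

pred = predict_mv(left=(4, -2), top=(6, 0), colocated=(5, -1))
res = mv_residual((5, 0), pred)
```

A better predictor leaves a smaller residual, so fewer bits are spent on motion vectors.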

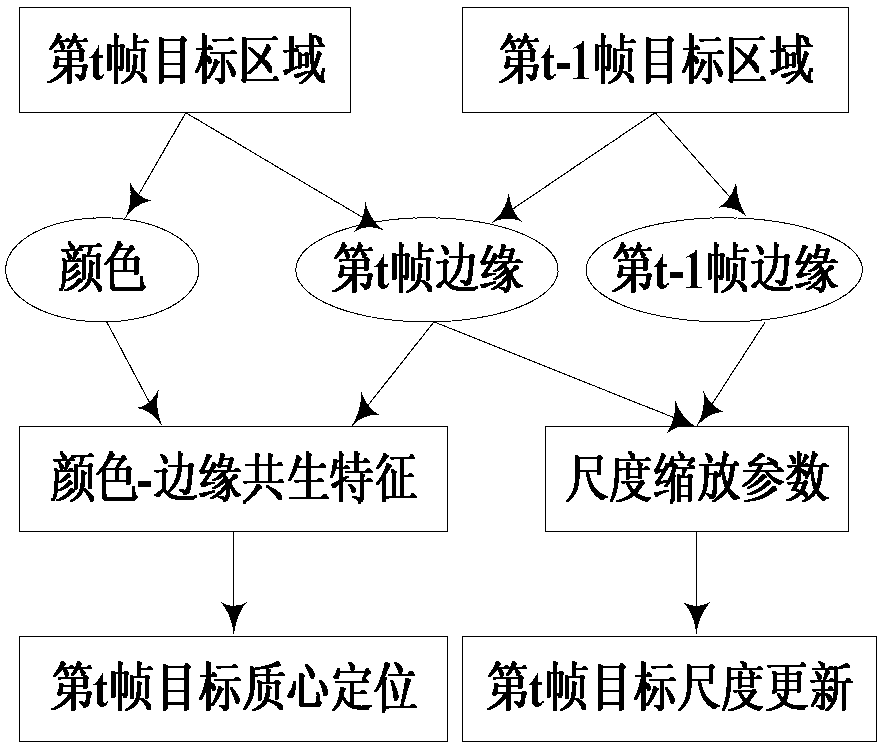

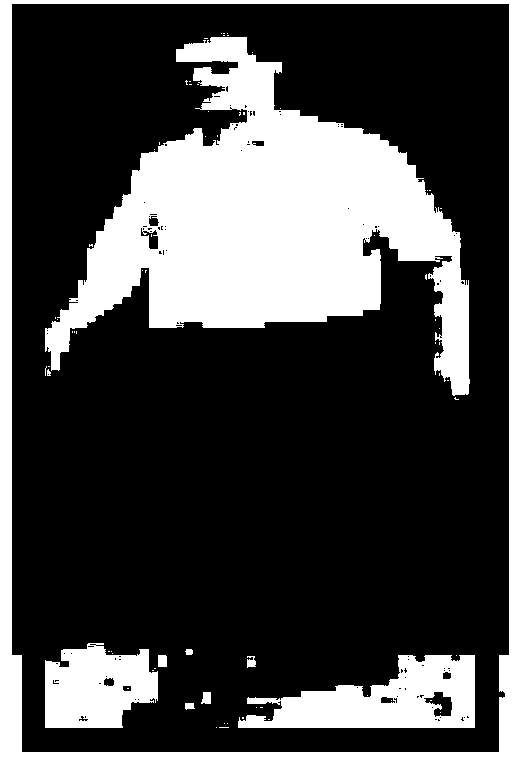

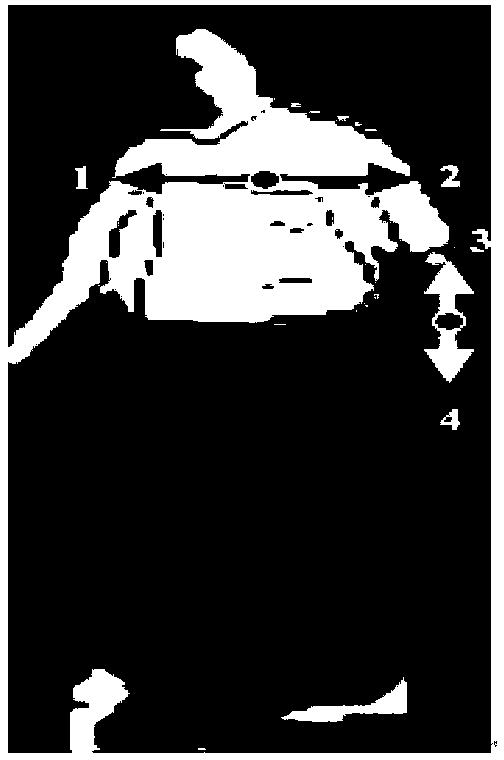

Target tracking method based on correlation of space-time-domain edge and color feature

Active | CN103065331A | Enhanced Color Differences | Easy extraction | Image analysis | Video monitoring | Time domain

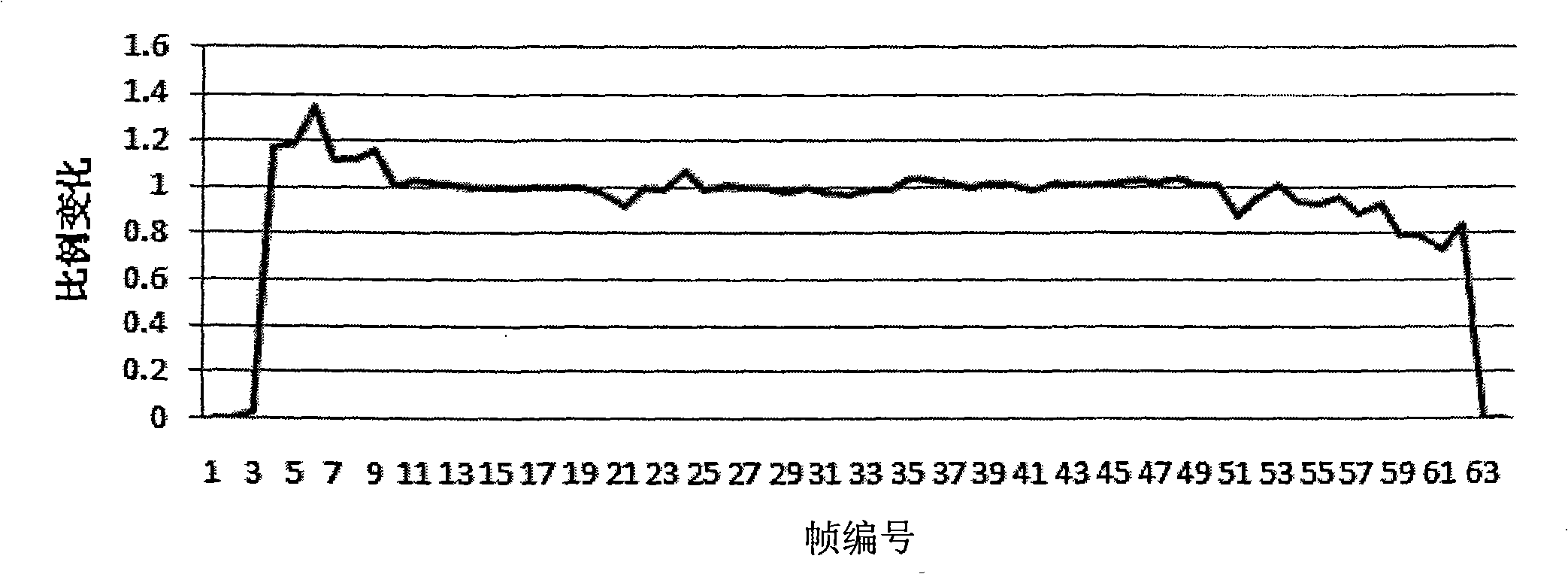

The invention discloses a target tracking method based on correlation of space-time-domain edge and color feature. The method comprises the following steps: (1) selecting a tracked target area; (2) extracting the edge outline of the target and calculating the direction angle of the edge; (3) collecting statistics of edge-color co-occurrence feature pairs along the two orthogonal directions, horizontal and vertical, and building a target edge-color correlation centroid model; (4) selecting the centroids of the edge-color pairs with high confidence and probability-weighting them to obtain the transfer vector of the target centroid in the current frame; (5) computing histograms of target edge distances between adjacent frames and probability-weighting the successfully matched distance change rates between the adjacent frames to obtain a target scale parameter. The method tracks targets in crowded scenes, under occlusion, and when the target size changes, and improves the robustness, accuracy and real-time performance of the tracking. It has wide application prospects in video image processing and can be applied to fields such as intelligent video monitoring, enterprise production automation and intelligent robots.

Owner:南京雷斯克电子信息科技有限公司

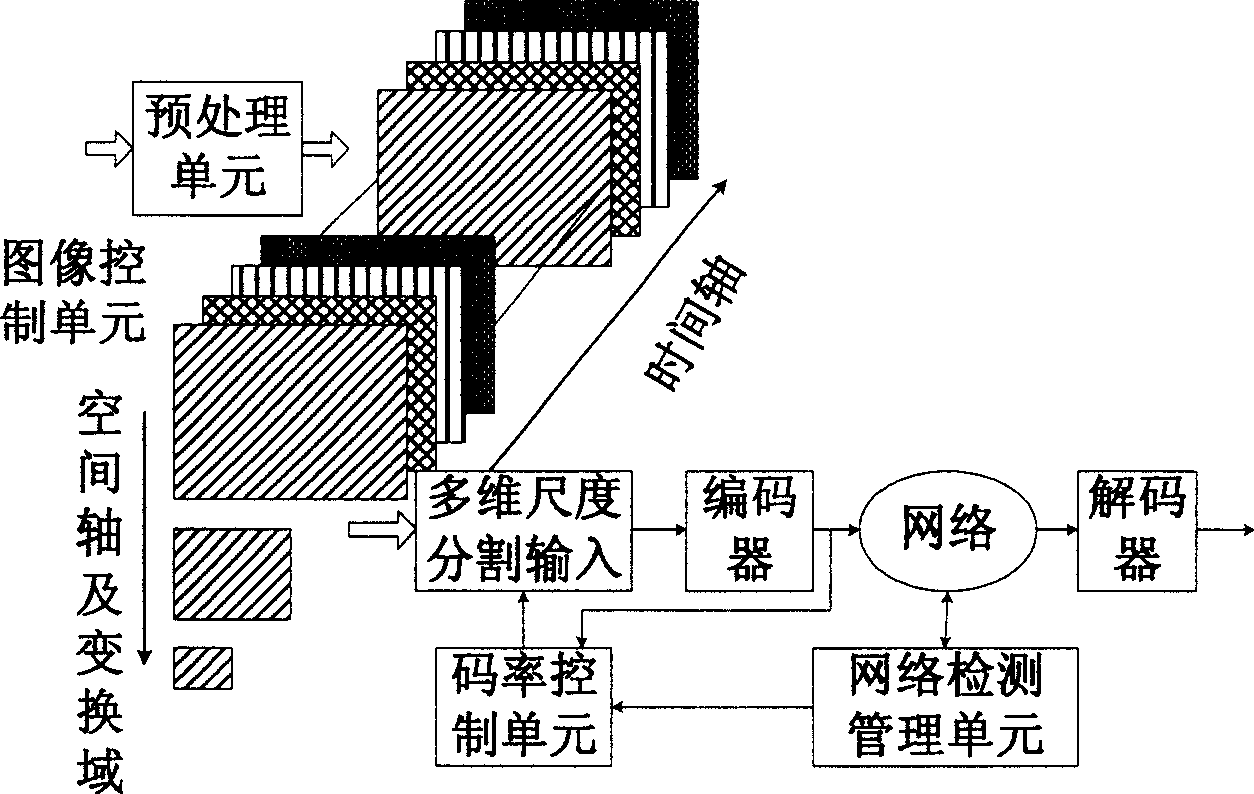

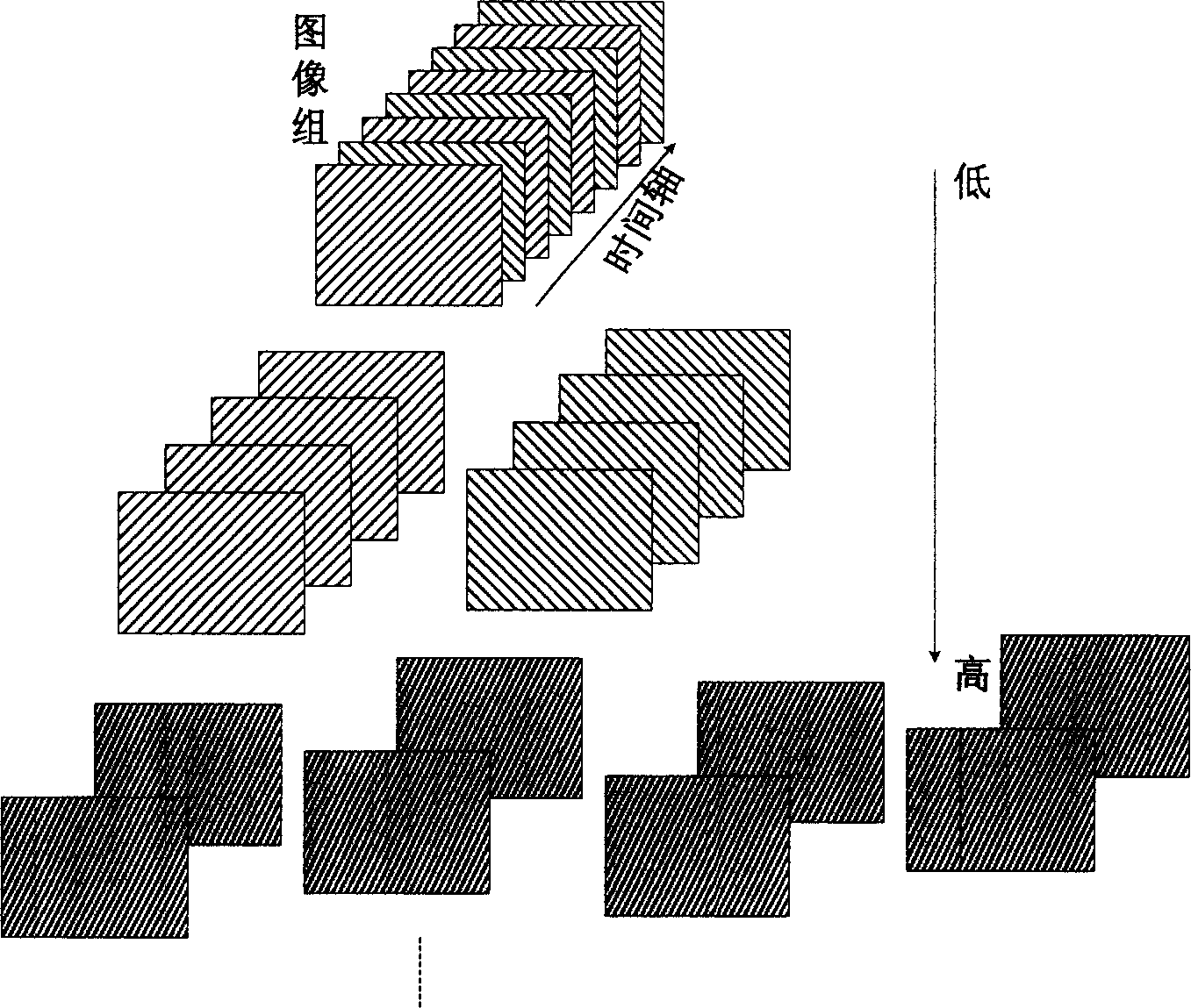

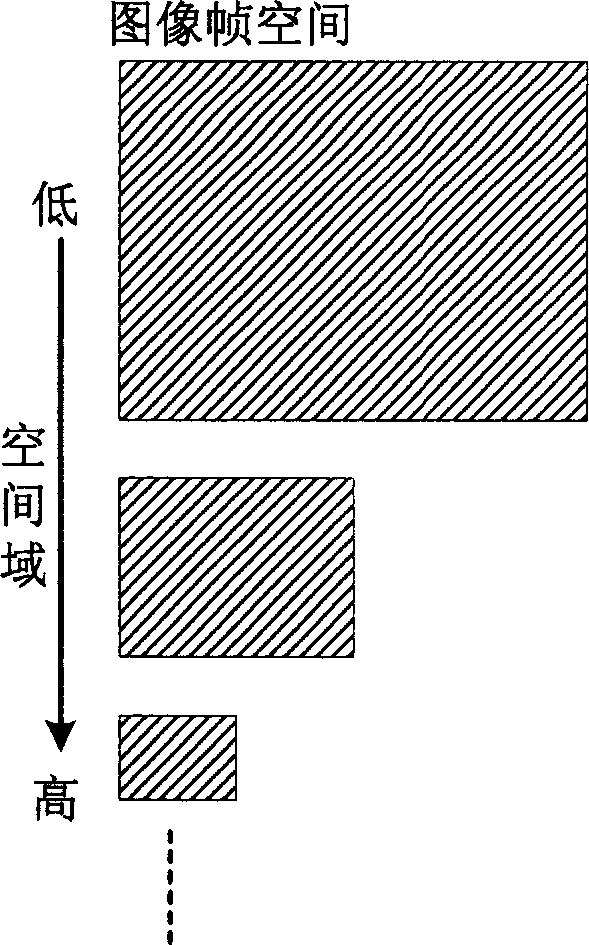

Multi-dimensional scale rate control method of network video coder

Inactive | CN1694533A | Improve applicability | Improve good performance | Pulse modulation television signal transmission | Data stream | The Internet

This invention relates to a multi-dimensional code rate control method for a network video encoder. The image control units of an input video sequence (macroblock strips, image frames or image sets) are divided into set spaces of different dimensions according to the time domain, the space domain, time-domain transformation, space-domain transformation and space-time-domain transformation. A code rate control unit then performs variable bit rate encoding of the divided set spaces, based on the time-varying bandwidth state of the heterogeneous network channels, the occupancy of the buffer, and the resources and processing ability of the multi-access terminals, so that the encoding matches the current video encoder resources, the network transmission code rate, and the processing ability of the receiving end and the decoder. The video decoder reconstructs the related multidimensional images and displays the image sequence based on the syntax ID inserted in the received stream.

Owner:SHANGHAI JIAO TONG UNIV

Method for full-bandwidth deghosting of marine seismic streamer data

Seismic data recorded in a marine streamer are obtained, with the seismic data being representative of characteristics of subsurface earth formations and acquired by deployment of a plurality of seismic receivers overlying an area of the subsurface earth formations to be evaluated, the seismic receivers generating at least one of an electrical and optical signal in response to seismic energy. A complex Laplace frequency parameter is used to transform the seismic data from a space-time domain to a spectral domain. An iterative conjugate gradient scheme, using a physically-based preconditioner, is applied to the transformed seismic data, to provide a least squares solution to a normal set of equations for a deghosting system of equations. The solution is inverse-transformed back to a space-time domain to provide deghosted seismic data, which is useful for imaging the earth's subsurface.

Owner:PGS GEOPHYSICAL AS

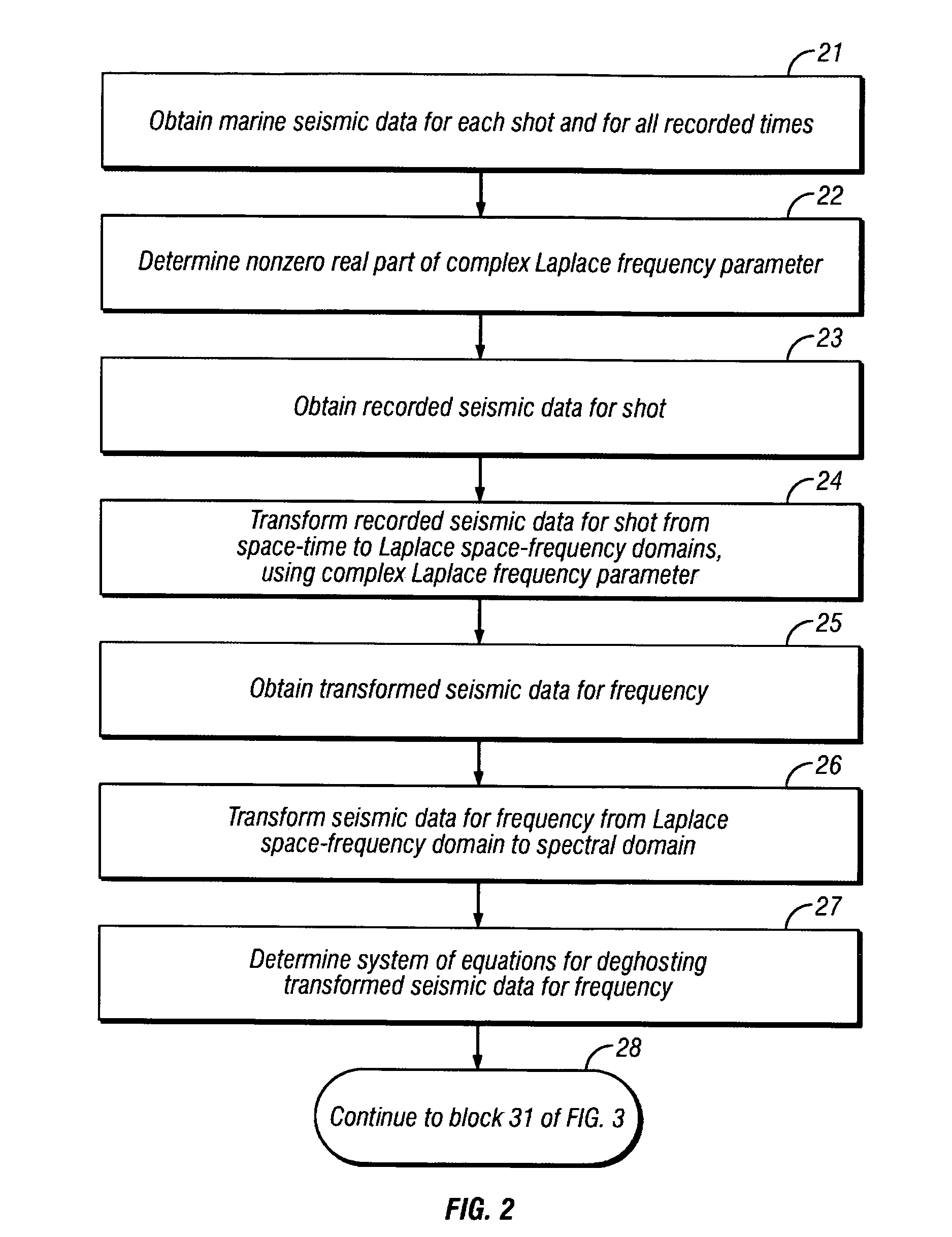

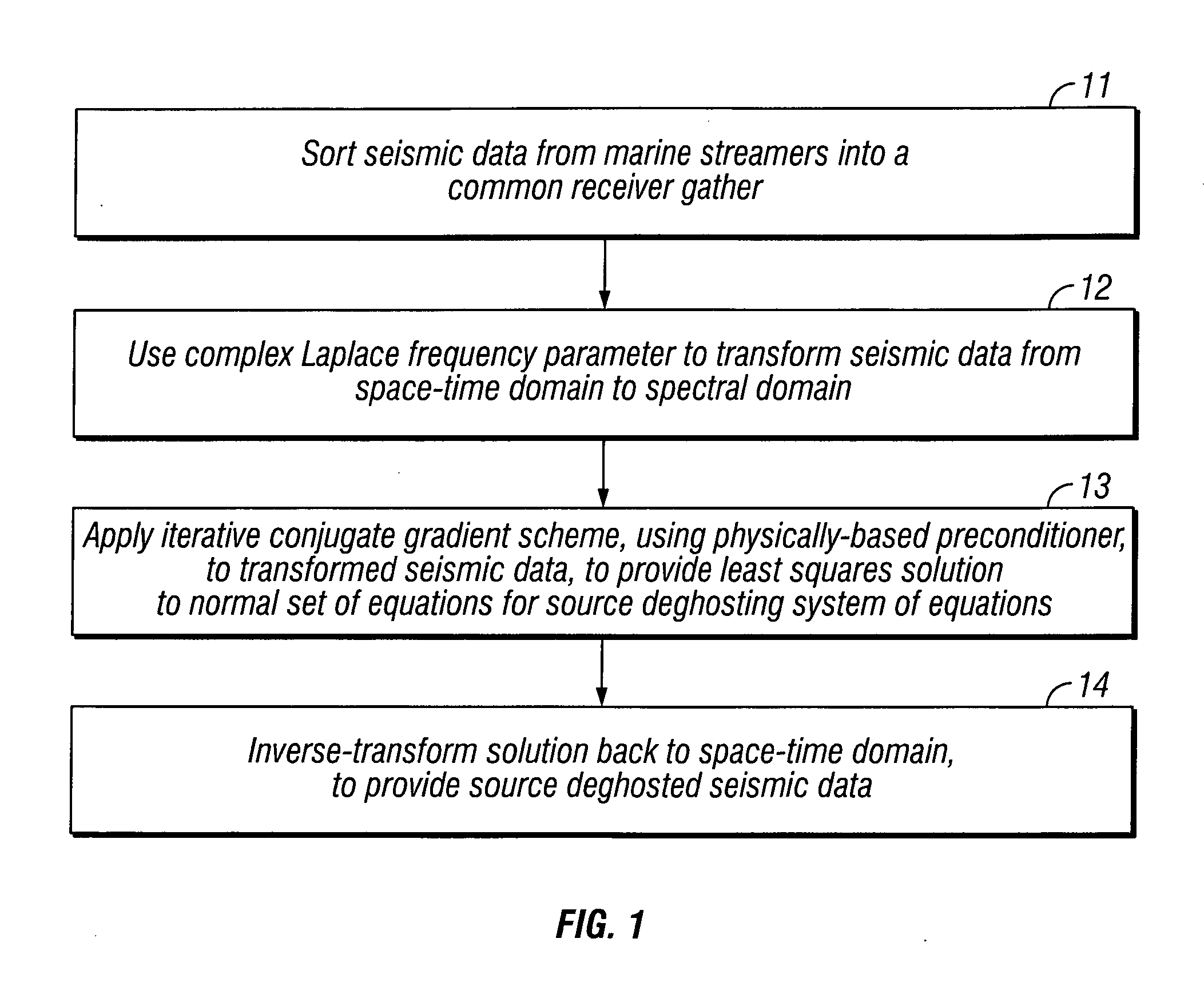

Method for full-bandwidth source deghosting of marine seismic streamer data

Seismic data recorded in a marine streamer are obtained, sorted as a common receiver gather. A complex Laplace frequency parameter is used to transform the seismic data from a space-time domain to a spectral domain. An iterative conjugate gradient scheme, using a physically-based preconditioner, is applied to the transformed seismic data, to provide a least squares solution to a normal set of equations for a source deghosting system of equations. The solution is inverse-transformed back to a space-time domain to provide source deghosted seismic data, which is useful for imaging the earth's subsurface.

Owner:PGS GEOPHYSICAL AS

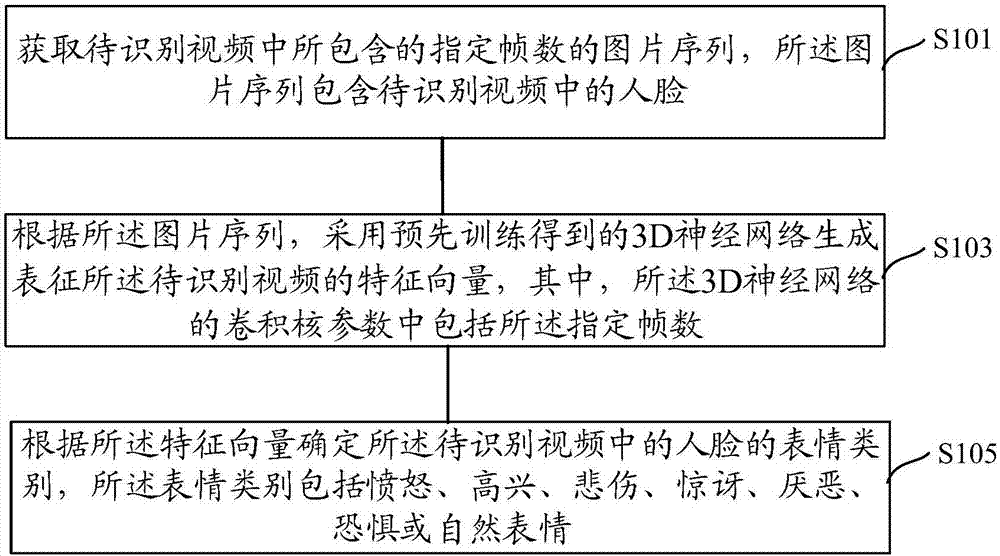

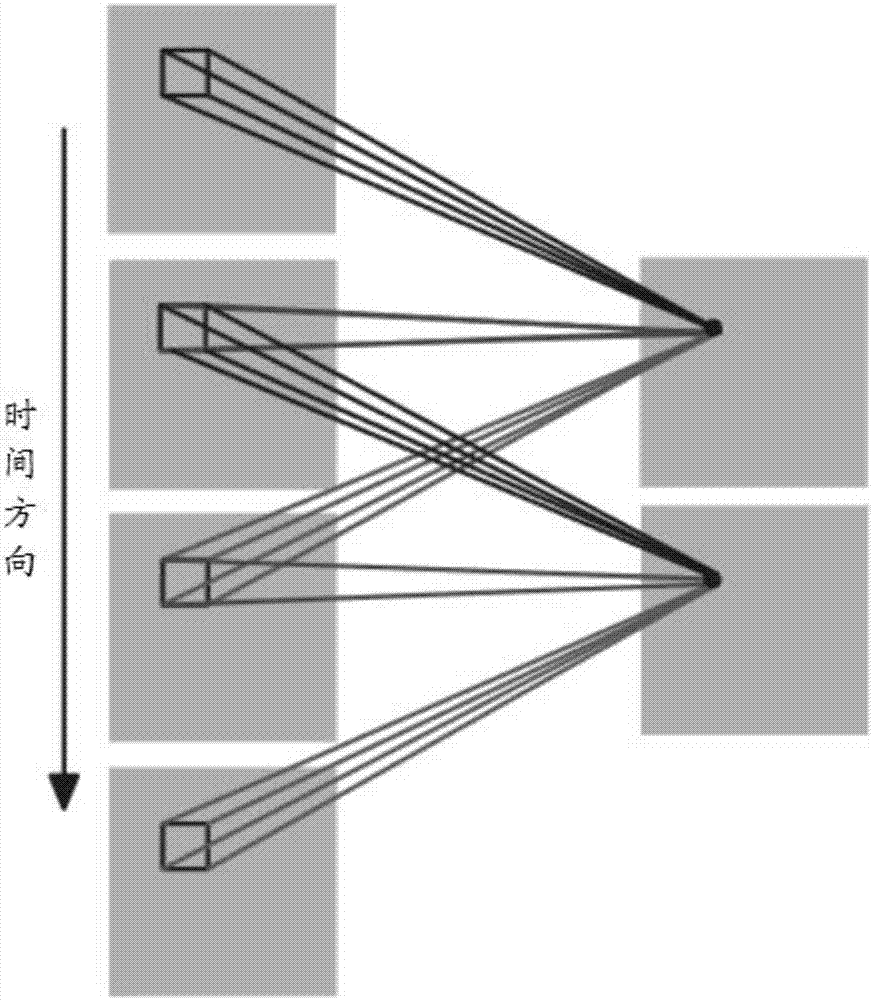

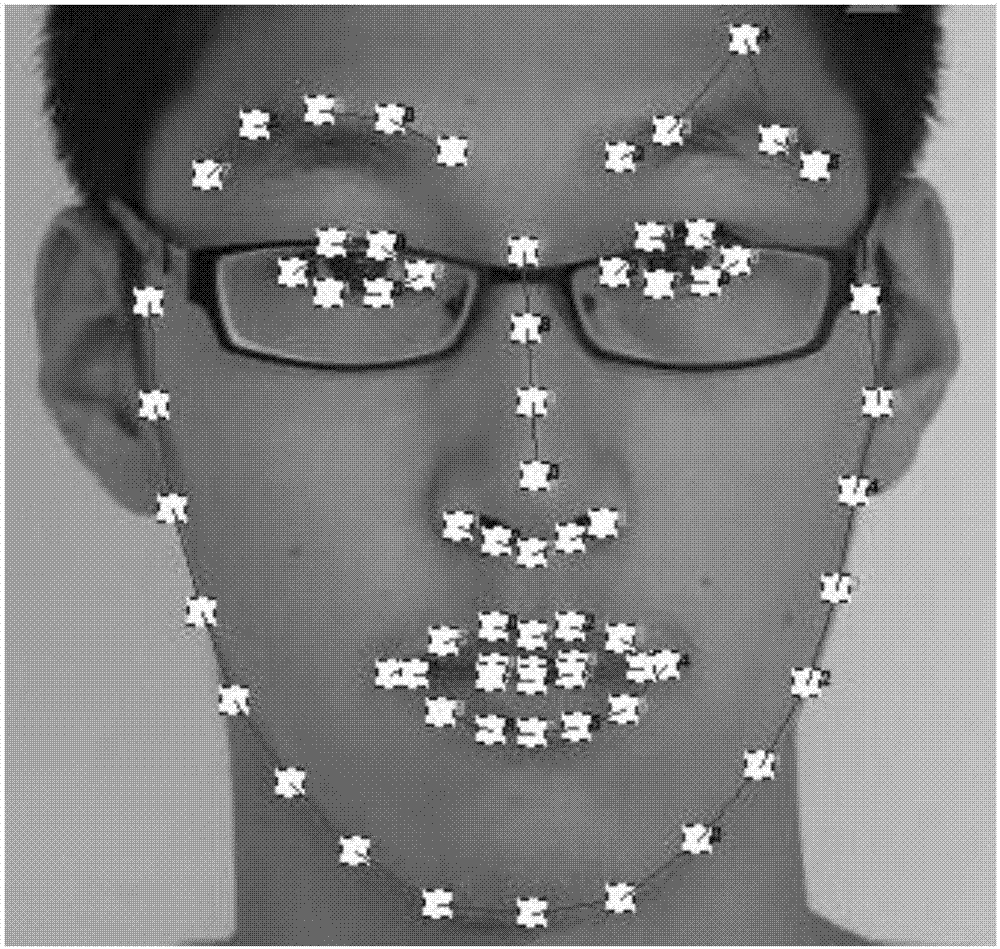

Expression recognition method, device and equipment for video

Inactive | CN107977634A | Accurate expression recognition | Accurate classification | Neural architectures | Acquiring/recognising facial features | Feature vector | Face detection

The embodiment of the invention discloses an expression recognition method, device and equipment for a video. The method comprises the steps: extracting a corresponding image sequence from any video comprising a face; extracting a face image in the video through face recognition, and carrying out a corresponding alignment operation as the input; carrying out the feature extraction comprising spatial features and time features through a pre-trained 3D convolution neural network, and generating a feature vector. The method integrates the prediction of the time-space domain of an image sequence, and achieves more accurate expression recognition according to the feature vector.

Owner:北京飞搜科技有限公司

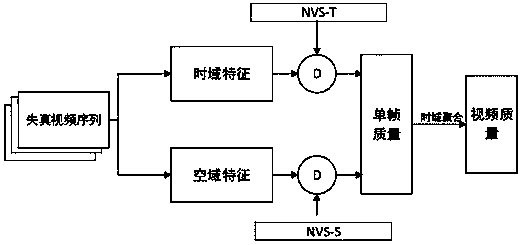

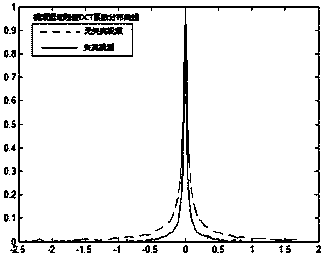

No-reference video quality evaluation method based on space-time domain natural scene statistics characteristics

Objective video quality evaluation is one of the important research points for QoE service in the future. The invention provides a video quality evaluation method based on no-reference natural scene statistics (NSS). Firstly, by analyzing a video sequence, corresponding statistical values of each pixel point and its adjacent points are calculated, and the space domain statistics characteristics of the video are thus obtained. A prediction image of frame n+1 is obtained according to a motion vector in combination with reference frame n, a motion residual image is obtained, and the statistical distribution of the residual image after DCT transformation is observed. The values obtained in the former two steps are used to calculate, respectively, a Mahalanobis distance between the space domain characteristics and the natural video characteristics and a Mahalanobis distance between the time domain characteristics and the natural video characteristics, so as to obtain the statistical differences between a distorted video and the natural video; the quality of a single-frame image is obtained when the time domain information and the space domain information are combined. Finally, a time domain aggregation strategy based on visual hysteresis effects is adopted to obtain the objective quality of the final video sequence.

Owner:BEIJING UNIV OF POSTS & TELECOMM

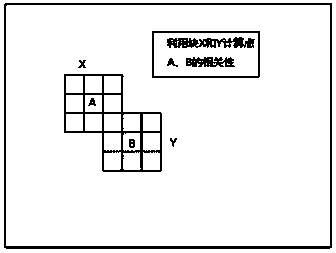

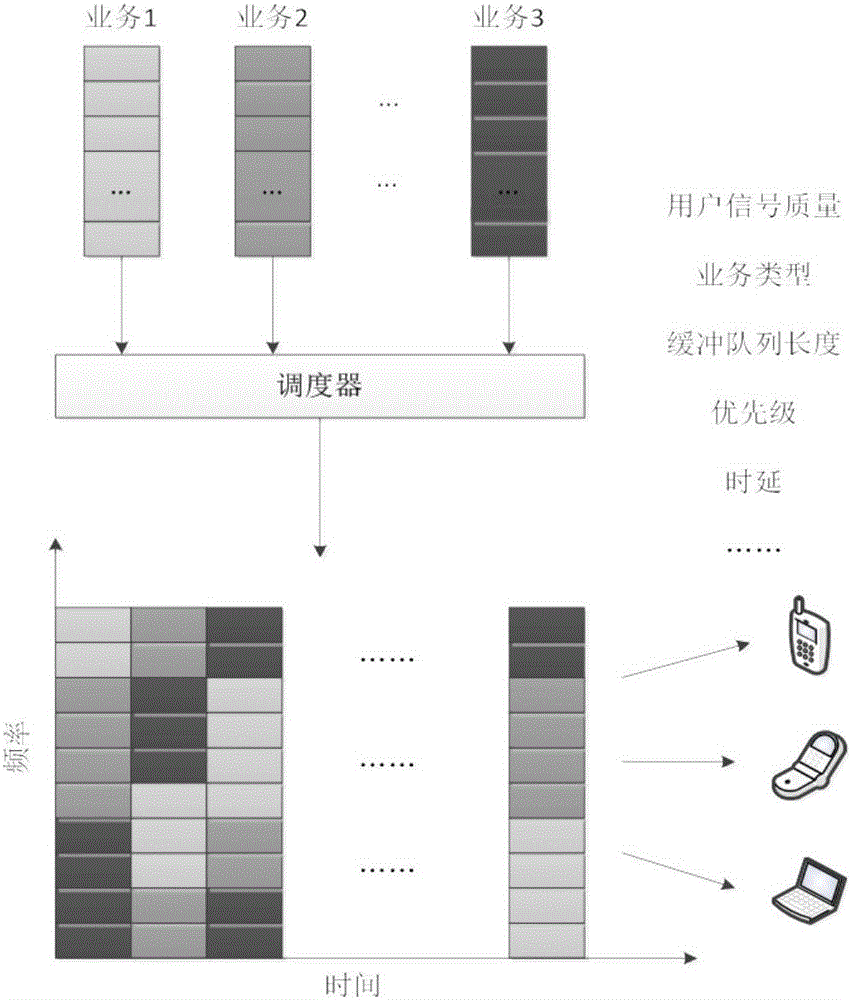

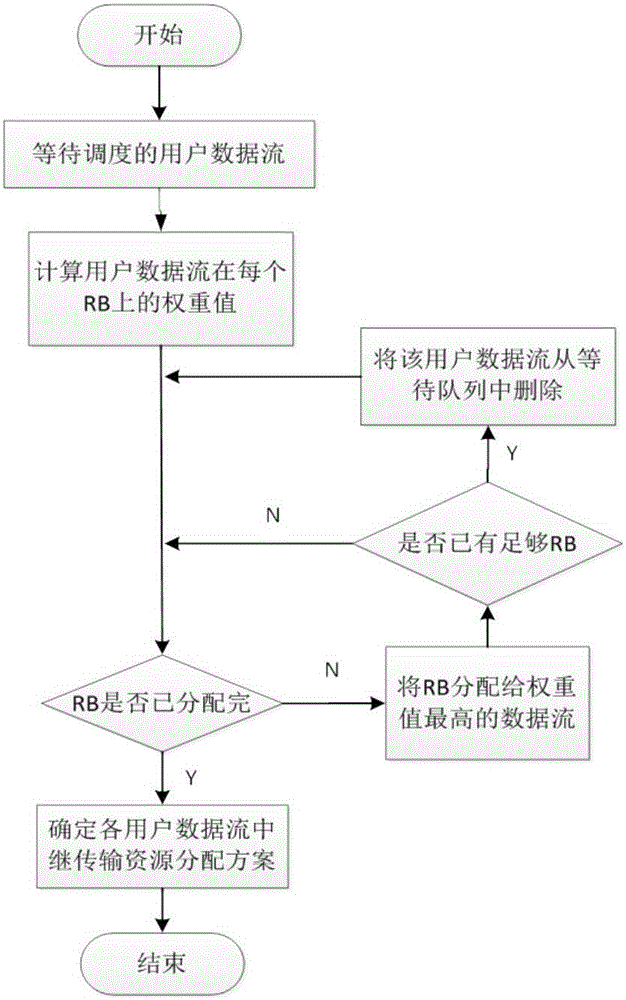

Multiuser-oriented relay satellite space-time frequency domain resource dynamic scheduling method

Active | CN106507366A | Improve throughput | Improve spectrum utilization | Radio transmission | Network planning | Quality of service | Frequency spectrum

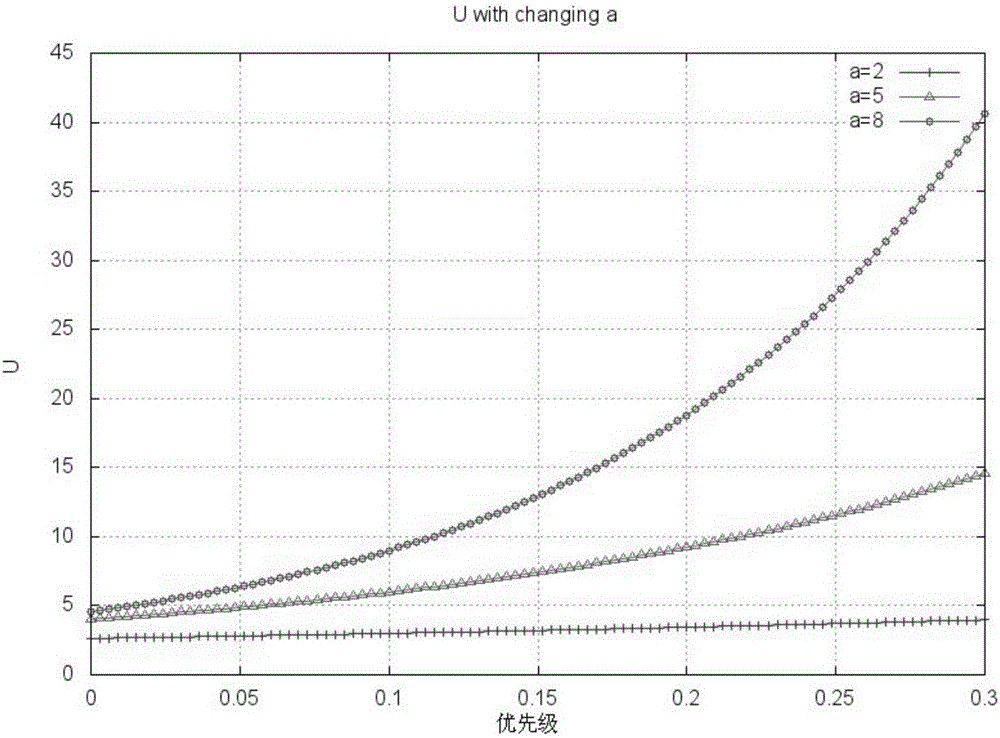

The invention discloses a multiuser-oriented relay satellite space-time frequency domain resource dynamic scheduling method. The method comprises the following steps: I, receiving different paths of user data streams; II, calculating weight values of the paths of user data streams; and III, allocating time-domain resource blocks to the user data streams according to the weight values. Through adoption of the multiuser-oriented relay satellite space-time frequency domain resource dynamic scheduling method, comprehensive consideration is given to the factors of task priority, time delay requirement, packet loss rate, throughput and application time sequence, and reasonable satellite resources are allocated to a plurality of users on a space-time domain. Specific to a relay satellite control and management center, the spectrum utilization rate and network throughput of relay satellite resources are increased. From the aspect of the users, the service quality of different services of the users is ensured, and the user demands are met.

Owner:CHINESE AERONAUTICAL RADIO ELECTRONICS RES INST
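Step III of the scheduling abstract above, allocating time-domain resource blocks according to per-stream weights, can be sketched with a largest-remainder rule. The abstract does not give the weight formula or the allocation rule, so both the precomputed `weights` dictionary and the rounding scheme are assumptions made for illustration.

```python
def allocate_blocks(weights, total_blocks):
    """Split total_blocks among users in proportion to their weights."""
    total_w = sum(weights.values())
    quotas = {u: total_blocks * w / total_w for u, w in weights.items()}
    alloc = {u: int(q) for u, q in quotas.items()}  # integer part of each quota
    leftover = total_blocks - sum(alloc.values())
    # Hand the remaining blocks to the users with the largest fractional parts.
    by_remainder = sorted(quotas, key=lambda u: quotas[u] - alloc[u], reverse=True)
    for u in by_remainder[:leftover]:
        alloc[u] += 1
    return alloc

# Hypothetical weights, e.g. derived from priority, delay and packet loss needs.
weights = {"A": 5, "B": 3, "C": 2}
plan = allocate_blocks(weights, 7)
```

The rule guarantees that every block is assigned and that no user deviates from its proportional share by more than one block.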

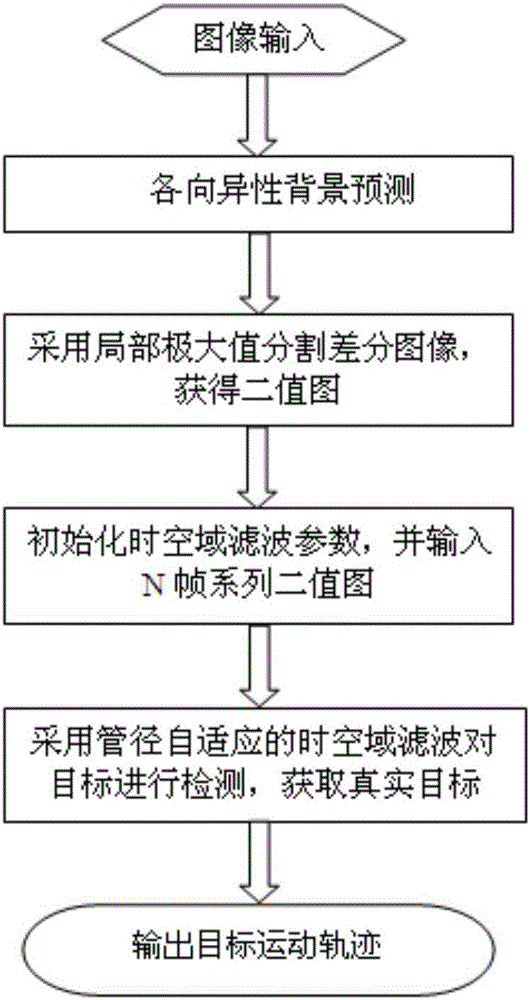

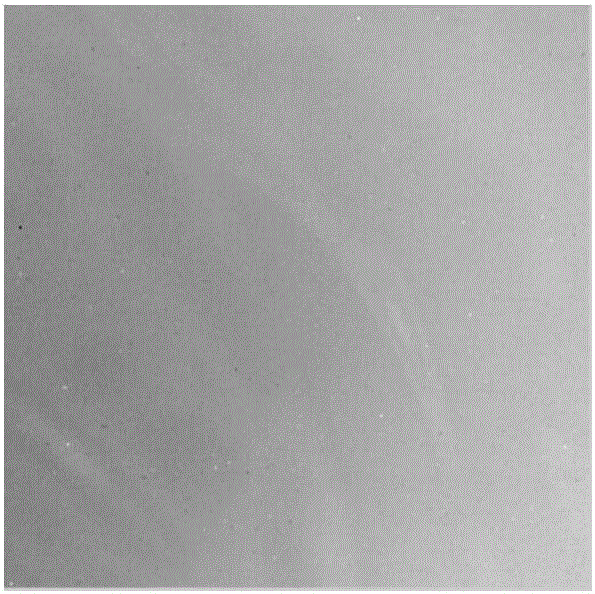

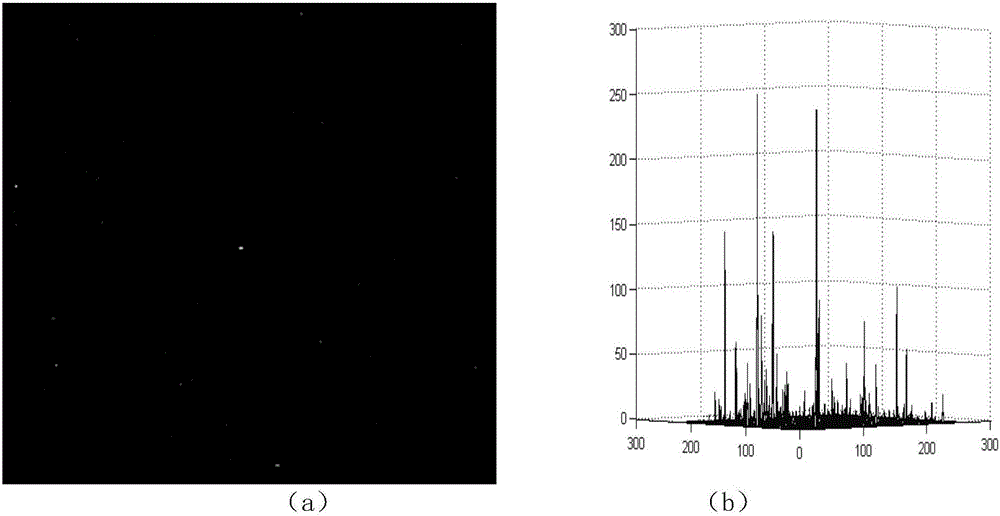

Weak target detection method by use of time-space domain filtering adaptive to pipe diameter

Active | CN106469313A | Effectively preforms background clutter | Solve the scale change problem | Character and pattern recognition | Pattern recognition | Time domain

The invention discloses a weak target detection method by use of time-space domain filtering adaptive to a pipe diameter. The weak target detection method comprises the steps of: carrying out background detection on a to-be-processed image by use of an anisotropic differential algorithm so as to improve subsequent target detection ability; segmenting a difference graph by use of a local maximum value so as to acquire a binary image; initializing a time domain parameter (an accumulation frame length) and a space domain parameter (the size of the pipe diameter), and successively inputting a series of binary images with an accumulation frame length of N; and finally, by use of the time-space domain filtering adaptive to the pipe diameter, detecting multiple frames of images so as to acquire a real target point, and superposing the detection results so as to output a target movement track. Compared with a traditional pipeline filtering target detection method with a fixed pipe diameter, the weak target detection method is advantageous in that, based on the correlation between a target and the time-space domain multi-frame movement, the size of the pipe diameter is adaptively modified according to the change of the target scale, so the detection problem caused by the target becoming smaller or bigger while the pipe diameter stays unchanged is effectively solved and target detection precision is greatly improved.

Owner:INST OF OPTICS & ELECTRONICS - CHINESE ACAD OF SCI
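The pipeline filtering idea in the abstract above can be sketched as follows: a candidate is followed through N frames inside a circular "pipe", and it is confirmed as a real target if enough frames contain a detection inside the pipe. The adaptation rule used here (pipe radius proportional to the scale of the last matched candidate) and the tuple layout `(x, y, scale)` are assumptions for illustration only.

```python
def adaptive_pipeline_filter(frames, start, min_hits, scale_factor=2.0):
    """Follow a candidate through frames; the pipe radius tracks the target scale.

    frames: one list of (x, y, scale) candidates per frame.
    start:  (x, y, scale) of the initial candidate.
    """
    cx, cy, scale = start
    hits, track = 0, []
    for candidates in frames:
        radius = scale_factor * scale  # pipe radius adapts to the last seen scale
        near = [c for c in candidates
                if (c[0] - cx) ** 2 + (c[1] - cy) ** 2 <= radius ** 2]
        if near:
            # Move the pipe centre to the closest candidate inside the pipe.
            cx, cy, scale = min(near, key=lambda c: (c[0] - cx) ** 2 + (c[1] - cy) ** 2)
            hits += 1
            track.append((cx, cy))
    return hits >= min_hits, track

# Hypothetical detections: the target grows, clutter appears far away in frame 3.
frames = [[(1, 1, 1), (9, 9, 1)], [(2, 2, 1.5)], [(20, 20, 1)], [(4, 3, 2)]]
confirmed, track = adaptive_pipeline_filter(frames, start=(0, 0, 1), min_hits=3)
```

With a fixed radius of 2 the last detection, at squared distance 5 from the previous one, would fall outside the pipe; the growing radius keeps the track alive.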

Convex optimization method for three-dimensional (3D)-video-based time-space domain motion segmentation and estimation model

Active | CN102592287A | Efficient use of | Realize multi-target tracking | Image analysis | Stereoscopic systems | Space time domain | Time space

Owner:ZHEJIANG UNIV

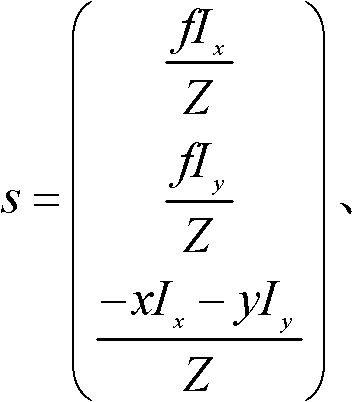

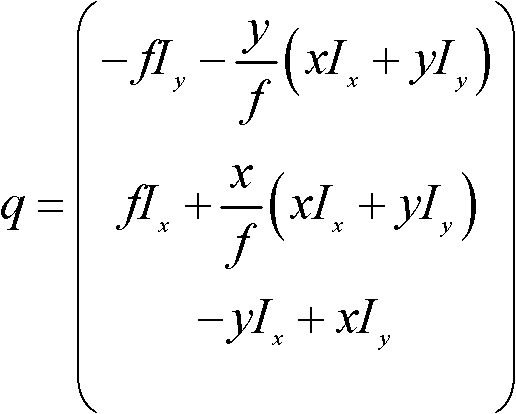

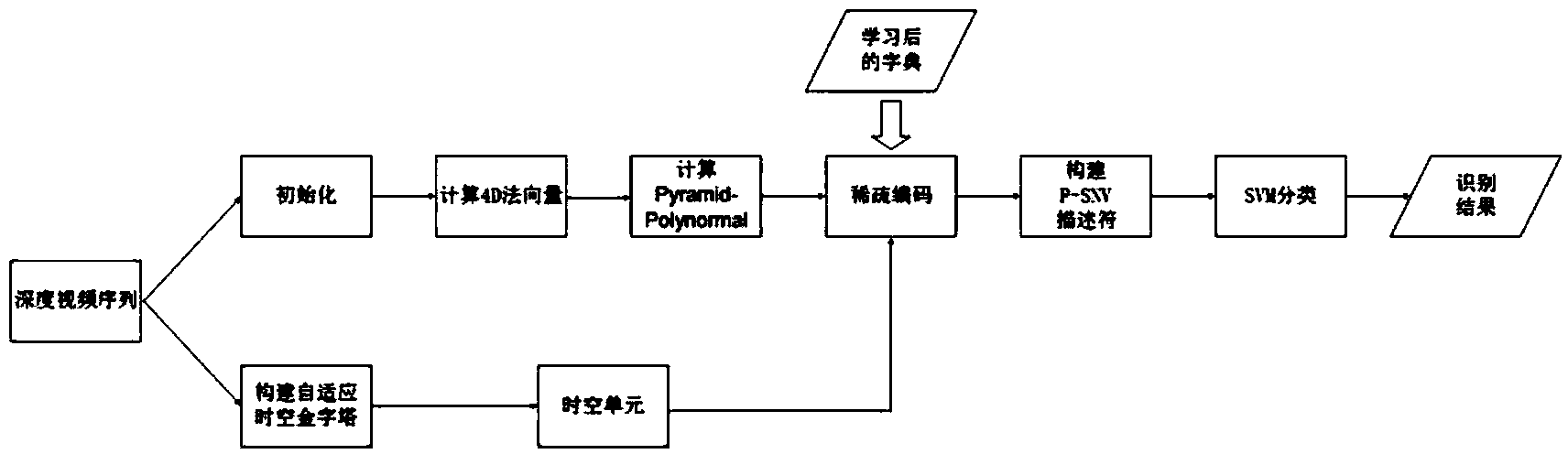

Human body behavior recognition method based on depth video sequence

Active | CN104298974A | Enhance expressive ability | Avoid interference | Character and pattern recognition | Human body | Learning based

The invention discloses a human body behavior recognition method based on a depth video sequence. The method comprises the steps that four-dimensional normal vectors of all pixel points in the video sequence are calculated; low-level features of the pixel points in different layers are extracted by establishing behavior sequence space-time pyramid models in different space-time domains; a group sparse dictionary is learned based on the low-level features, so that sparse codes of the low-level features are obtained; the codes are integrated through a space average pool and a time maximum pool, so that high-level features are obtained and serve as descriptors of a final behavior sequence. The descriptors can effectively preserve space-time multi-resolution information of human body behaviors; meanwhile, the sparse dictionary with stronger expressive power is obtained by eliminating similar contents contained in different behavior categories, so that the behavior recognition rate is effectively increased.

Owner:BEIJING UNIV OF TECH
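The pooling stage of the behavior recognition abstract above (a space average pool followed by a time maximum pool) can be sketched as below. The nested-list layout `codes[t][s]`, holding one sparse code vector per time step and spatial cell, is an assumed representation; the pyramid structure and dictionary learning are not reproduced.

```python
def pool_codes(codes):
    """Average codes over space within each time step, then take the max over time."""
    per_step = []
    for cells in codes:
        dim = len(cells[0])
        per_step.append([sum(cell[d] for cell in cells) / len(cells)
                         for d in range(dim)])
    dim = len(per_step[0])
    return [max(step[d] for step in per_step) for d in range(dim)]

# Two time steps, two spatial cells, two-dimensional codes (made-up values).
codes = [
    [[0, 2], [2, 0]],
    [[4, 0], [0, 0]],
]
descriptor = pool_codes(codes)
```

Averaging over space smooths out where a pattern occurred, while the maximum over time keeps the strongest response regardless of when it occurred.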

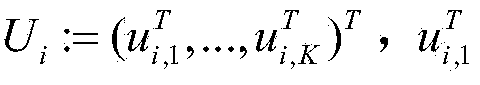

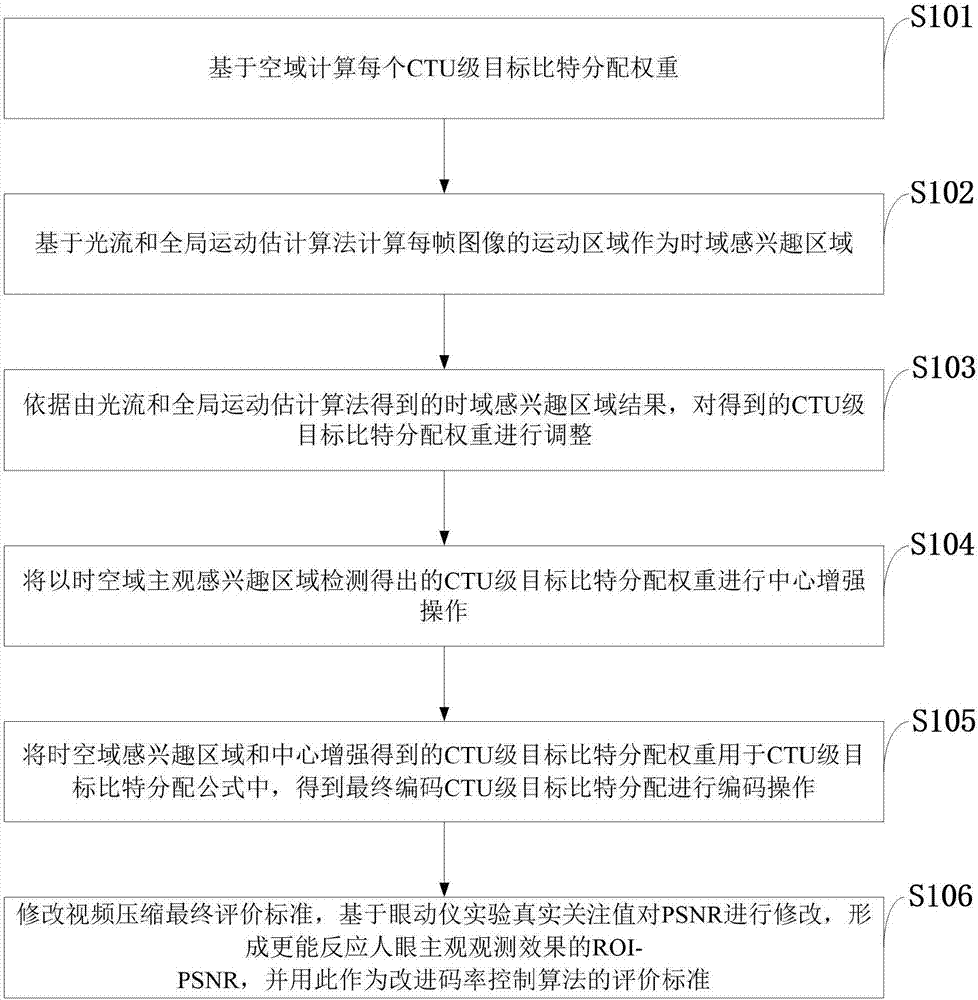

Code rate control method based on subjective region of interest and time-space domain combination

Active | CN106937118A | Improving the quality of subjective observation | Compression subjective effect is good | Digital video signal modification | Pattern recognition | Time domain

The invention belongs to the technical field of HEVC (High Efficiency Video Coding), and discloses a code rate control method based on a subjective region of interest and time-space domain combination. The method comprises the following steps: calculating each CTU-level target bit allocation weight based on the space domain; calculating a motion region of each frame of image based on optical flow and a global motion estimation algorithm to serve as a time domain region of interest; adjusting the obtained CTU-level target bit allocation weights according to the time domain region of interest result obtained from the optical flow and the global motion estimation algorithm; performing a center enhancing operation on the CTU-level target bit allocation weights obtained through time-space domain subjective region of interest detection; obtaining the final coding CTU-level target bit allocation to perform a coding operation; and modifying the final evaluation standard for video compression. Compared with the HM16.0 compression model, the code rate control method enhances the subjective effect of HEVC compression while keeping the target bit rate unchanged.

Owner:XIDIAN UNIV +1

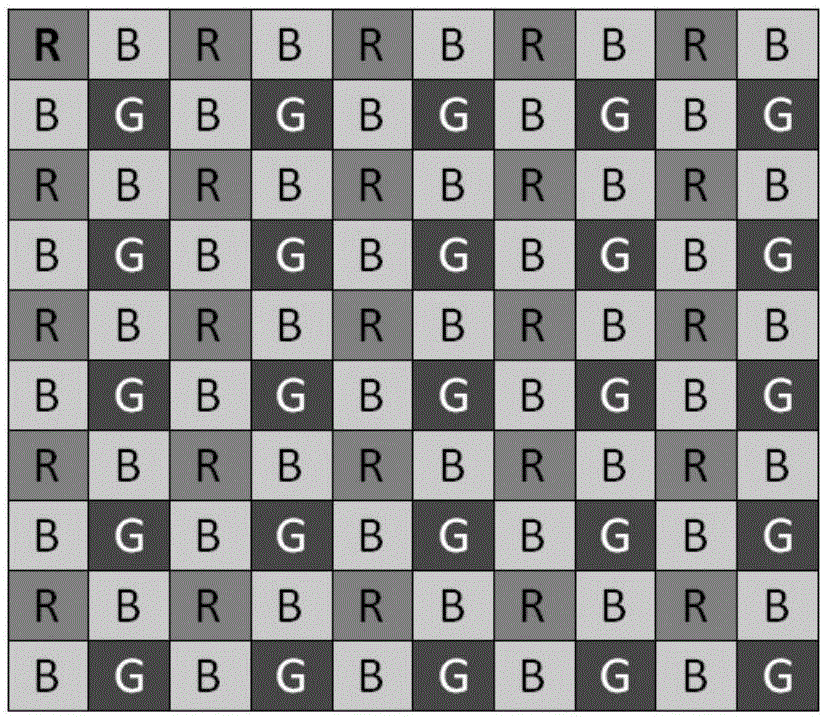

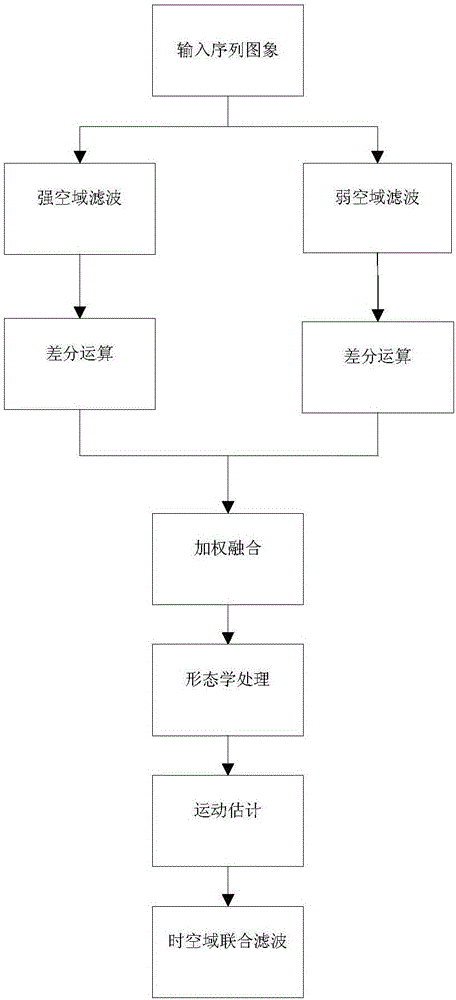

Denoising device and method for sequence image

Active | CN106251318A | Improve picture quality | Cancel noise | Image enhancement | Image analysis | Space time domain | Imaging quality

The invention relates to a denoising device and a denoising method for sequence image. The denoising device comprises an input image unit, a motion estimation unit, a time-space domain federated filtering unit and a noise estimation module, wherein the input image unit inputs the sequence image to the motion estimation unit, and the sequence image is processed by the motion estimation unit and then input to the time-space domain federated filtering unit for processing; the noise estimation module outputs data to the motion estimation unit for processing; the motion estimation unit comprises a space domain filtering module, a differential module, a differential image fusion module, a morphological processing module and a motion estimation module; the space domain filtering module carries out filtering processing on the image and then transmits the image to the differential module for difference operation; and the differential image fusion module carries out weighting fusion processing on the image of the previous module, and the image is further processed by the morphological processing module and then transmitted to the motion estimation module for motion estimation operation after processing. The denoising device and the denoising method effectively complete the separation of a moving object and noise in the sequence image, effectively eliminate the noise, and improve the image quality.

Owner:杭州雄迈集成电路技术股份有限公司

Video clustering method, ordering method, video searching method and corresponding devices

Inactive | CN102542066A | Real-time search | Exact search | Special data processing applications | Space time domain | Cross correlation analysis

The invention relates to the field of video information, and discloses a video clustering method, an ordering method, a video searching method and corresponding devices. The video clustering method comprises a clustering step used for clustering multiple pixels of which the similarity is higher than a predetermined threshold value together by aiming at each frame in a video, so as to obtain super pixels capable of imitating the minimum entity of human vision, wherein the similarity is calculated according to the colors, the positions and / or the motion characteristics of the pixels. Through conducting time-space domain self-correlation and cross-correlation analysis on pixel areas in the video and ordering the pixel areas in the video on the basis of the super pixels capable of imitating the minimum entity of the human vision, the invention has the advantage that videos can be accurately searched in a real-time manner even in a massive video database.

Owner:冉阳

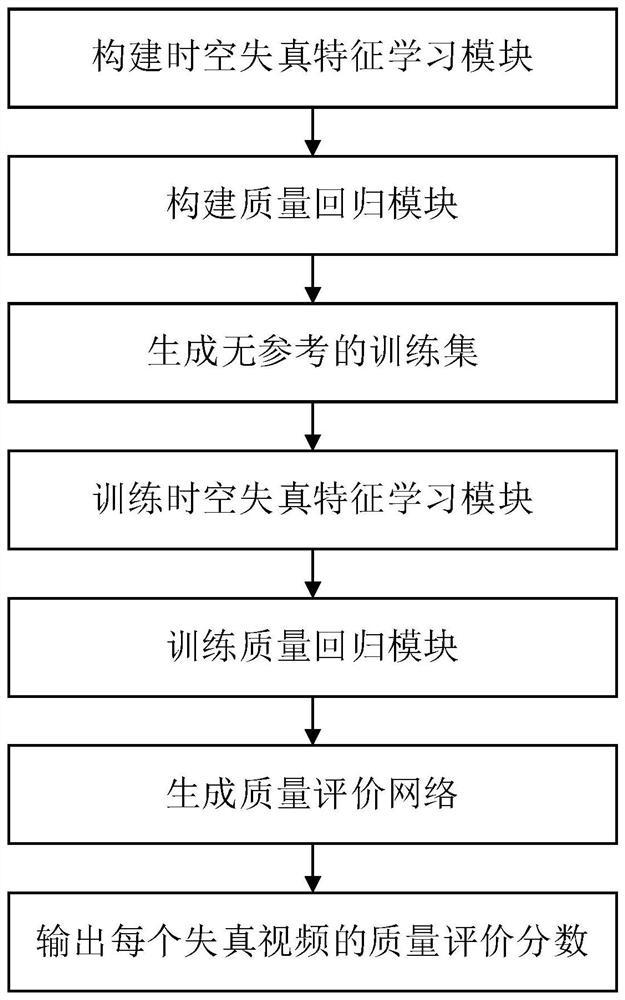

No-reference video quality evaluation method based on three-dimensional spatial-temporal feature decomposition

Active | CN112085102A | Overcoming Confusion in Space-Time Domain Operations | Overcome operational difficulties | Character and pattern recognition | Television systems | Data set | Space time domain

The invention discloses a no-reference video quality evaluation method based on three-dimensional spatial-temporal feature decomposition, which comprises the following steps of: constructing a quality prediction network consisting of a spatial-temporal distortion feature learning module and a quality regression module, generating a no-reference training data set and a no-reference test data set, training the spatial-temporal distortion feature learning module and the quality regression module, and outputting the quality evaluation score value of each distorted video in the test set. The invention is used for accurately and efficiently extracting the quality perception characteristics of the time-space domain content from the input distorted video, the corresponding prediction quality score is obtained at the output end of the network, and the method has the advantages that the result is more accurate and the application is wider when the quality of the non-reference video is evaluated.

Owner:XIDIAN UNIV

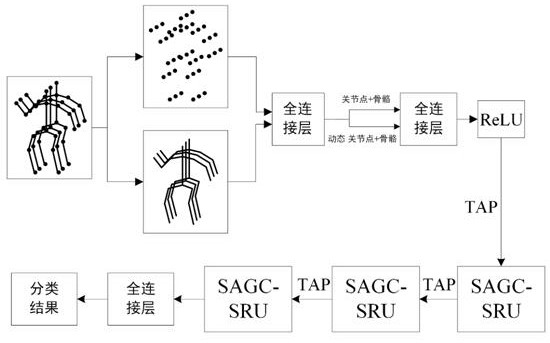

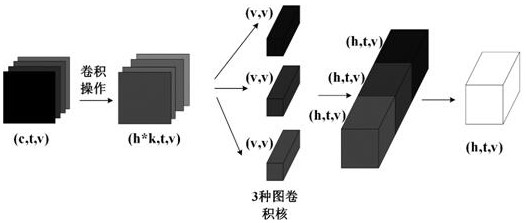

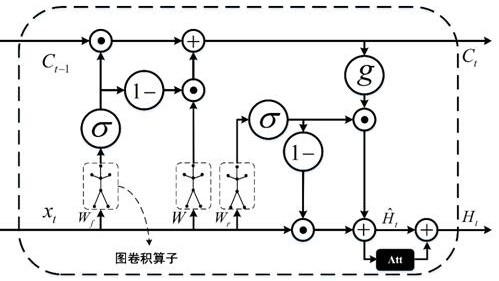

Skeleton action recognition method based on multi-stream spatial attention graph convolution SRU network

PendingCN112733656AImprove the efficiency of action recognitionSolve the slow calculationCharacter and pattern recognitionNeural architecturesSpace time domainTheoretical computer science

The invention provides a skeleton action recognition method based on a multi-stream spatial attention graph convolution SRU network. First, a graph convolution operator is embedded into a simple recurrent unit (SRU) to construct a graph convolution model that captures the time-space domain information of skeleton data. Then, to enhance the discrimination between joint points, a spatial attention network and a multi-stream data fusion mode are designed, extending the graph convolution SRU model into a multi-stream spatial attention graph convolution SRU. The method maintains high classification precision while remarkably reducing complexity and increasing the inference speed of the model, achieving a balance between computational efficiency and classification precision, and it has a wide application prospect.

Owner:HANGZHOU DIANZI UNIV
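As a rough illustration of what embedding a graph convolution into an SRU could look like, the equations below take one commonly cited form of the SRU recurrence and replace each input projection with a graph convolution. This is a sketch under assumptions, not the patent's formulation: the normalized adjacency matrix $\hat{A}$, the weight matrices $W$, $W_f$, $W_r$, the biases $b_f$, $b_r$ and the activation $g$ are all notation introduced here, and $X_t$ is assumed to hold one feature row per joint at frame $t$.

```latex
\begin{aligned}
\tilde{X}_t &= \hat{A} X_t W \\
F_t &= \sigma\left(\hat{A} X_t W_f + b_f\right) \\
R_t &= \sigma\left(\hat{A} X_t W_r + b_r\right) \\
C_t &= F_t \odot C_{t-1} + (1 - F_t) \odot \tilde{X}_t \\
H_t &= R_t \odot g(C_t) + (1 - R_t) \odot X_t
\end{aligned}
```

In this form the only recurrent term is the elementwise product with $C_{t-1}$, so the graph convolutions for all frames can be computed in parallel, which would be consistent with the faster inference the abstract describes. The highway term in the last line assumes the input and hidden feature widths match.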

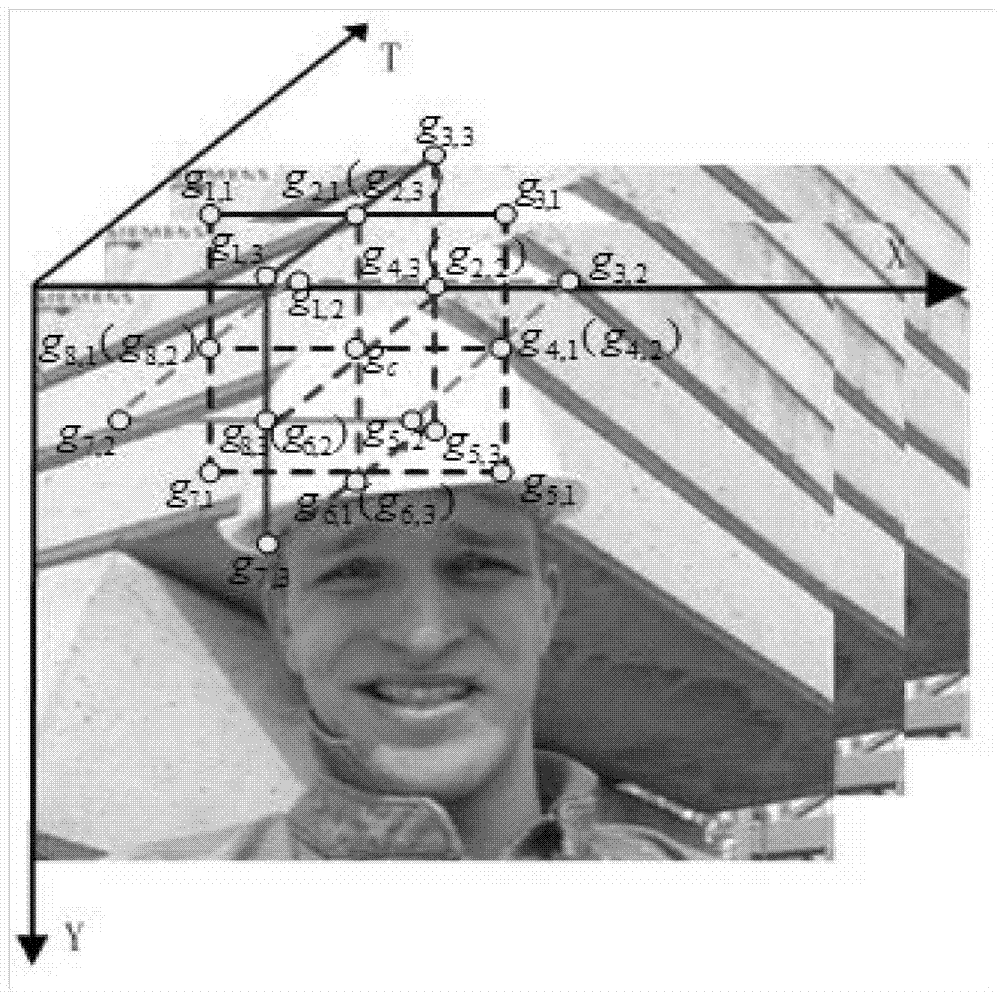

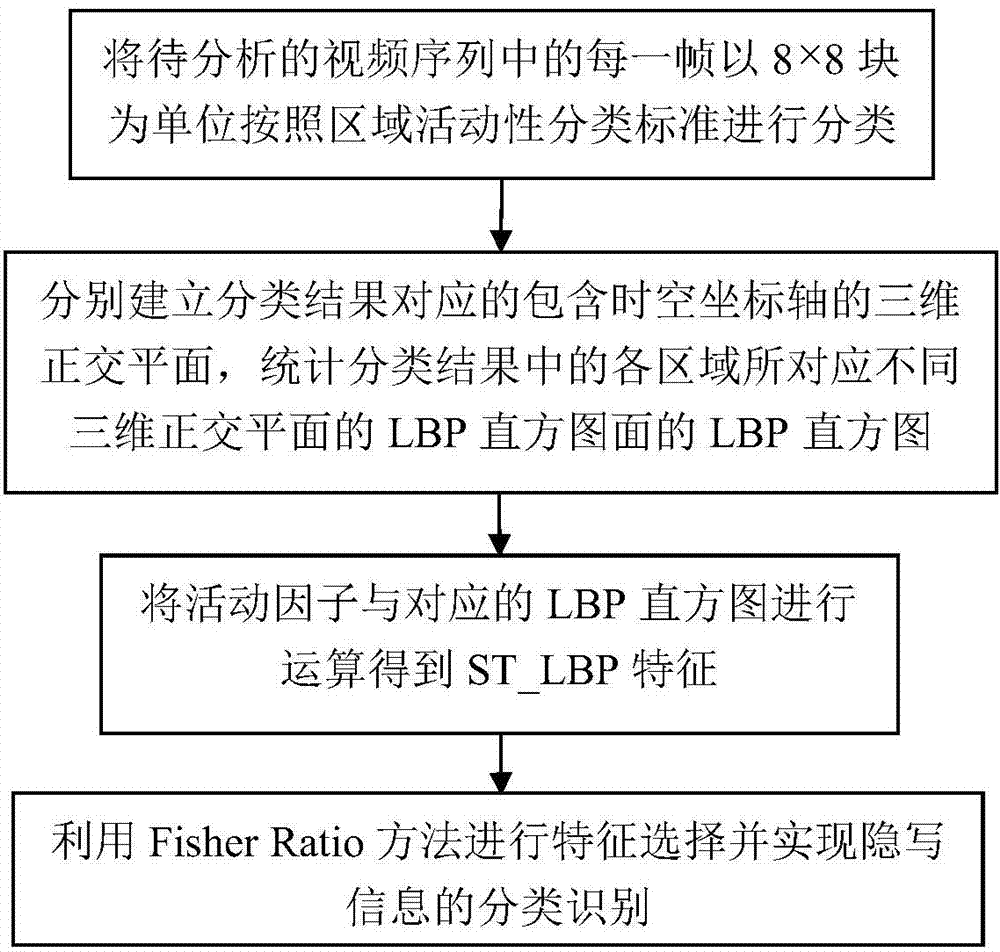

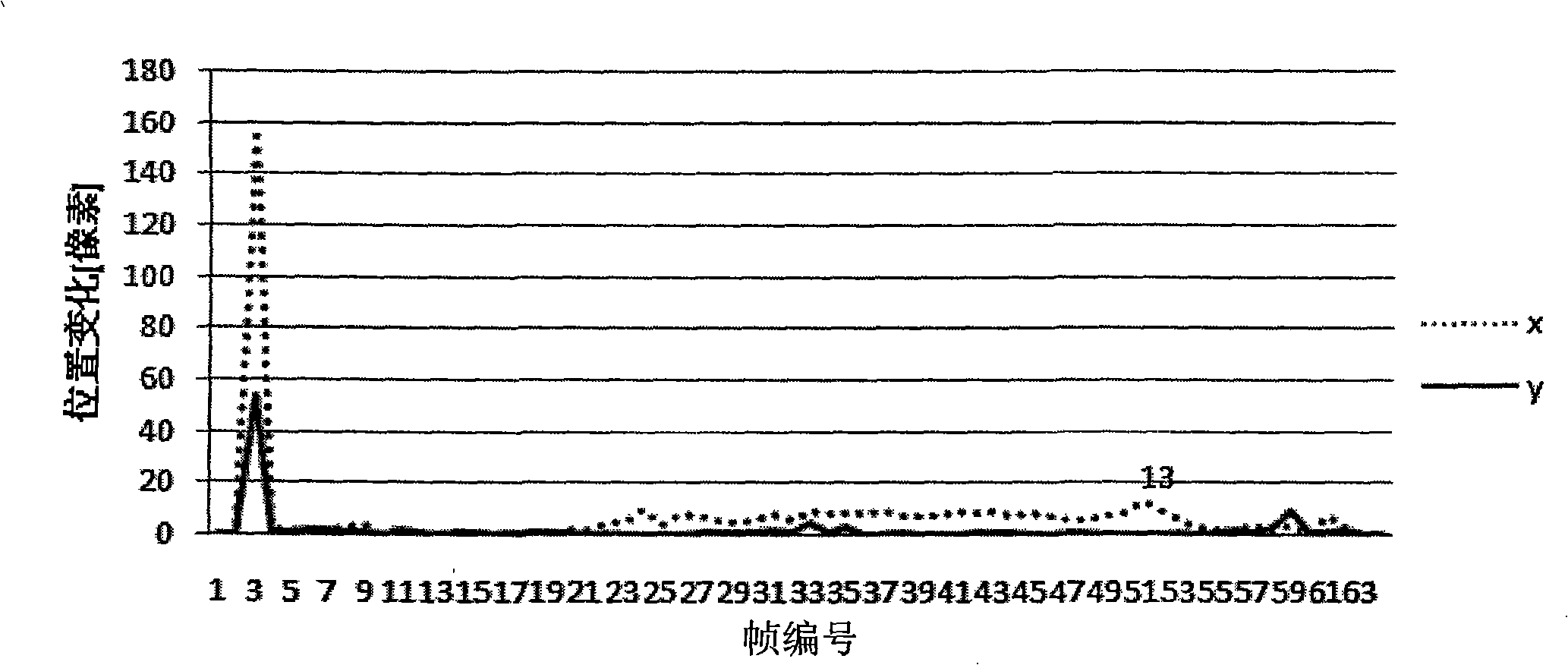

Video steganography analysis method based on space-time domain local binary pattern

InactiveCN104519361AReduce distractionsImprove detection effectivenessCharacter and pattern recognitionDigital video signal modificationFeature DimensionLiveness

The invention discloses a video steganography analysis method based on a space-time domain local binary pattern (LBP). The method includes: step 1, classifying each frame in the video sequence to be analyzed, in units of 8x8 blocks, according to regional activity classification standards; step 2, establishing, for each class in the classification result, three-dimensional orthogonal planes containing the time and space coordinate axes, and computing statistics of the LBP histograms on the different orthogonal planes for each area in the classification result; step 3, introducing an activity factor and combining it with the corresponding LBP histograms to obtain ST_LBP features; step 4, using a Fisher Ratio method for feature selection and performing classification to recognize steganographic information. Compared with the prior art, introducing the activity factor lowers the interference with the detection result caused by the movement of objects in active areas, which improves detection effectiveness; the limitation on feature dimension is avoided, and the range of values of the radius R and the number of neighborhood points P in the LBP definition can be further expanded, so that the space-time domain correlation of the video sequence is used more effectively.

Owner:TIANJIN UNIV
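Step 2 of the abstract above, LBP histograms on three orthogonal space-time planes, can be sketched as follows. This is a minimal illustration under assumptions: it fixes R = 1 and P = 8, treats the video as a nested list `video[t][y][x]` of gray values, and omits the activity classification, the activity factor and the Fisher Ratio selection. The function names are hypothetical.

```python
# Offsets of the 8 neighbours (R = 1, P = 8), clockwise from top-left.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
           (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_code(sample, a, b):
    """8-bit LBP code at (a, b) of a 2-D plane given as sample(a, b)."""
    center = sample(a, b)
    code = 0
    for bit, (da, db) in enumerate(OFFSETS):
        # A neighbour at least as bright as the centre sets its bit.
        if sample(a + da, b + db) >= center:
            code |= 1 << bit
    return code


def lbp_three_plane_histograms(video):
    """Return 256-bin LBP histograms for the XY, XT and YT planes.

    video[t][y][x] is a gray value; only interior voxels are visited.
    """
    n_t, n_y, n_x = len(video), len(video[0]), len(video[0][0])
    hists = {"xy": [0] * 256, "xt": [0] * 256, "yt": [0] * 256}
    for t in range(1, n_t - 1):
        for y in range(1, n_y - 1):
            for x in range(1, n_x - 1):
                # Spatial plane at fixed time t.
                hists["xy"][lbp_code(lambda r, c: video[t][r][c], y, x)] += 1
                # Time-versus-x plane at fixed row y.
                hists["xt"][lbp_code(lambda r, c: video[r][y][c], t, x)] += 1
                # Time-versus-y plane at fixed column x.
                hists["yt"][lbp_code(lambda r, c: video[r][c][x], t, y)] += 1
    return hists
```

In the method described above, such histograms would be computed per activity class and then weighted by the activity factor; how that weighting is defined is not stated in the abstract, so it is left out here.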

Method for rapidly detecting human face based on video

InactiveCN101350062AAvoid the pitfalls of searching full framesReduce search areaCharacter and pattern recognitionFace detectionVideo monitoring

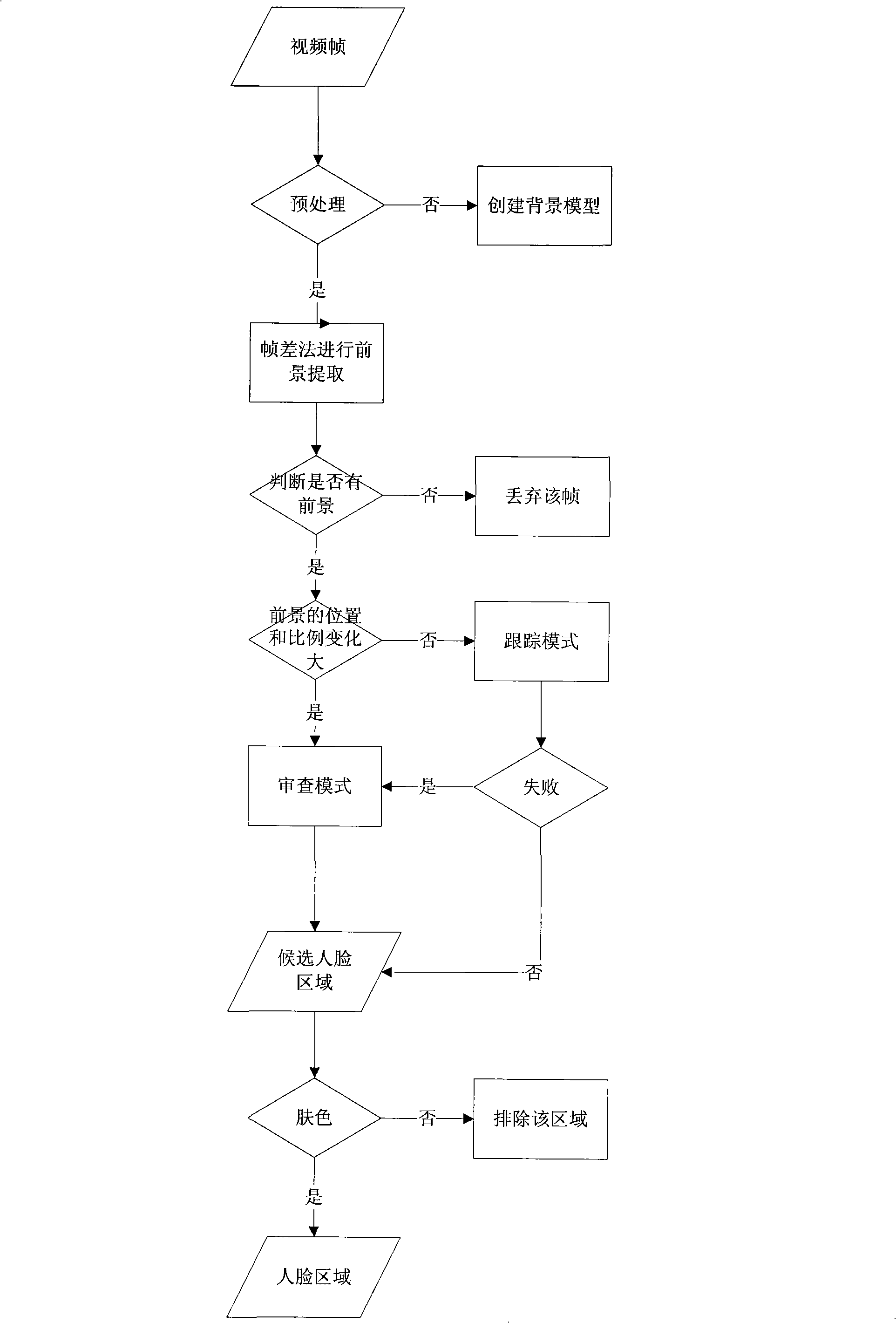

The invention discloses a method for detecting human faces on the basis of video. The method focuses on the foreground areas in video frames and, after preprocessing, uses the space-time domain characteristics between video frames to detect human faces in those foreground areas. The face detection process is divided into two modes, an inspection mode and a tracking mode. If no face can be detected in the tracking mode, or the tracking mode forecasts that a tracked face will leave the monitoring area in the next frame, the detection flow switches to the inspection mode, searches the whole foreground area, and re-collects the face information of the monitoring area. The invention reduces the area that needs to be searched during detection, avoids the drawback of the traditional AdaBoost algorithm of searching full frames, greatly lowers the computational complexity, and improves the real-time performance of human face detection in intelligent video monitoring applications.

Owner:ZHEJIANG UNIV
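The switching between the two modes described above can be sketched as a small state machine. This is an illustration under assumptions, not the patented procedure: the names `Mode` and `next_mode` are hypothetical, and the transition from inspection to tracking once a face is found is assumed here, since the abstract only states when the flow falls back to inspection.

```python
from enum import Enum


class Mode(Enum):
    INSPECTION = "inspection"  # search the whole foreground area
    TRACKING = "tracking"      # search only near previously found faces


def next_mode(mode, face_found, predicted_in_area):
    """Choose the detection mode for the next frame.

    face_found: whether a face was detected in the current frame.
    predicted_in_area: whether the tracker forecasts that the tracked
        face stays inside the monitoring area in the next frame.
    """
    if mode is Mode.TRACKING:
        # Fall back when the face is lost or is about to leave the area.
        if not face_found or not predicted_in_area:
            return Mode.INSPECTION
        return Mode.TRACKING
    # Assumption: a successful inspection hands over to tracking.
    return Mode.TRACKING if face_found else Mode.INSPECTION
```

Keeping the decision in a pure function separates it from the detector itself, so whatever face detector is used (the abstract mentions AdaBoost) only needs to report the two booleans per frame.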


