Spatio-temporally consistent segmentation method for video sequences with known camera parameters and depth information

A technique based on camera parameters and depth information, applicable to image communication, image analysis, image data processing, and related fields. It addresses problems such as memory overhead, the lack of two-dimensional motion estimation, and impaired region matching or propagation, and has high application value.

Embodiment Construction

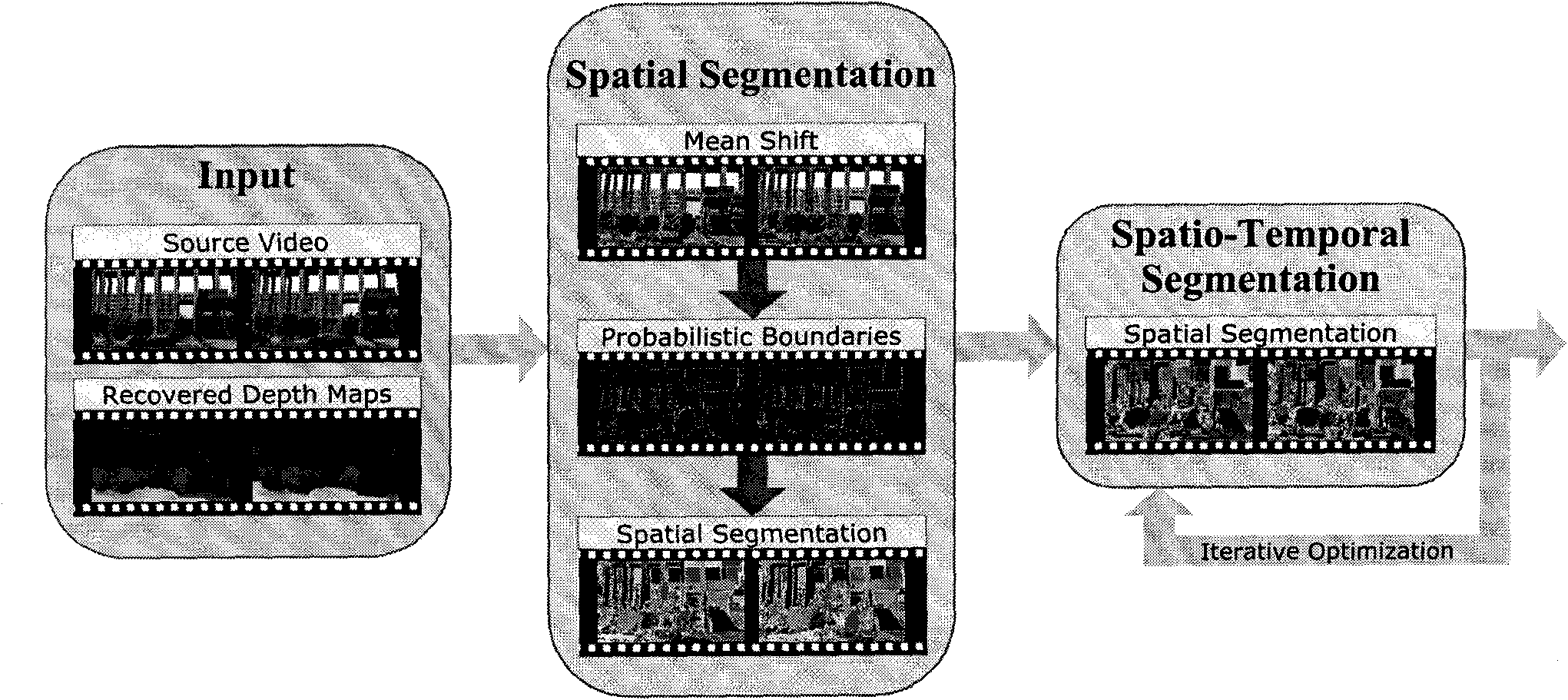

[0038] The spatio-temporal consistency segmentation method for a video sequence with known camera parameters and depth information includes the following steps:

[0039] 1) Use the Mean-shift method to segment each frame of the video into regions independently;

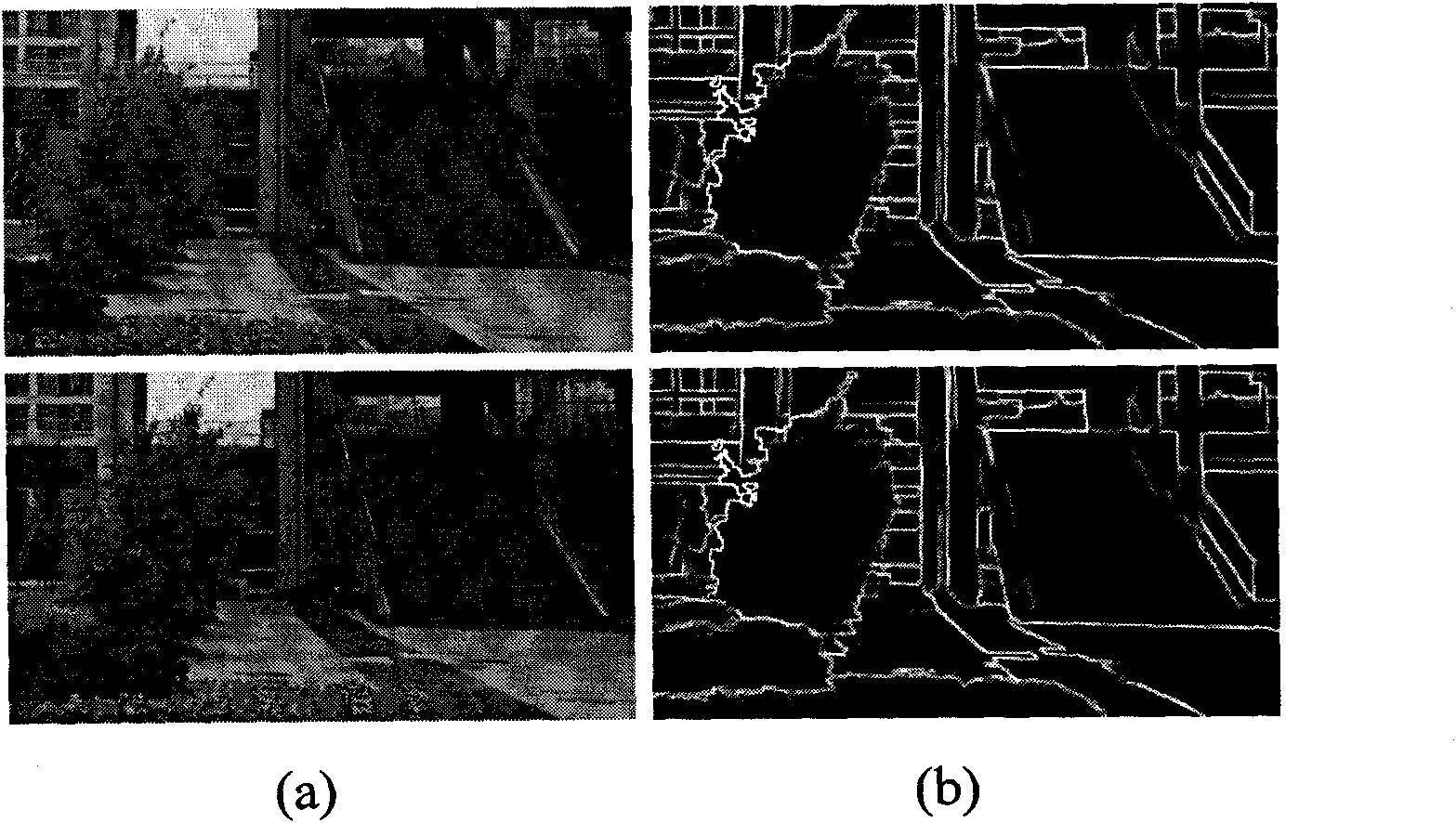

[0040] 2) Using the camera parameters and depth information, accumulate the Mean-shift region boundaries over the entire sequence and compute a "probability boundary map" for each frame;

[0041] 3) Segment the "probability boundary map" using the Watershed transform and an energy optimization method, obtaining an image segmentation that is more consistent and coherent across frames than the Mean-shift result;

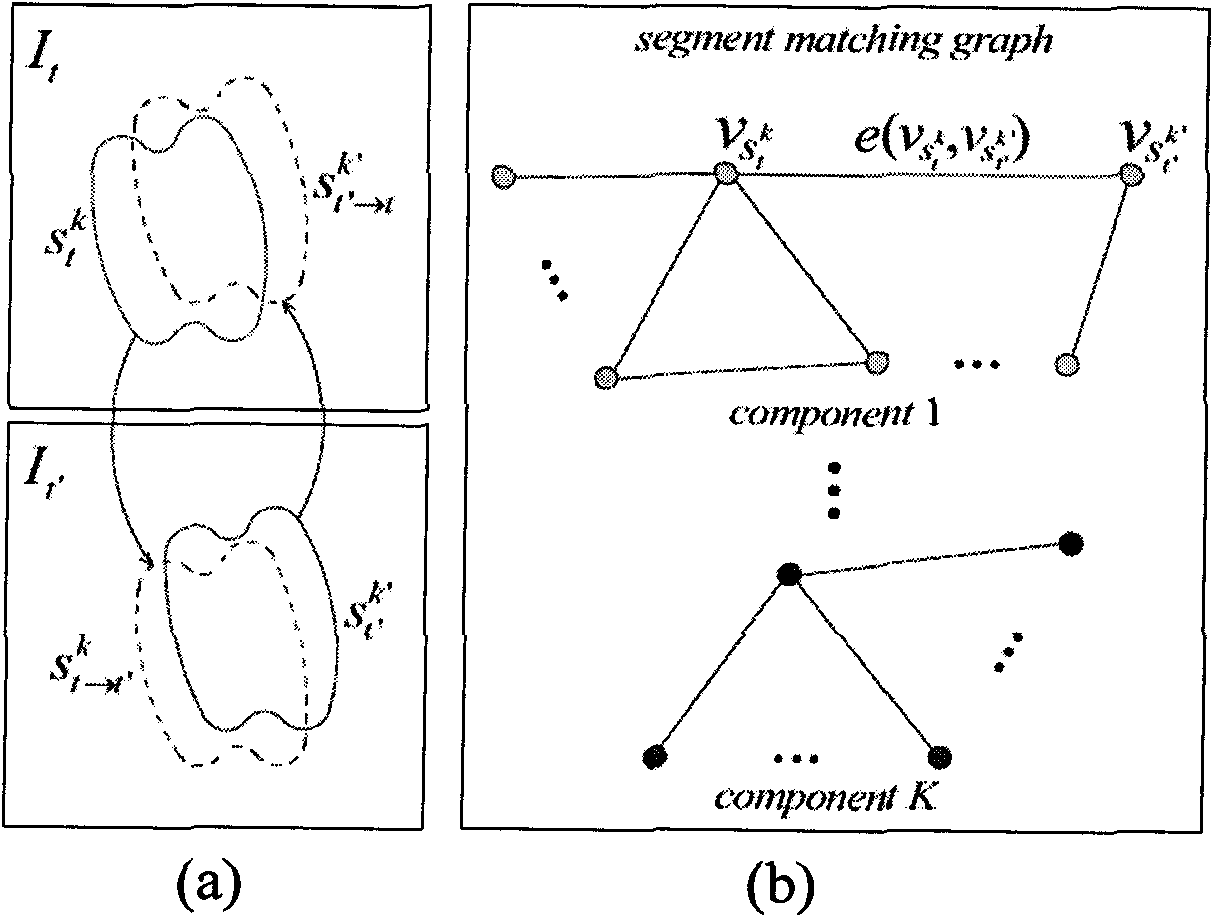

[0042] 4) Starting from the initial segmentation obtained by the Watershed transform and the energy optimization method, match and link segments between different frames, thereby generating segments in the space-time domain;

[0043] 5) Us...
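Step 2) can be illustrated with a minimal sketch. The text does not give the formula for the "probability boundary map", so everything below is an assumption made for illustration: a pinhole camera model with `x_cam = R @ x_world + t`, depth measured along the camera z-axis, nearest-pixel lookup, no occlusion handling, and a map defined as the fraction of frames in which a pixel's reprojection lands on a segmentation boundary. The names `boundary_mask`, `reproject`, and `probability_boundary_map` are hypothetical, and the per-frame label images are assumed to come from the Mean-shift segmentation of step 1).

```python
import numpy as np

def boundary_mask(labels):
    """Mark pixels whose label differs from the right or lower neighbour."""
    b = np.zeros(labels.shape, dtype=bool)
    b[:, :-1] |= labels[:, :-1] != labels[:, 1:]
    b[:-1, :] |= labels[:-1, :] != labels[1:, :]
    return b

def reproject(depth, K_src, R_src, t_src, K_dst, R_dst, t_dst):
    """Map every source pixel to (u, v, z) in the destination view.

    Assumed convention: x_cam = R @ x_world + t, depth along the camera z-axis.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    pix = np.stack([u, v, np.ones_like(u)], axis=-1).reshape(-1, 3).T.astype(float)
    cam = (np.linalg.inv(K_src) @ pix) * depth.reshape(1, -1)   # back-project
    world = R_src.T @ (cam - t_src.reshape(3, 1))               # to world frame
    dst = K_dst @ (R_dst @ world + t_dst.reshape(3, 1))         # project
    z = dst[2]
    with np.errstate(divide="ignore", invalid="ignore"):
        uv = dst[:2] / z
    return uv[0].reshape(h, w), uv[1].reshape(h, w), z.reshape(h, w)

def probability_boundary_map(ref, labels, depths, cams):
    """Fraction of frames in which each pixel of frame `ref` hits a boundary.

    labels: list of per-frame integer label images
    depths: list of per-frame depth maps
    cams:   list of (K, R, t) tuples, one per frame
    """
    h, w = labels[ref].shape
    hits = np.zeros((h, w))
    votes = np.zeros((h, w))
    K_r, R_r, t_r = cams[ref]
    for i, lab in enumerate(labels):
        K_i, R_i, t_i = cams[i]
        u, v, z = reproject(depths[ref], K_r, R_r, t_r, K_i, R_i, t_i)
        ok = np.isfinite(u) & np.isfinite(v) & (z > 0)
        # Clip before casting so far-off projections cannot overflow the int cast.
        ui = np.rint(np.clip(np.where(ok, u, -1), -1, w)).astype(int)
        vi = np.rint(np.clip(np.where(ok, v, -1), -1, h)).astype(int)
        valid = ok & (ui >= 0) & (ui < w) & (vi >= 0) & (vi < h)
        b = boundary_mask(lab)
        hits[valid] += b[vi[valid], ui[valid]]
        votes[valid] += 1
    return np.divide(hits, votes, out=np.zeros_like(hits), where=votes > 0)
```

Under these assumptions, a boundary that appears in most frames after reprojection receives a value near 1, while a boundary that Mean-shift produces in only a few frames receives a low value. A map of this kind could then serve as the input to the Watershed transform in step 3).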
