66 results about "Temporal consistency" patented technology

Efficacy Topic

Property

Owner

Technical Advancement

Application Domain

Technology Topic

Technology Field Word

Patent Country/Region

Patent Type

Patent Status

Application Year

Inventor

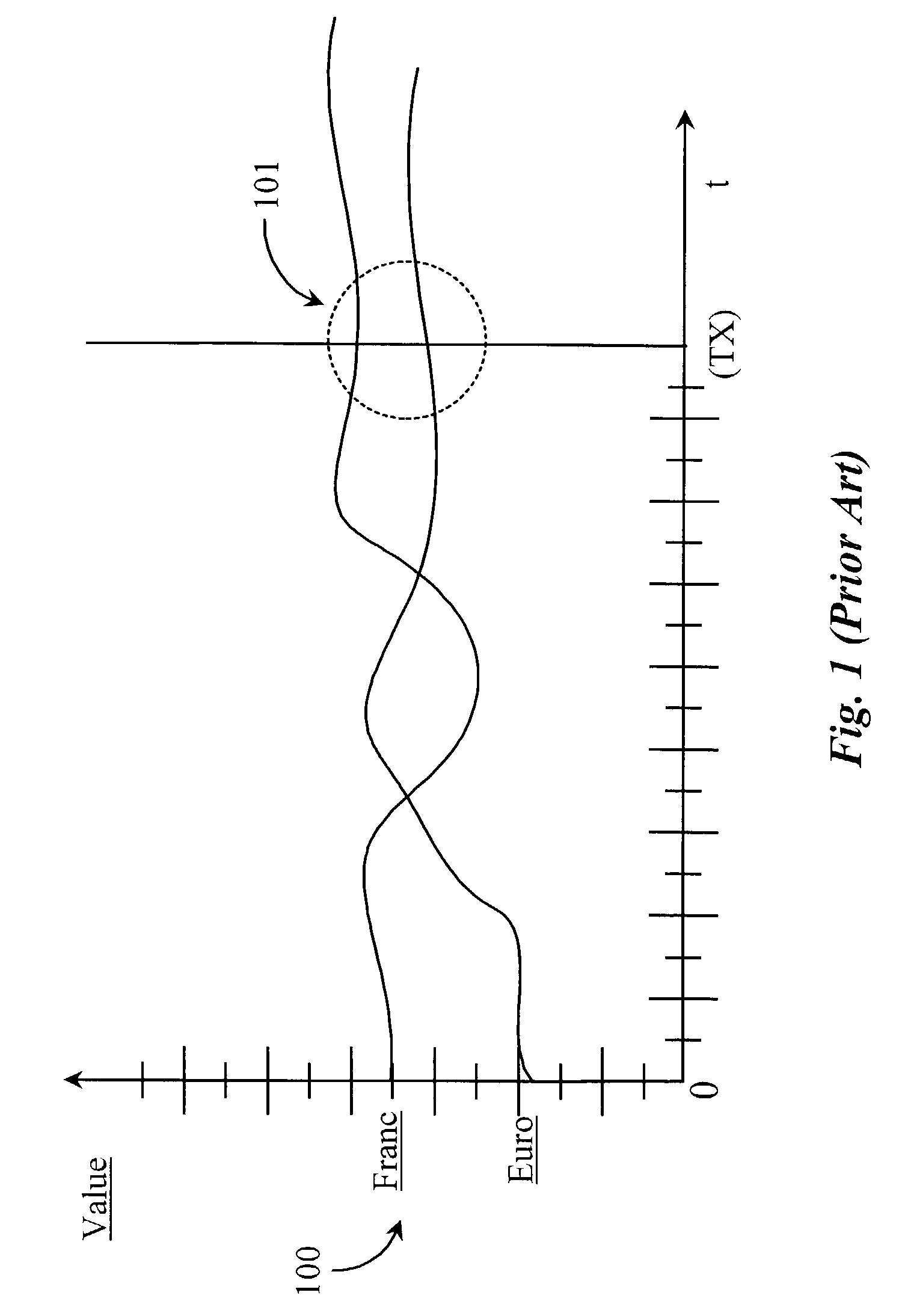

Temporal consistency is the consistency that must be maintained between the actual state of the environment (the controlled system’s state) and the state reflected by the contents of the database (the state perceived by the controlling system).
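One common way to make this definition operational in real-time databases is to attach a validity interval to each stored value and to treat the value as temporally consistent only while its age stays within that interval. The sketch below assumes that formulation; `Reading`, `validity_s`, and `is_temporally_consistent` are hypothetical names chosen for illustration and do not come from the listing.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """A sensor value stored in the database (hypothetical record layout)."""
    value: float
    sampled_at: float   # time the environment was sampled, in seconds
    validity_s: float   # assumed validity interval for this data item, in seconds


def is_temporally_consistent(reading: Reading, now: float) -> bool:
    """Return True while the stored value is still fresh enough to trust."""
    age = now - reading.sampled_at
    return 0.0 <= age <= reading.validity_s


temperature = Reading(value=71.5, sampled_at=100.0, validity_s=2.0)
print(is_temporally_consistent(temperature, now=101.0))  # sampled 1 s ago
print(is_temporally_consistent(temperature, now=103.5))  # sampled 3.5 s ago
```

A controlling system would typically run such a check before acting on a value and trigger a fresh sample when the check fails.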

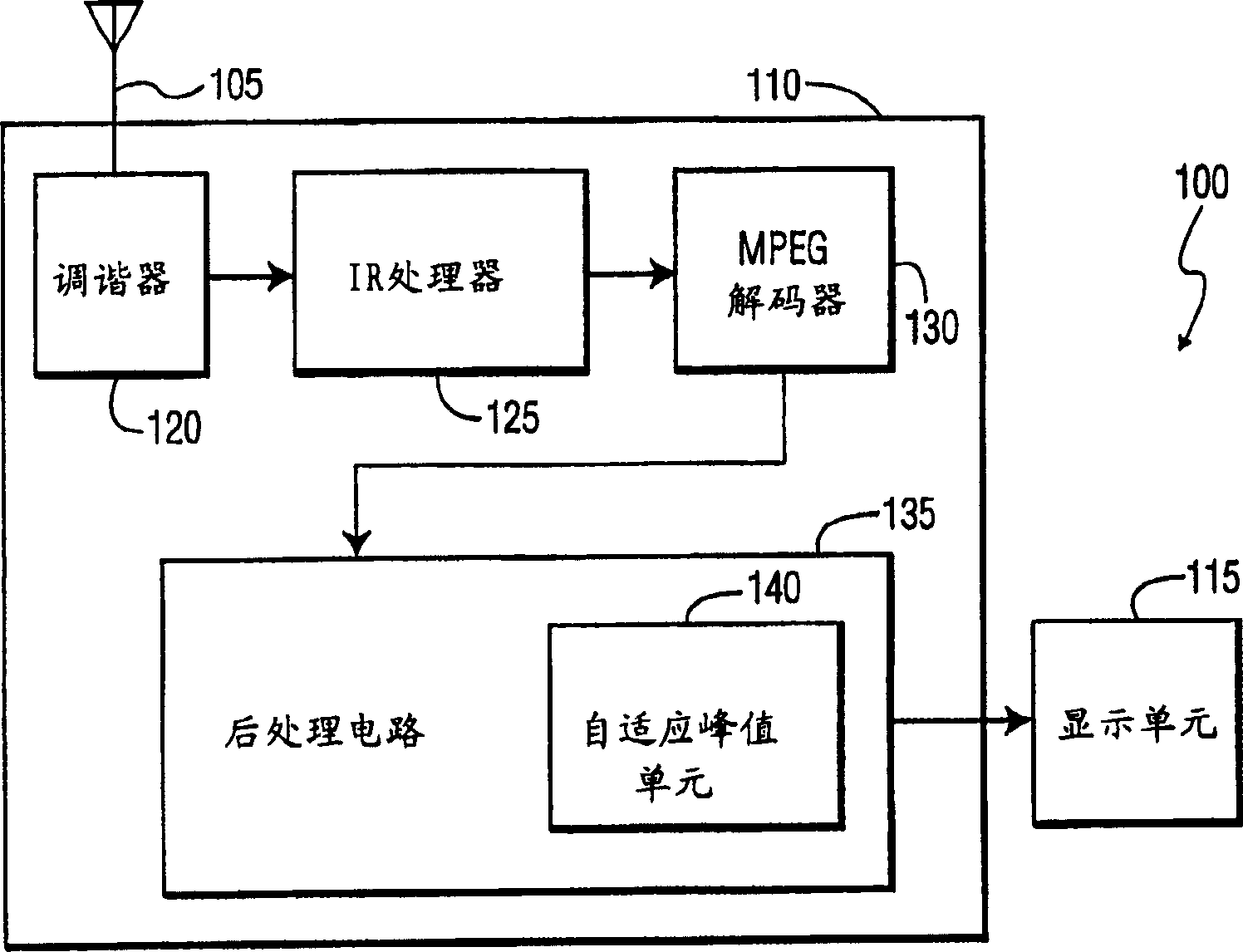

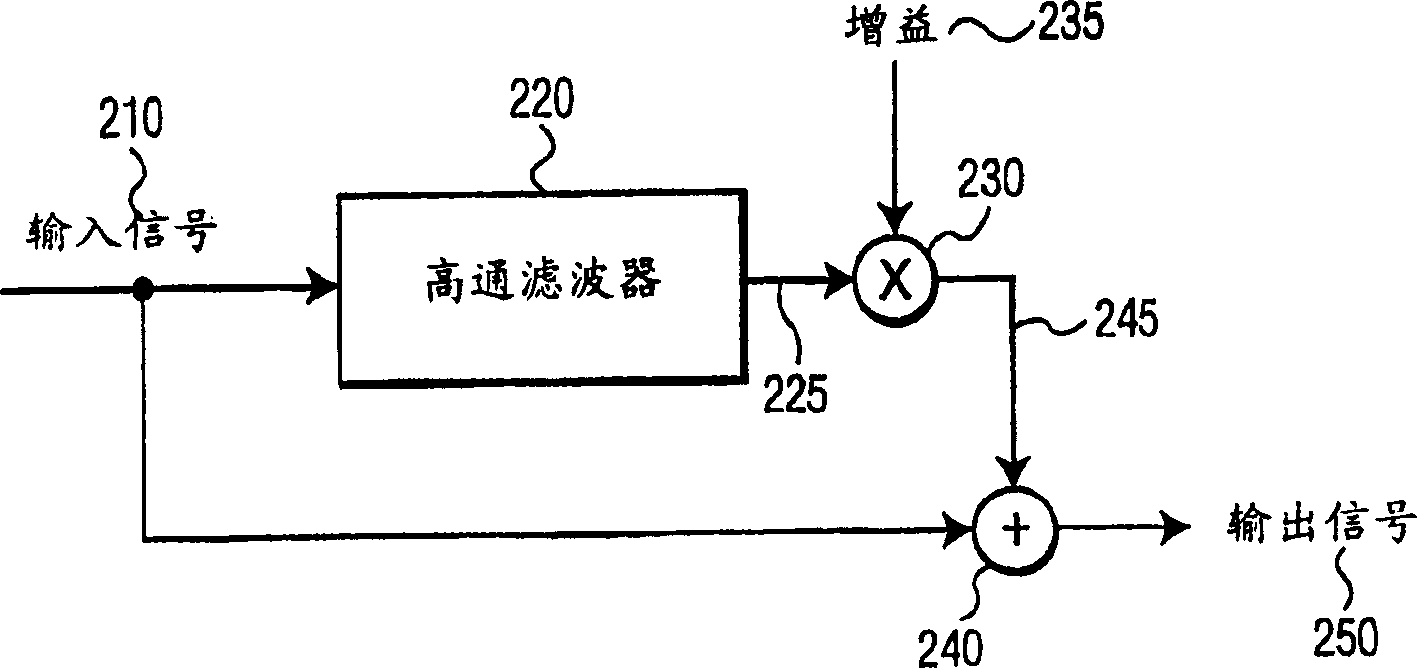

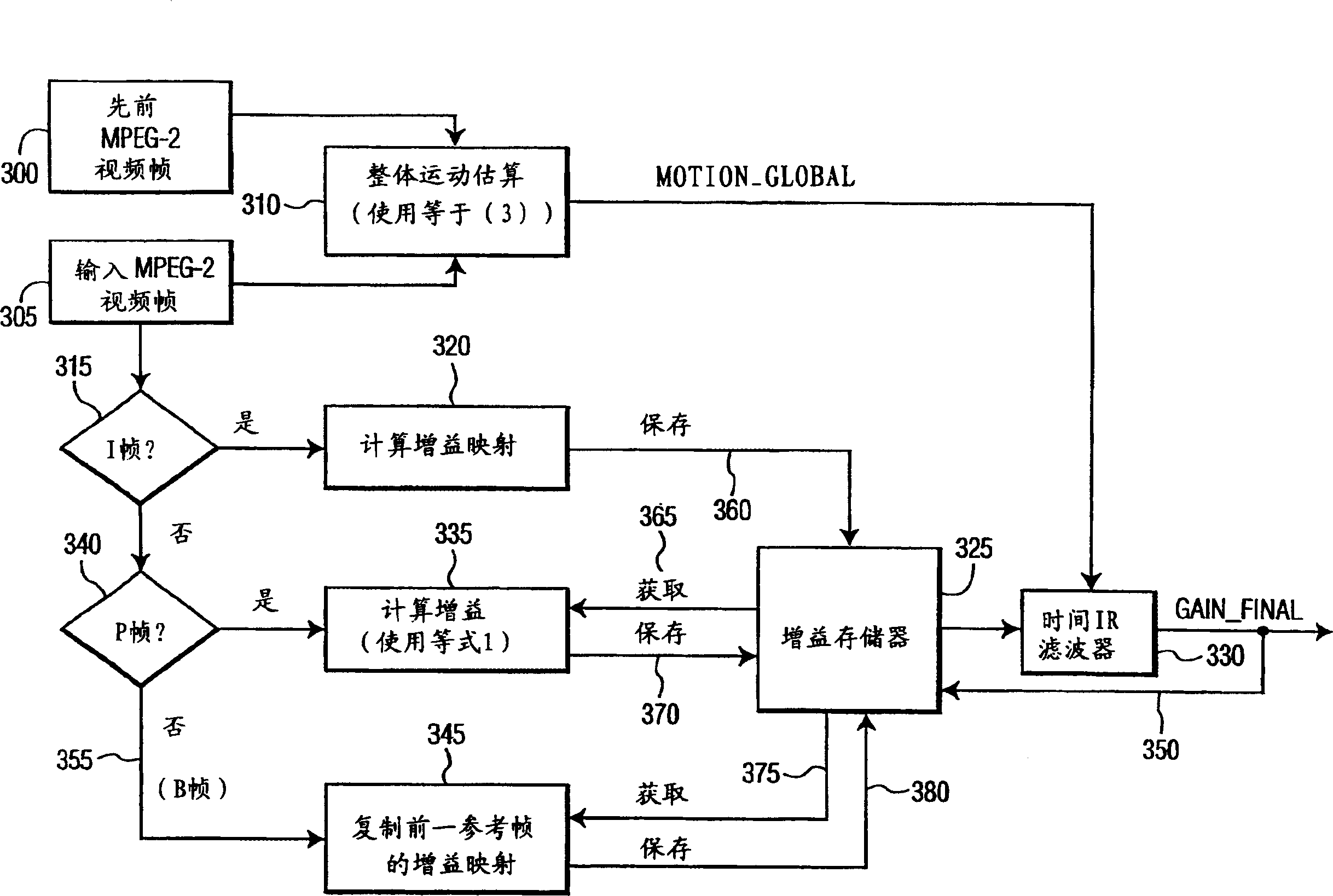

Method of and system for improving temporal consistency in sharpness enhancement for a video signal

Active | US6873657B2 | Improving temporal consistency | High gain | Television system details | Picture reproducers using cathode ray tubes | IIR filtering | Motion vector

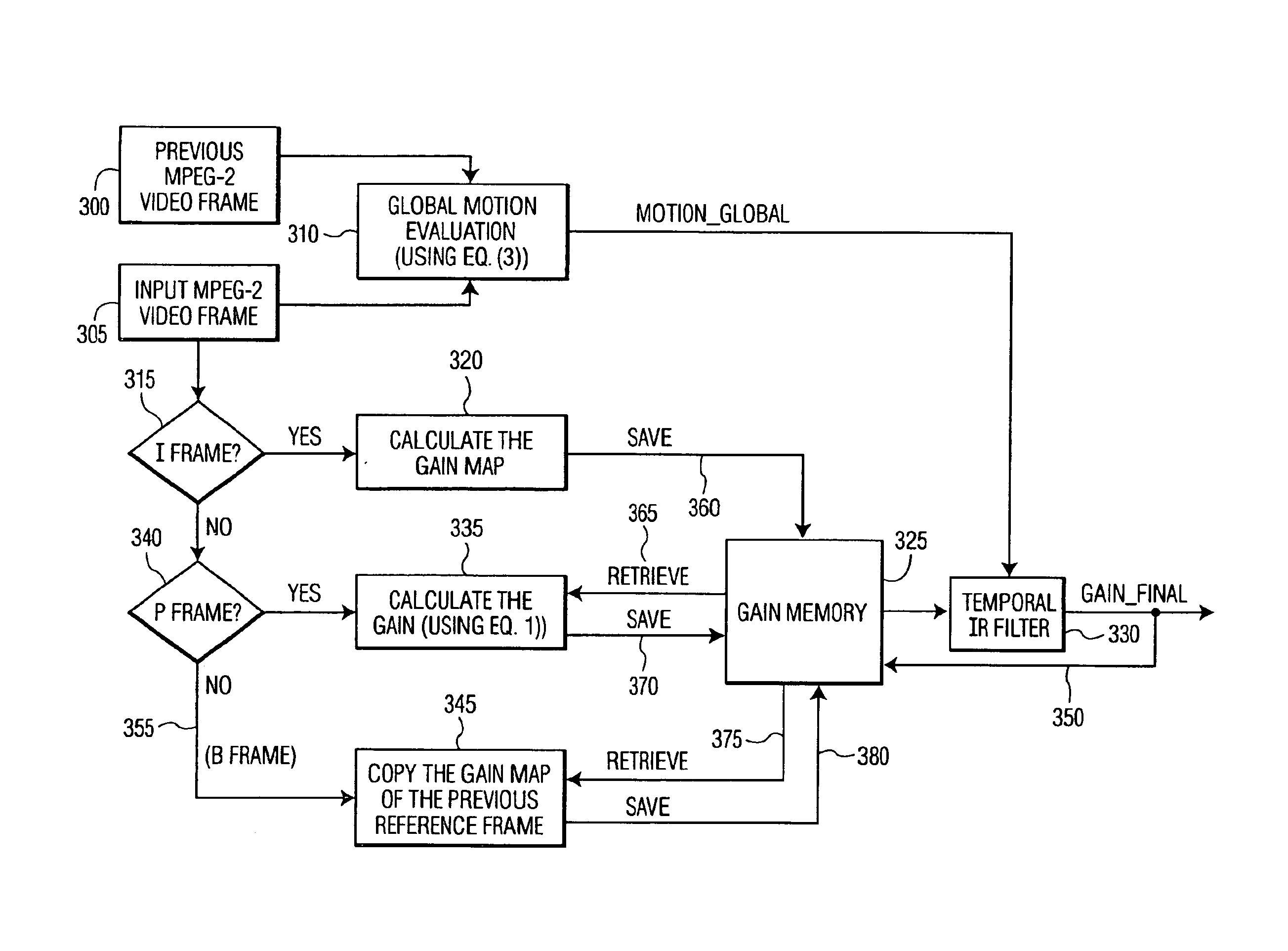

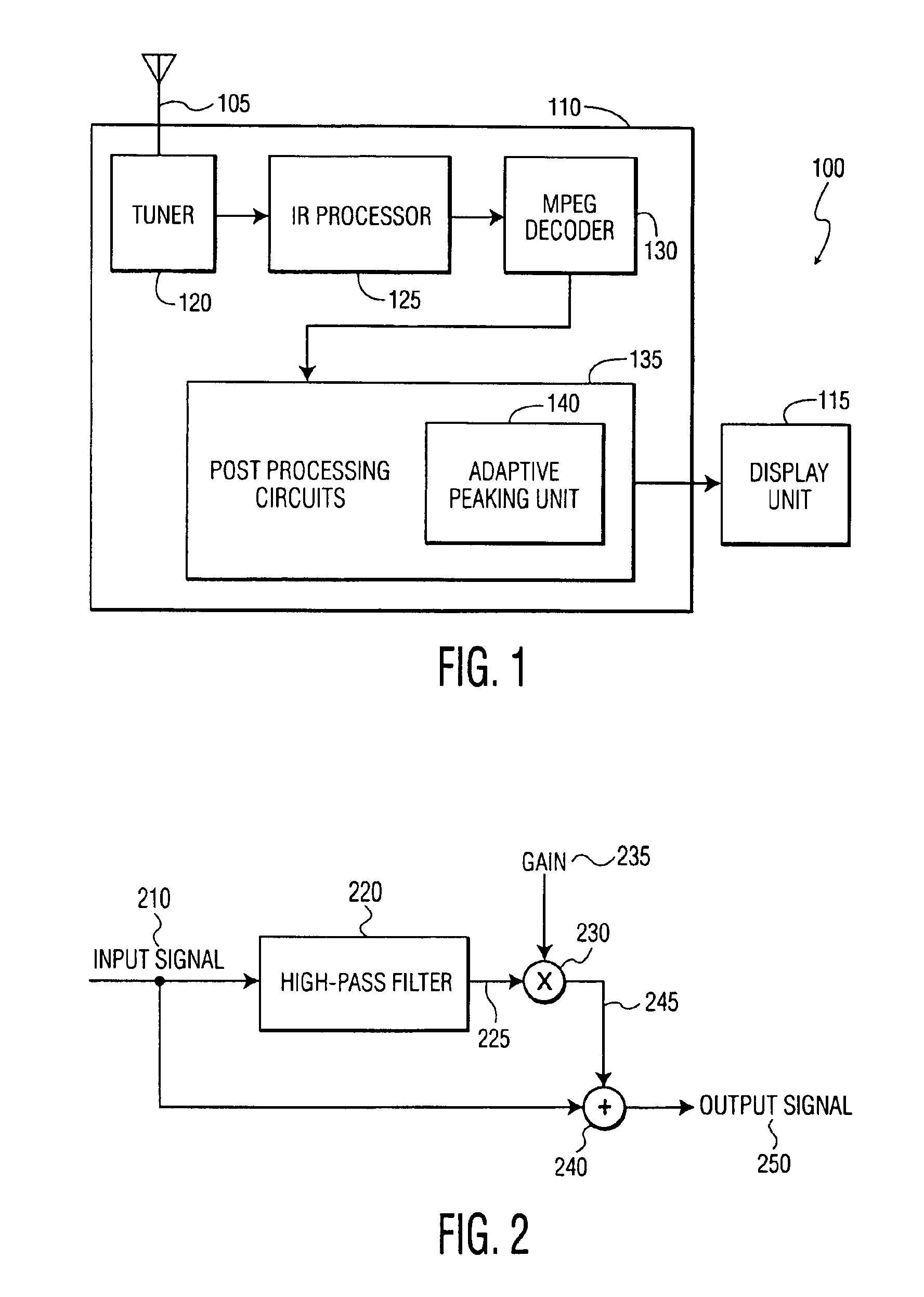

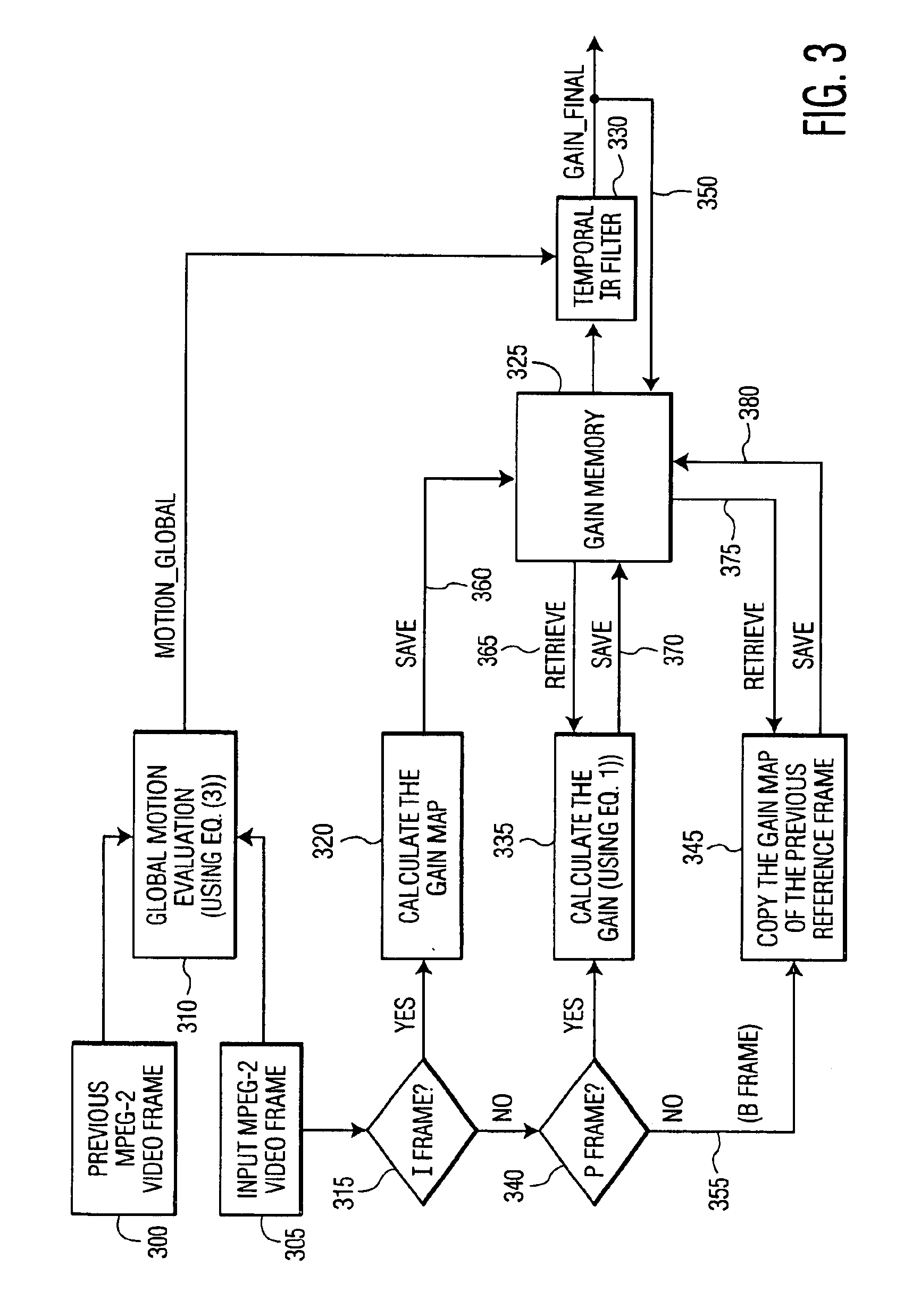

In accordance with the preferred embodiment of the present invention, a method of and system for improving temporal consistency of an enhanced signal representative of at least one frame using a sharpness enhancement algorithm with an enhancement gain are provided. The method comprises the steps of: receiving the enhanced signal comprising at least one frame, obtaining an enhancement gain for each pixel in the frame, retrieving an enhancement gain value of each pixel in a reference frame from a gain memory using motion vectors, identifying if the frame is an I, P or B frame type and determining an updated enhancement gain for an I frame type by calculating a gain map for use in the sharpness enhancement algorithm. The updated enhancement gain of each pixel is equal to enhancement gain previously determined for use in the sharpness enhancement algorithm. In addition, the method includes storing the updated enhancement gain to gain memory, and applying the updated enhancement gain to the sharpness enhancement algorithm to improve temporal consistency of the enhanced signal. The method may further comprise the step of further improving the updated enhancement gain by applying a motion adaptive temporal IIR filter on the updated enhancement gain.
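The final step of the abstract, a motion-adaptive temporal IIR filter on the updated gain, can be pictured as a first-order recursive blend between the new gain and the gain stored for the reference frame. The following is a minimal sketch under stated assumptions: the linear mapping from motion to feedback weight, the weights `k_still` and `k_moving`, and the function name are all hypothetical, and the reference gains are assumed to have been fetched with motion vectors already.

```python
def temporal_iir_gain(current, reference, motion, k_still=0.8, k_moving=0.2):
    """Blend each pixel's new gain with the gain stored for the reference frame.

    current   -- enhancement gains computed for this frame
    reference -- gains fetched from gain memory (assumed already motion compensated)
    motion    -- per-pixel motion measure in [0, 1]; 0 = static, 1 = strong motion
    k_still / k_moving -- assumed feedback weights for static and moving pixels
    """
    filtered = []
    for g_new, g_ref, m in zip(current, reference, motion):
        m = min(max(m, 0.0), 1.0)
        # Static pixels lean on history (less flicker); moving pixels follow the new gain.
        k = k_still + (k_moving - k_still) * m
        filtered.append(k * g_ref + (1.0 - k) * g_new)
    return filtered


gain_memory = [1.0, 1.0, 1.0]
new_gains = [2.0, 2.0, 2.0]
motion = [0.0, 0.5, 1.0]
gain_memory = temporal_iir_gain(new_gains, gain_memory, motion)
print(gain_memory)
```

Writing the result back into `gain_memory` is what makes the filter recursive: each frame's output becomes the next frame's reference.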

Owner:FUNAI ELECTRIC CO LTD

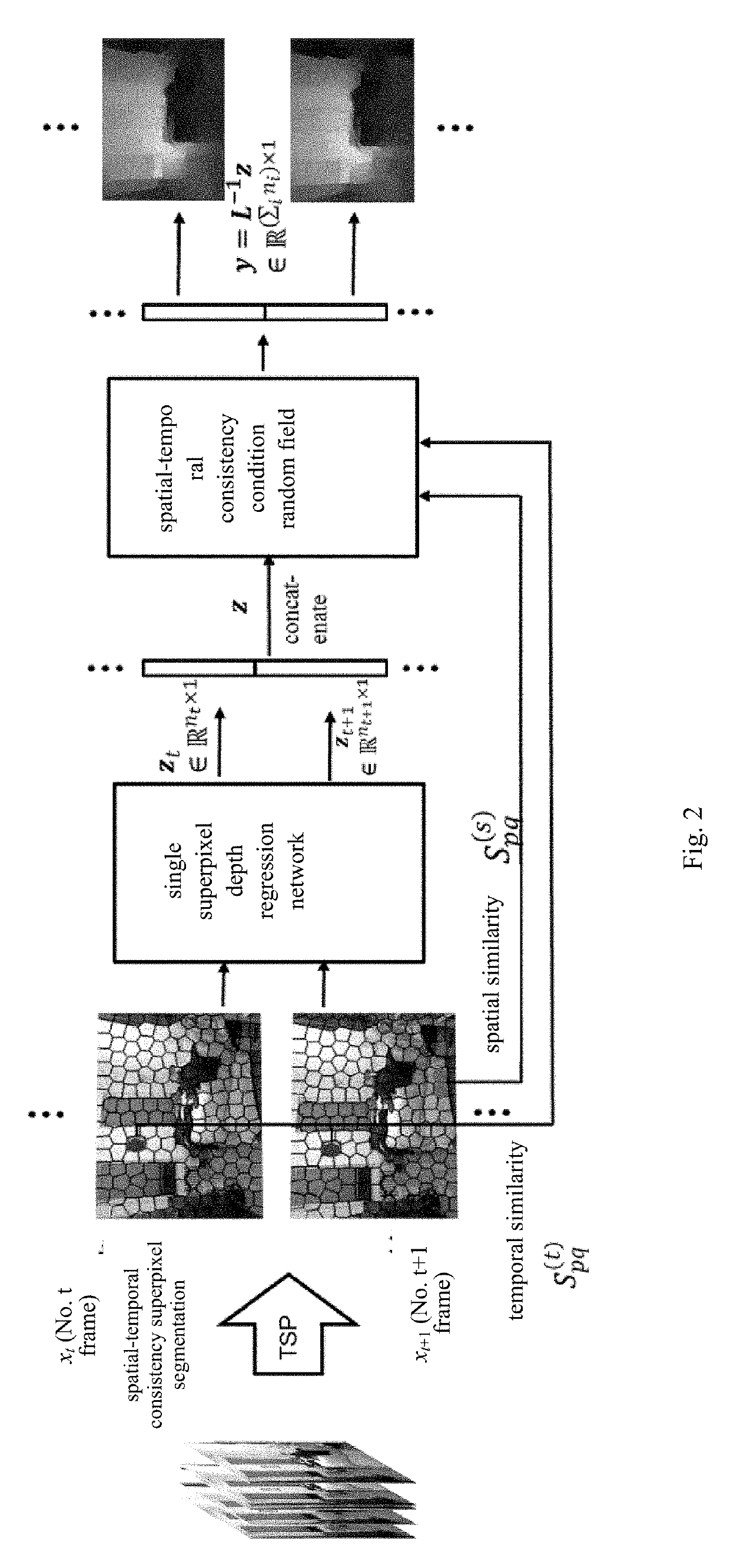

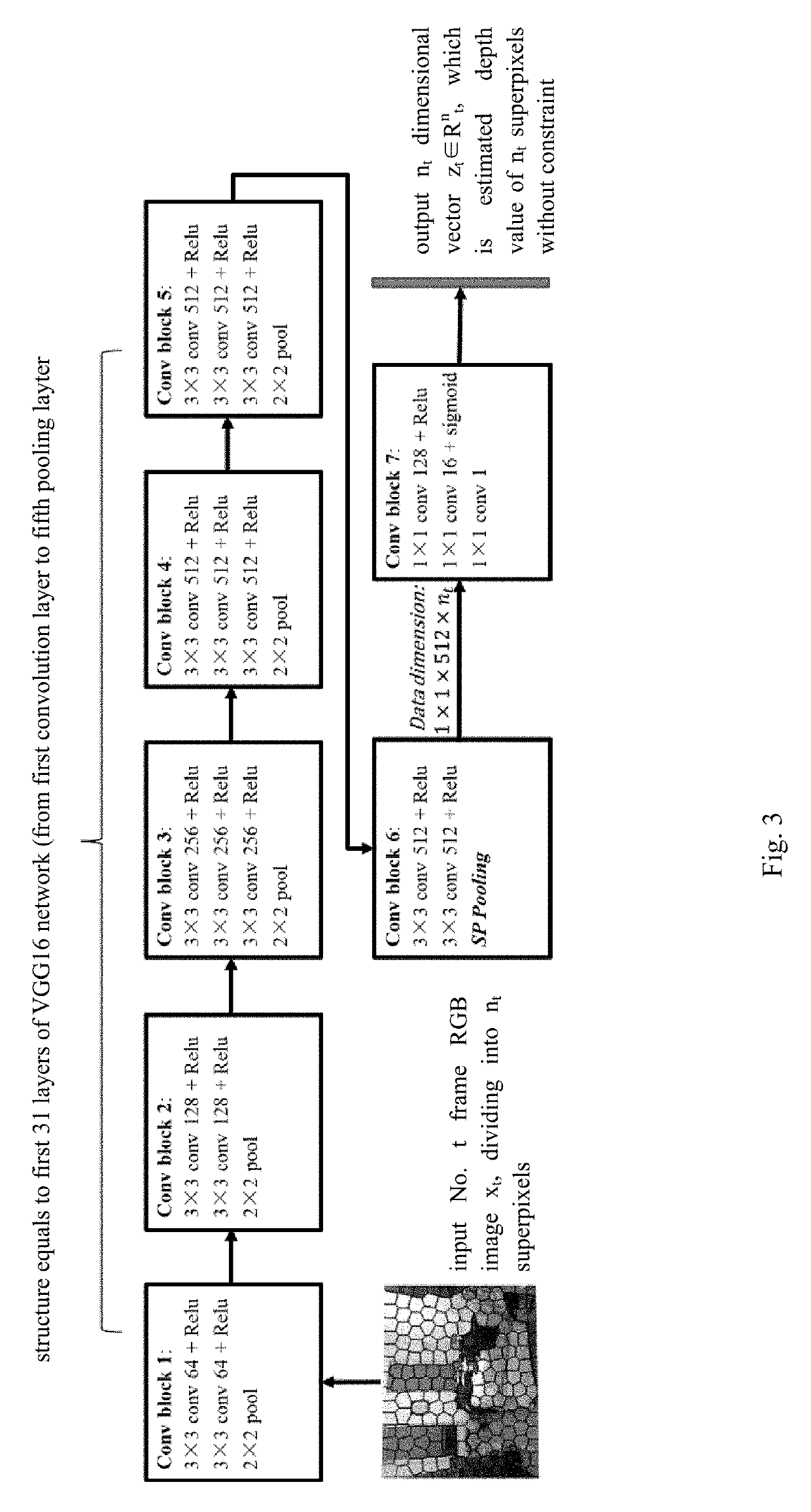

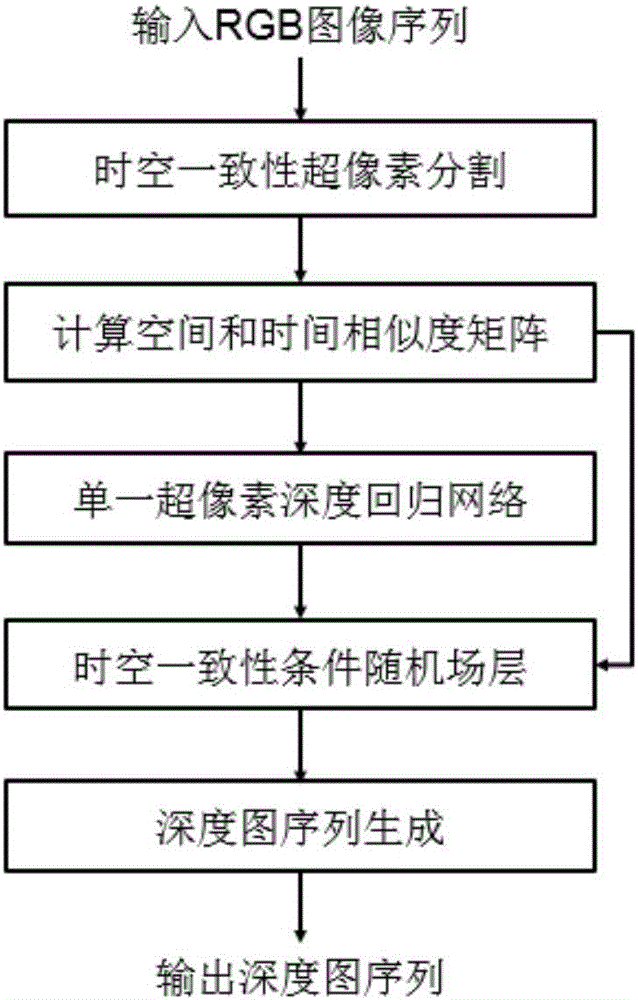

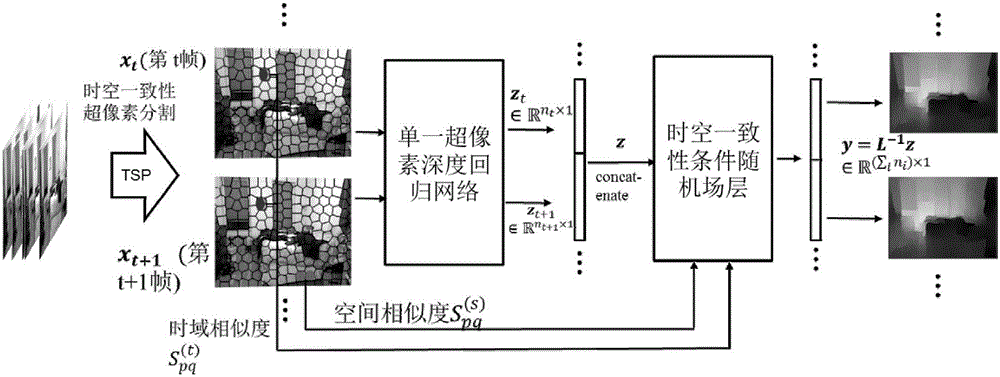

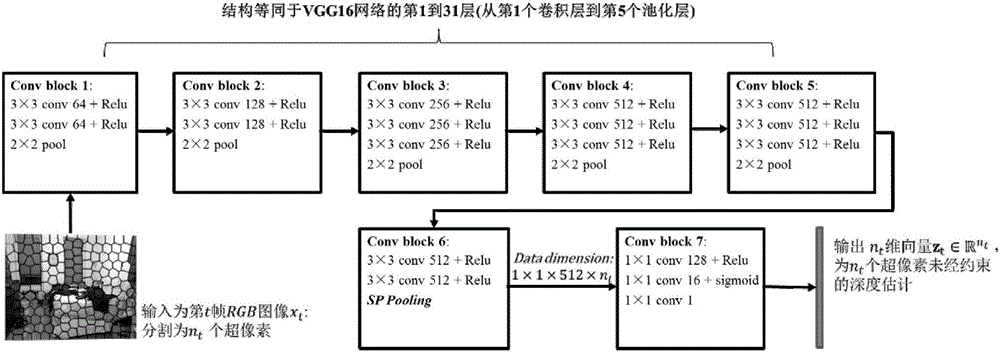

Method for generating spatial-temporally consistent depth map sequences based on convolution neural networks

Active | US20190332942A1 | Improve accuracy | Improve continuity | Image enhancement | Image analysis | Conditional random field | Recovery method

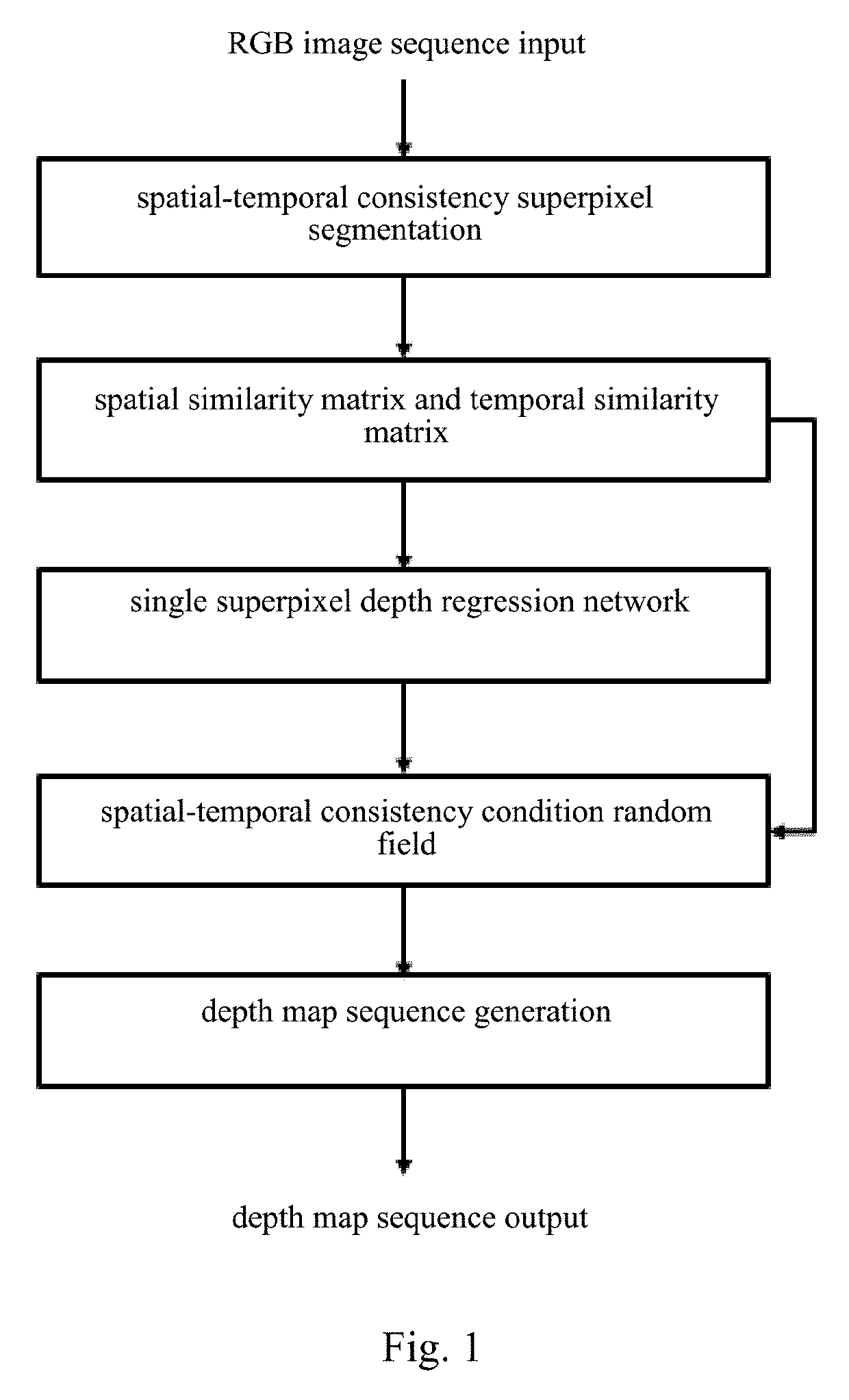

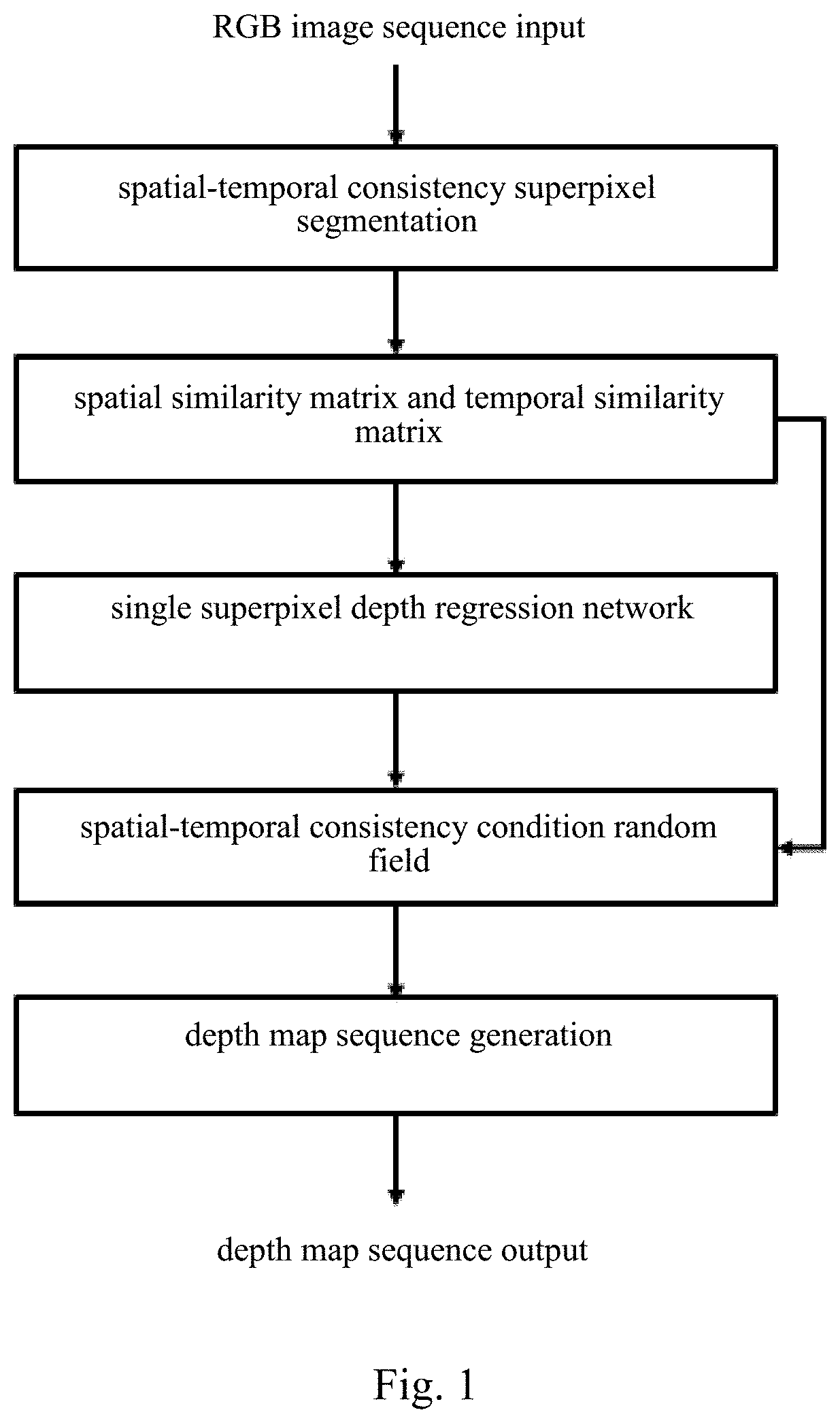

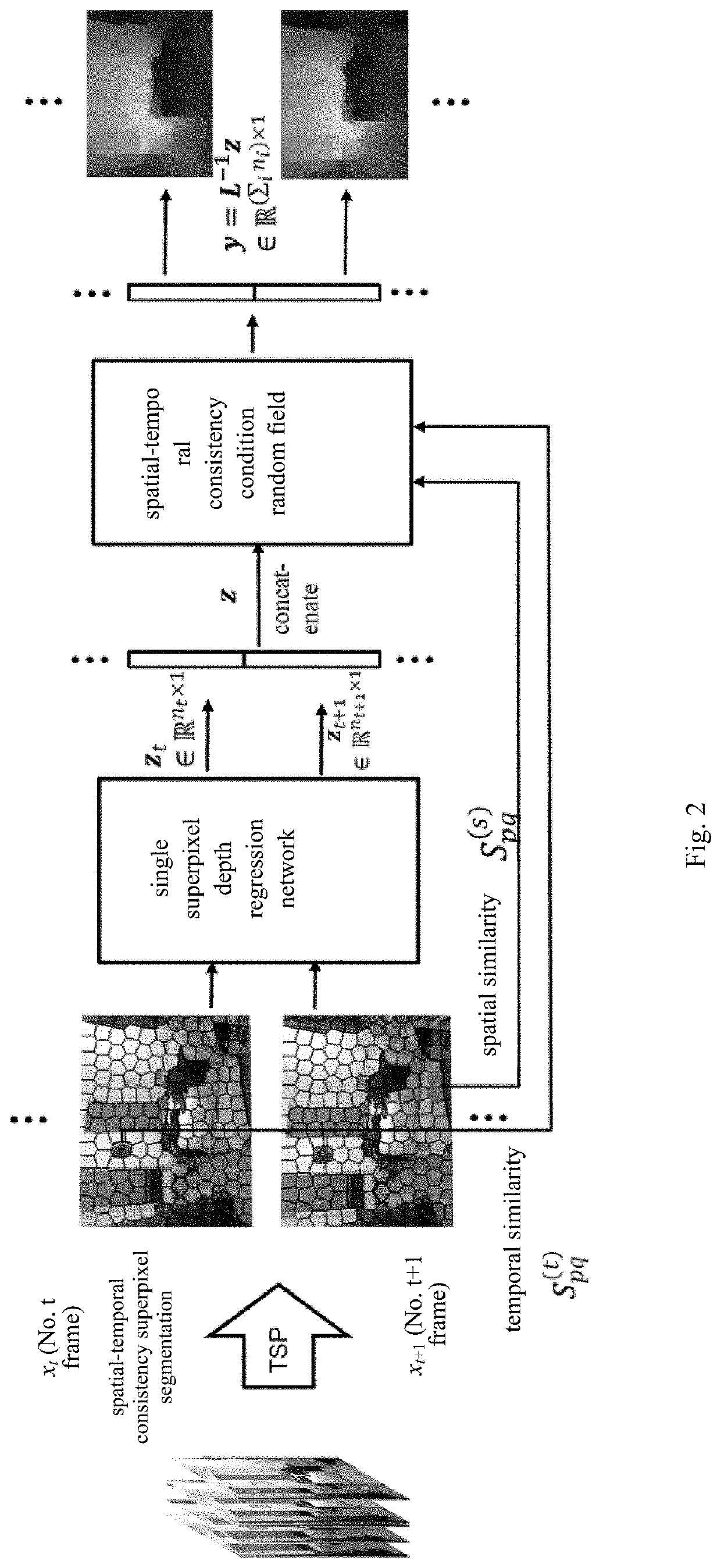

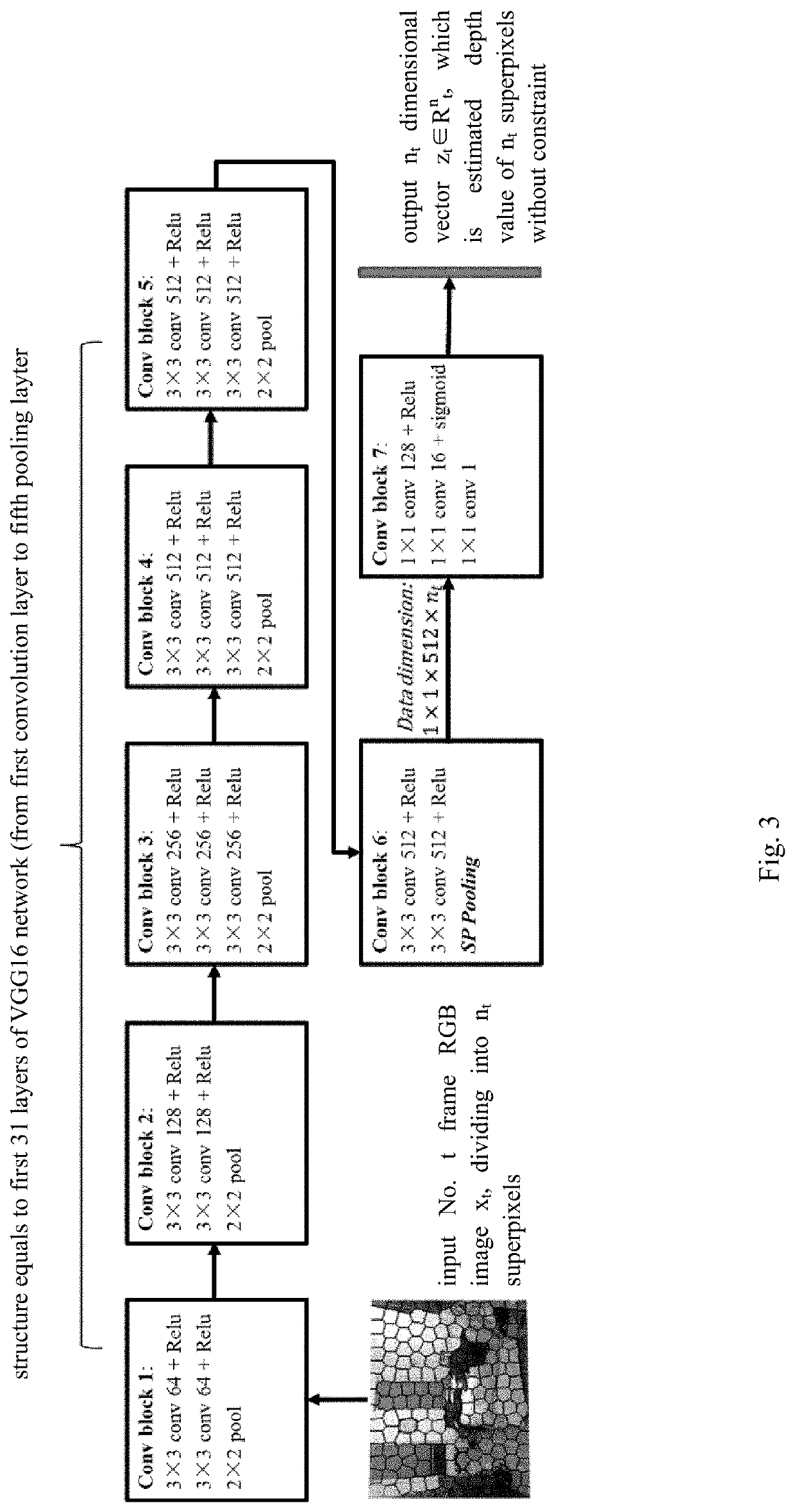

A method for generating spatial-temporally consistent depth map sequences based on convolutional neural networks, for 2D-to-3D conversion of television works, includes the steps of: 1) collecting a training set, wherein each training sample includes a sequence of continuous RGB images and a corresponding depth map sequence; 2) processing each image sequence in the training set with spatial-temporal consistency superpixel segmentation, and establishing a spatial similarity matrix and a temporal similarity matrix; 3) establishing a convolutional neural network including a single-superpixel depth regression network and a spatial-temporal consistency conditional random field loss layer; 4) training the convolutional neural network; and 5) recovering the depth maps of an RGB image sequence of unknown depth through forward propagation with the trained convolutional neural network. The method avoids both the heavy dependence of clue-based depth recovery methods on scene assumptions and the inter-frame discontinuity of depth maps generated by conventional neural networks.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

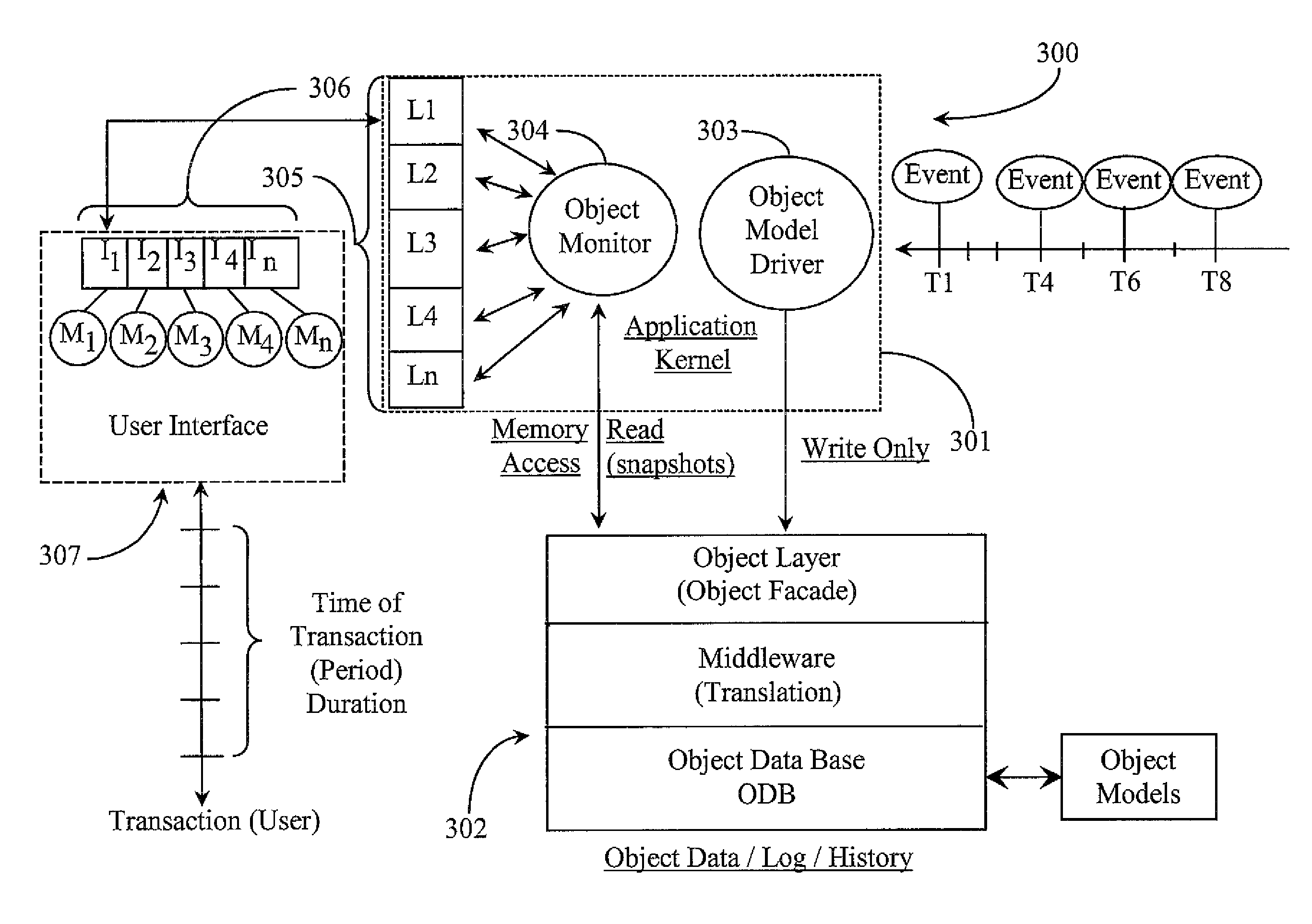

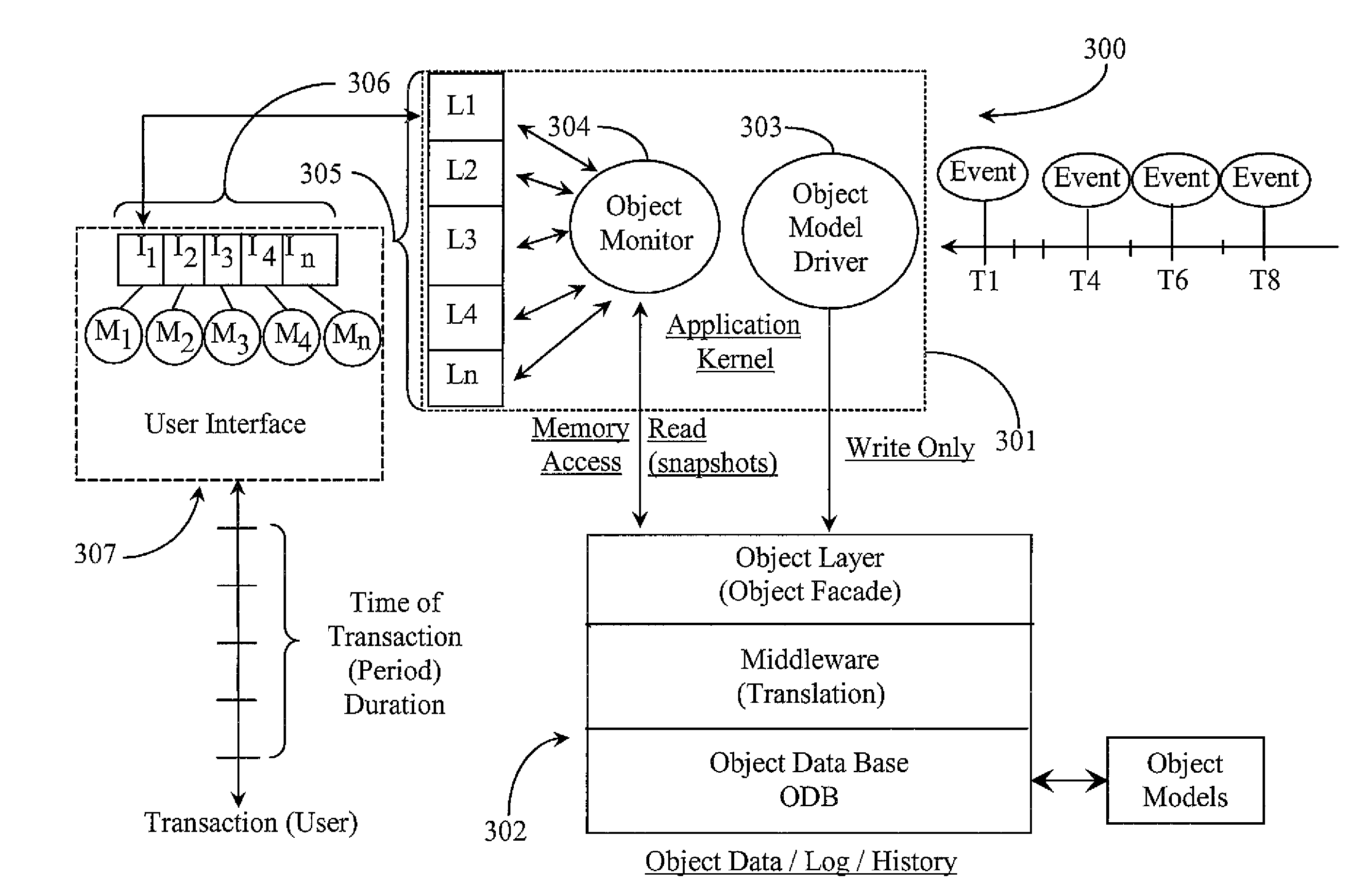

Method for improving temporal consistency and snapshot recency in a monitored real-time software-reporting-application architecture

Active | US7434229B2 | Great customization | Finance | Multiprogramming arrangements | Temporal consistency | Database application

An object-oriented software application is provided for receiving updates that change state of an object model and reporting those updates to requesting users. The application includes a database application for storing data; an object model driver for writing updates into the database; a notification system for notifying about the updates; and, a plurality of external monitors for reading the updates. In a preferred embodiment the object model produces multiple temporal snapshots of itself in co-currency with received events, each snapshot containing associated update information from an associated event and whereupon at the time of occurrence of each snapshot coinciding with an event the notification system notifies the appropriate external monitor or monitors, which in turn access the appropriate snapshot, performs calculations thereupon if required and renders the information accessible to the users.

Owner:GENESYS TELECOMMUNICATIONS LABORATORIES INC

Method for generating spatial-temporal consistency depth map sequence based on convolution neural network

Active | CN106612427A | Avoid jumping between frames | High precision | Image analysis | Stereoscopic systems | Conditional random field | Recovery method

The invention discloses a method for generating a spatial-temporal consistency depth map sequence based on a convolution neural network, which can be used in a film and television work 2D-to-3D technology. The method comprises the following steps: (1) collecting a training set, wherein each training sample in the training set is composed of a continuous RGB image sequence and a corresponding depth map sequence; (2) carrying out spatial-temporal consistency super pixel segmentation on each image sequence in the training set, and constructing a spatial similarity matrix and a temporal similarity matrix; (3) constructing a convolution neural network composed of a single super pixel depth regression network and a spatial-temporal consistency conditional random field loss layer; (4) training the convolution neural network; and (5) for an RGB image sequence of unknown depth, using the trained neural network to recover a corresponding depth map sequence through forward propagation. The problem that a depth recovery method based on clues relies too much on the scene hypothesis and the problem that the frames of a depth map generated by the existing depth recovery method based on a convolution neural network are discontinuous are avoided.

Owner:ZHEJIANG GONGSHANG UNIVERSITY

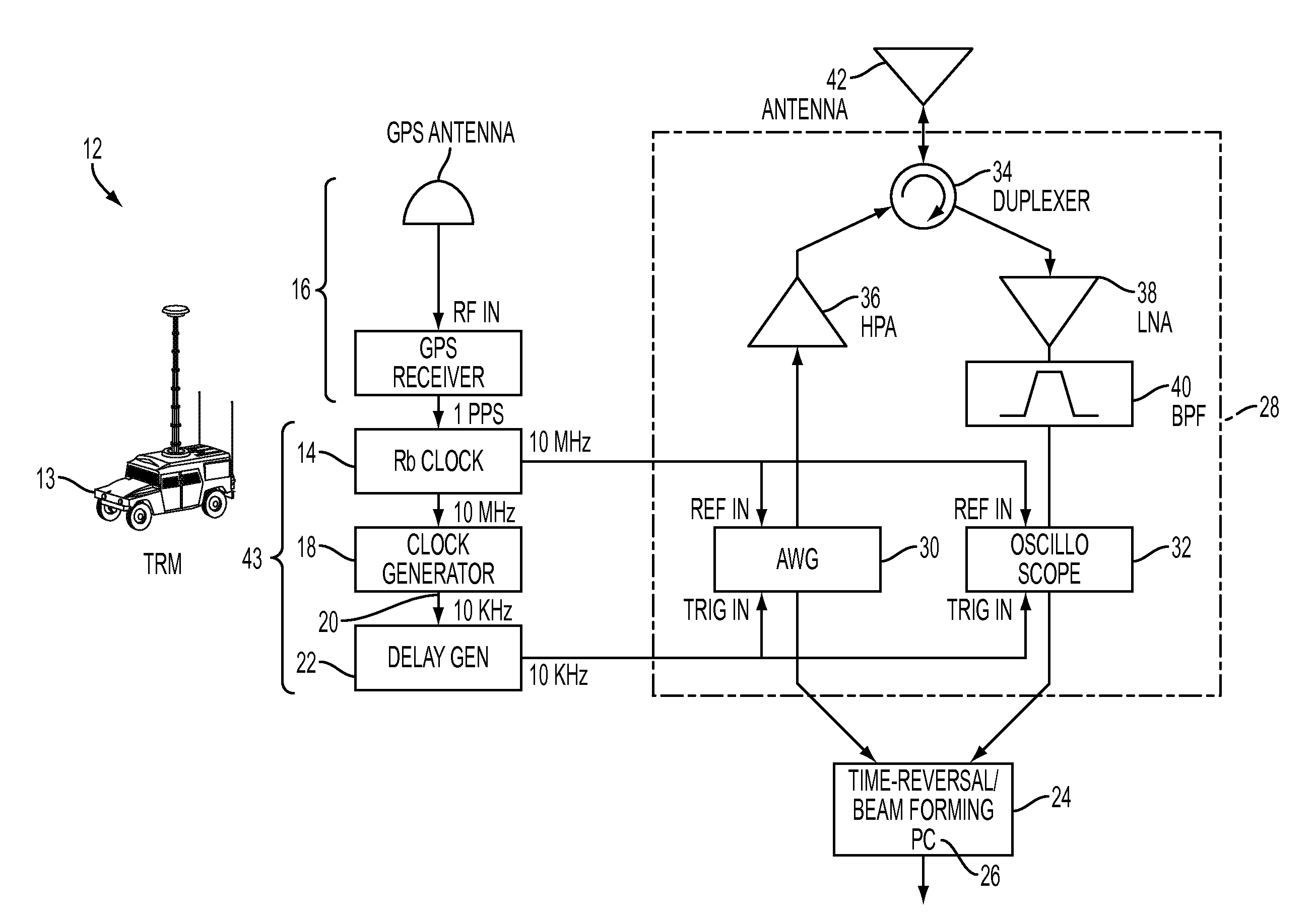

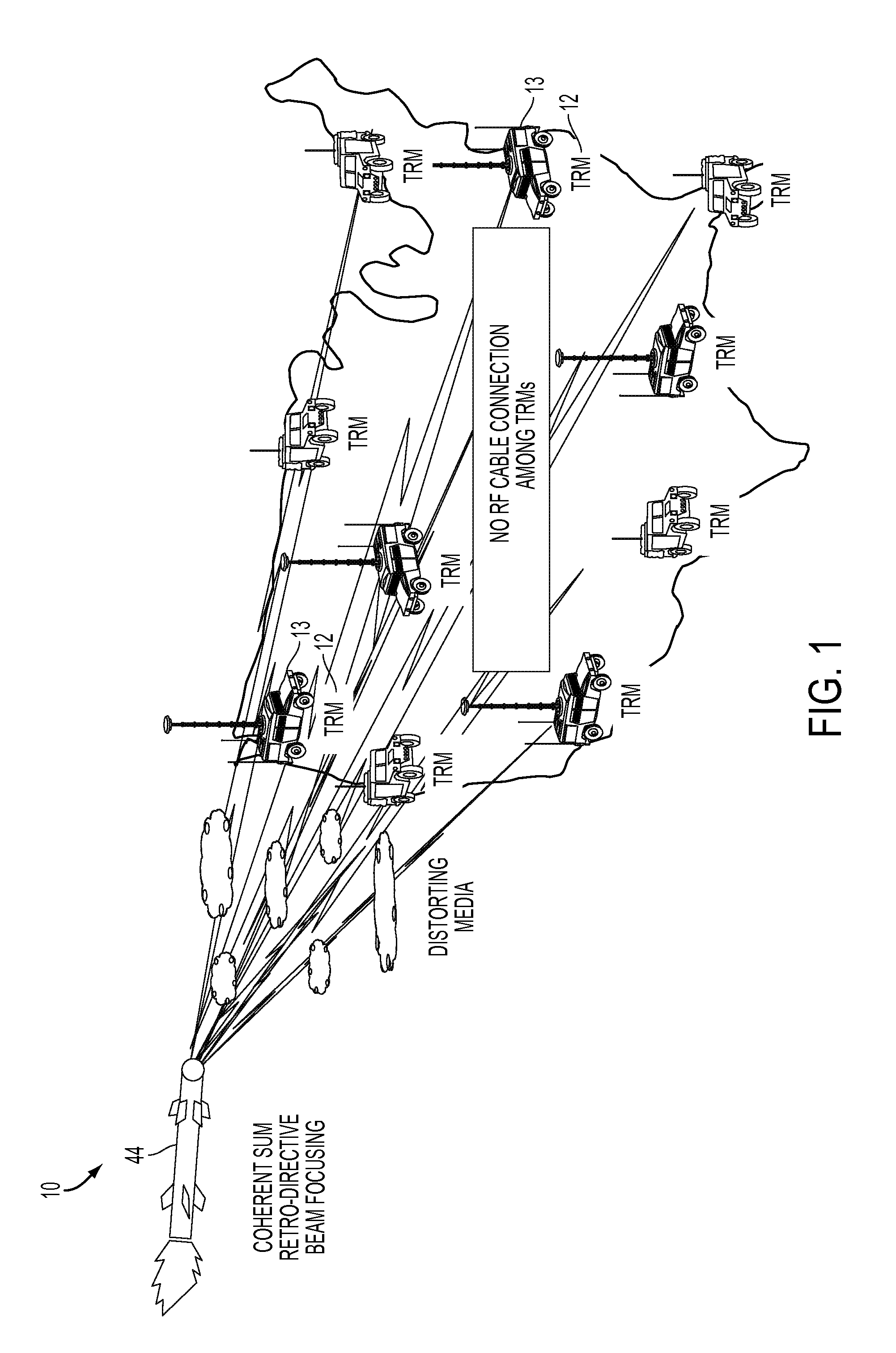

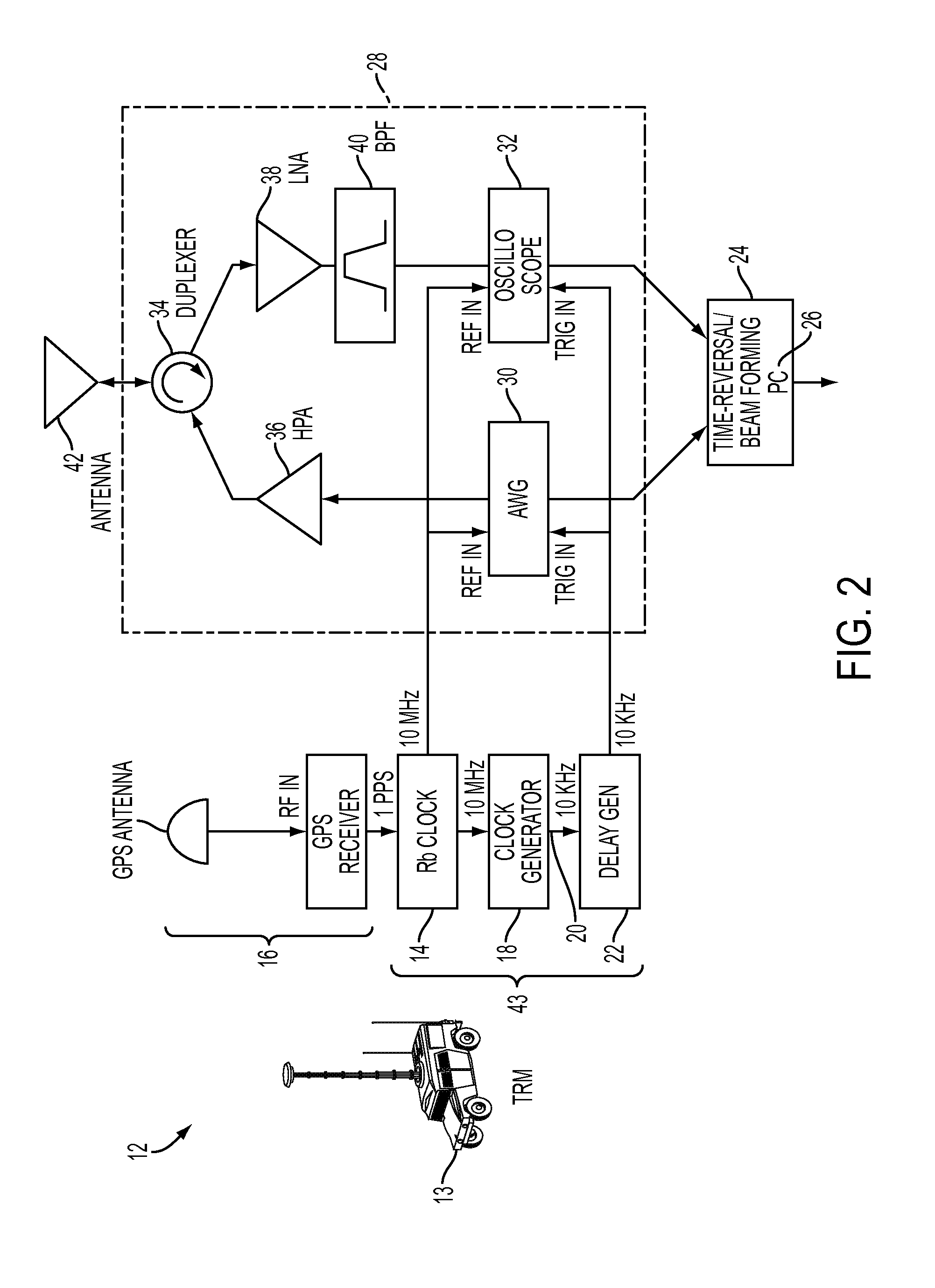

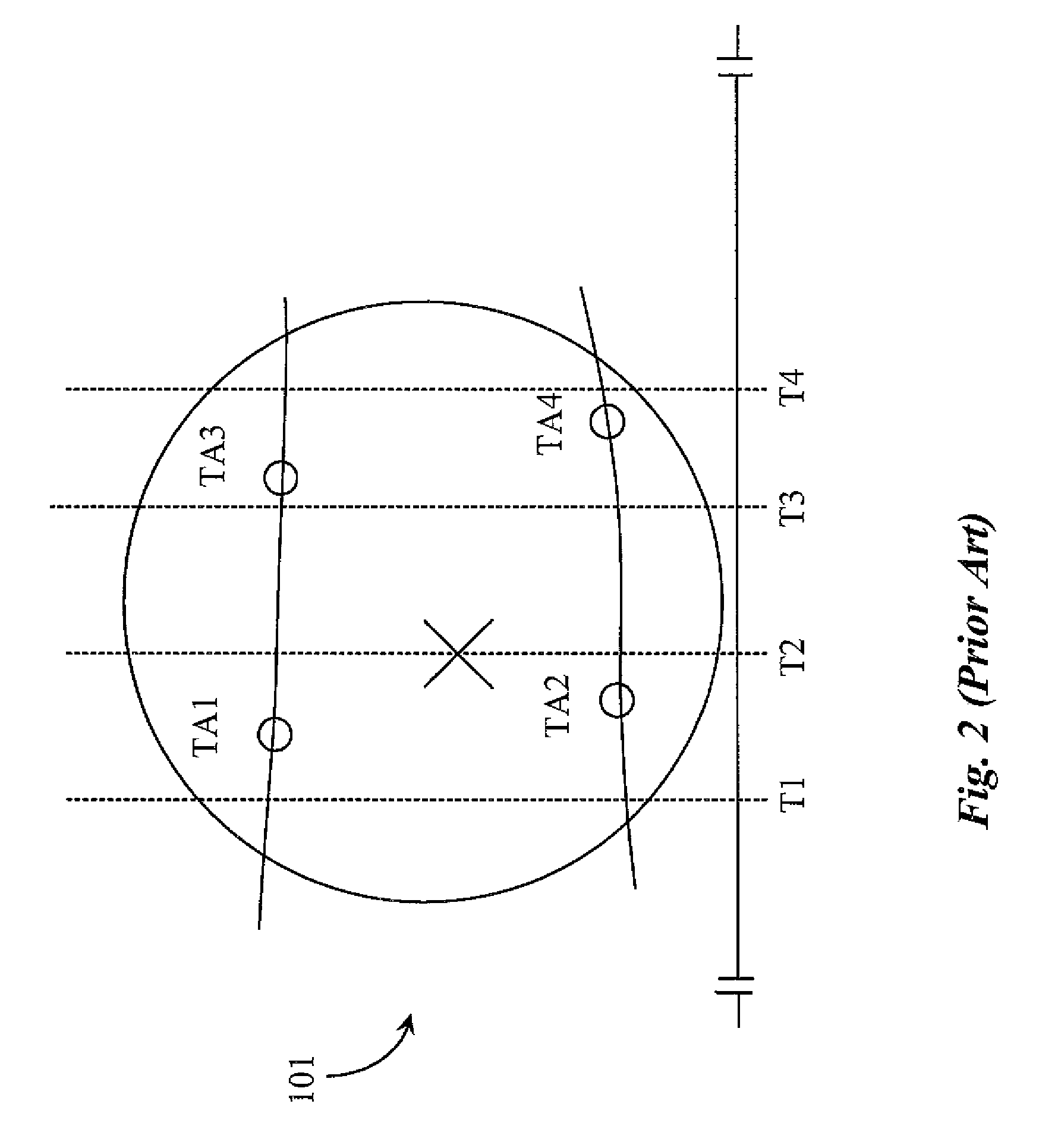

Distributed time-reversal mirror array

Inactive | US20120127020A1 | Efficient target tracking | Quick installation | Radio wave reradiation/reflection | Transceiver | Program instruction

A distributed time reversal mirror array (DTRMA) system includes a plurality of independent, sparsely distributed time reversal mirrors (TRMs). Each of the TRMs includes an antenna; a transceiver connected to the antenna for transmitting a signal toward a target, for receiving a return, reflected signal from the target, and for retransmitting a time-reversed signal toward the target; means for phase-locking and for maintaining spatial and temporal coherences between the TRMs; and a computer including a machine-readable storage media having programmed instructions stored thereon for computing and generating the time-reversed retransmitted signal, thereby providing a phased array functionality for the DTRMA while minimizing distortion from external sources. The DTRMA is capable of operating in an autonomous, unattended, and passive state, owing to the time-reversal's self-focusing feature. The beam may be sharply focused on the target due to the coherently synthesized extended aperture over the entire array.

Owner:THE UNITED STATES OF AMERICA AS REPRESENTED BY THE SECRETARY OF THE NAVY

Method for Improving Temporal Consistency and Snapshot Recency in a Monitored Real-Time Software-Reporting-Application Architecture

Inactive | US20080320496A1 | Great customization | Finance | Multiprogramming arrangements | Temporal consistency | Database application

An object-oriented software application is provided for receiving updates that change state of an object model and reporting those updates to requesting users. The application includes a database application for storing data; an object model driver for writing updates into the database; a notification system for notifying about the updates; and, a plurality of external monitors for reading the updates. In a preferred embodiment the object model produces multiple temporal snapshots of itself in co-currency with received events, each snapshot containing associated update information from an associated event and whereupon at the time of occurrence of each snapshot coinciding with an event the notification system notifies the appropriate external monitor or monitors, which in turn access the appropriate snapshot, performs calculations thereupon if required and renders the information accessible to the users.

Owner:GENESYS TELECOMMUNICATIONS LABORATORIES INC

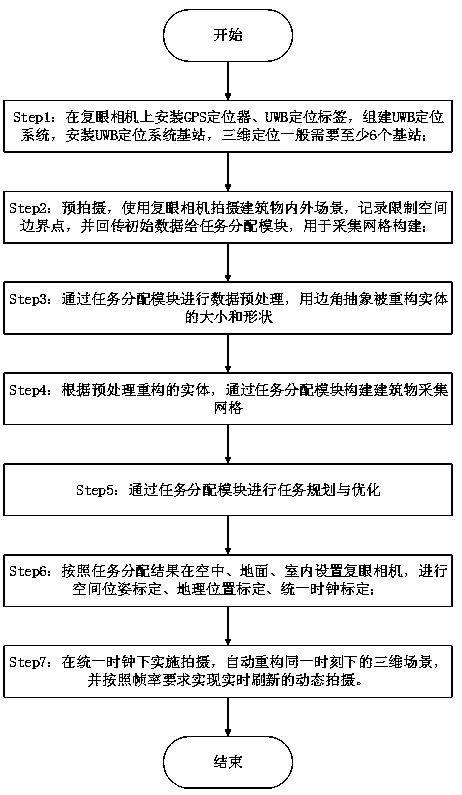

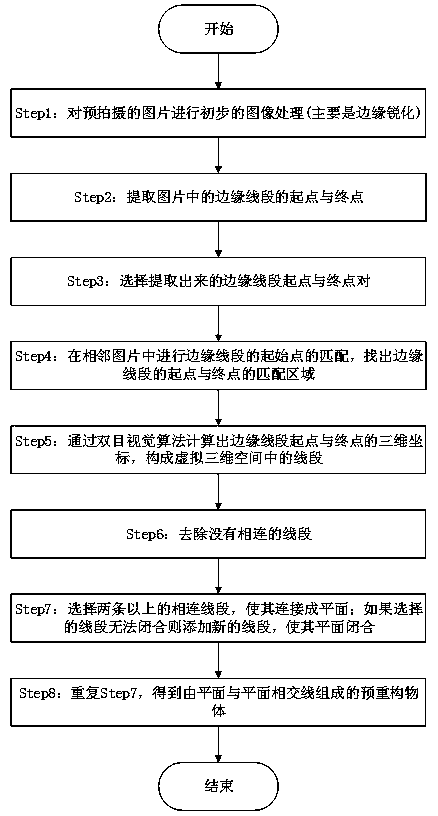

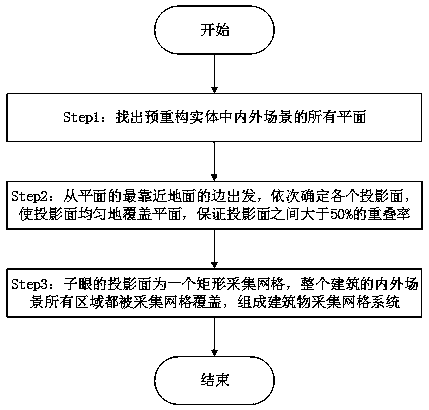

Virtual compound-eye camera system for acquiring three-dimensional scene of building satisfying time-space consistency and working method thereof

Active | CN109118585A | Spatiotemporal consistency | Realize dynamic 3D scene acquisition | Television system details | Image enhancement | Computer graphics (images) | Data acquisition

The invention relates to the field of three-dimensional digital scene construction and provides a virtual compound-eye camera system, and a working method thereof, for acquiring a three-dimensional scene of a building while satisfying spatio-temporal consistency. The system includes a data acquisition module, a positioning module, and a task assignment module. The data acquisition module is composed of all compound-eye cameras facing the building object; these cameras are programmed according to a predetermined building acquisition grid and cooperate with each other to form a complete, systematic compound-eye system, called a virtual compound eye. The virtual compound-eye camera system and its working method enable real-time shooting and real-time production with consistent time and space, and yield a more accurate and realistic dynamic three-dimensional virtual scene.

Owner:WUHAN UNIV OF TECH

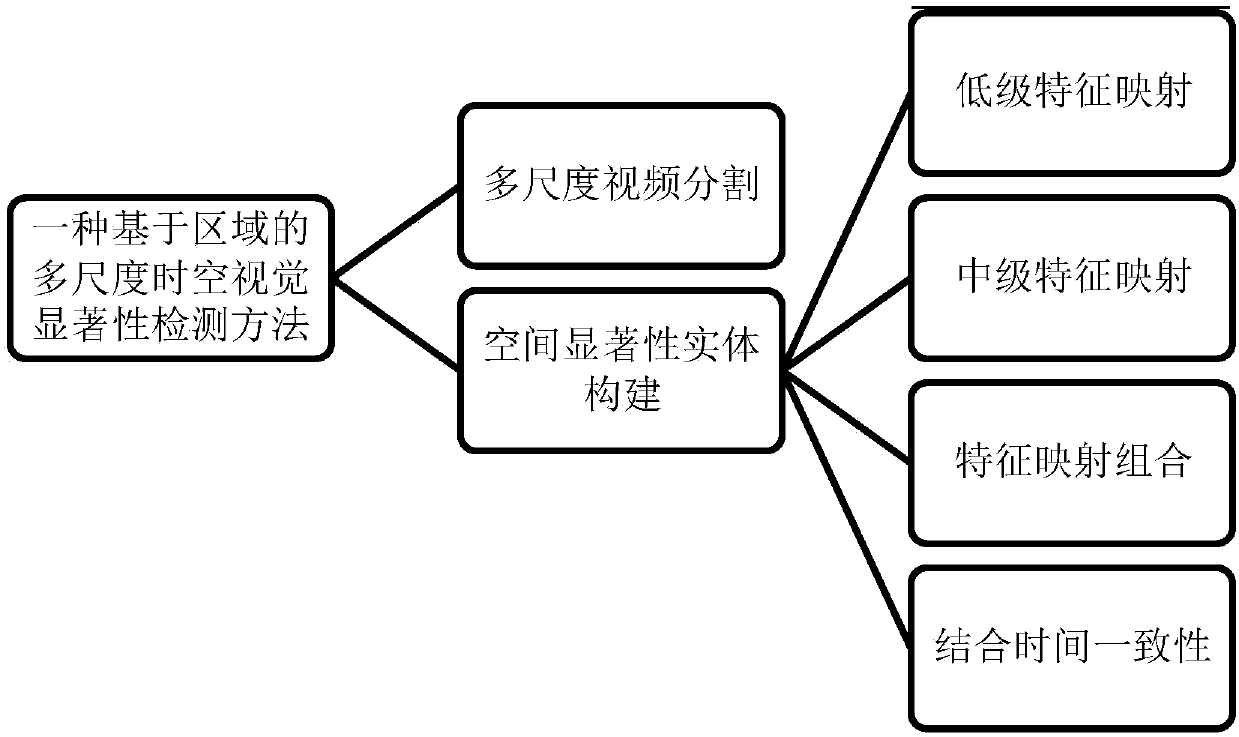

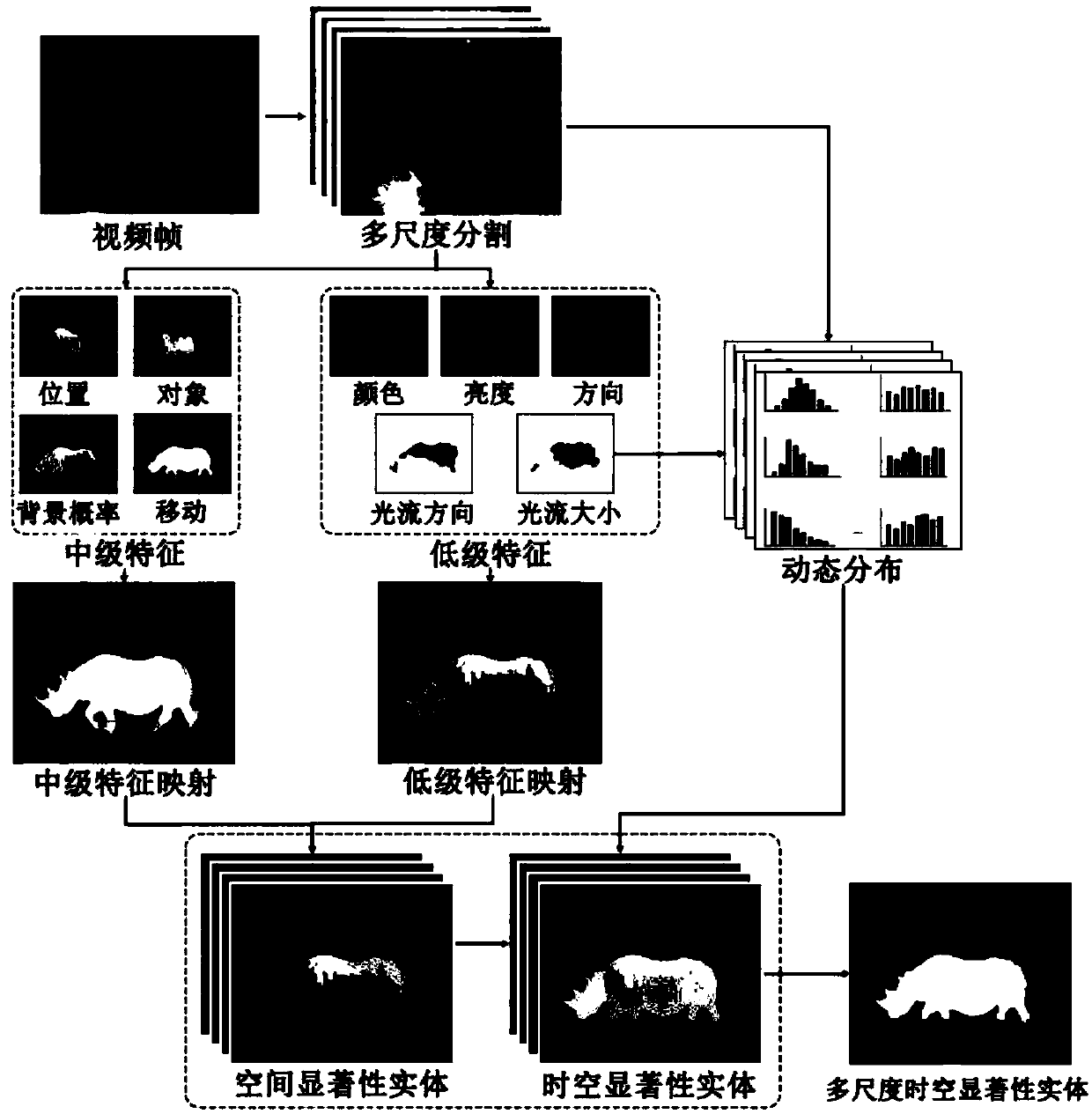

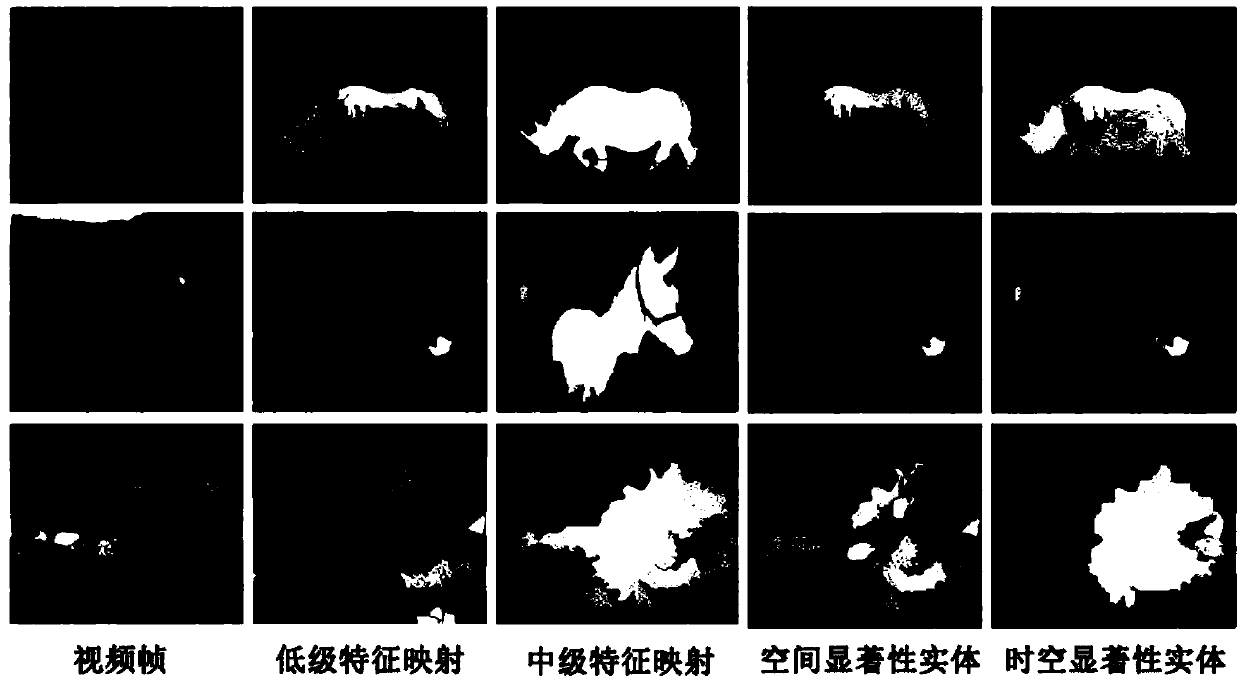

Region-based multi-scale spatial-temporal visual saliency detection method

The invention provides a region-based multi-scale spatial-temporal visual saliency detection method comprising multi-scale video segmentation and spatial saliency entity construction. First, a temporal superpixel model is executed to divide a video into spatial-temporal regions at various scale levels. Motion information at each scale level and the features of each frame are extracted, feature maps are created, and the feature maps are combined to generate a spatially salient entity for each region at each scale level. Next, the saliency values of each region are smoothed individually with an adaptive time window, which introduces temporal consistency and forms spatial-temporal saliency entities across frames. Finally, a spatial-temporal saliency map is generated for each frame by fusing the multi-scale spatial-temporal saliency entities. The method overcomes the limitation of using a fixed number of reference frames, introduces the adaptive time window as a new metric, maintains the temporal consistency of each entity between consecutive frames of the video, and reduces the fluctuation of the target between frames.

Owner:SHENZHEN WEITESHI TECH

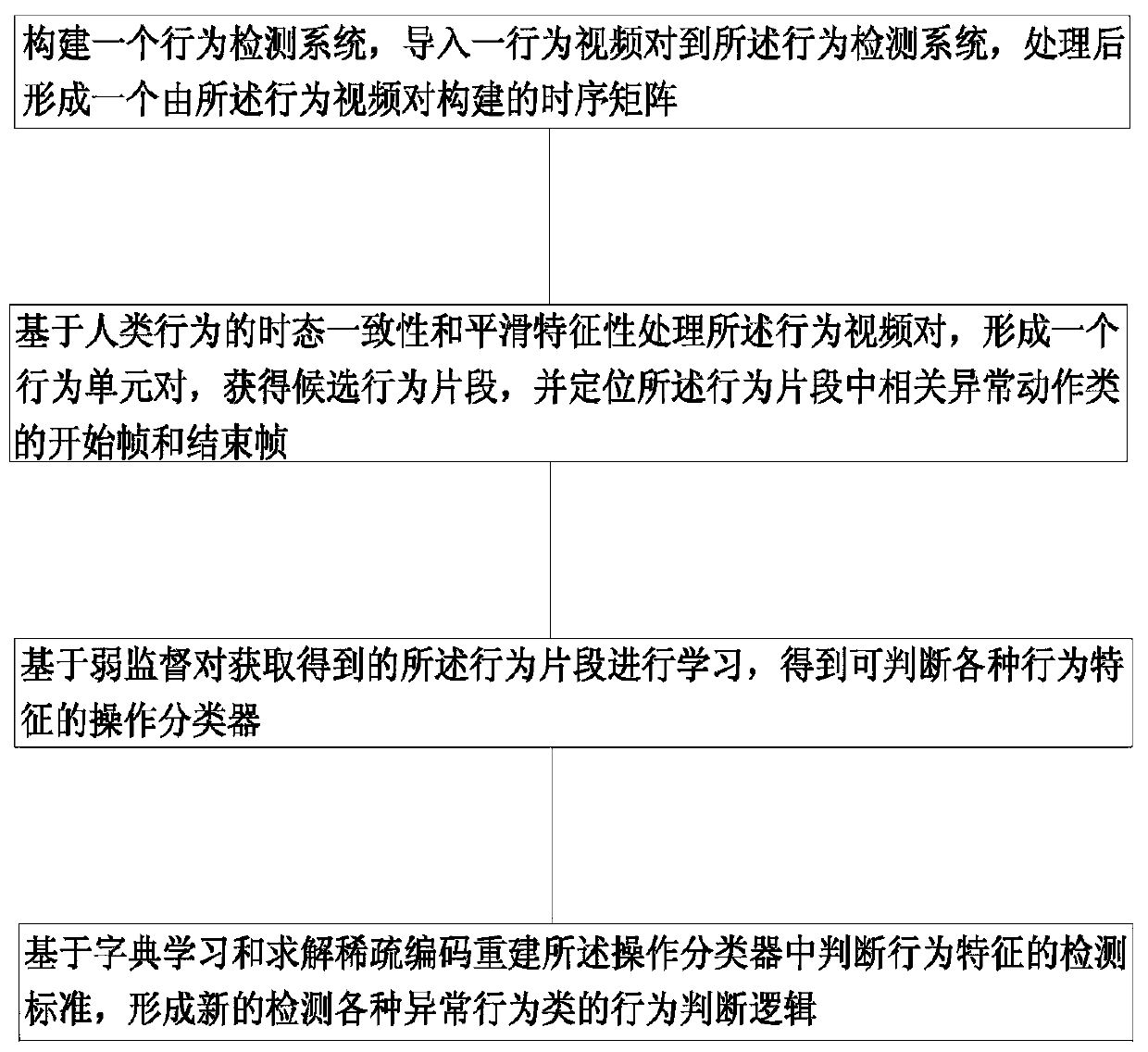

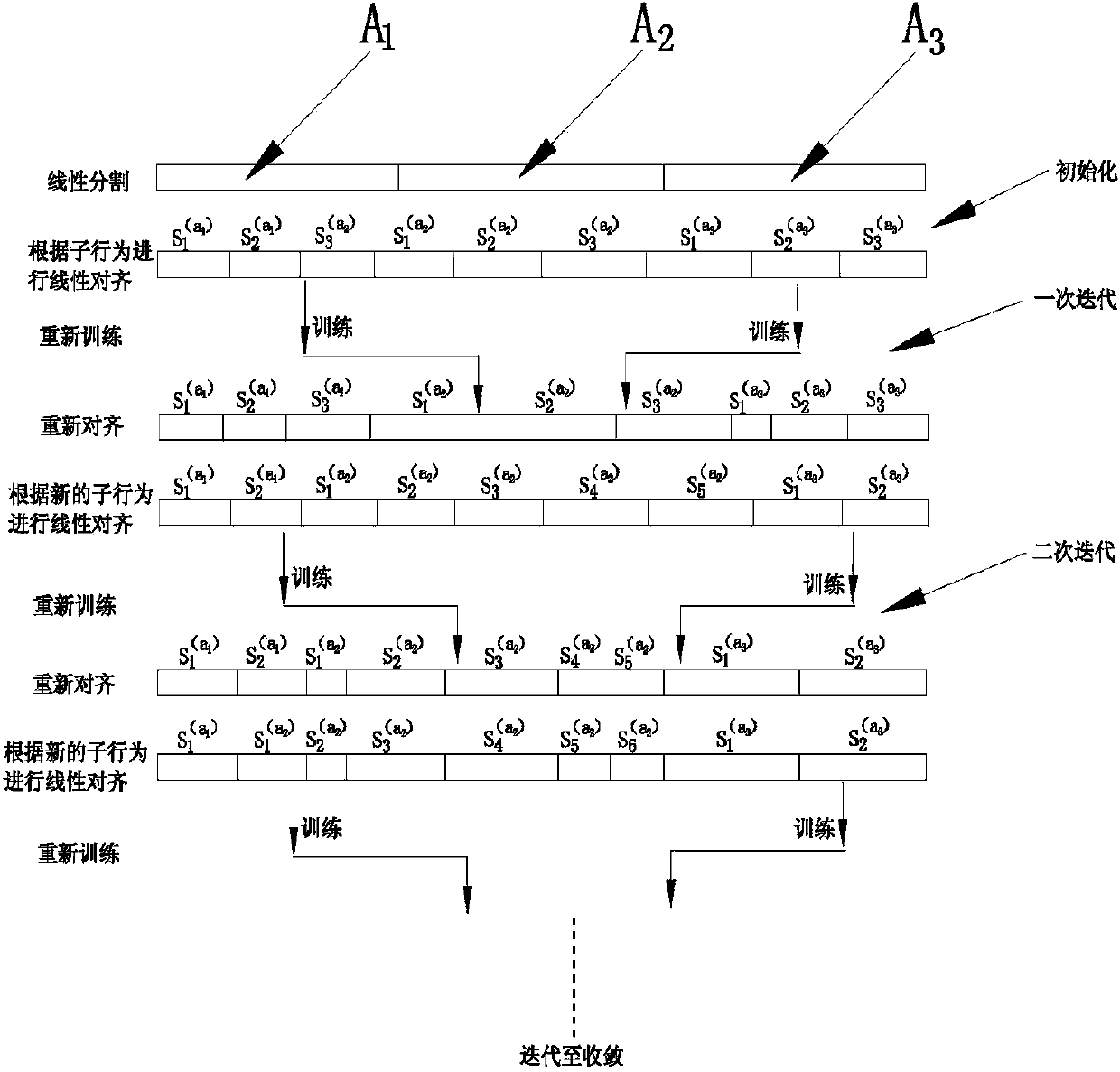

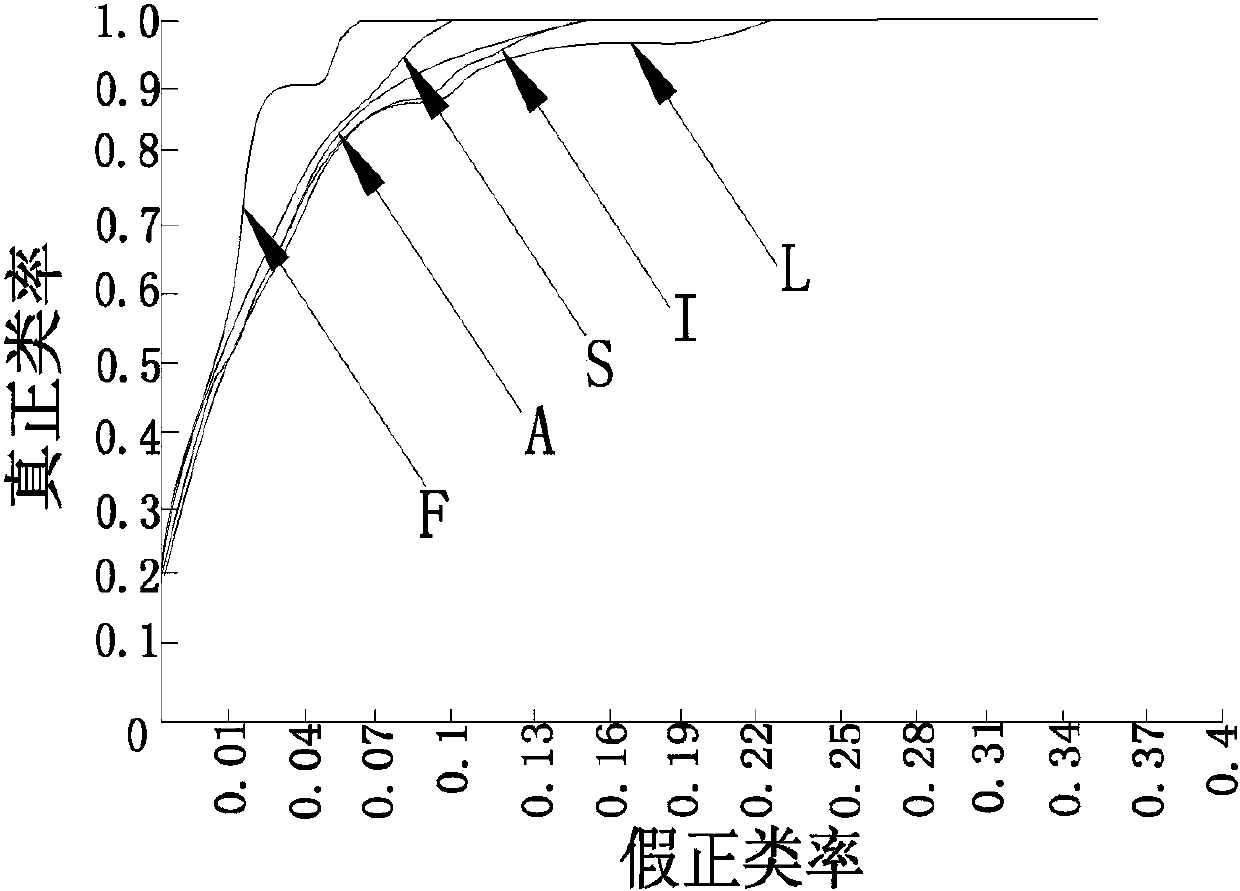

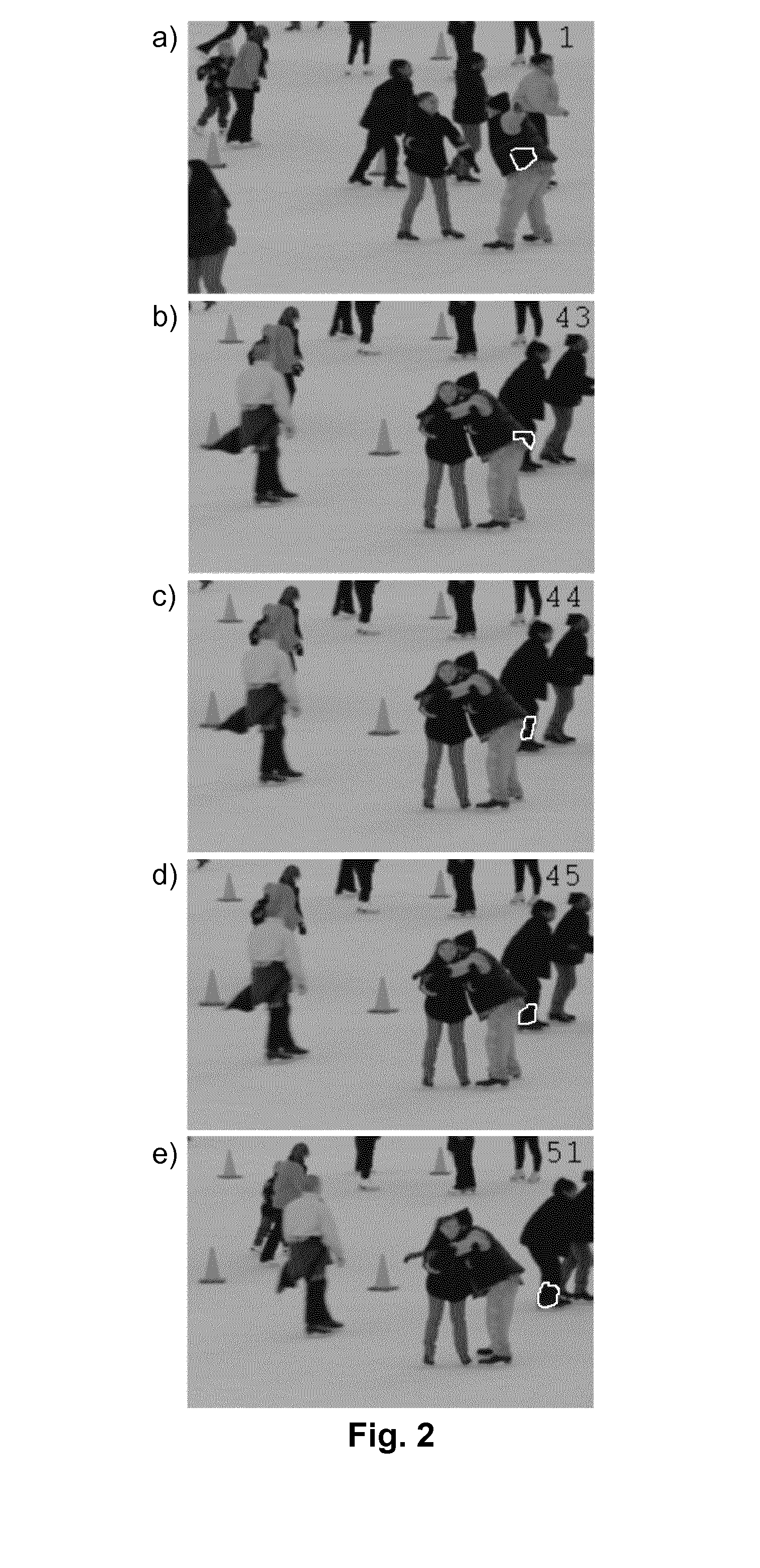

Weakly supervised anomaly detection method based on temporal consistency

The invention discloses a weakly supervised abnormal behavior detection method based on temporal consistency. The method comprises the following steps: constructing a behavior detection system, importing a behavior video pair into the behavior detection system, and forming a time sequence matrix constructed from the behavior video pair after processing. Based on the temporal consistency and smoothness of human behavior, behavior unit pairs are formed to obtain relevant behavior segments, and the start and end frames of relevant abnormal actions in the behavior segments are located. Based on weak supervision, the obtained behavior segments are learned to obtain an operation classifier that can judge various behavior characteristics. Based on dictionary learning and on solving the detection criteria for judging behavior features in the sparse coding reconstruction operation classifier, a new behavior judgment logic is formed to detect various abnormal behavior classes. The weakly supervised abnormal behavior detection method provided by the invention readily learns the characteristics of the related behavior classes without manually labeling frame boundaries to establish the related behavior model.

Owner:NANJING UNIV OF POSTS & TELECOMM

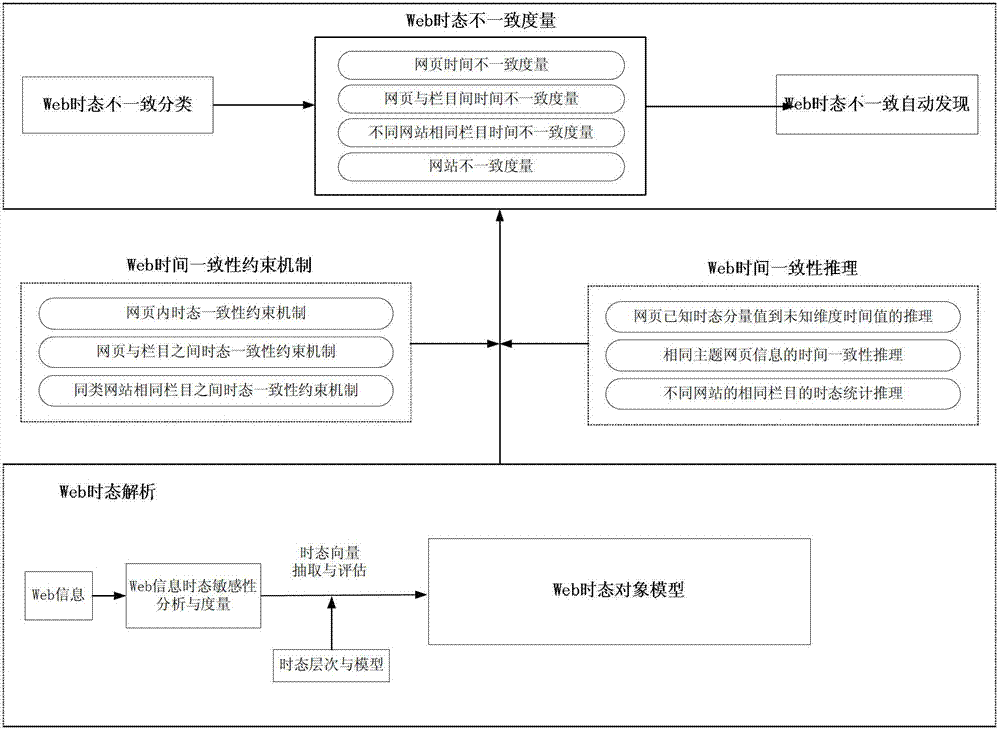

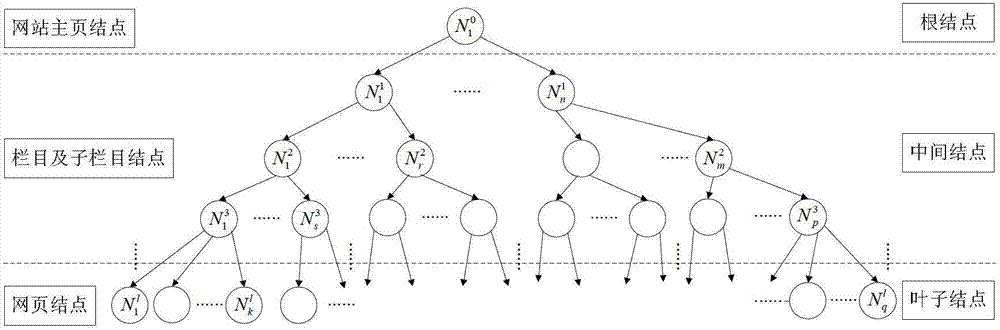

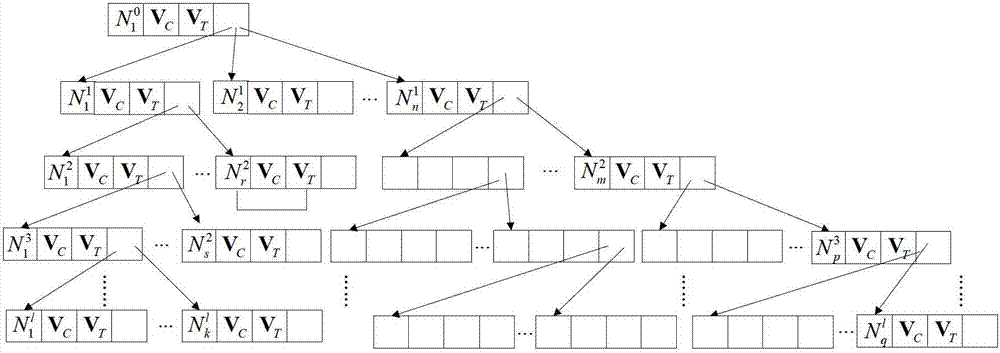

Web temporal object model-based outdated webpage information automatic discovering method

Inactive | CN102737125A | Efficient tense problem | Easy to handle | Special data processing applications | Temporal information | Temporal consistency

The invention discloses a web temporal object model-based outdated webpage information automatic discovering method, belongs to the field of research of data quality, and relates to the technical fields of temporal Web, network information quality estimation, semantic comprehension and extraction of temporal information, constraint and reasoning of temporal information, automatic screening of consistency of webpage information and the like. Aiming at the phenomenon of low web data quality caused by temporal inconsistency, a Web temporal object model is established on the basis of different temporal sensitivities of different web-pages, network data temporal consistency constraint relation, reasoning mechanism and an algebraic operation rule are constructed, automatic screening is performed and outdated information in the Web webpage is promoted, and information with timeliness and relevancy is provided for a network user. The method can be widely applied to the aspects such as quality ordering of similar websites and search ordering of time perception, and the Web information quality is improved to a certain extent.

Owner:WUHAN UNIV

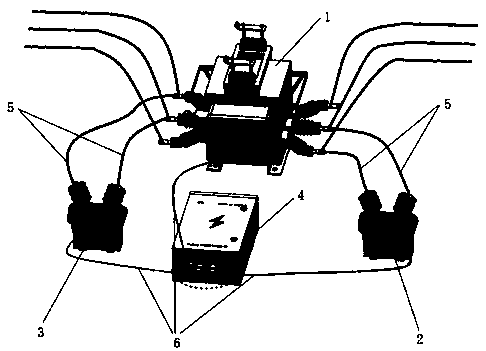

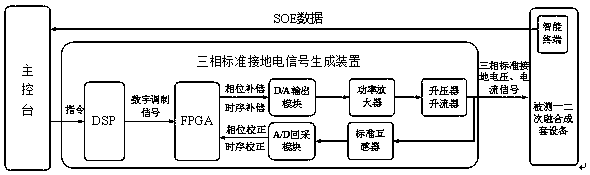

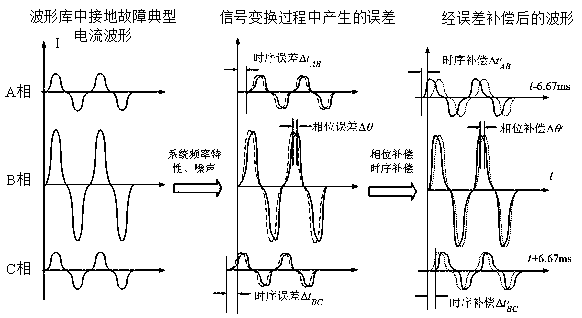

Three-phase standard ground electric signal generating method and device and primary and secondary fusion switch ground fault detecting function testing method and system

Inactive | CN109507460A | High precision | Improve stability | Electrical measurement instrument details | Circuit interrupters testing | Temporal consistency | Inductor

The invention discloses a three-phase standard ground electric signal generating method and device and a primary and secondary fusion switch ground fault detecting function testing method and system. The three-phase standard ground electric signal generating method comprises performing modulation and error compensation on typical ground fault electric signals to obtain a corresponding three-phase waveform sequence; and performing digital-to-analogue conversion, amplification and PI (proportional-integral) correction on the three-phase waveform sequence to obtain three-phase standard ground electric signals. The primary and secondary fusion switch ground fault detecting function testing method comprises loading the three-phase standard ground electric signals onto the primary side of the mutual inductor of a primary and secondary fusion switch to be tested; transmitting electric signals output from the secondary side of the mutual inductor to a supported intelligent terminal; and, according to SOE (sequence of events) data uploaded by the intelligent terminal, determining the accuracy of the ground fault detecting function. The methods, device and system help generate high-precision, high-stability and high-temporal-consistency three-phase standard ground electric signals and improve the detection accuracy of the ground fault detecting function of primary and secondary fusion switches.

Owner:STATE GRID JIANGSU ELECTRIC POWER CO ELECTRIC POWER RES INST +3

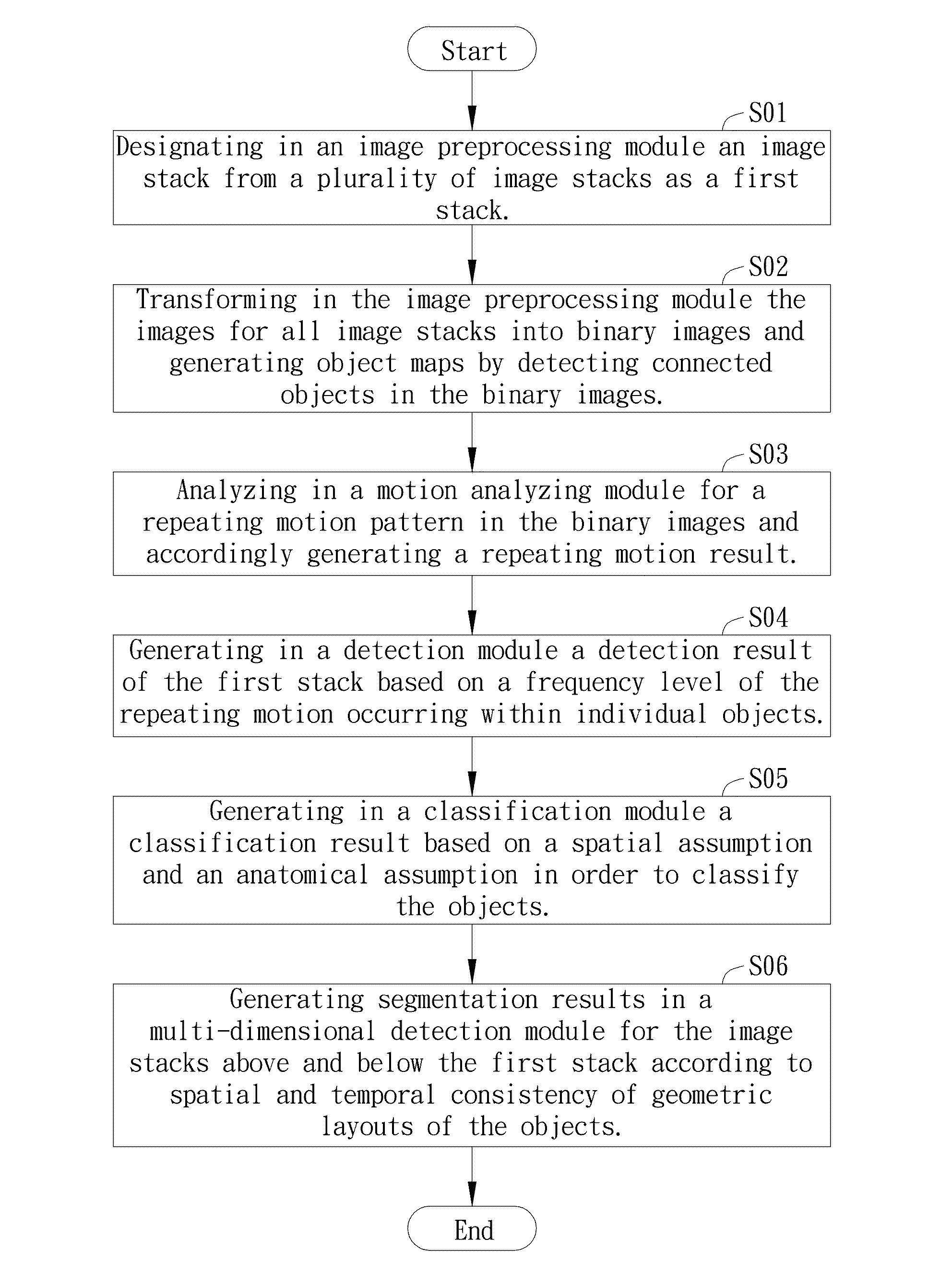

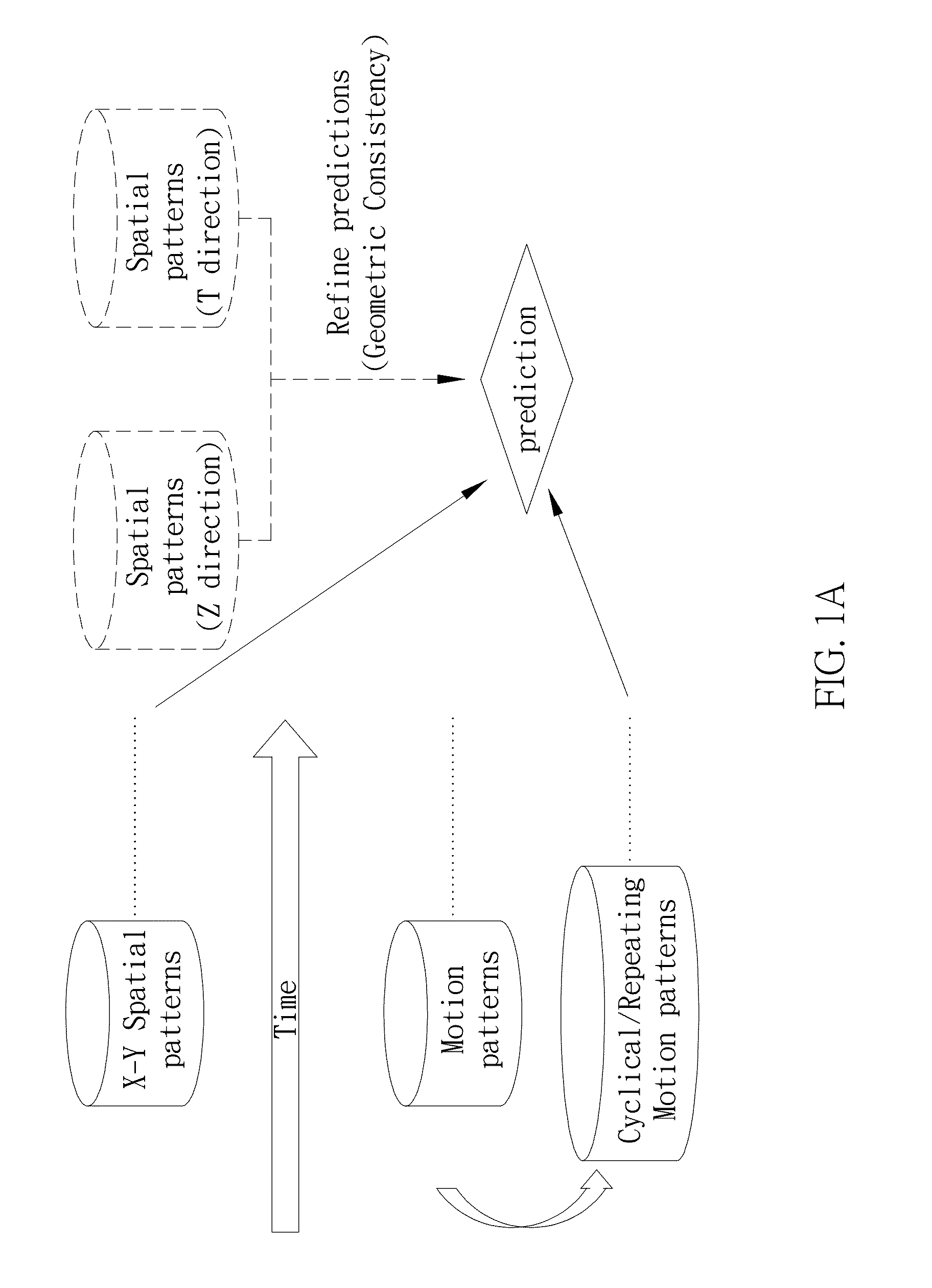

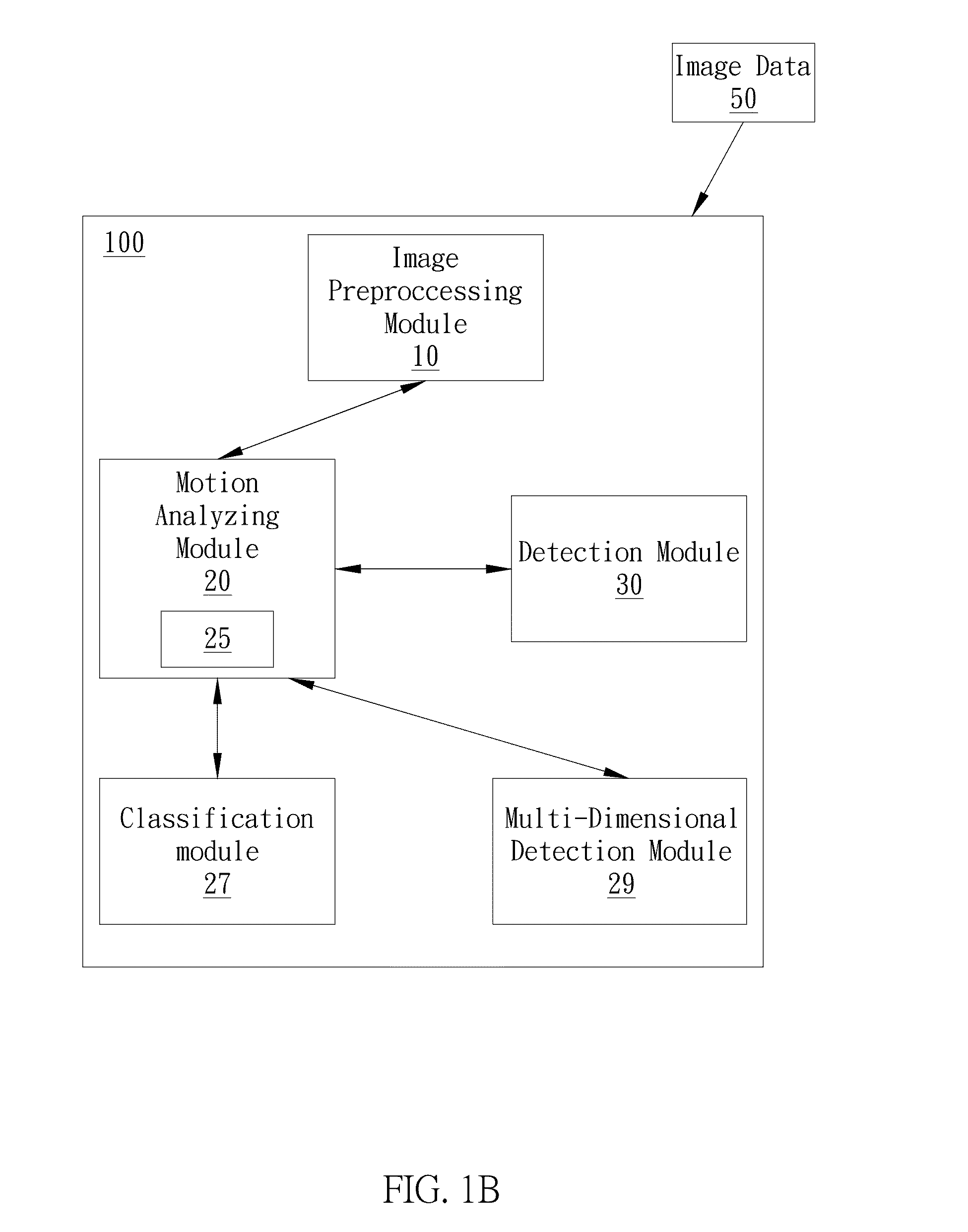

Image segmentation system and operating method thereof

Inactive | US20150093001A1 | Automatically perform | Ensure high efficiency and accuracy | Image enhancement | Image analysis | Object structure | Temporal consistency

An image segmentation system for performing image segmentation on an image data includes an image preprocessing module, a motion analyzing module, a detection module, a classification module, and a multi-dimensional detection module. The image data has a plurality of image stacks ordered chronologically that respectively have a plurality of images sequentially ordered according to spatial levels, wherein one spatial level is designated as a first stack. The image preprocessing module transforms the images into binary images while the motion analyzing module finds a repeating pattern in the binary images in the first stack and accordingly generates a repeating motion result. The classification module generates a classification result based on a spatial and an anatomical assumption to classify objects. The multi-dimensional detection module generates segmentation results for stacks above and below the first stack using spatial and temporal consistency of geometric layouts of object structures.

Owner:NAT TAIWAN UNIV OF SCI & TECH +1

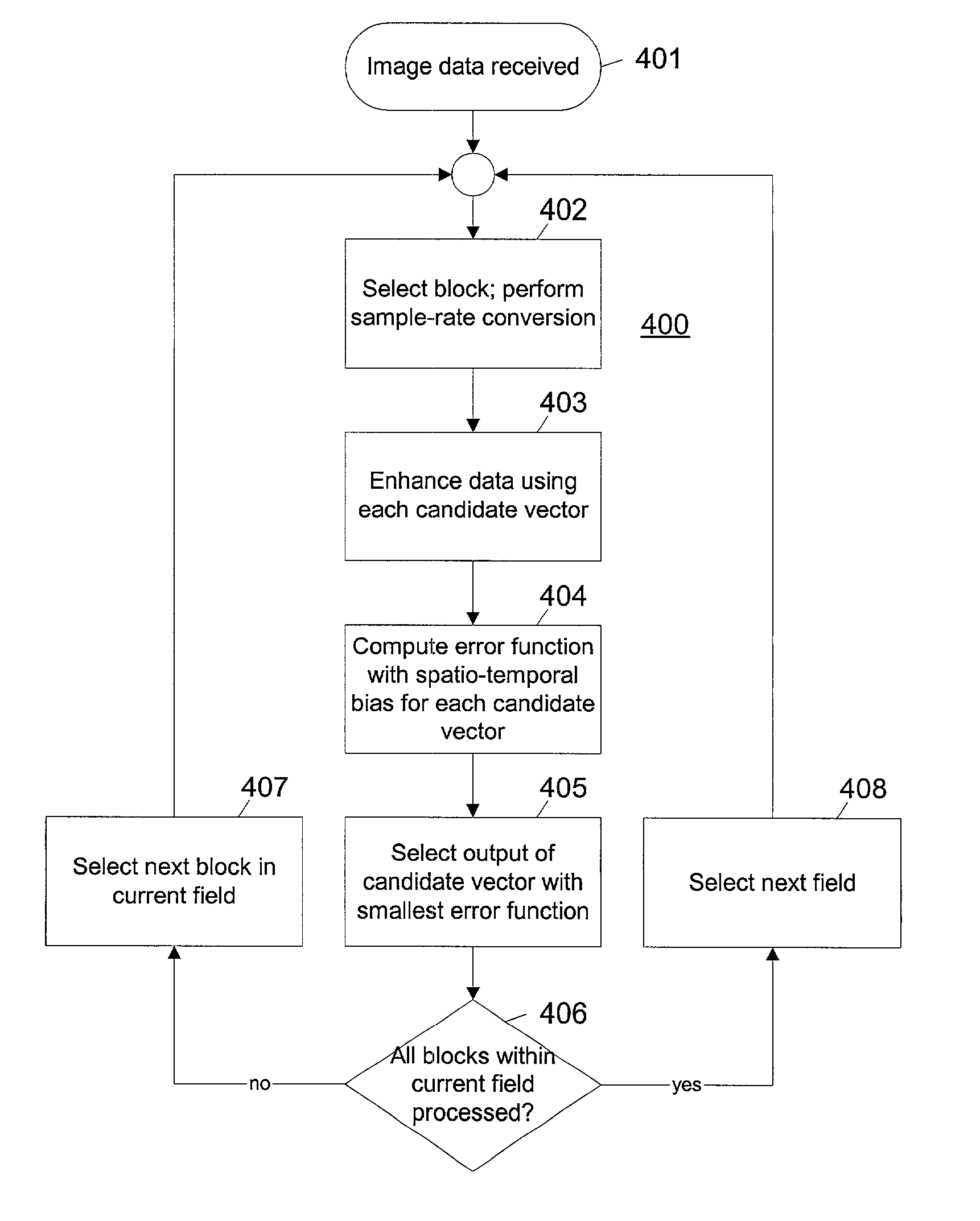

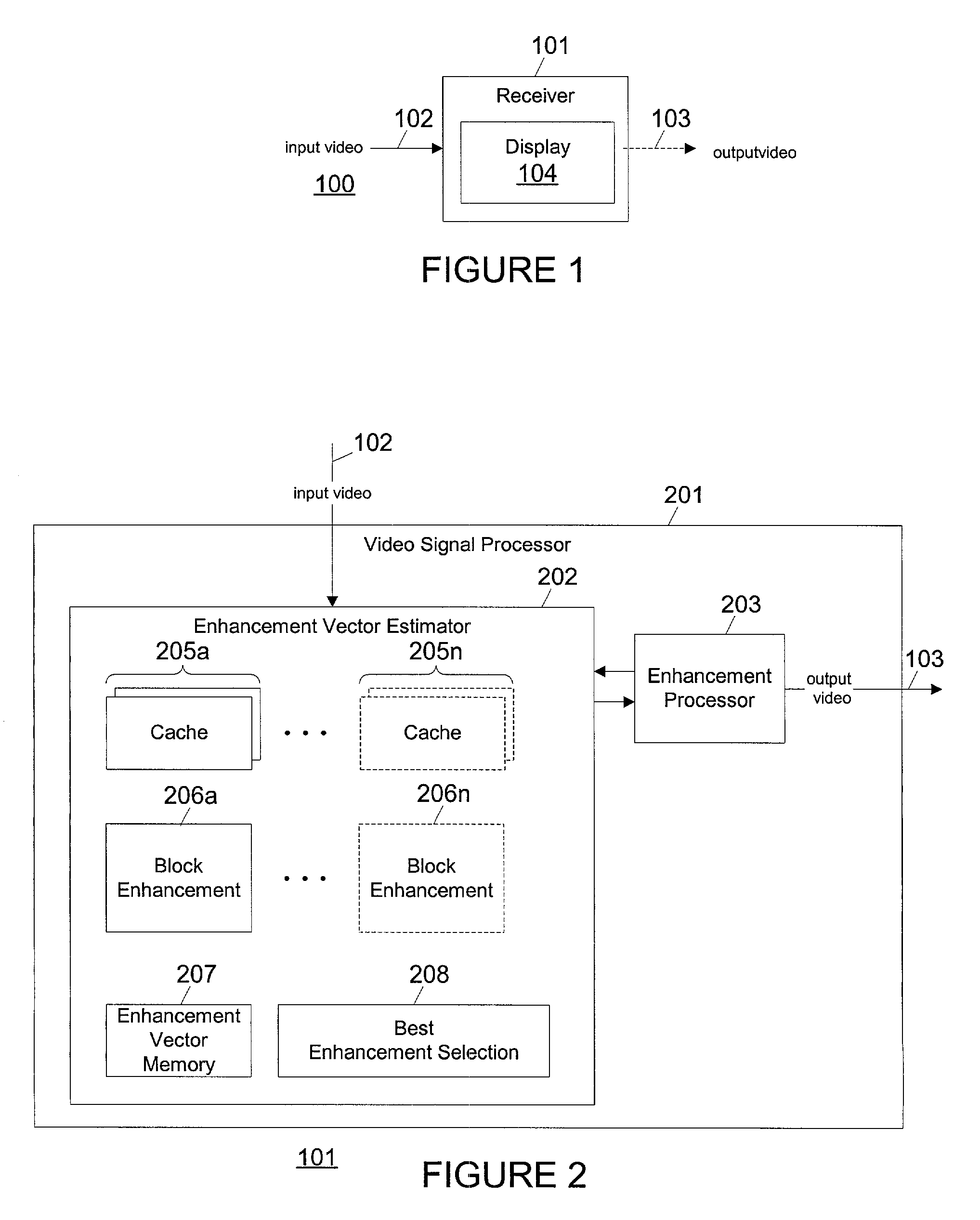

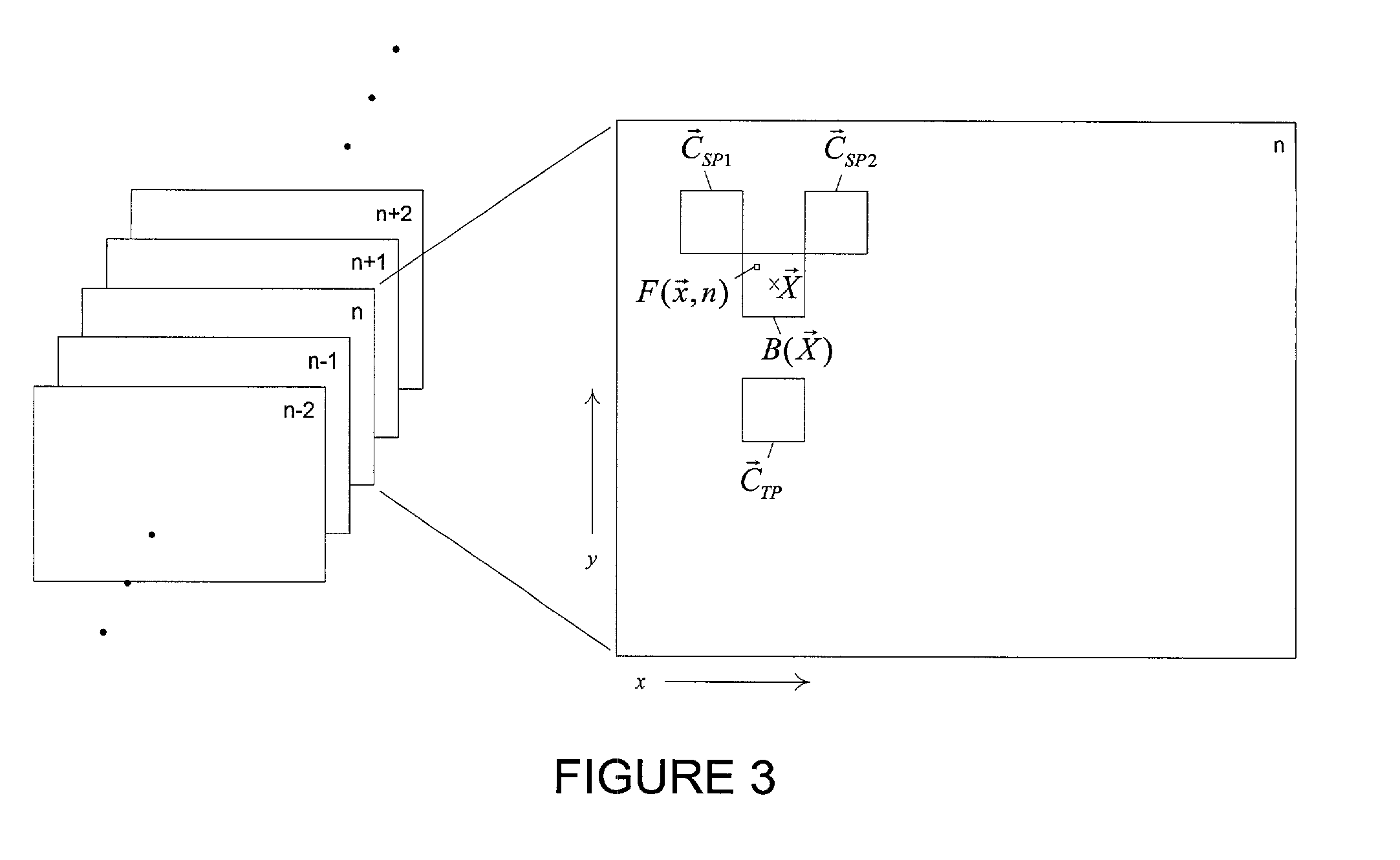

3-D recursive vector estimation for video enhancement

Inactive | US7042945B2 | Enhancing video information | Enhanced information | Image enhancement | Image analysis | Temporal consistency | Error function

A video signal processor enhancing video information evaluates candidate vectors of enhancement algorithms utilizing an error function biased towards spatio-temporal consistency with a penalty function. The penalty function increases with the distance—both spatial and temporal—of the subject block from the block for which the candidate vector was optimal. Enhancements are therefore gradual across both space and time and the enhanced video information is intrinsically free of perceptible spatio-temporal varying artifacts.
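The biased error function described above can be illustrated with a small candidate-selection routine. This is only a sketch: the linear penalty, the `penalty_weight` parameter, and the function name `choose_vector` are assumptions for illustration, since the abstract states only that the penalty increases with spatial and temporal distance.

```python
def choose_vector(candidates, match_error, penalty_weight=1.0):
    """Pick the candidate with the lowest penalised error.

    candidates  -- list of (vector, spatial_distance, temporal_distance), where the
                   distances are measured from the subject block to the block for
                   which the vector was found to be optimal
    match_error -- function mapping a vector to its raw matching error
    """
    def total_error(candidate):
        vector, d_space, d_time = candidate
        # Assumed linear penalty: grows with both spatial and temporal distance.
        return match_error(vector) + penalty_weight * (d_space + d_time)

    return min(candidates, key=total_error)[0]


# Toy errors: the far-away vector matches slightly better before the penalty.
raw_error = {(1, 0): 10.0, (4, 3): 9.0}
candidates = [((1, 0), 1, 0), ((4, 3), 3, 2)]
print(choose_vector(candidates, raw_error.get))
print(choose_vector(candidates, raw_error.get, penalty_weight=0.0))
```

With the penalty enabled, the nearby candidate wins even though its raw error is slightly higher, which is the bias toward spatio-temporal consistency that the abstract describes.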

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

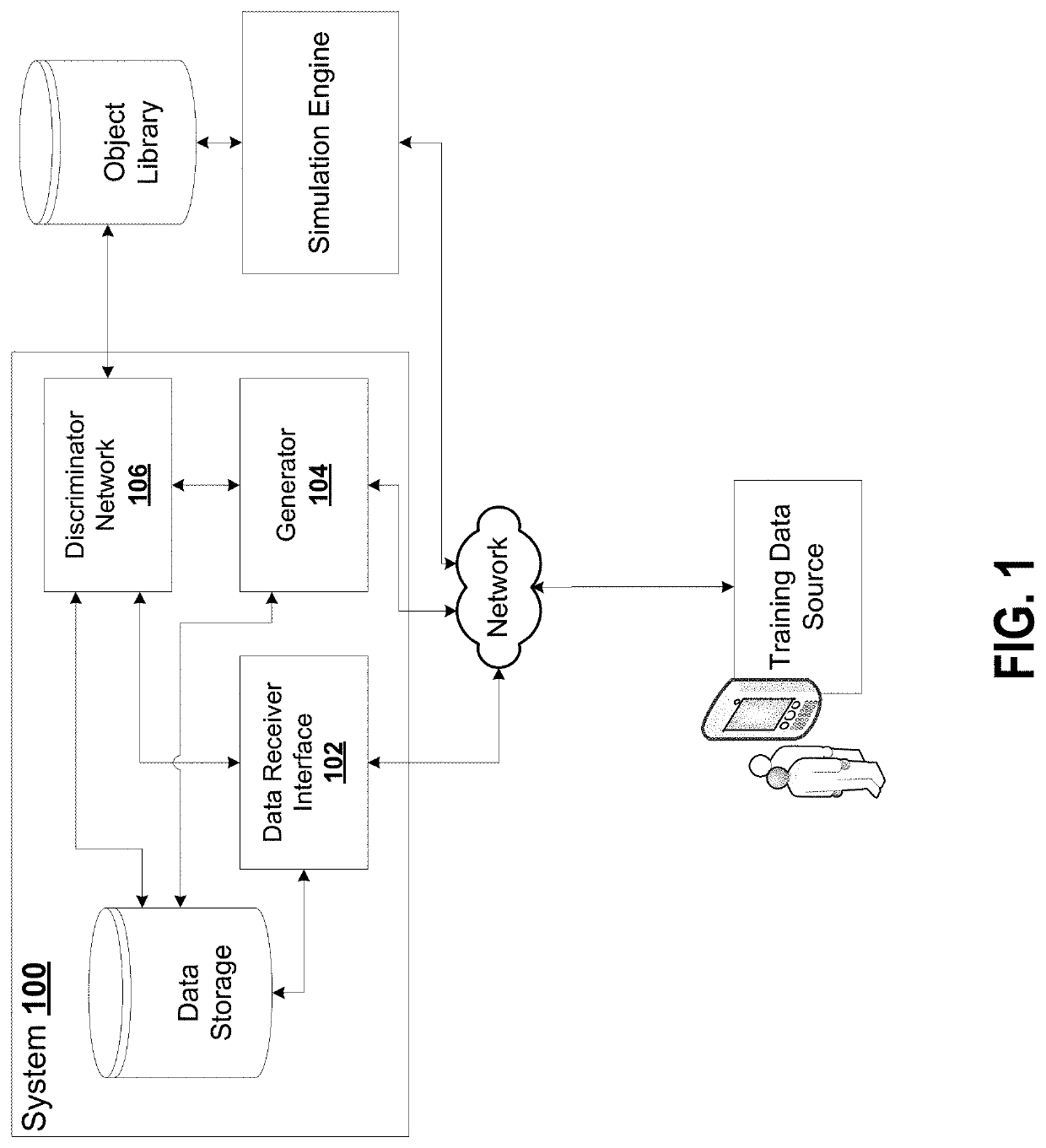

System and method for generation of unseen composite data objects

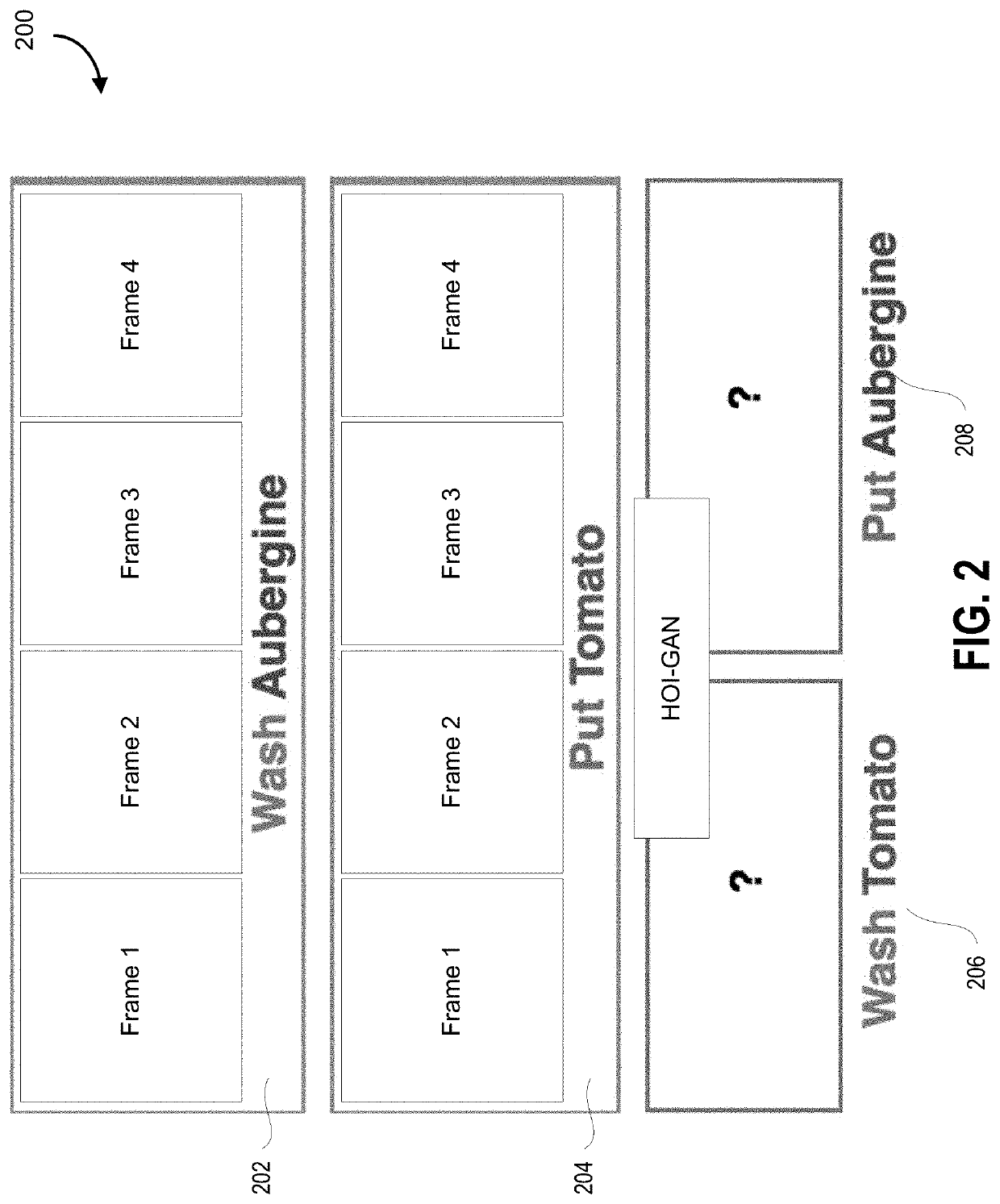

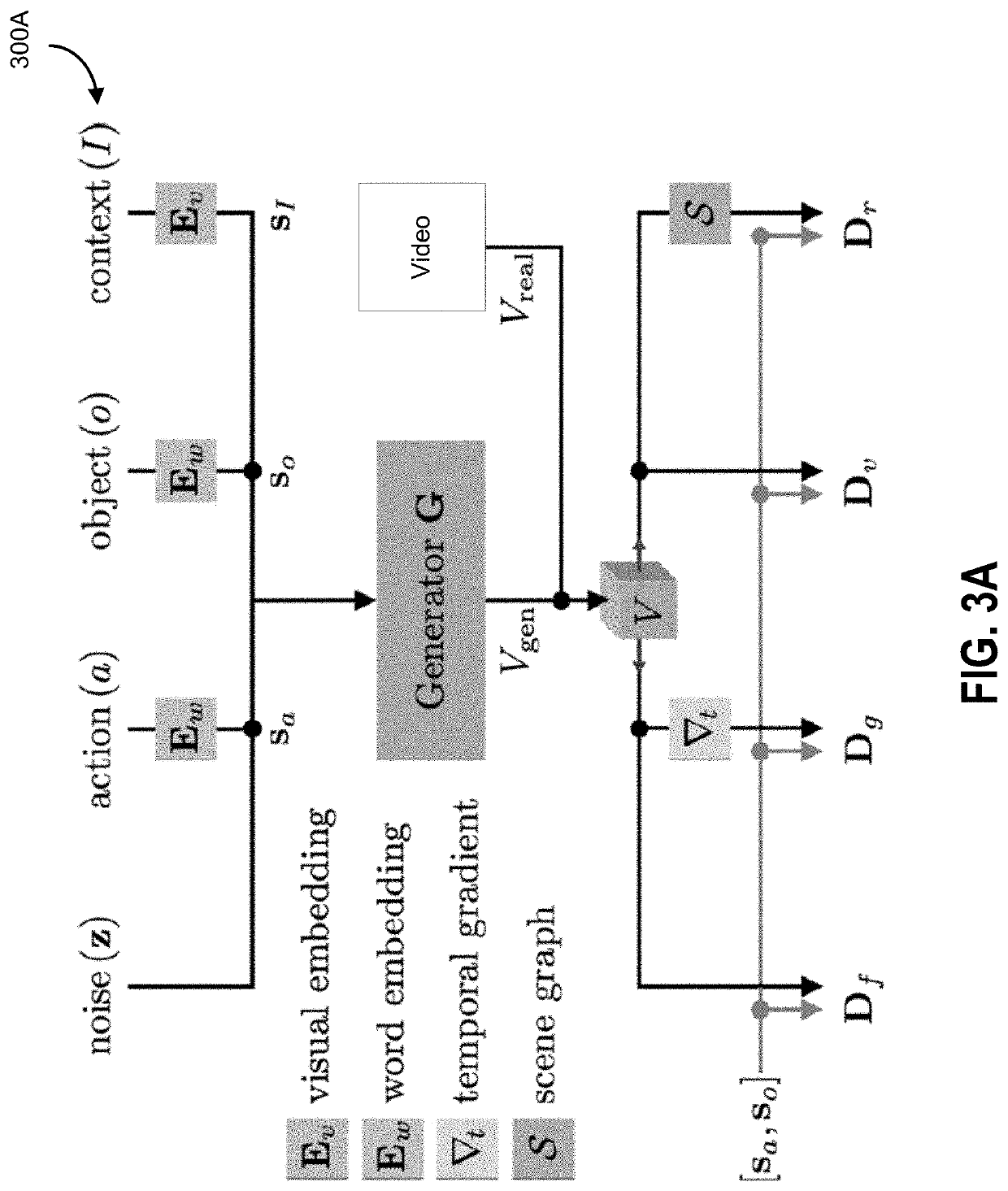

Active | US20200302231A1 | Avoiding combinatorial explosion | Less cycle | Television system details | Character and pattern recognition | Data set | Tight frame

A computer implemented system for generating one or more data structures is described, the one or more data structures representing an unseen composition based on a first category and a second category observed individually in a training data set. During training of a generator, a proposed framework utilizes at least one of the following discriminators—three pixel-centric discriminators, namely, frame discriminator, gradient discriminator, video discriminator; and one object-centric relational discriminator. The three pixel-centric discriminators ensure spatial and temporal consistency across the frames, and the relational discriminator leverages spatio-temporal scene graphs to reason over the object layouts in videos ensuring the right interactions among objects.

Owner:ROYAL BANK OF CANADA

Assembly control method based on long short-term memory neural network incremental model

Inactive | CN110154024A | Reduce mistakes | Realize the fusion of virtual and real | Programme-controlled manipulator | Simulation | Temporal consistency

The invention provides a control method based on a long short-term memory neural network incremental model, and belongs to the technical field of intelligent control of cyber-physical systems. According to the method, virtual models are established through modeling software for a to-be-assembled product, an assembly robot and an assembly task entity on an assembly line, and the movement pose of the assembly robot is accurately controlled on the basis of dynamics principles. A feasible assembly scheme is planned through virtual assembly, and real-time accurate mapping between the virtual models and the actual models is achieved, so that the actual assembly meets the error requirements of virtual assembly and the spatio-temporal consistency of the virtual models and the equipment entities during movement is maintained. The control method can improve the accuracy of the virtual assembly result and ensures that the actual assembly is completed successfully.

Owner:TSINGHUA UNIV

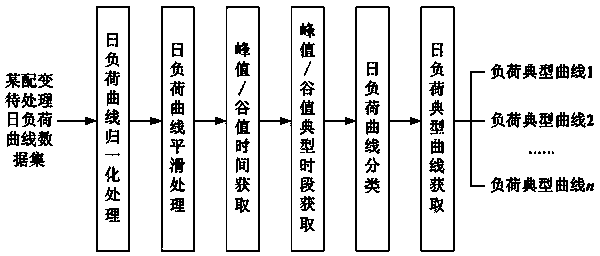

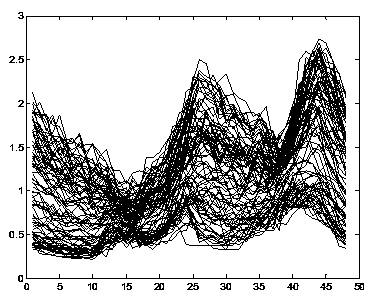

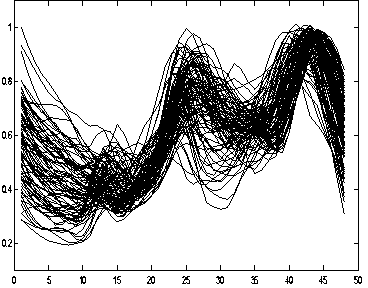

Method for analyzing distribution and transformation daily load typical curves on basis of structure features

Inactive | CN103914621A | Clear peaks/valleys | Improve tolerance | Special data processing applications | Classification methods | Temporal consistency

The invention discloses a method for analyzing distribution and transformation daily load typical curves on the basis of structure features. The method focuses on the peak / valley temporal consistency of similar daily load curves when the distribution and transformation daily load typical curves are acquired. The method includes selecting curves without abnormal data from distribution and transformation historical daily load curve databases and utilizing the curves without the abnormal data as to-be-analyzed objects; utilizing categories with high duty cycles as daily load curve typical categories by the aid of a classification process; solving the distribution and transformation daily load typical curves. The method has the advantages that peak / valley typical periods which are acquired statically are utilized as main bases in daily load curve classification procedures, and accordingly distribution and transformation daily load peak / valley occurrence time can be controlled advantageously.

Owner:STATE GRID CORP OF CHINA +1

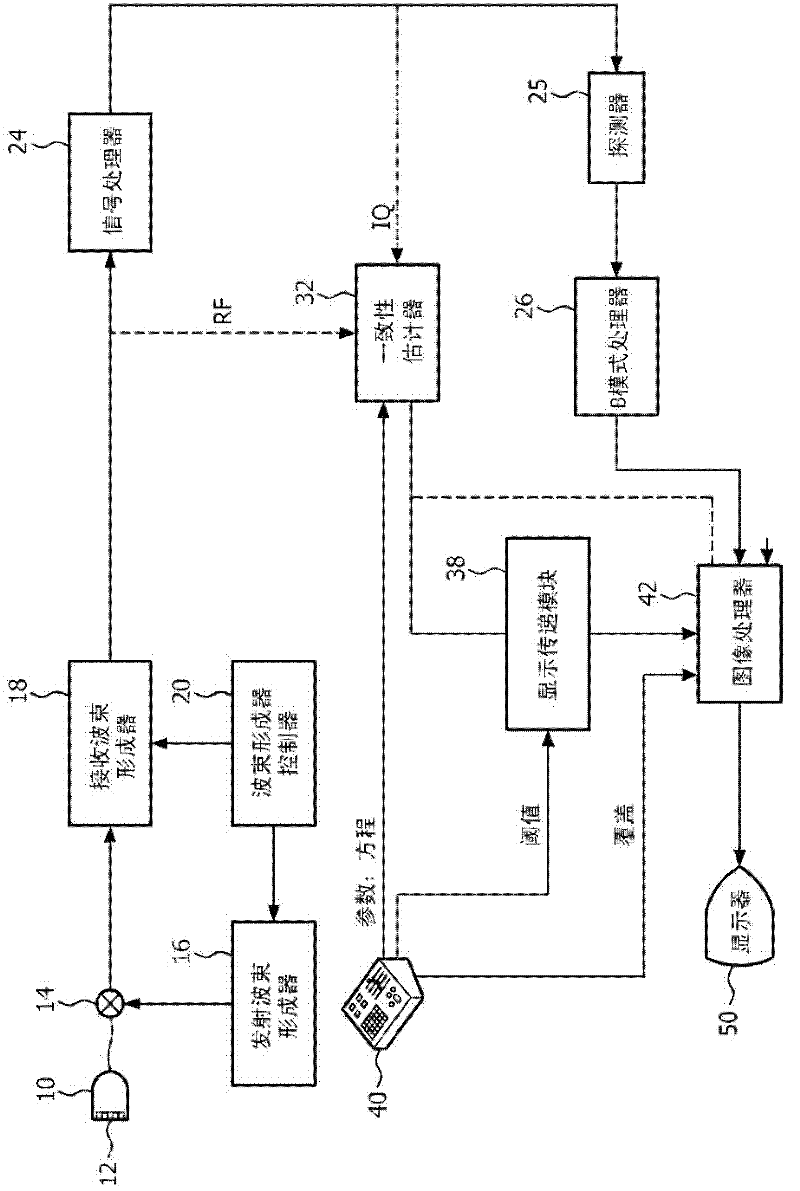

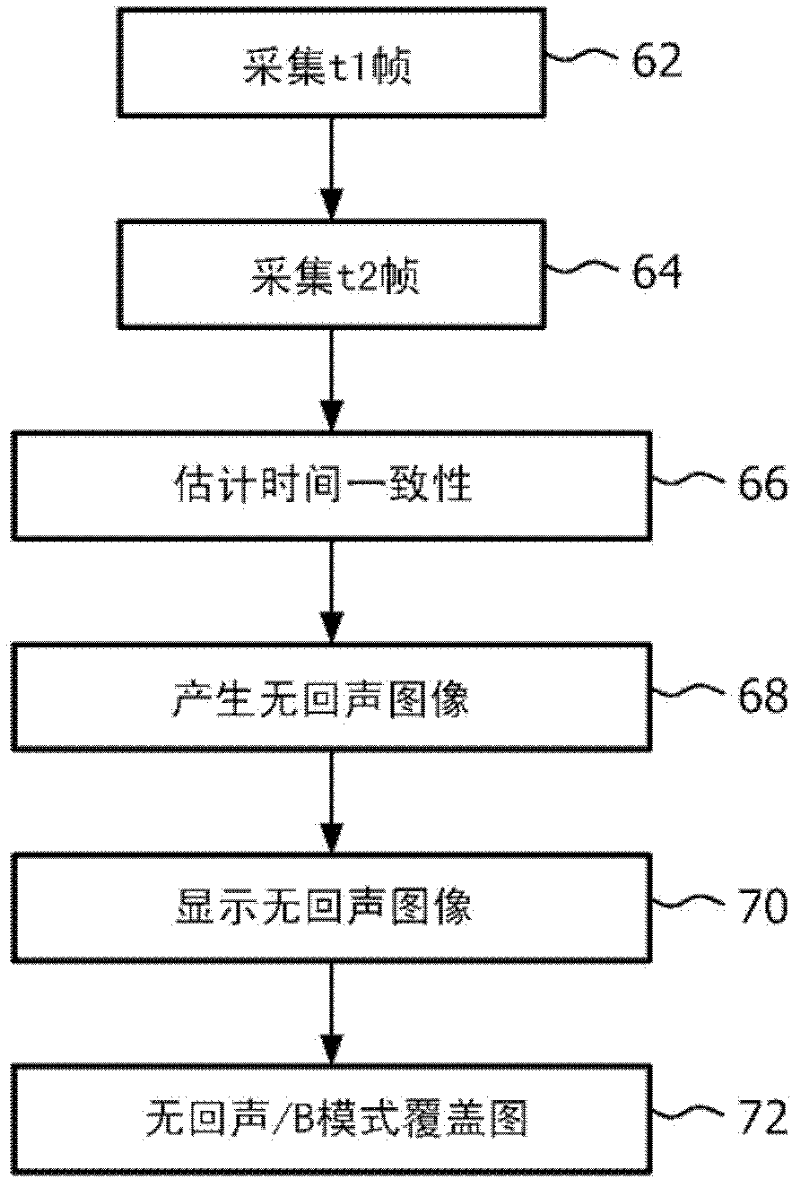

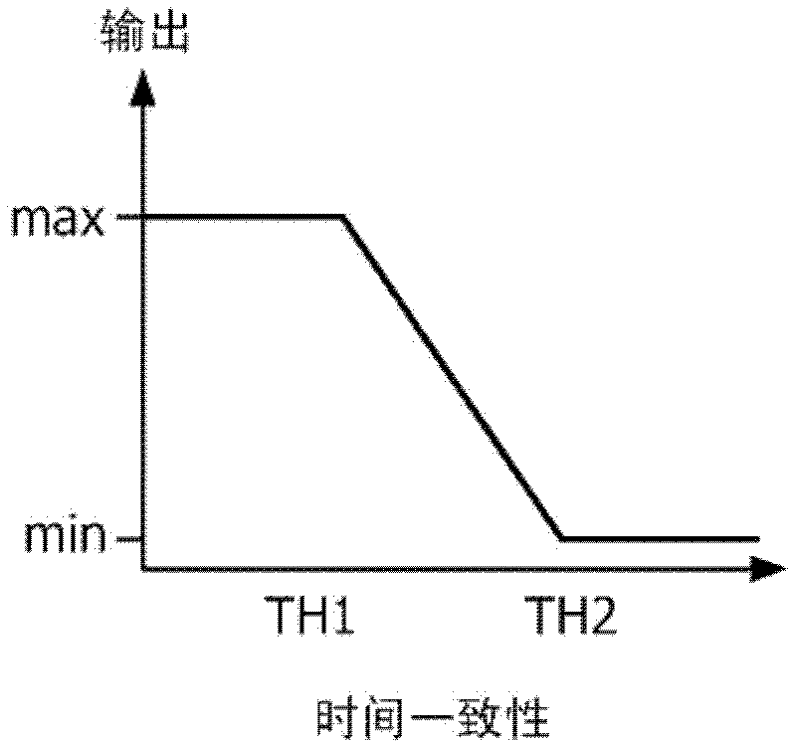

Ultrasonic anechoic imaging

This invention relates to a method of ultrasonically imaging a region of interest that may contain anechoic and / or hypoechoic echoes. The method comprises the steps of: providing a first and a second set of ultrasound data, said two sets comprising information of the region of interest at two different instants of time respectively, determining from the first and second data sets a temporal consistency value of at least an area of the region of interest, and producing an image indicating this area as being hypoechoic or anechoic in accordance with said temporal consistency value. In this way, an anechoic image produced by the method of the invention emphasizes the rendering of anechoic and / or hypoechoic areas over echoic ones.

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV

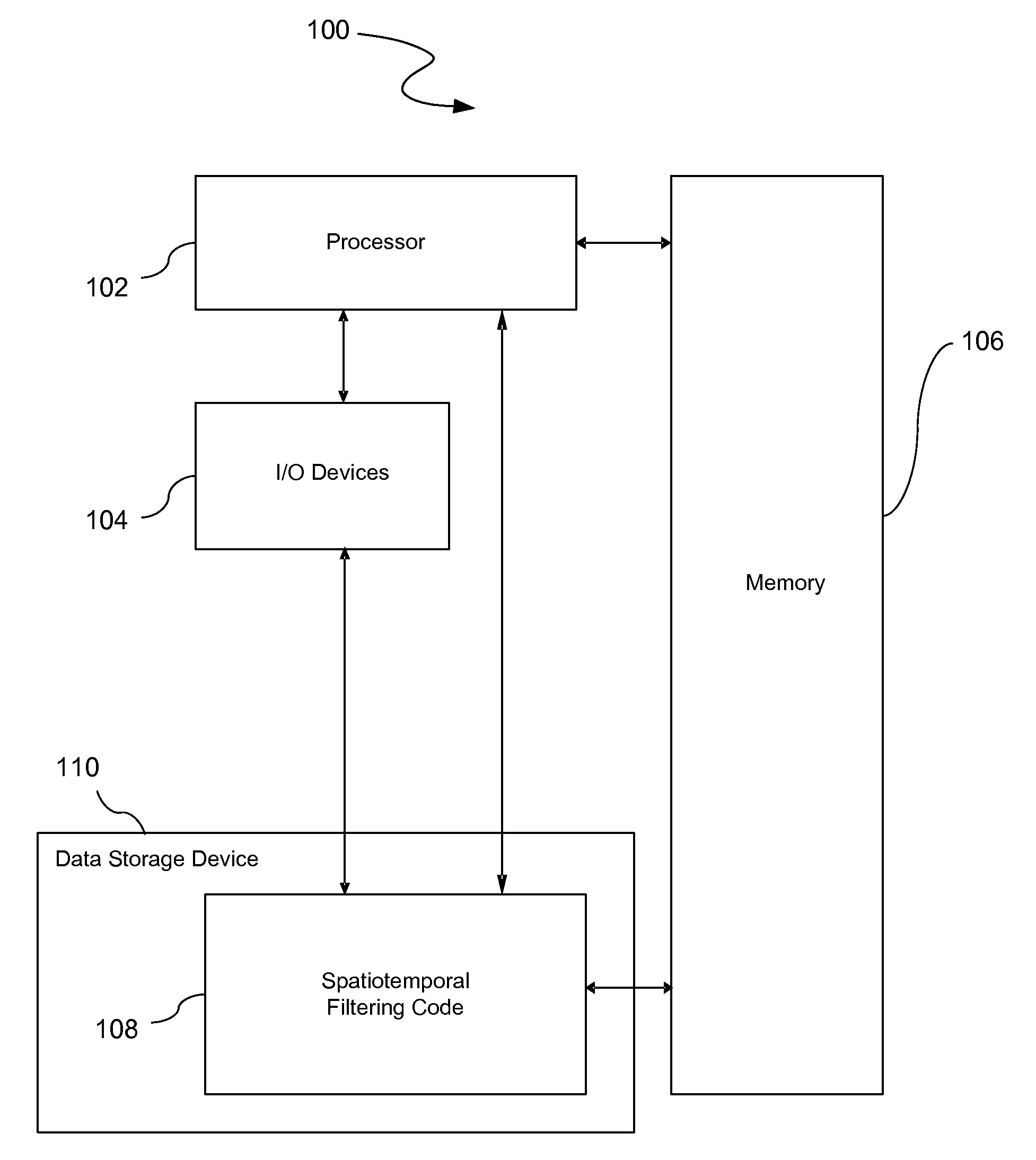

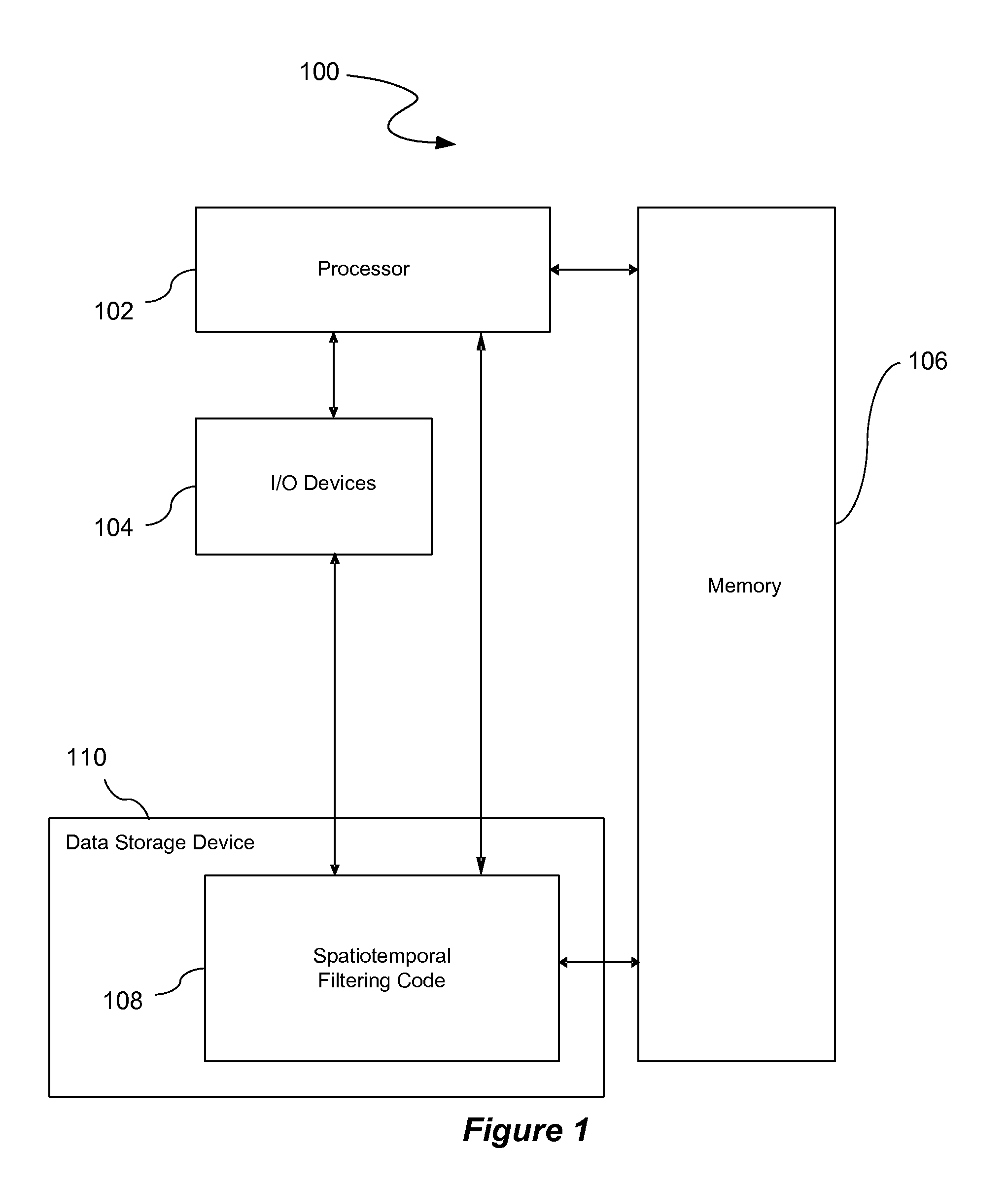

Practical temporal consistency for video applications

Active | US20140146235A1 | Television system details | Color signal processing circuits | Temporal consistency | Video sequence

A video sequence having a plurality of frames is received. A feature in a first frame from the plurality of frames and a first position of the feature in the first frame are detected. The position of the feature in a second frame from the plurality of frames is estimated to determine a second position. A displacement vector between the first position and the second position is also computed. A plurality of content characteristics is determined for the first frame and the second frame. The displacement vector is spatially diffused with a spatial filter over a frame from the plurality of frames to generate a spatially diffused displacement vector field. The spatial filter utilizes the plurality of content characteristics. A temporal filter temporally diffuses over a video volume the spatially diffused displacement vector field to generate a spatiotemporal vector field. The temporal filter utilizes the plurality of content characteristics.

Owner:DISNEY ENTERPRISES INC +1

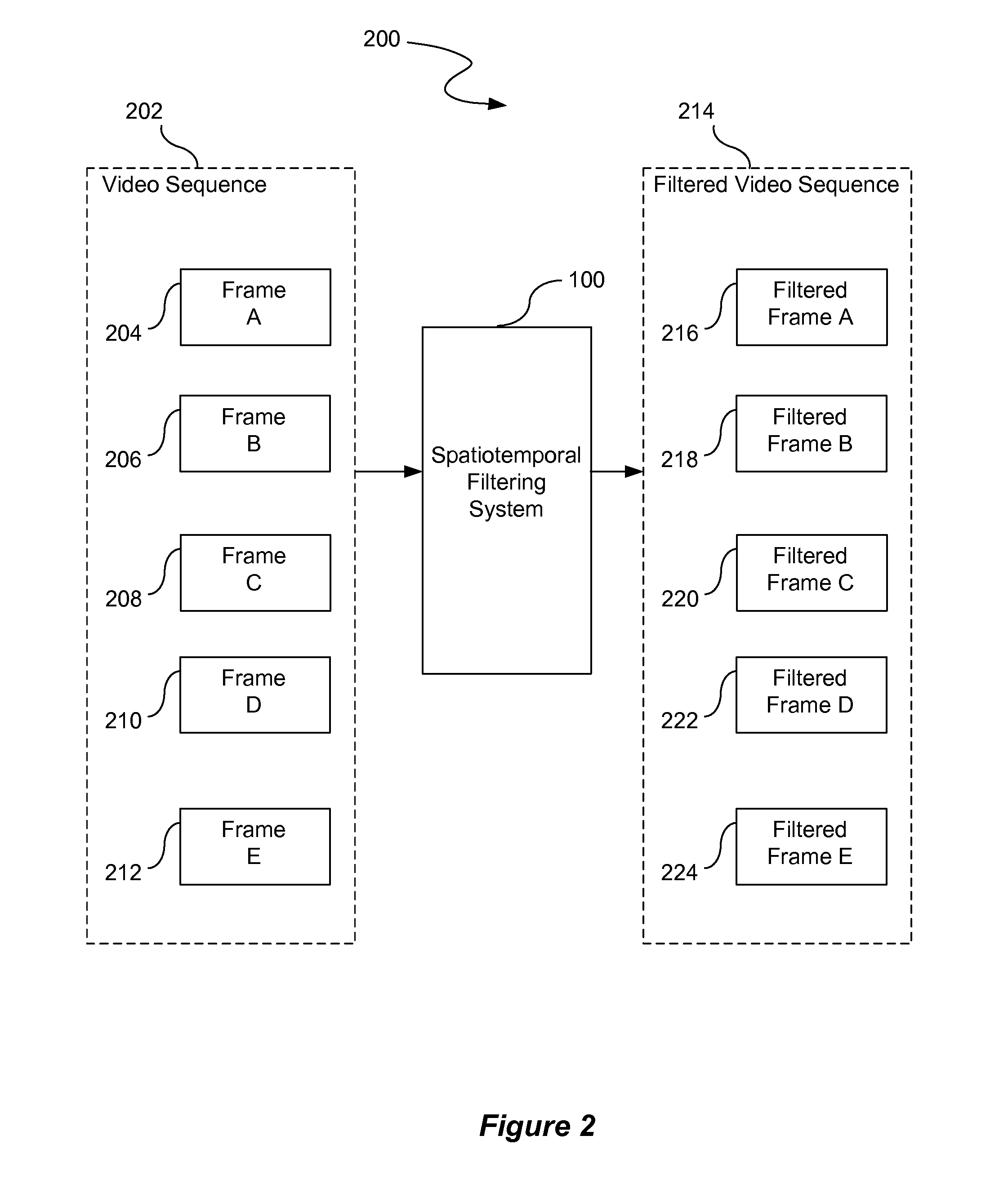

Visual loopback detection method based on auto-encoding network

Pending | CN112419317A | Improve accuracy | Improve robustness | Image enhancement | Image analysis | Cosine similarity | Feature vector

The invention discloses a visual loopback detection method based on an auto-encoding network. The visual loopback detection method comprises the following steps: 1, acquiring an image; 2, calculating a memorability score of the image, comparing the memorability score with a set memorability score threshold, determining whether to retain the image, and determining a key frame; 3, inputting the screened key frames into a trained convolutional auto-encoding network, and obtaining a GIST global feature f after noise reduction; 4, taking a feature fpre from the feature database, calculating the cosine similarity of the two feature vectors fpre and f, comparing the cosine similarity with a set similarity threshold, determining whether the frame is a candidate frame, and performing loopback verification; and 5, in the loopback verification stage, once spatial consistency verification is complete, carrying out temporal consistency verification: during continuous motion, when one image meets the loopback conditions and becomes a loopback candidate frame, the key frames obtained within a certain time range must also become candidate frames, and a loopback is finally confirmed only when these conditions are met.
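Steps 4 and 5 can be sketched as a cosine-similarity test followed by a run-length check. The interpretation below is an assumption: a loop is confirmed only after `min_consecutive` key frames in a row are candidates. The thresholds, the fixed toy database, and the function names are hypothetical, and spatial consistency verification is omitted.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def detect_loop(keyframe_features, database, sim_threshold=0.9, min_consecutive=3):
    """Return the index of the key frame at which a loop is confirmed, or None."""
    streak = 0
    for index, feature in enumerate(keyframe_features):
        is_candidate = any(
            cosine_similarity(feature, stored) >= sim_threshold for stored in database
        )
        # Temporal consistency: a single isolated match resets nothing but itself;
        # only an unbroken run of candidates confirms the loop.
        streak = streak + 1 if is_candidate else 0
        if streak >= min_consecutive:
            return index
    return None


database = [[1.0, 0.0], [0.0, 1.0]]
stream = [[0.7, 0.7], [1.0, 0.1], [0.9, 0.0], [1.0, 0.05]]
print(detect_loop(stream, database))
```

Requiring a run of candidates rather than a single match is what suppresses one-off false positives caused by visually similar but unrelated places.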

Owner:NORTHEASTERN UNIV

Method and apparatus for generating temporally consistent superpixels

Inactive | US20160210755A1 | Detect erroneous temporal consistency | Minimize the number | Image enhancement | Image analysis | Pattern recognition | Frame sequence

A method and an apparatus for generating superpixels for a sequence of frames. A feature space of the sequence of frames is separated into a color subspace and a spatial subspace. A clustering is then performed in the spatial subspace on a frame basis. In the color subspace a clustering is performed on stacked frames. An erroneous temporal consistency of a superpixel associated to a frame is detected by performing a similarity check between a corresponding superpixel in one or more past frames and one or more future frames using two or more metrics. The affected superpixels in future frames are corrected accordingly.

Owner:MAGNOLIA LICENSING LLC

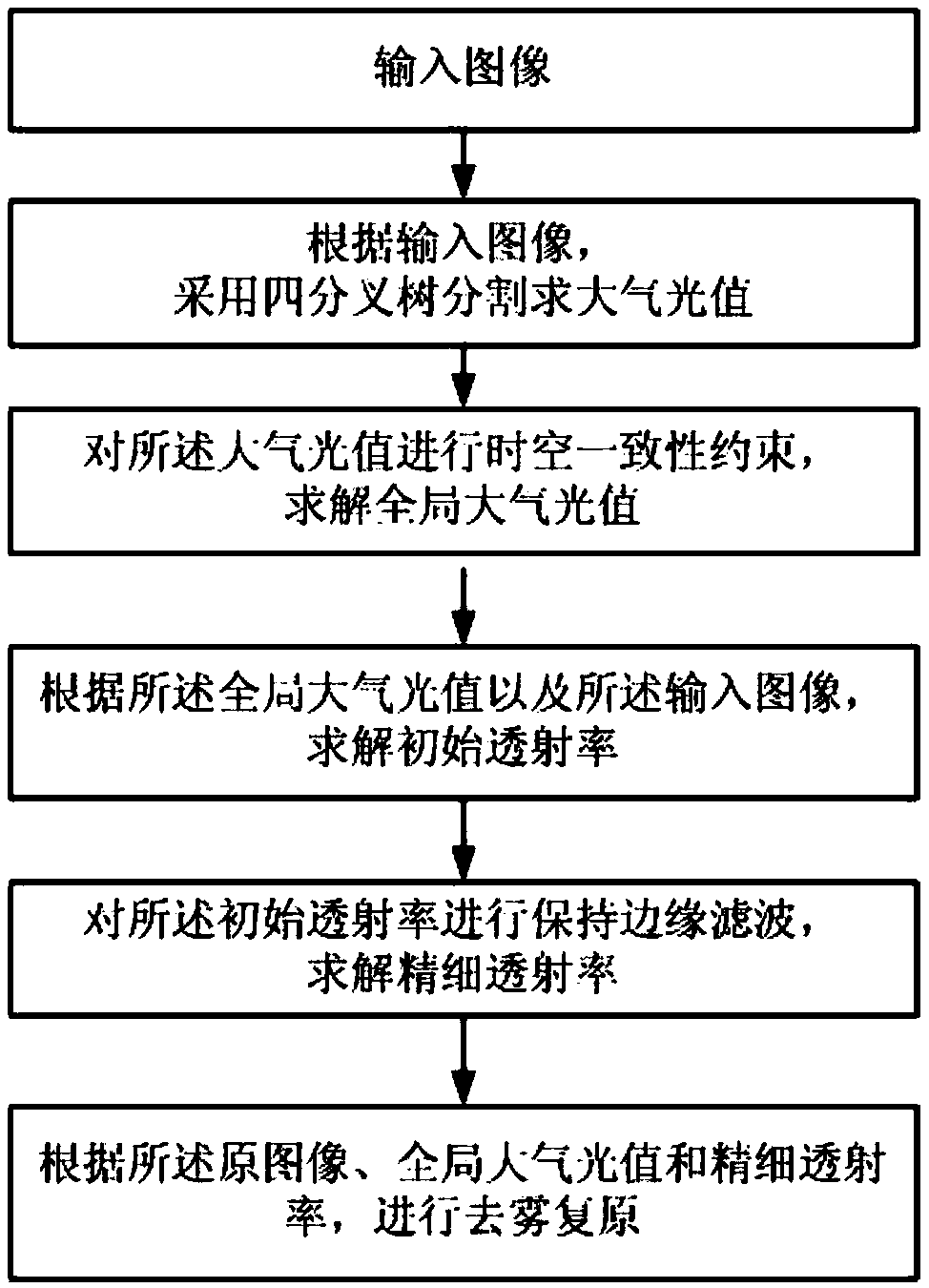

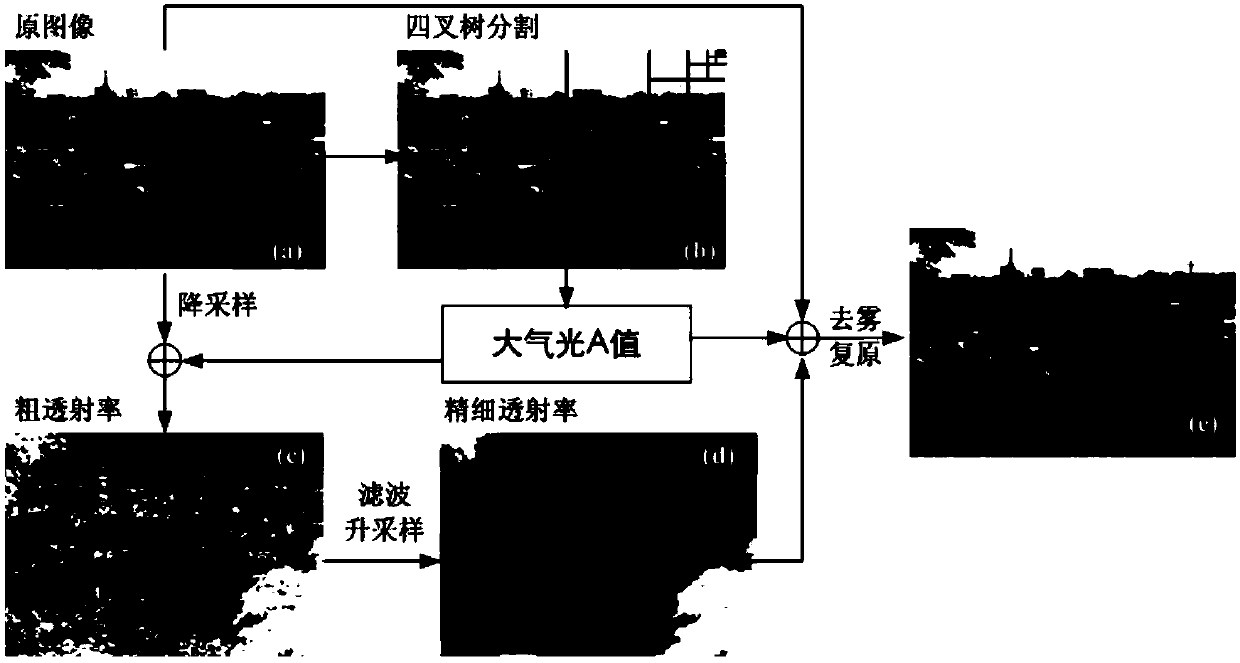

Fast video defogging method based on space-time consistency constraint

Active | CN109636735A | Avoid flickering | Easy to implement | Image enhancement | Image analysis | Temporal consistency | Self adaptive

The invention provides a fast video defogging method based on space-time consistency constraint. The method comprises: dividing the input image by using a quadtree to obtain an instantaneous atmospheric light value; and performing spatial-temporal consistency constraint on the instantaneous atmospheric light value, solving a global atmospheric light value, solving an initial transmissivity according to the global atmospheric light value and the input image, performing edge maintaining filtering on the initial transmissivity, solving a fine transmissivity, and performing defogging restoration according to the input image, the global atmospheric light value and the fine transmissivity. According to the method, the global atmospheric light value is subjected to space-time consistency constraint, so that the flickering phenomenon possibly generated when a video image is processed by a single-frame defogging algorithm is avoided. In the transmissivity estimation, the fog concentration of the image is judged, and the minimum value of the transmissivity is constrained by using the judgment result, so that the self-adaptive capability of the method under different fog conditions is ensured. A comparison algorithm is mostly adopted in transmissivity estimation, and hardware logic implementation and transplantation are very convenient.
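The flicker-avoidance idea can be sketched with the widely used haze model I = J·t + A·(1 − t), where I is the observed intensity, J the restored intensity, A the atmospheric light, and t the transmissivity. Both the use of this model and the exponential smoothing below are assumptions standing in for the patent's unspecified constraint; the fixed floor `t_min` replaces the fog-concentration-dependent minimum mentioned in the abstract, and all names are hypothetical.

```python
def smooth_atmospheric_light(previous_global, instantaneous, alpha=0.9):
    """Temporal constraint (assumed form): exponential smoothing across frames."""
    if previous_global is None:
        return instantaneous
    return alpha * previous_global + (1.0 - alpha) * instantaneous


def restore_pixel(intensity, atmospheric_light, transmission, t_min=0.1):
    """Invert I = J*t + A*(1 - t) for J, with a floor on t to avoid amplifying noise."""
    t = max(transmission, t_min)
    return (intensity - atmospheric_light) / t + atmospheric_light


global_light = None
for frame_light in [0.80, 0.95, 0.78]:      # per-frame estimates that jitter
    global_light = smooth_atmospheric_light(global_light, frame_light)
    print(round(global_light, 4), round(restore_pixel(0.6, global_light, 0.5), 4))
```

Because the restored value depends directly on A, damping frame-to-frame jumps in the atmospheric light estimate also damps brightness jumps in the output video.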

Owner:LUOYANG INST OF ELECTRO OPTICAL EQUIP OF AVIC

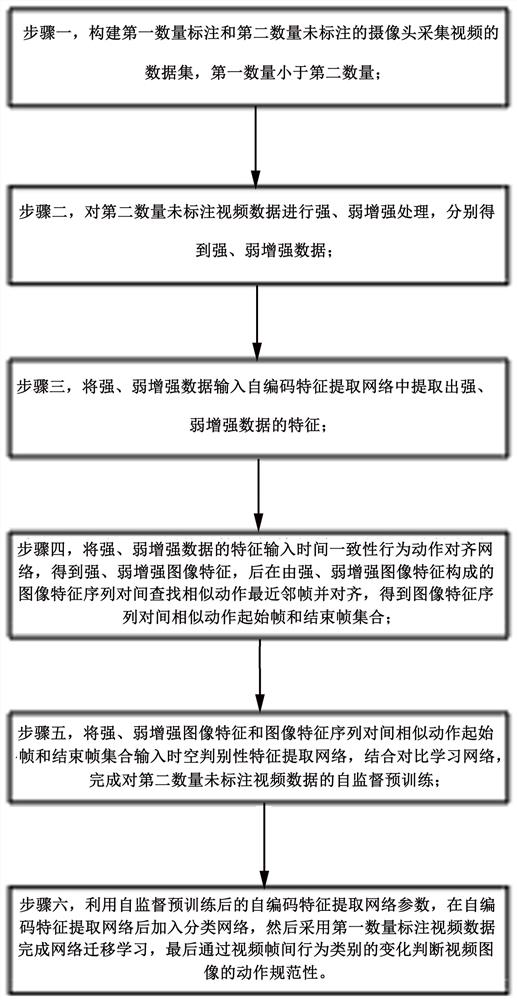

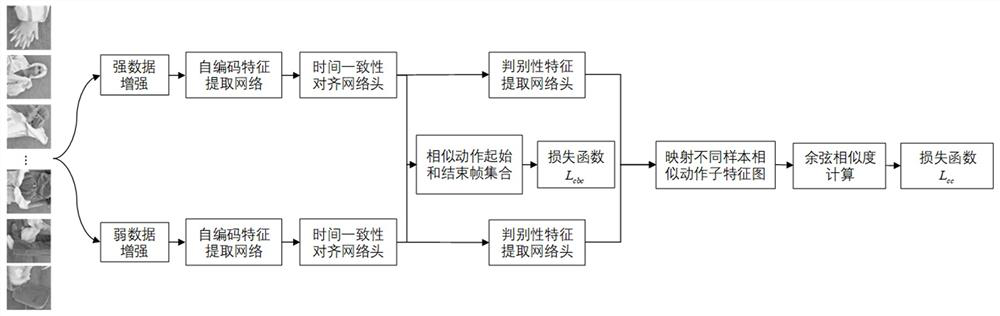

Motion normalization detection method and device based on time consistency contrast learning

Pending | CN114648723A | Low cost | Improve performance | Character and pattern recognition | Neural architectures | Pattern recognition | Video monitoring

The invention relates to the field of intelligent video monitoring and deep learning, in particular to an action normalization detection method and device based on time consistency comparative learning, and the method comprises the steps: firstly constructing a data set for videos which are acquired through a camera and are marked by a first number and are not marked by a second number, and the first number is smaller than the second number; secondly, performing strong and weak data enhancement on an unlabeled video, extracting features, inputting the features into a time consistency behavior alignment network, outputting a feature map and a similar action starting and ending frame set between different samples, mapping sub-feature maps corresponding to the sets on the feature map, and constructing similar and different types of sub-feature map samples; sending to a comparative learning network to extract space-time discriminant features; the first number of labeled videos are sent to a pre-trained network for transfer learning, and behavior categories are output; and finally, the behavior normalization is judged according to the inter-frame behavior category change, and if the behavior normalization is not standard, early warning is given out.

Owner:ZHEJIANG LAB +1

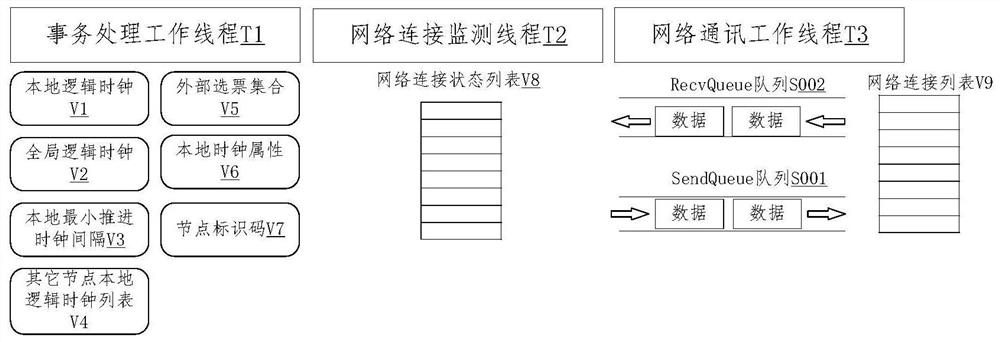

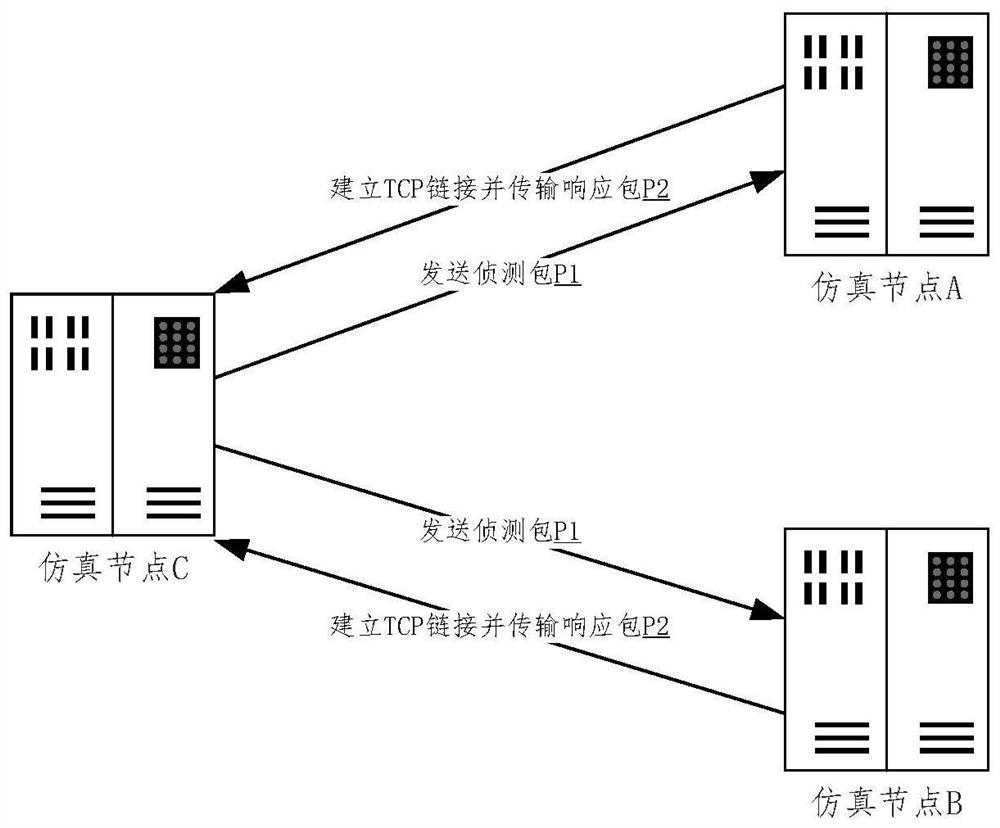

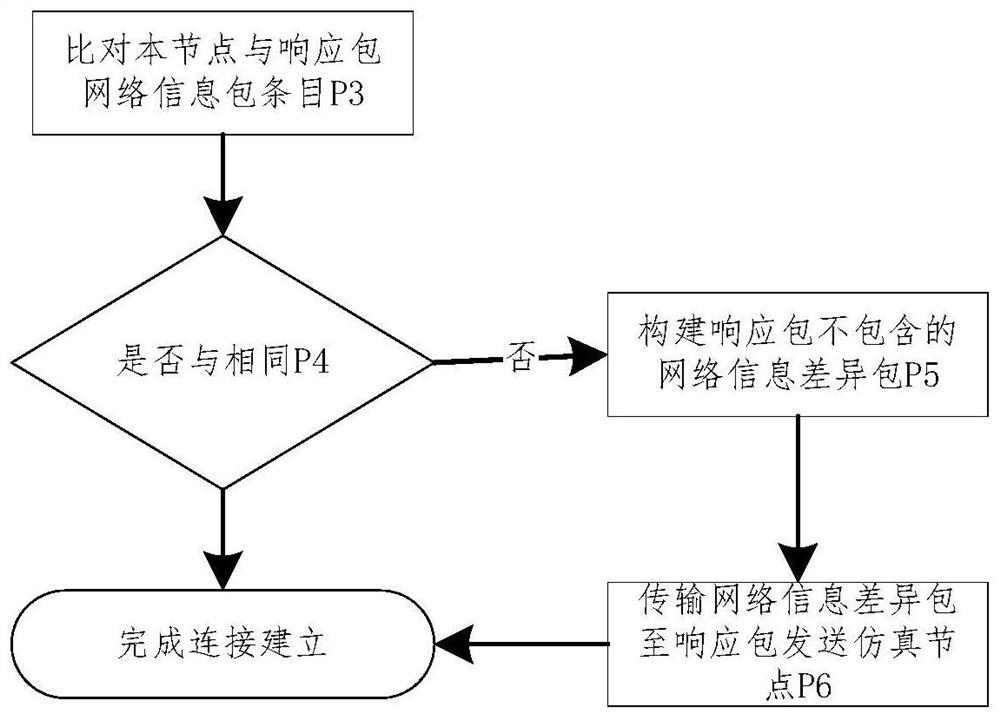

Time consistency synchronization method for distributed simulation

Active | CN111641470A | Guaranteed correctness | Ensure consistency | Synchronisation information channels | Time-division multiplex | Temporal consistency | Engineering

The invention belongs to the technical field of time synchronization for computer co-simulation, and particularly relates to a time consistency synchronization method for distributed simulation. According to the method, the most appropriate master clock is selected by vote, and the other clocks in the network are synchronized to the master clock, so that the data of each node and time-related events remain consistent in time logic. Even if a simulation node goes down, the influence on the rest of the system is relatively small; the design is effectively decentralized, time consistency is ensured to the greatest extent, and the correctness and scalability of the whole distributed system are ensured.
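The vote-then-synchronize flow can be sketched as follows. The abstract does not say how nodes decide whom to vote for or how ties are broken, so the tie-break rule, the offset-based synchronization, and the function names here are assumptions for illustration only.

```python
from collections import Counter


def elect_master(votes):
    """Pick the node with the most votes; ties go to the smallest node id (assumed rule)."""
    tally = Counter(votes.values())
    best = max(tally.values())
    return min(node for node, count in tally.items() if count == best)


def synchronize(clocks, master):
    """Return per-node offsets that would bring every clock to the master's time."""
    return {node: clocks[master] - t for node, t in clocks.items()}


clocks = {"a": 100.0, "b": 100.4, "c": 99.7}
votes = {"a": "b", "b": "b", "c": "a"}        # voter -> preferred master
master = elect_master(votes)
print(master, synchronize(clocks, master))

# If the master goes down, the remaining nodes simply vote again.
del clocks["b"]
votes = {"a": "a", "c": "a"}
print(elect_master(votes))
```

Re-running the election after a failure is what keeps the scheme from depending on any single fixed node.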

Owner:HARBIN ENG UNIV

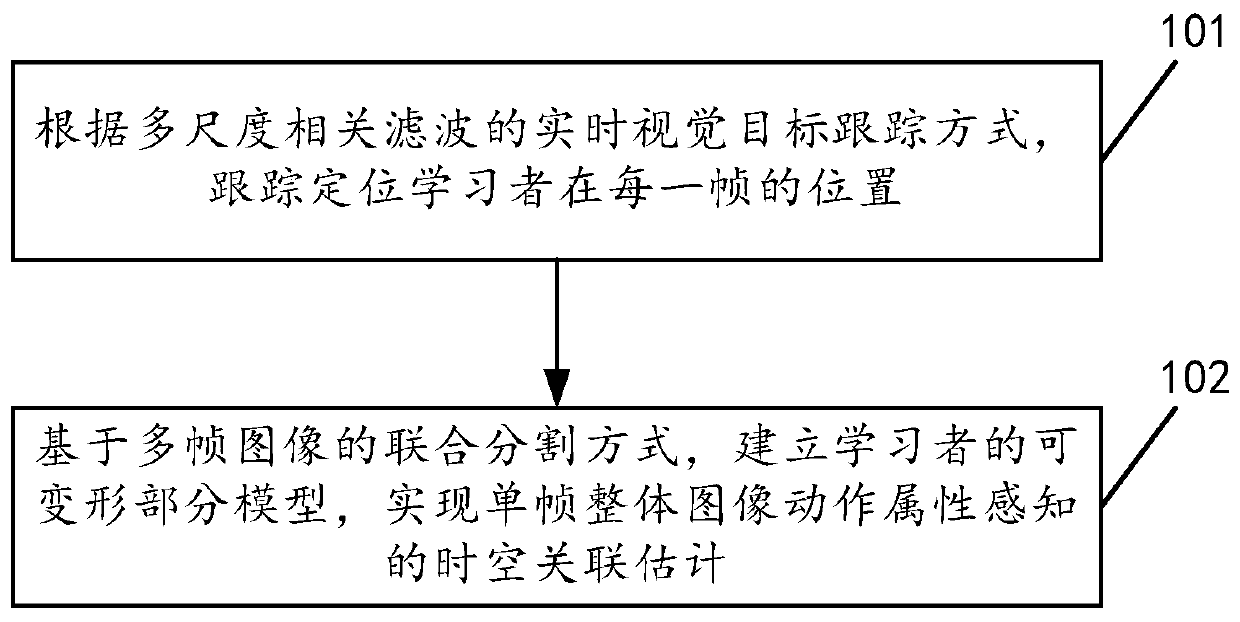

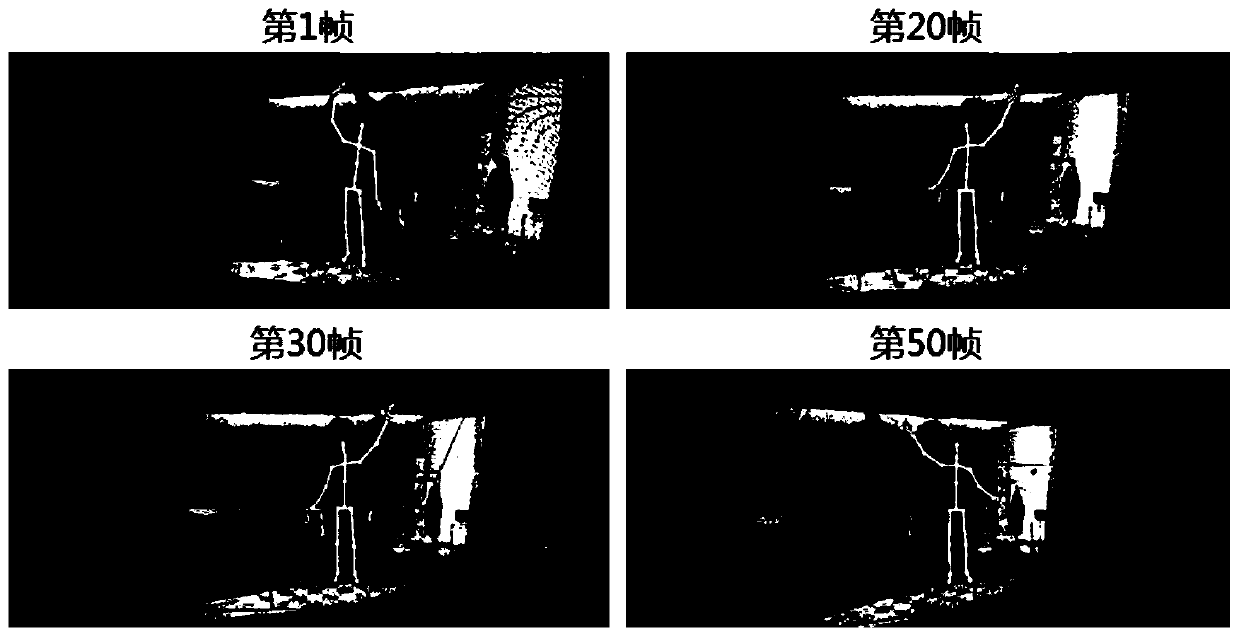

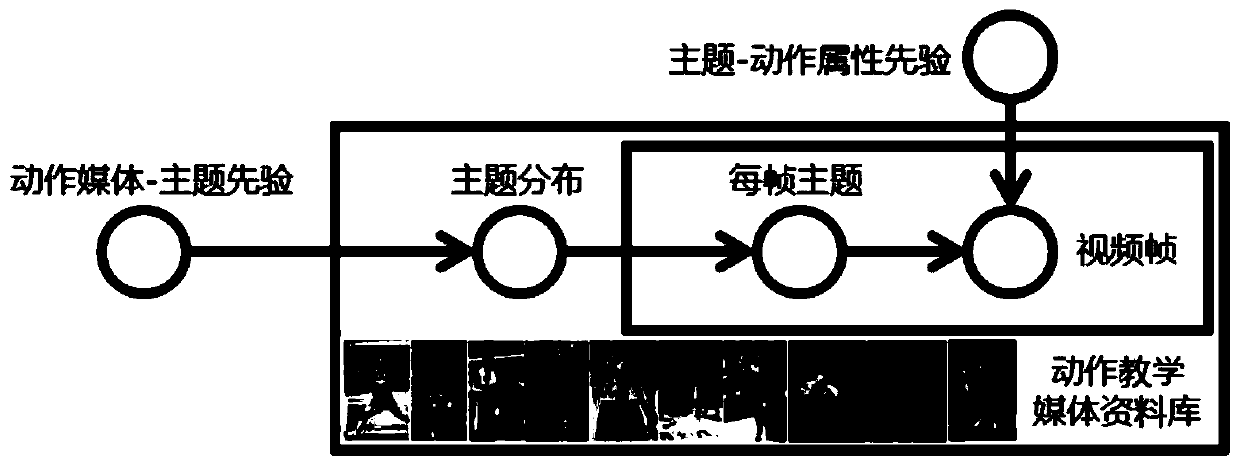

A physical action attribute analysis and recognition method for intelligent teaching space-time correlation

Inactive | CN109919024A | Realize analysis | Achieving identifiability | Data processing applications | Image analysis | Pattern recognition | Temporal consistency

The invention discloses a physical action attribute analysis and recognition method for intelligent teaching space-time correlation. The method comprises the following steps: tracking and locating the position of a learner in each frame using real-time visual target tracking based on multi-scale correlation filtering; and, based on joint segmentation of multiple frames, establishing a deformable part model of the learner and realizing space-time correlation estimation of action attribute perception for the single-frame overall image. The scheme can improve the spatial-temporal structure prediction and recognition capability for high-quality physical-action education resources (visual media) on a public education resource service platform. The structural, continuous and spatial-temporal consistency of physical actions is analyzed to realize analysis and recognition of the physical actions and accurate prediction of their change trend.

Owner:陈强 +2

Method for generating spatial-temporally consistent depth map sequences based on convolution neural networks

Owner:ZHEJIANG GONGSHANG UNIVERSITY

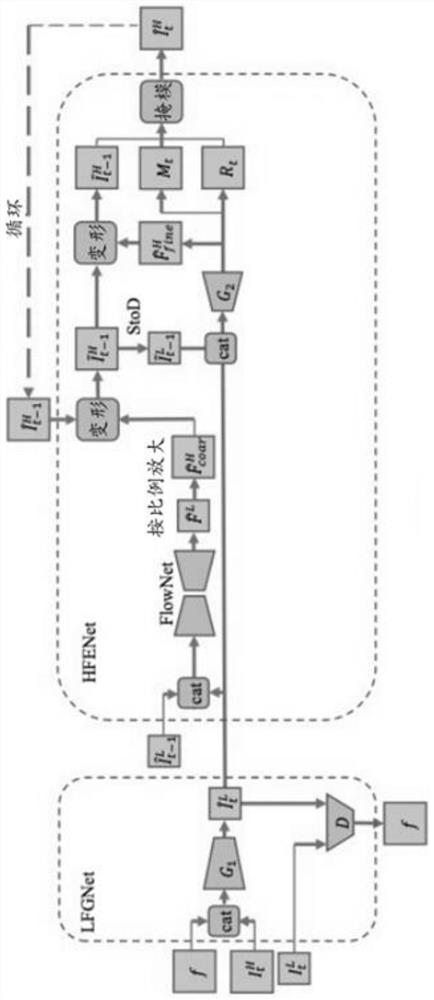

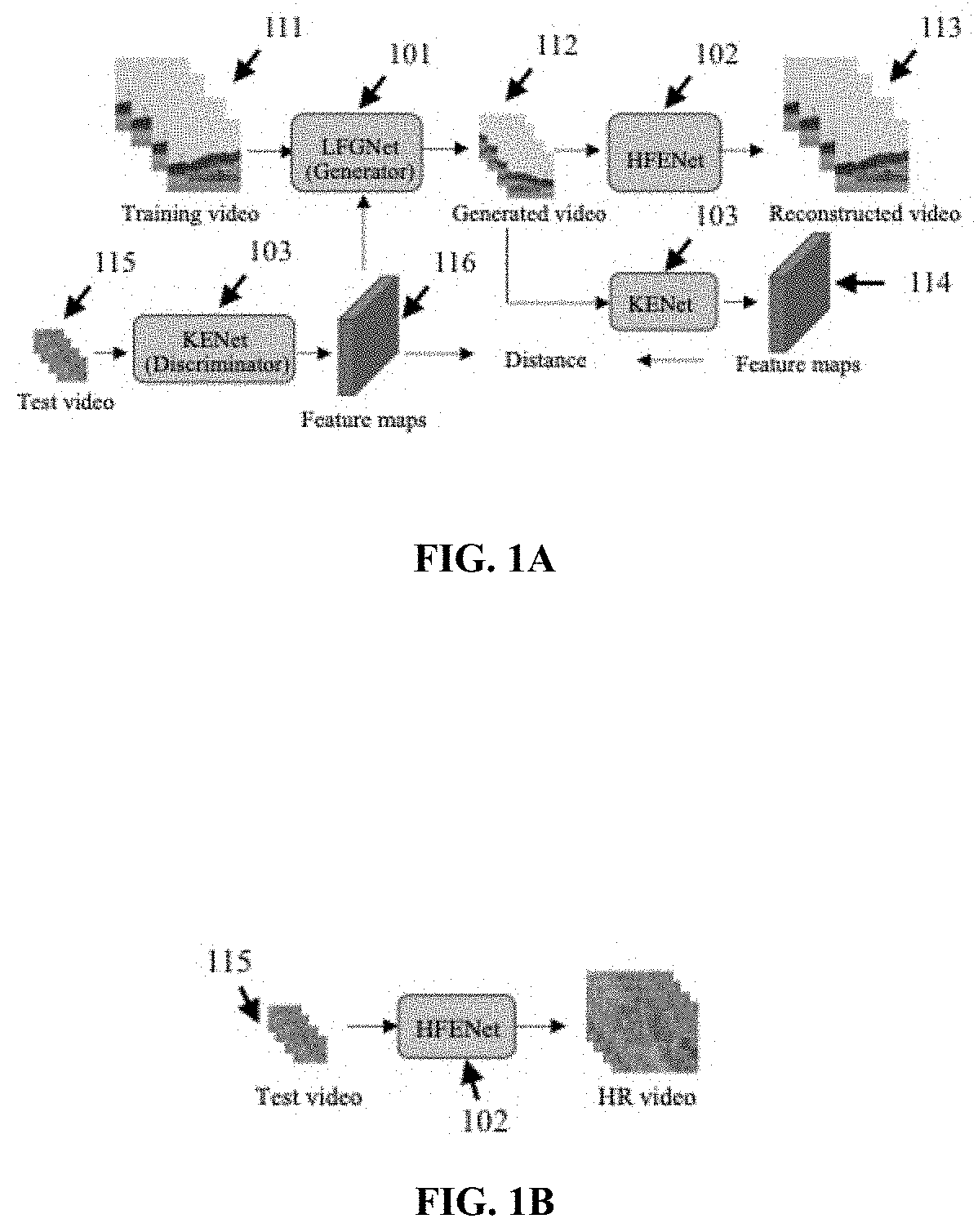

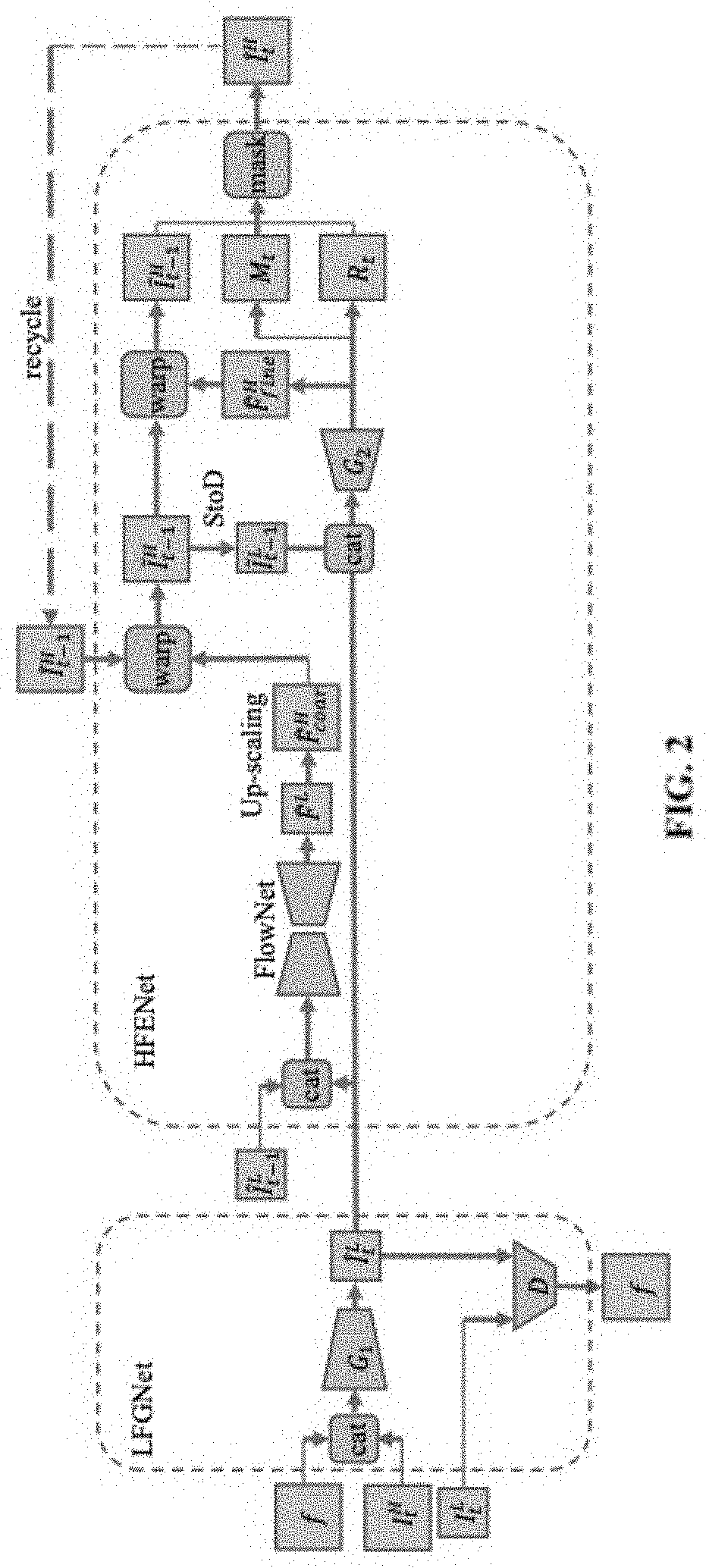

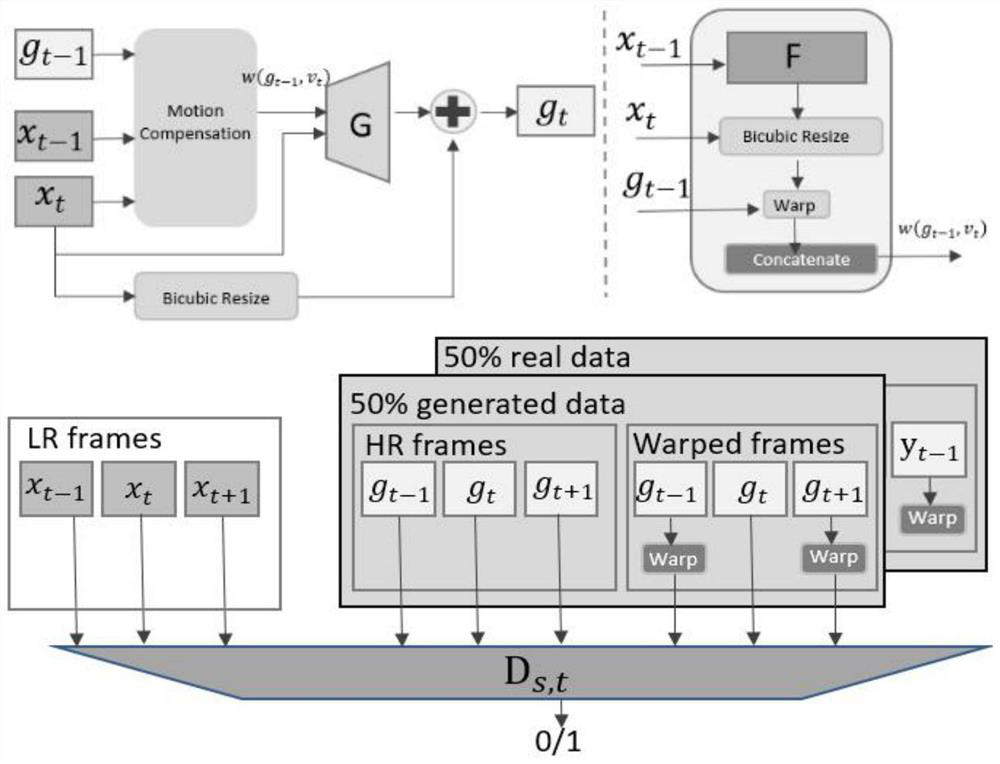

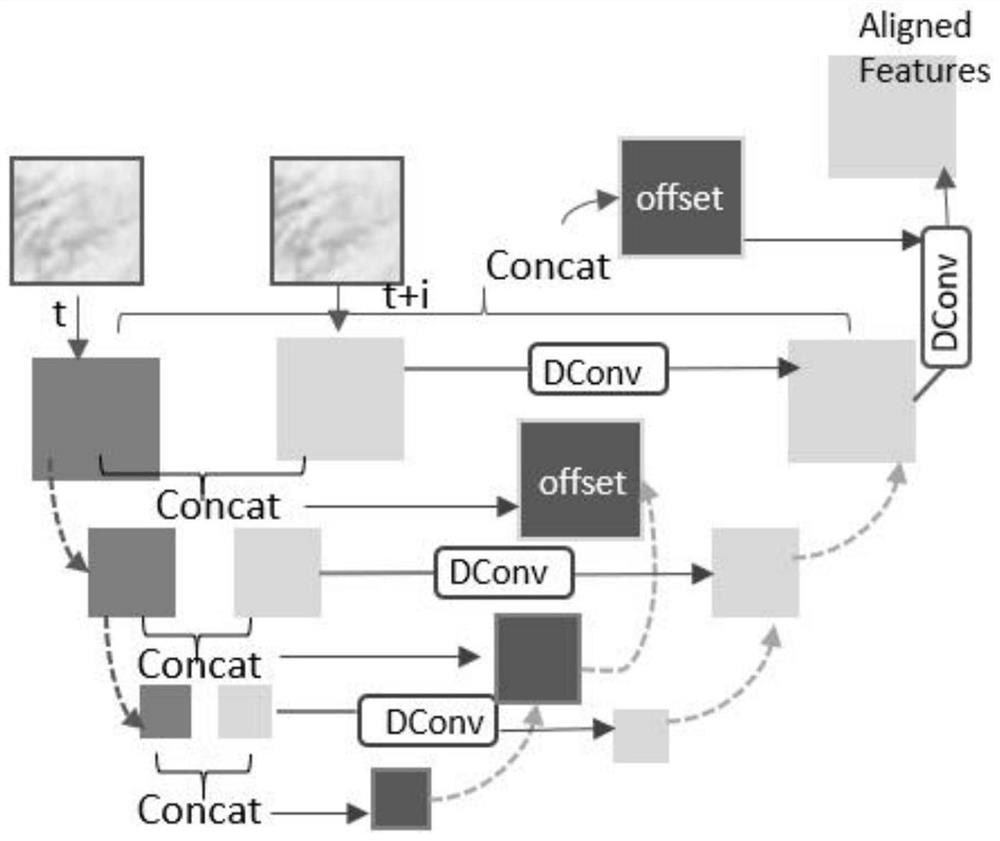

Apparatus and method for unsupervised video super-resolution using generative adversarial networks

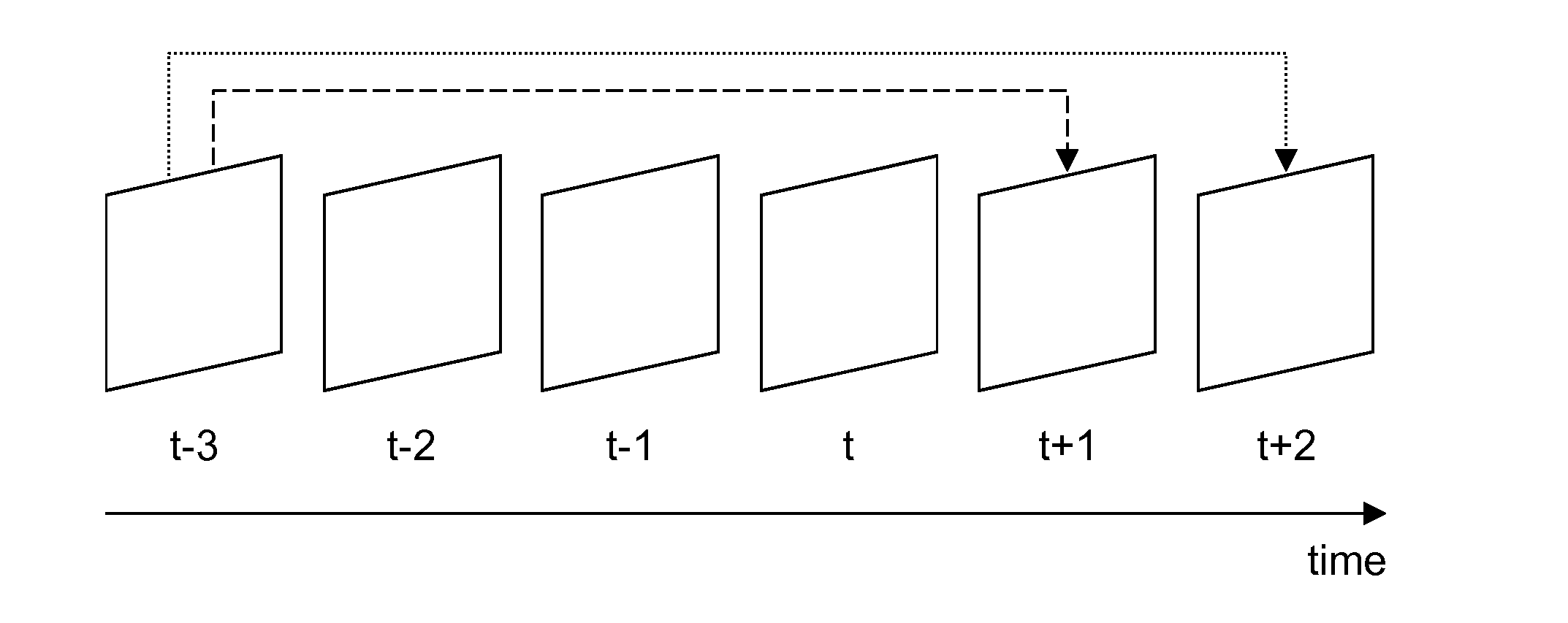

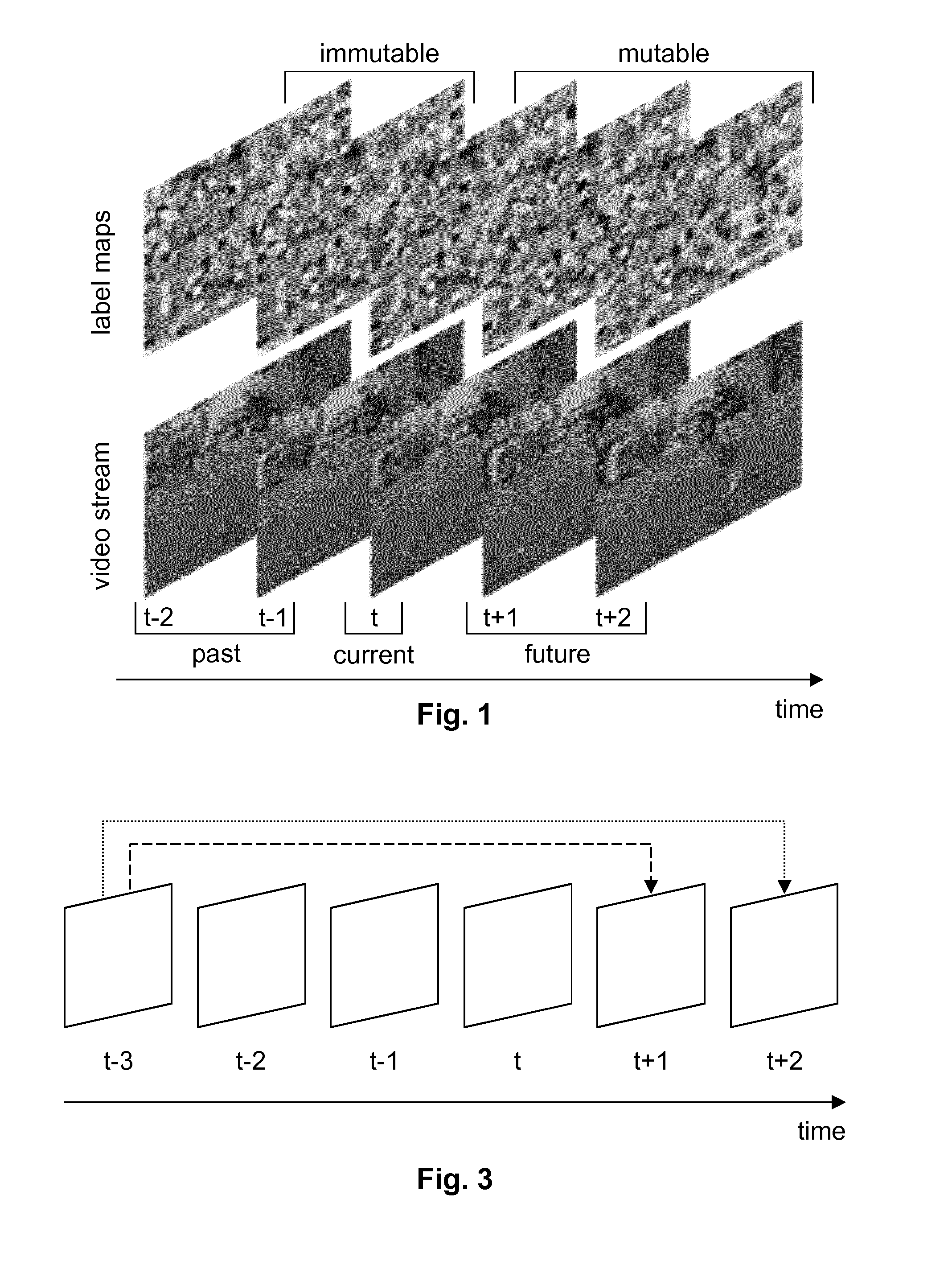

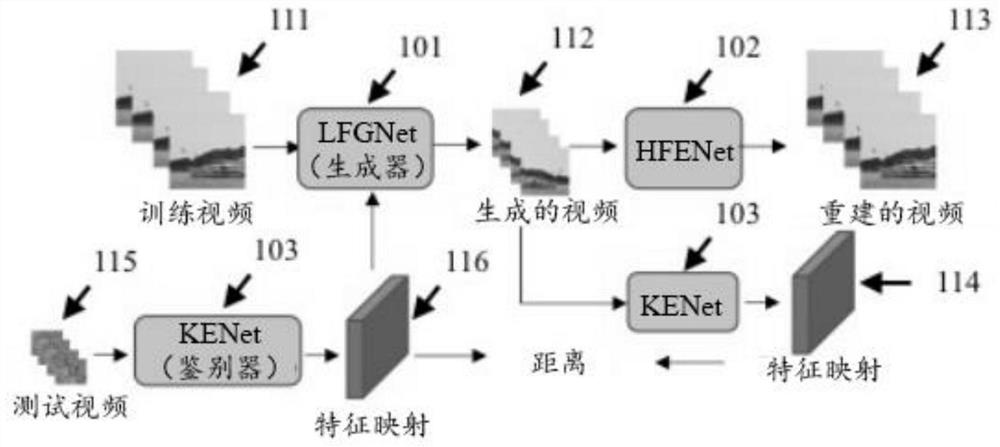

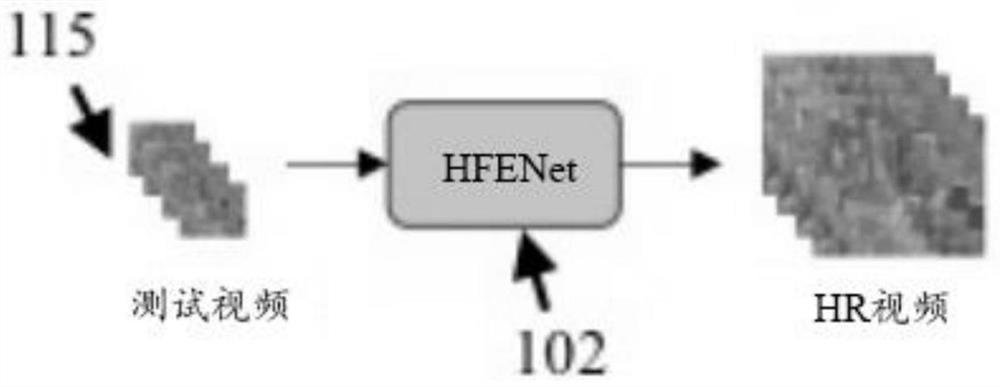

A method of video super-resolution (VSR) with temporal consistency using a generative adversarial network (VistGAN) that requires only the training high-resolution video sequence to generate pairs of high-resolution / low-resolution video frames for training, without the need for artificially pre-synthesized pairs of high-resolution / low-resolution video frames. With this unsupervised learning approach, the encoder degrades the input high-resolution video frames of the training high-resolution video sequence to their low-resolution counterparts, and the decoder attempts to recover the original high-resolution video frames from the low-resolution video frames. To improve temporal consistency, the unsupervised learning approach provides a sliding window that explores temporal correlation in both the high-resolution and low-resolution domains. It maintains temporal consistency and also makes full use of high-frequency details from the most recently generated reconstructed high-resolution video frame.

Owner:THE HONG KONG UNIV OF SCI & TECH

VistGAN: unsupervised video super-resolution with temporal consistency using GAN

Active | US20210281878A1 | Stable training | Improve consistency | Image enhancement | Image analysis | Image resolution | Generative adversarial network

A VSR approach with temporal consistency using generative adversarial networks (VistGAN) that requires only the training HR video sequence to generate the HR / LR video frame pairs for training, instead of artificially pre-synthesized HR / LR video frame pairs. With this unsupervised learning method, the encoder degrades the input HR video frames of a training HR video sequence to their LR counterparts, and the decoder seeks to recover the original HR video frames from the LR video frames. To improve temporal consistency, the unsupervised learning method provides a sliding window that explores the temporal correlation in both the HR and LR domains. It maintains temporal consistency and also fully utilizes high-frequency details from the last-generated reconstructed HR video frame.

Owner:THE HONG KONG UNIV OF SCI & TECH
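The data flow described in the VistGAN entries above (HR frames degraded to LR counterparts, then a sliding window over both domains) can be sketched as follows. This is only an illustration of the pairing and windowing: in the abstract the degradation is performed by a learned encoder, whereas here a fixed 2x average pooling (`downsample2x`) stands in for it. All function names and the window size are hypothetical.

```python
def downsample2x(frame):
    """Average-pool a 2D frame (list of rows) by a factor of 2.

    Stand-in for the learned encoder; an assumption made for this sketch.
    """
    h, w = len(frame), len(frame[0])
    return [
        [
            (frame[y][x] + frame[y][x + 1] + frame[y + 1][x] + frame[y + 1][x + 1]) / 4.0
            for x in range(0, w - 1, 2)
        ]
        for y in range(0, h - 1, 2)
    ]


def sliding_windows(frames, size):
    """Yield every run of `size` consecutive frames."""
    for start in range(len(frames) - size + 1):
        yield frames[start:start + size]


def make_training_windows(hr_frames, size=3):
    """Pair each HR window with the LR window derived from the same frames."""
    lr_frames = [downsample2x(f) for f in hr_frames]
    return list(zip(sliding_windows(hr_frames, size), sliding_windows(lr_frames, size)))


if __name__ == "__main__":
    # Four constant 4x4 frames with values 0.0, 1.0, 2.0, 3.0.
    hr = [[[float(k)] * 4 for _ in range(4)] for k in range(4)]
    for hr_win, lr_win in make_training_windows(hr):
        print(len(hr_win), len(lr_win), len(lr_win[0]), len(lr_win[0][0]))
```

Only the HR sequence is supplied; the LR frames are derived from it, which is the sense in which no pre-synthesized HR / LR pairs are needed.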

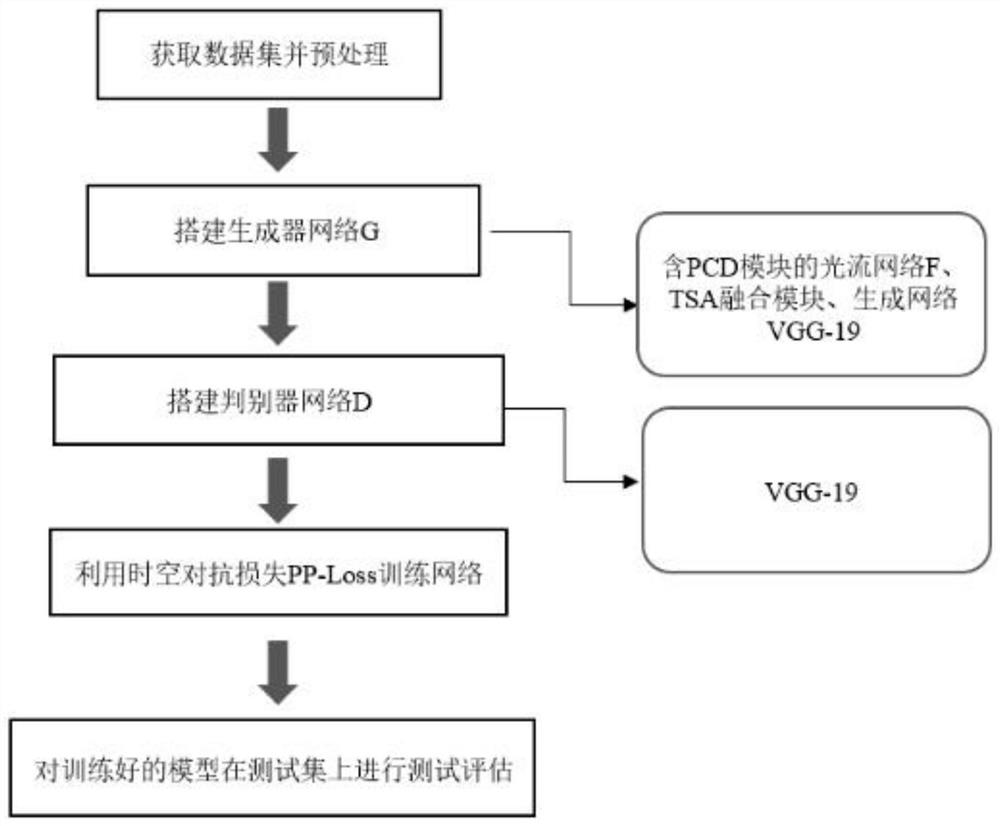

Three-dimensional signal processing method based on space-time adversarial

Pending | CN112215140A | Good estimate | High spatio-temporal consistency | Character and pattern recognition | Neural architectures | Time information | Image resolution

The invention discloses a three-dimensional signal processing method based on space-time adversarial learning. The space-time adversarial network comprises a cycle generator, an optical flow estimation network and a spatio-temporal discriminator. The cycle generator recursively generates high-resolution video frames from the low-resolution input; the optical flow estimation network learns motion compensation between frames; and the spatio-temporal discriminator takes both spatial and temporal aspects into account and penalizes unrealistic temporal discontinuities in the result without excessively smoothing the image content. The method addresses the marked loss of visual quality under diverse and fuzzy motions in overclocking and super-resolution, makes full use of the temporal information in the video, and guarantees the spatio-temporal consistency of the super-resolved video.

Owner:苏州天必佑科技有限公司

Improving temporal consistency in video sharpness enhancement

Inactive | CN1608378A | Good time consistency | Television system details | Color television details | IIR filtering | Motion vector

A method and system are provided for improving the temporal consistency of an enhanced signal (210) representing at least one frame, using a sharpness enhancement algorithm with an enhancement gain. The method comprises the steps of: receiving the enhanced signal (210) comprising at least one frame; obtaining an enhancement gain for each pixel in the frame; retrieving an enhancement gain value for each pixel in a reference frame from a gain memory using motion vectors; identifying whether the frame is an I, P or B frame type; and determining an updated enhancement gain for an I frame type by computing a gain map for use in the sharpness enhancement algorithm (235). The updated enhancement gain for each pixel is equal to the enhancement gain previously determined for use in the sharpness enhancement algorithm. Additionally, the method includes storing the updated enhancement gain in the gain memory, and applying the updated enhancement gain to the sharpness enhancement algorithm to improve temporal consistency of the enhanced signal (210).

Owner:KONINKLIJKE PHILIPS ELECTRONICS NV
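The gain-memory steps in the entry above can be sketched in Python as below. The abstract only states how the updated gain is determined for I frames; reusing the motion-compensated gain from the reference frame for P and B frames is an assumption made here for illustration. The per-pixel `(dy, dx)` motion-vector convention, the border clamping, and the function names are likewise hypothetical.

```python
def motion_compensated_gain(gain_memory, motion_vectors, y, x):
    """Fetch the reference-frame gain that pixel (y, x) points to.

    motion_vectors[y][x] is a (dy, dx) offset into the reference frame
    (assumed convention); coordinates are clamped to the frame border.
    """
    h, w = len(gain_memory), len(gain_memory[0])
    dy, dx = motion_vectors[y][x]
    ry = min(max(y + dy, 0), h - 1)
    rx = min(max(x + dx, 0), w - 1)
    return gain_memory[ry][rx]


def update_gain(frame_type, gain_map, gain_memory, motion_vectors):
    """Return the updated per-pixel gain for the current frame.

    I frames (or an empty memory): use the freshly computed gain map.
    P/B frames: reuse the motion-compensated gain (assumption, see lead-in).
    """
    h, w = len(gain_map), len(gain_map[0])
    if frame_type == "I" or gain_memory is None:
        return [row[:] for row in gain_map]
    return [
        [motion_compensated_gain(gain_memory, motion_vectors, y, x) for x in range(w)]
        for y in range(h)
    ]


if __name__ == "__main__":
    memory = update_gain("I", [[1.0, 2.0], [3.0, 4.0]], None, None)
    vectors = [[(0, -1), (0, -1)], [(0, -1), (0, -1)]]
    # The caller would store this result back into the gain memory.
    print(update_gain("P", [[9.0, 9.0], [9.0, 9.0]], memory, vectors))
```

Carrying the gain along the motion trajectory, rather than recomputing it independently per frame, is what keeps the enhancement strength of a moving object from flickering between frames.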

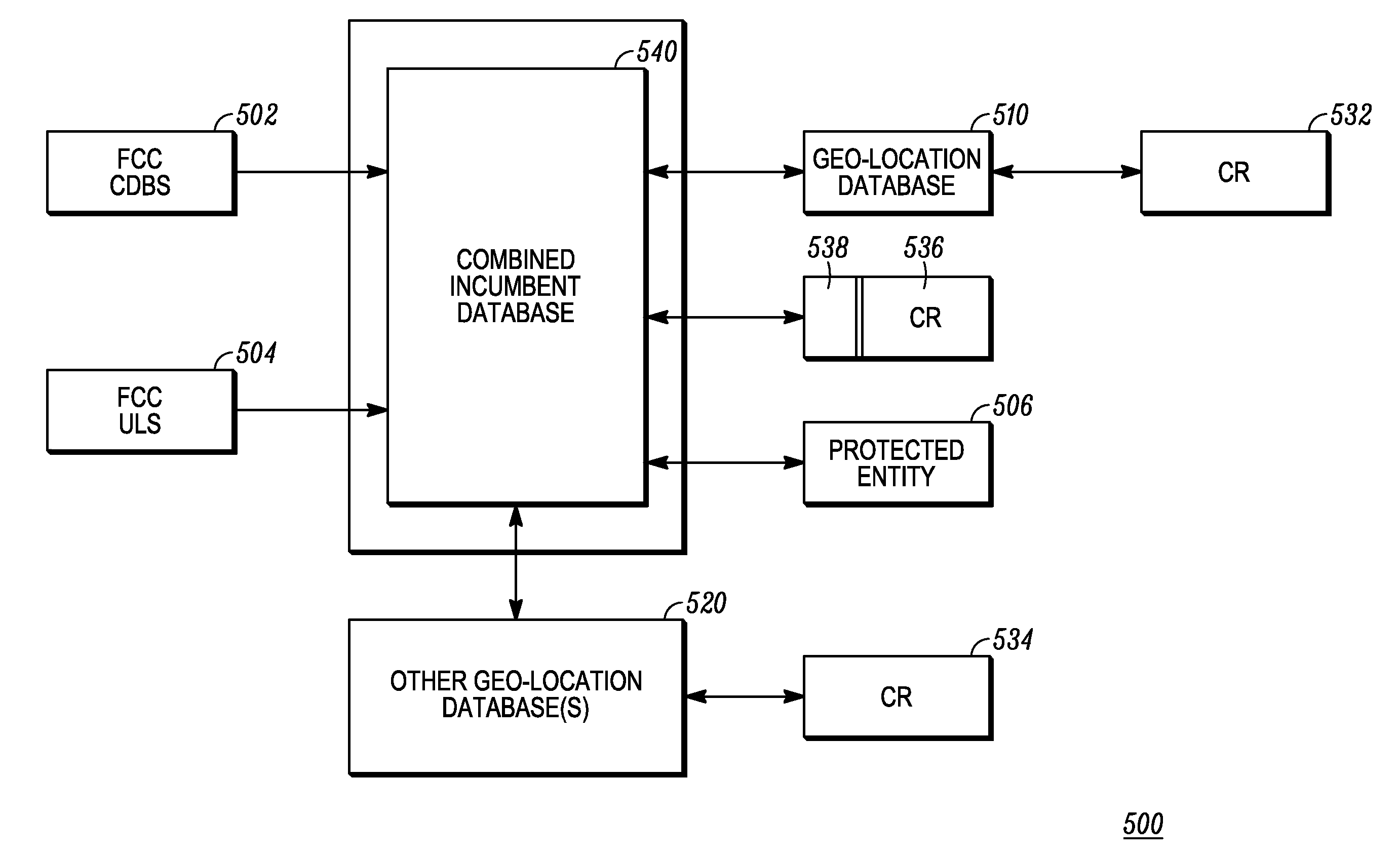

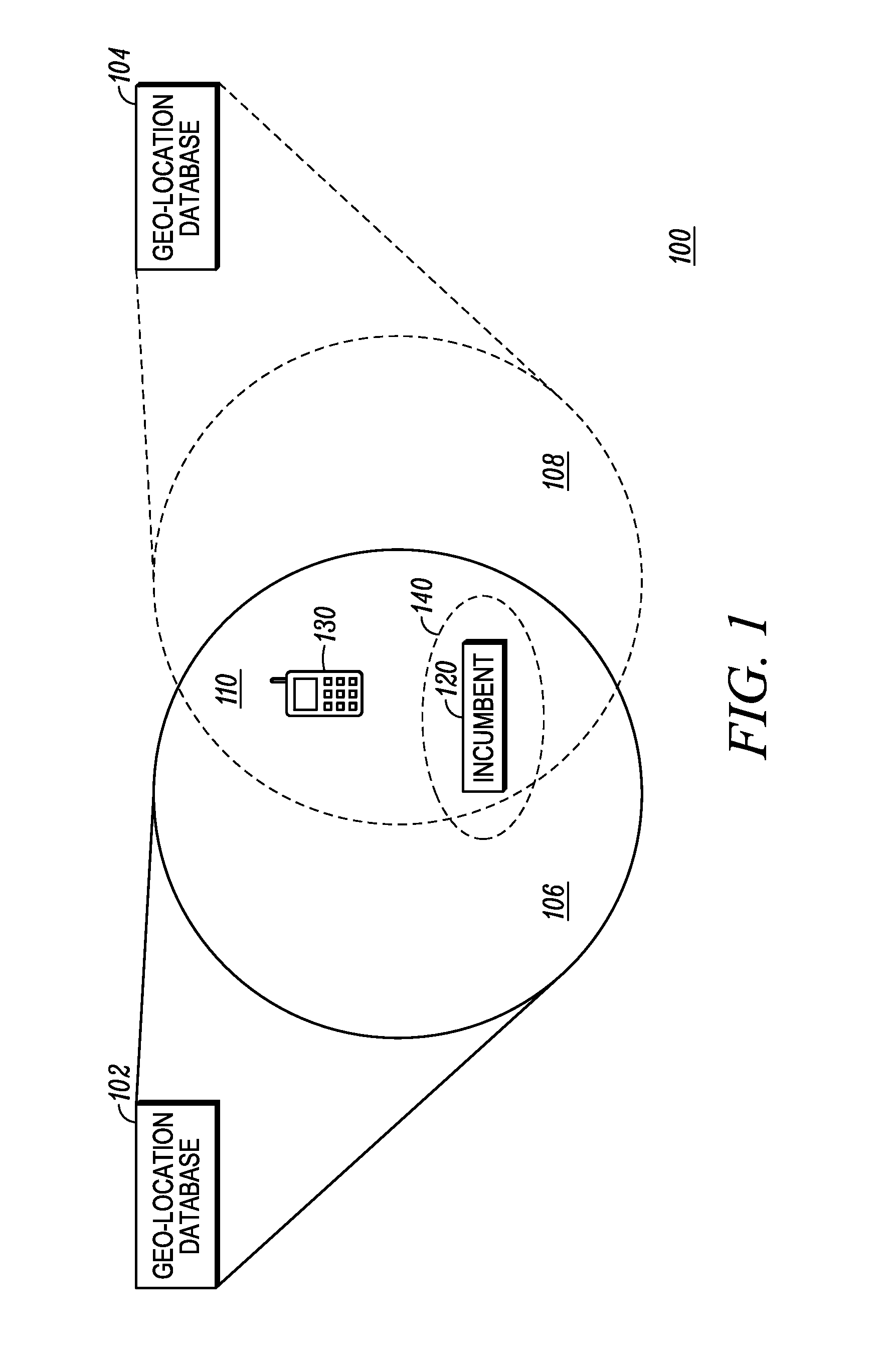

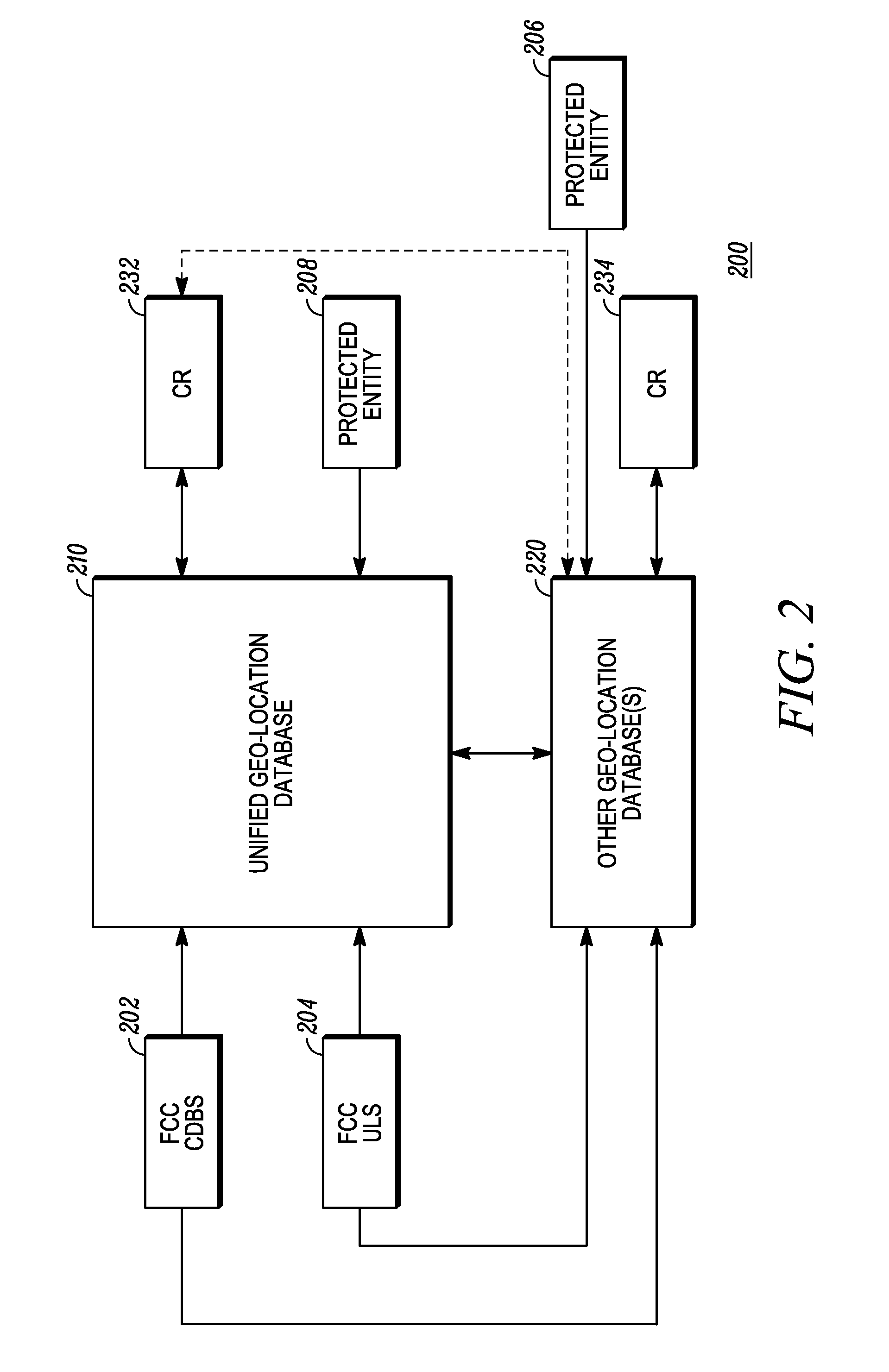

Method and apparatus for automatically ensuring consistency among multiple spectrum databases

Active | US8589359B2 | Digital data processing details | Multi-dimensional databases | Database query | Frequency spectrum

An apparatus and method of providing accurate and consistent open spectrum results for secondary devices from different geo-location databases is presented. The results, which may be independently derived by each database, are independent of the database queried. The comparison permits some amount of latitude in spatial and temporal consistency between the databases as errors are only indicated if the temporal or spatial discrepancies are pervasive. In addition, large percentages of different locations showing discrepancies when compared also lead to corrective action being taken. Corrective actions that may be taken include forcing problematic databases to update, shunting requests by secondary devices in the problematic locations to acceptable databases or shutting down the problematic databases entirely.

Owner:MOTOROLA SOLUTIONS INC
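The tolerance idea in the entry above (isolated discrepancies are permitted, pervasive ones trigger corrective action) can be sketched as a simple rate check. This is a minimal illustration, not the patented comparison: the representation of query results as a mapping from location to a set of open channels, the 25% threshold, and the function names are all assumptions.

```python
def discrepancy_rate(results_a, results_b):
    """Fraction of commonly queried locations where two databases disagree.

    Each argument maps a location id to the set of open channels returned
    by one database (hypothetical representation).
    """
    shared = results_a.keys() & results_b.keys()
    if not shared:
        return 0.0
    differing = sum(1 for loc in shared if results_a[loc] != results_b[loc])
    return differing / len(shared)


def needs_corrective_action(results_a, results_b, max_rate=0.25):
    """Flag the pair only when discrepancies exceed max_rate (assumed threshold)."""
    return discrepancy_rate(results_a, results_b) > max_rate


if __name__ == "__main__":
    db_a = {"loc1": {21, 22}, "loc2": {30}, "loc3": {41}, "loc4": set()}
    db_b = {"loc1": {21, 22}, "loc2": {31}, "loc3": {41}, "loc4": set()}
    print(discrepancy_rate(db_a, db_b), needs_corrective_action(db_a, db_b))
```

When the check fires, the corrective actions listed in the abstract apply: forcing an update, redirecting requests to an acceptable database, or shutting the problematic database down.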


© 2025 PatSnap. All rights reserved. Contact: help@patsnap.com