No-reference video quality evaluation method based on three-dimensional spatial-temporal feature decomposition

This no-reference video quality evaluation technology, applied in the fields of image processing and video processing, addresses three problems: insufficient extraction of spatiotemporal features from distorted video, insufficient representation of distorted semantic information, and neglect of temporal modeling. It aims to improve the effectiveness and accuracy of the evaluation, offering high practicability and accurate results.


Embodiment Construction

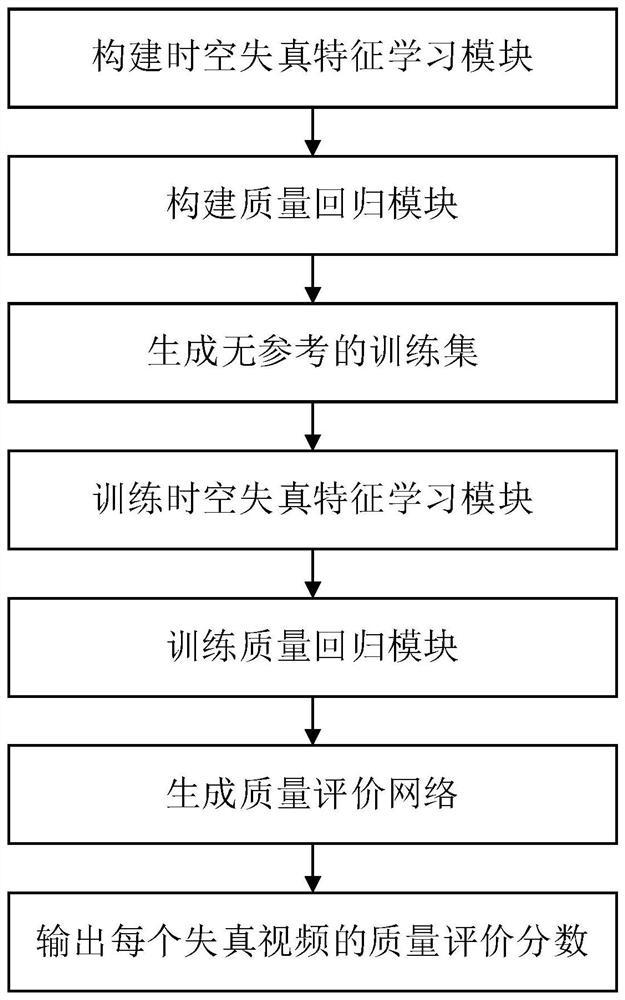

[0052] The specific steps of the present invention are described in further detail below with reference to Figure 1.

[0053] Step 1, construct the spatio-temporal distortion feature learning module.

[0054] Build a spatio-temporal distortion feature learning module, the structure of which is as follows: rough feature extraction unit → 1st residual subunit → 1st pooling layer → Non-Local unit → 2nd residual subunit → 2nd pooling layer → 3rd residual subunit → 3rd pooling layer → 4th residual subunit → global pooling layer → fully connected layer.
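The stage order in [0054] can be written down as a simple sequence. The sketch below is only an illustration: the stage names are hypothetical labels for the units listed above, and `pool_factor` is an assumed downsampling factor, since the text gives no kernel sizes or strides. It traces the (frames, height, width) extent of a clip through the stages, assuming that only the three numbered pooling layers and the global pooling layer change that extent.

```python
# Stage order taken from paragraph [0054]; names are hypothetical labels.
STAGES = [
    "rough_feature_extraction",
    "residual_1", "pool_1",
    "non_local",
    "residual_2", "pool_2",
    "residual_3", "pool_3",
    "residual_4",
    "global_pool",
    "fully_connected",
]


def trace_shapes(t, h, w, pool_factor=2):
    """Return (stage, (t, h, w)) pairs for one input clip.

    Assumptions (not from the source): pool_1..pool_3 divide every axis by
    pool_factor, global_pool collapses every axis to 1, and all other
    stages (including the rough unit) keep the extent unchanged.
    """
    trace = []
    for stage in STAGES:
        if stage.startswith("pool_"):
            t = max(1, t // pool_factor)
            h = max(1, h // pool_factor)
            w = max(1, w // pool_factor)
        elif stage == "global_pool":
            t, h, w = 1, 1, 1
        trace.append((stage, (t, h, w)))
    return trace


if __name__ == "__main__":
    # Hypothetical input: 16 frames of 112 x 112 pixels.
    for stage, shape in trace_shapes(16, 112, 112):
        print(f"{stage:26s} {shape}")
```

Note that the Non-Local unit sits after the first pooling layer, so under these assumptions it operates on an already reduced feature map.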

[0055] The structure of the rough feature extraction unit is: input layer → first convolution layer → first batch normalization layer → second convolution layer → second batch normalization layer → pooling layer.
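To see how the layers of the rough feature extraction unit affect the size of the data along one axis, the standard output-size formula for a sliding window, floor((n + 2·padding − kernel) / stride) + 1, can be applied layer by layer. The kernel, stride, and padding values below are hypothetical placeholders chosen for illustration; the text above does not specify them.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Output length along one axis for a convolution or pooling window."""
    return (n + 2 * padding - kernel) // stride + 1


# Layer order from paragraph [0055]. The (kernel, stride, padding) values
# are assumptions for illustration only, not taken from the source.
ROUGH_UNIT = [
    ("conv_1", (3, 1, 1)),
    ("bn_1", None),        # batch normalization does not change the size
    ("conv_2", (3, 1, 1)),
    ("bn_2", None),
    ("pool", (2, 2, 0)),
]


def rough_unit_size(n):
    """Length along one axis after passing through every layer in order."""
    for _name, window in ROUGH_UNIT:
        if window is not None:
            kernel, stride, padding = window
            n = out_size(n, kernel, stride, padding)
    return n


if __name__ == "__main__":
    print(rough_unit_size(112))
```

With these assumed settings the two convolutions preserve the size and only the pooling layer reduces it.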

[0056] The 1st, 2nd, 3rd, and 4th residual subunits are three-dimensional extensions of the residual network, in which the 3×3×3 convolution kernel is decomposed into a 3×1×1 one-dimensional temporal convolution and a 1×3×3 two-dimensional spatial...
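One practical effect of splitting a 3×3×3 kernel into a 3×1×1 temporal part and a 1×3×3 spatial part is a smaller weight count. The sketch below compares the two, under assumptions the text does not state: the spatial convolution is applied first, the intermediate channel count `c_mid` defaults to the output channel count, and biases are ignored. The function names are hypothetical.

```python
def full_3d_params(c_in, c_out, k=3):
    """Weights in a single k x k x k 3D convolution (no bias)."""
    return k * k * k * c_in * c_out


def factorized_params(c_in, c_out, c_mid=None, k=3):
    """Weights in a 1 x k x k spatial conv followed by a k x 1 x 1 temporal conv.

    The ordering and the choice c_mid = c_out are assumptions for
    illustration, not details taken from the source.
    """
    if c_mid is None:
        c_mid = c_out
    spatial = 1 * k * k * c_in * c_mid    # 1 x 3 x 3 kernel
    temporal = k * 1 * 1 * c_mid * c_out  # 3 x 1 x 1 kernel
    return spatial + temporal


if __name__ == "__main__":
    c_in, c_out = 64, 64  # hypothetical channel counts
    print(full_3d_params(c_in, c_out))     # 110592
    print(factorized_params(c_in, c_out))  # 49152
```

For equal input and output channels, the factorized form uses 12 weights per channel pair instead of 27, and it places an extra layer boundary between the spatial and temporal steps.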
