Infrared and visible light video image fusion method based on Surfacelet transform

A video image fusion method, applicable to image communication, image enhancement, image data processing, and related fields. It addresses the problems that video image fusion is difficult to implement and that detection accuracy is easily affected by environmental factors such as lighting.

Embodiment Construction

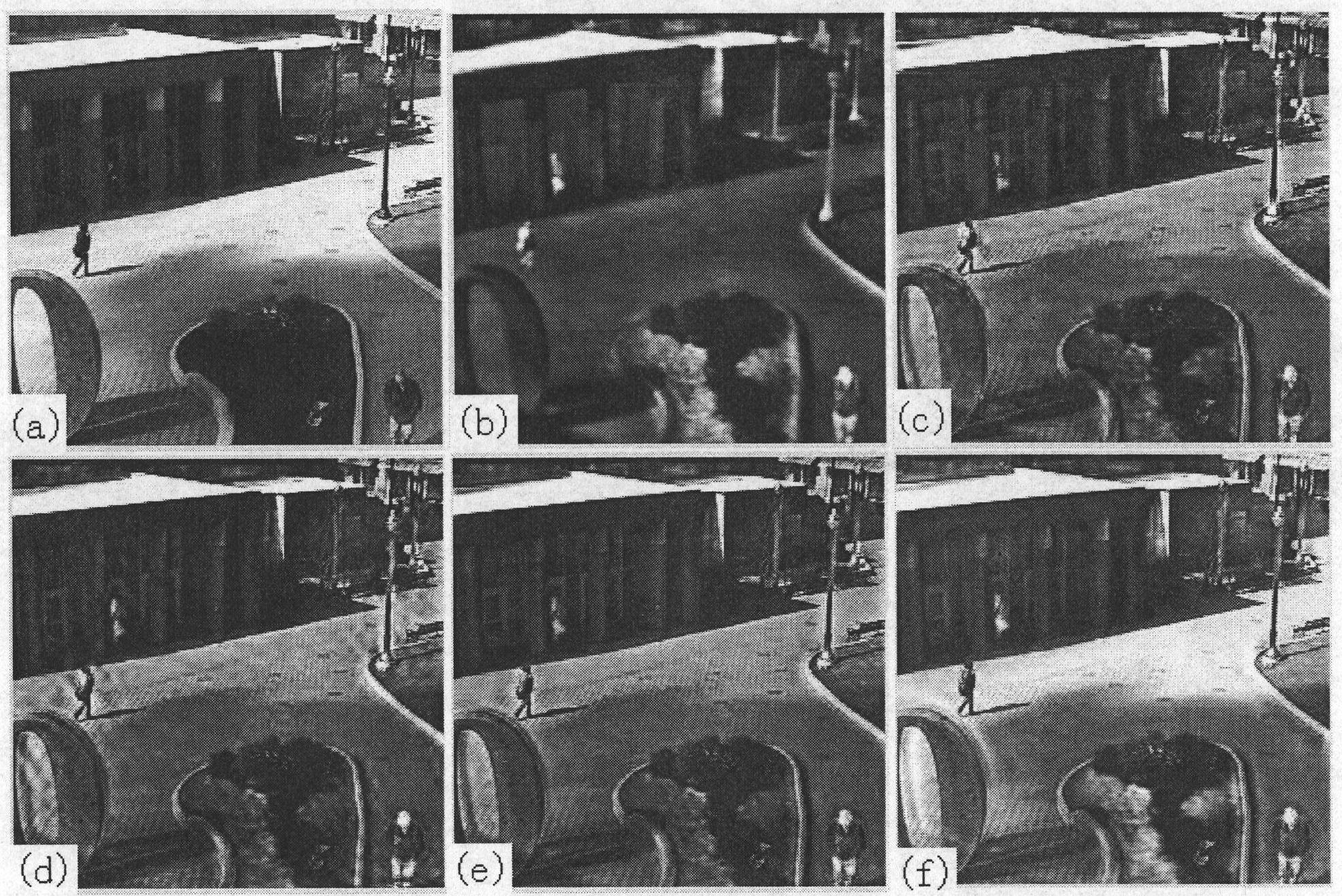

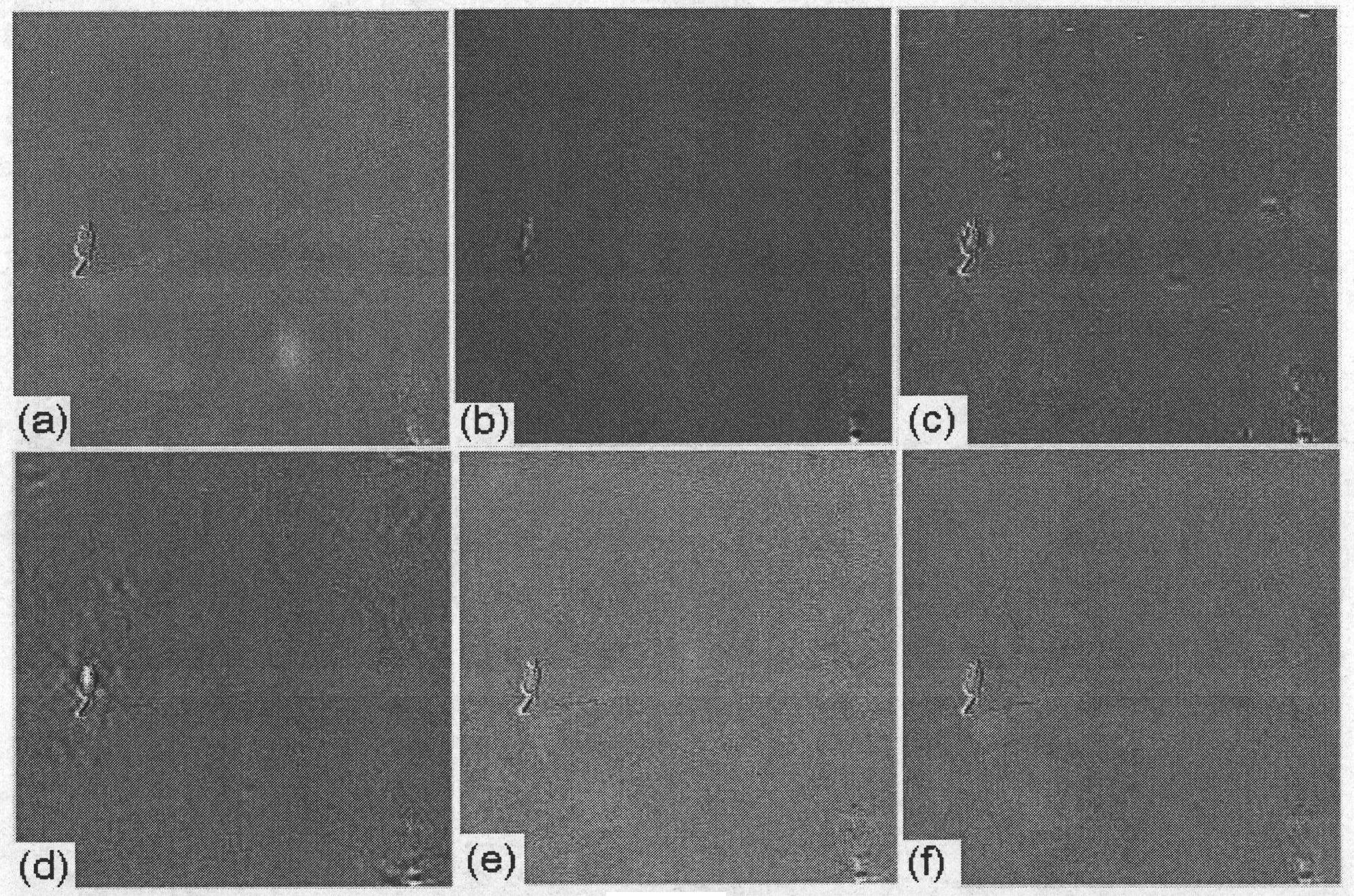

[0032] The present invention will be described in further detail below in conjunction with the accompanying drawings.

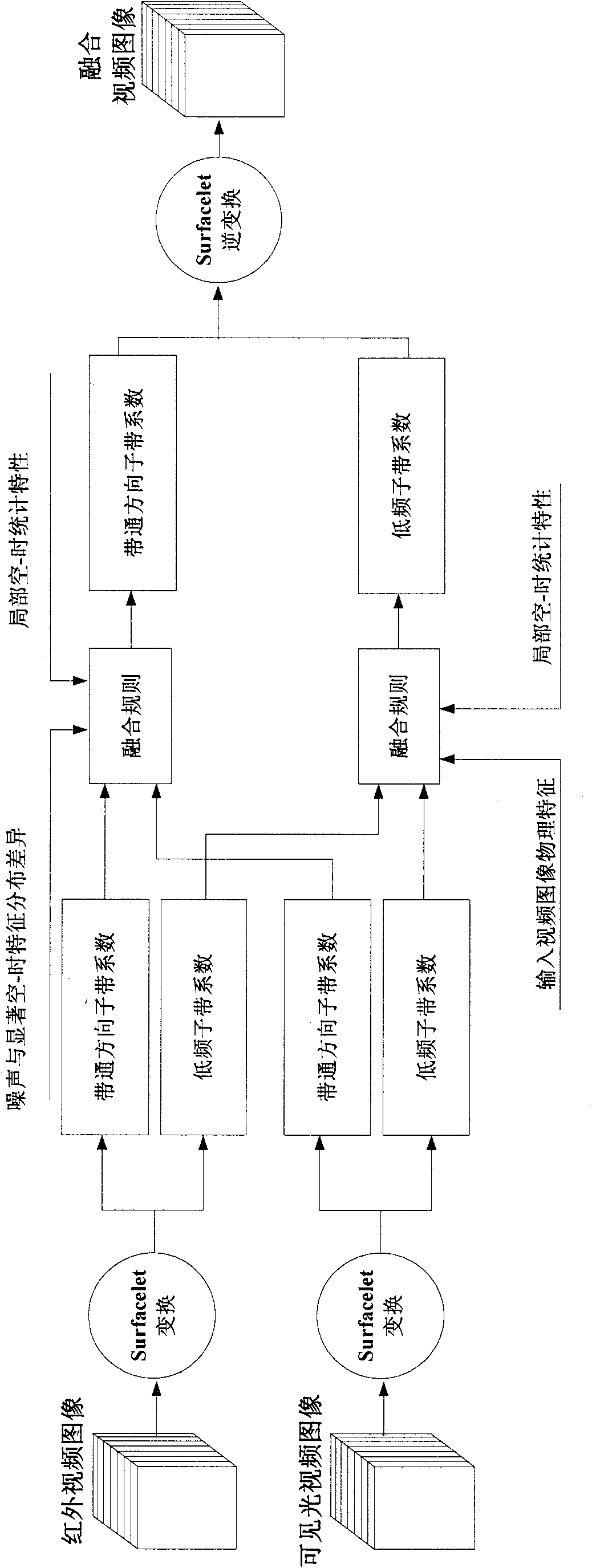

[0033] Referring to Figure 1, the present invention comprises the following steps:

[0034] Step 1: Use the Surfacelet transform to decompose the input video images at multiple scales and in multiple directions.

[0035] Perform the Surfacelet transform on the infrared video image Vir(x, y, t) and the visible light video image Vvi(x, y, t), both of which have undergone strict spatial and temporal registration, to obtain their respective transform coefficients. For each input video these comprise the low-frequency subband coefficients at the coarsest scale S, and the band-pass directional subband coefficients at scale s (s = 1, 2, ..., S), direction (j, k) (where j = 1, 2, 3) and spatio-temporal position (x, y, t). S represents sca...
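The coefficient layout described in Step 1 can be illustrated with a minimal sketch. The code below is not a Surfacelet transform. As a stand-in it uses FFT-domain Gaussian low-pass filters for the scales and three hourglass-style masks (one per dominant frequency axis, loosely mirroring j = 1, 2, 3) for the directions, and it omits the finer direction index k. The function name `toy_decompose`, the `num_scales` parameter, and the cut-off values are assumptions made for illustration and do not come from the described method.

```python
import numpy as np


def toy_decompose(video, num_scales=2):
    """Toy multiscale, three-direction decomposition of a (T, H, W) video.

    Illustrative stand-in only, NOT the Surfacelet transform: scales come
    from Gaussian low-pass filters applied in the 3-D Fourier domain, and
    directions from three masks that select the dominant frequency axis.
    Returns (low, bandpass), where bandpass is keyed by (s, j).
    """
    video = np.asarray(video, dtype=float)
    spectrum = np.fft.fftn(video)

    # Normalised frequency grids along t, y, x (cycles per sample).
    freqs = np.meshgrid(*[np.fft.fftfreq(n) for n in video.shape], indexing="ij")
    radius2 = sum(f ** 2 for f in freqs)

    # Each frequency sample is assigned to the axis with the largest
    # absolute frequency, giving three disjoint hourglass-shaped regions.
    dominant = np.argmax(np.stack([np.abs(f) for f in freqs]), axis=0)

    bandpass = {}
    remaining = spectrum
    for s in range(1, num_scales + 1):
        # Assumed cut-off: halves at every coarser scale (s = 1 is finest).
        sigma = 0.25 / 2 ** (s - 1)
        lowpass = np.exp(-radius2 / (2 * sigma ** 2))
        detail = remaining * (1 - lowpass)   # band-pass content at scale s
        remaining = remaining * lowpass      # passed on to the next scale
        for j in range(3):
            masked = detail * (dominant == j)
            bandpass[(s, j + 1)] = np.fft.ifftn(masked).real

    # What is left after the coarsest scale is the low-frequency subband.
    low = np.fft.ifftn(remaining).real
    return low, bandpass


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    v_ir = rng.random((8, 16, 16))   # placeholder for a registered infrared clip
    v_vi = rng.random((8, 16, 16))   # placeholder for a registered visible clip
    low_ir, bands_ir = toy_decompose(v_ir)
    low_vi, bands_vi = toy_decompose(v_vi)
    # The subbands of each video sum back to that video.
    print(np.allclose(low_ir + sum(bands_ir.values()), v_ir))
```

Applied to both registered videos, the sketch yields for each one a low-frequency subband at the coarsest scale and a set of band-pass subbands indexed by (s, j), all defined over the same (x, y, t) grid as the input.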
