Human body behavior recognition method based on depth video sequence

A human behavior recognition method based on depth video sequences, applied in the field of computer pattern recognition. It addresses the problems of low recognition rates, incorrect representation coefficients, and the inability to accurately express the detailed information of local descriptors, with the effects of an improved recognition rate and strong dictionary expressiveness.

Embodiment Construction

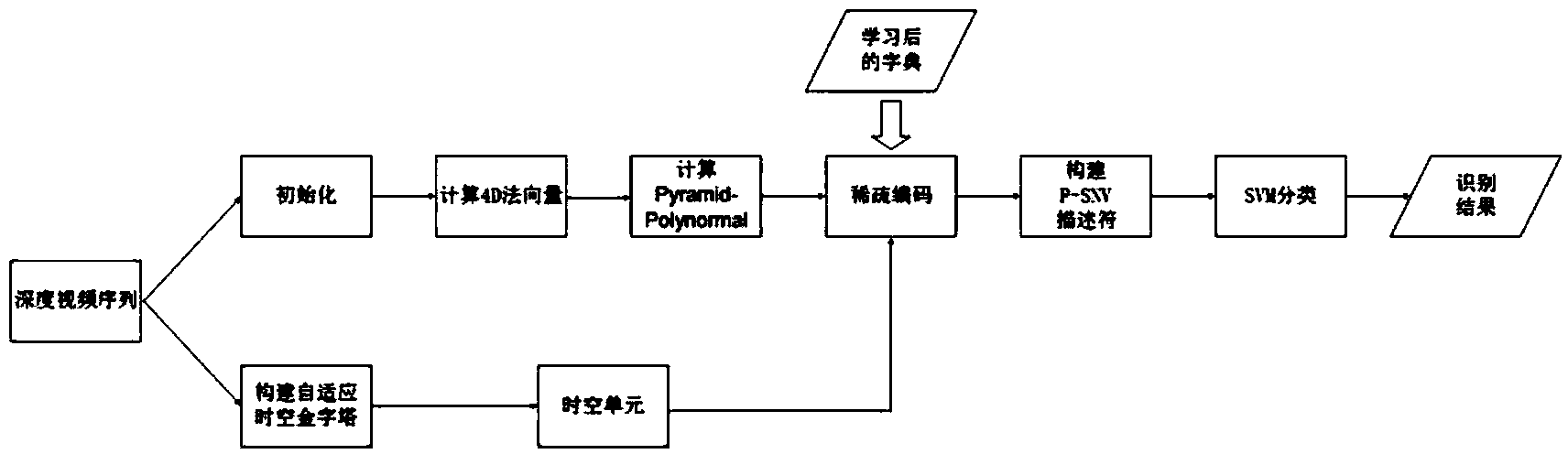

[0011] As shown in Figure 1, this human behavior recognition method based on depth video sequences calculates the four-dimensional normal vectors of all pixels in the video sequence and builds a spatiotemporal pyramid model of the behavior sequence over different temporal and spatial domains to extract the low-level features of pixels at different layers. A group sparse dictionary is learned from the low-level features and used to obtain their sparse codes; the codes are then integrated by spatial average pooling and temporal max pooling, yielding high-level features that serve as the final descriptor of the behavior sequence.
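The feature pipeline in [0011] can be sketched as follows. This is a minimal NumPy sketch under stated assumptions, not the patented implementation: it assumes the common formulation in which the depth video is treated as a hypersurface z = f(x, y, t), so the normal at each pixel is (∂z/∂x, ∂z/∂y, ∂z/∂t, -1) scaled to unit length. The pyramid layout (1×1 and 2×2 spatial grids, 1/2/4 temporal segments) and the function names `normals_4d` and `pyramid_pool` are hypothetical; the per-pixel `codes` array stands in for the sparse codes of the low-level features.

```python
import numpy as np


def normals_4d(depth):
    """Per-pixel unit 4D normals for a depth video of shape (T, H, W).

    Assumes depth is a hypersurface z = f(x, y, t); the unnormalized normal
    of f(x, y, t) - z = 0 is (df/dx, df/dy, df/dt, -1).
    """
    depth = np.asarray(depth, dtype=np.float64)
    # np.gradient returns one derivative per axis, in axis order: t, y, x
    dt, dy, dx = np.gradient(depth)
    n = np.stack([dx, dy, dt, -np.ones_like(depth)], axis=-1)
    # Norm is always >= 1 because the last component is -1, so no division by zero
    return n / np.linalg.norm(n, axis=-1, keepdims=True)


def pyramid_pool(codes, spatial_levels=(1, 2), temporal_levels=(1, 2, 4)):
    """Pool per-pixel codes of shape (T, H, W, K) into one descriptor.

    Hypothetical pyramid: for every spatial grid s x s and every temporal
    split into t segments, each cell is average-pooled over space (per frame)
    and then max-pooled over time; all cell vectors are concatenated.
    """
    T, H, W, K = codes.shape
    if T < max(temporal_levels) or min(H, W) < max(spatial_levels):
        raise ValueError("sequence too small for the requested pyramid levels")
    feats = []
    for s in spatial_levels:
        rows = np.array_split(np.arange(H), s)
        cols = np.array_split(np.arange(W), s)
        for t in temporal_levels:
            for seg in np.array_split(np.arange(T), t):
                for r in rows:
                    for c in cols:
                        cell = codes[seg][:, r][:, :, c]      # (len(seg), len(r), len(c), K)
                        per_frame = cell.mean(axis=(1, 2))    # spatial average pool -> (len(seg), K)
                        feats.append(per_frame.max(axis=0))   # temporal max pool -> (K,)
    return np.concatenate(feats)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    depth = rng.random((8, 16, 16))      # synthetic depth video
    normals = normals_4d(depth)          # shape (8, 16, 16, 4)
    descriptor = pyramid_pool(np.abs(normals))  # placeholder codes with K = 4
    print(normals.shape, descriptor.shape)
```

Each pyramid cell contributes one K-dimensional vector, so with the default levels the descriptor has (1 + 4) × (1 + 2 + 4) × K = 35K entries. Averaging over space smooths per-frame noise within a cell, while max pooling over time keeps the strongest response in each temporal segment.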

[0012] The present invention constructs a spatiotemporal pyramid model that specifically retains the information of local descriptors across multiple spatiotemporal layers. At the same time, because a group sparse dictionary is used to encode the low-level features, the interference of different categories containing similar ...
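The group sparse encoding step can be illustrated with a group-lasso solver. This is a hedged sketch: the source does not state the objective, so it assumes min_a ½‖x - D a‖² + λ Σ_g ‖a_g‖₂, where the dictionary D concatenates per-class sub-dictionaries and each group g indexes one sub-dictionary. The solver (ISTA with block soft-thresholding), λ, the iteration count, and the names `group_soft_threshold` and `group_sparse_code` are illustrative assumptions; learning the dictionary itself is not shown.

```python
import numpy as np


def group_soft_threshold(a, groups, thresh):
    """Proximal operator of thresh * sum_g ||a_g||_2 (block soft-thresholding)."""
    out = np.zeros_like(a)
    for g in groups:
        norm = np.linalg.norm(a[g])
        # Shrink the whole group toward zero; groups with small norm become exactly zero
        if norm > thresh:
            out[g] = (1.0 - thresh / norm) * a[g]
    return out


def group_sparse_code(x, D, groups, lam=0.1, n_iter=200):
    """Assumed objective: min_a 0.5*||x - D a||^2 + lam * sum_g ||a_g||_2, solved by ISTA.

    x: (d,) low-level feature; D: (d, K) dictionary whose columns are
    partitioned by `groups` (slices or index arrays, e.g. one per class).
    """
    # Lipschitz constant of the gradient of the quadratic term: largest singular value squared
    L = np.linalg.norm(D, 2) ** 2
    a = np.zeros(D.shape[1])
    for _ in range(n_iter):
        grad = D.T @ (D @ a - x)  # gradient of 0.5*||x - D a||^2
        a = group_soft_threshold(a - grad / L, groups, lam / L)
    return a


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    D = rng.standard_normal((20, 12))
    D /= np.linalg.norm(D, axis=0)                      # unit-norm atoms
    groups = [slice(0, 4), slice(4, 8), slice(8, 12)]   # three hypothetical class sub-dictionaries
    x = D[:, 0:4] @ np.array([1.0, -0.5, 0.8, 0.3])     # feature built from group 0 only
    a = group_sparse_code(x, D, groups, lam=0.05)
    print([round(float(np.linalg.norm(a[g])), 3) for g in groups])
```

Because block soft-thresholding zeroes whole groups at once, a feature tends to be explained by the atoms of a single sub-dictionary rather than scattered across similar classes, which appears to be the interference-reduction effect that [0012] begins to describe.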
