A method, system and device for dynamic background differential detection based on spatiotemporal classification

A dynamic-background detection technique, applied in image analysis, instruments, computing and related fields. It addresses the problems of reduced detection accuracy and degraded detection results, and achieves a large sampling range, enhanced capability, and strongly representative samples.

Embodiment 1

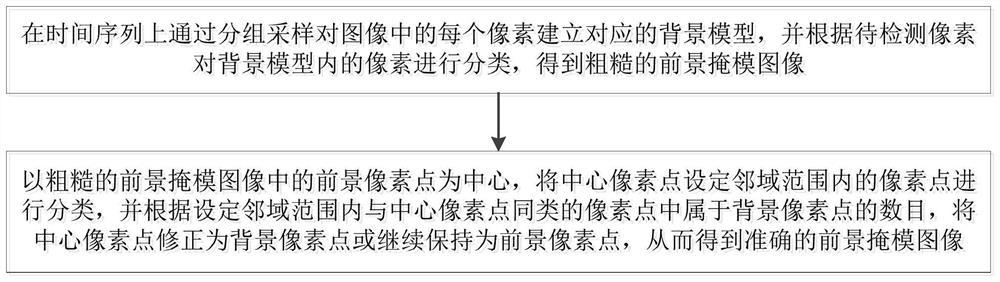

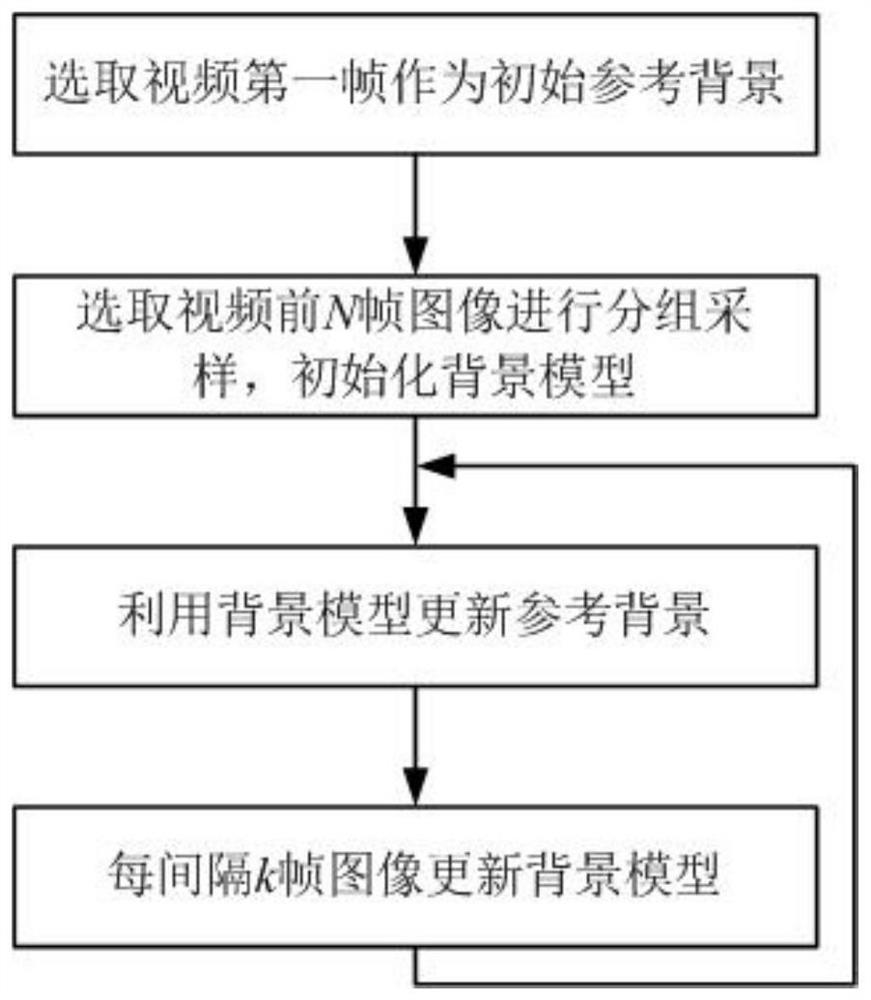

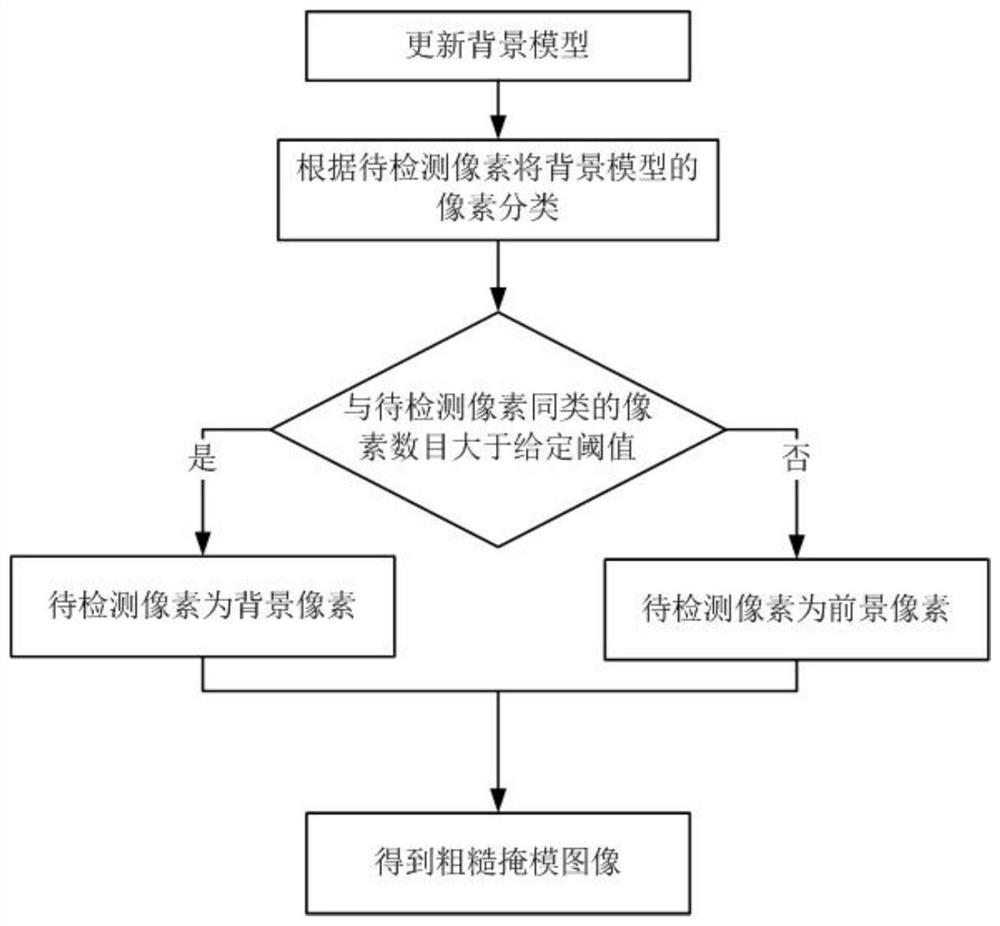

[0096] The present invention proposes a new dynamic background difference detection method based on spatio-temporal classification. When establishing the background model, the method uses group sampling, whereas the prior art initializes the background model directly from consecutive video frames. The method can therefore obtain more representative pixel samples and represent a dynamic background better. In the spatial classification step, the present invention distinguishes the categories of the neighboring pixels and uses only similar pixels to further determine whether the central pixel is a real foreground pixel. The prior art, by contrast, uses all neighboring pixels to describe the background pixel; if some of those neighbors are foreground pixels, they are wrongly described as background pixels, which degrades the detection result. The method adopted in the present invention can therefore effectively improve the accuracy of mo...
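The two ideas in the paragraph above, group sampling for the background model and a spatial check restricted to similar neighbors, can be illustrated with a minimal sketch. It is not the patented procedure: the excerpt gives no formulas, so the grouping rule (one random frame per contiguous group), the matching test, the 8-neighborhood, the majority vote, and every name and threshold (`group_sample`, `similar_tol`, `radius`, `min_matches`) are assumptions made for illustration. Frames are plain nested lists of grayscale values.

```python
import random


def group_sample(frames, num_groups, rng=None):
    """Pick one frame from each of num_groups contiguous groups (assumed rule)."""
    if rng is None:
        rng = random.Random(0)
    if num_groups <= 0 or num_groups > len(frames):
        raise ValueError("num_groups must be between 1 and len(frames)")
    size = len(frames) // num_groups
    samples = []
    for g in range(num_groups):
        start = g * size
        # The last group absorbs any leftover frames.
        end = len(frames) if g == num_groups - 1 else start + size
        samples.append(frames[rng.randrange(start, end)])
    return samples


def build_background_model(frames, num_groups, rng=None):
    """Return model[y][x]: the list of sampled gray values for that pixel."""
    picked = group_sample(frames, num_groups, rng)
    height, width = len(picked[0]), len(picked[0][0])
    return [[[f[y][x] for f in picked] for x in range(width)]
            for y in range(height)]


def matches_background(value, samples, radius=20, min_matches=2):
    """Temporal test (assumed): enough samples lie within `radius` of value."""
    return sum(abs(value - s) <= radius for s in samples) >= min_matches


def is_true_foreground(frame, model, y, x,
                       similar_tol=15, radius=20, min_matches=2):
    """Temporal test first, then a spatial check using only similar neighbors."""
    center = frame[y][x]
    if matches_background(center, model[y][x], radius, min_matches):
        return False  # already explained by this pixel's own samples
    height, width = len(frame), len(frame[0])
    # Spatial classification (assumed): keep only the 8-neighbors whose
    # current value is close to the center value.
    similar = []
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            ny, nx = y + dy, x + dx
            if (dy or dx) and 0 <= ny < height and 0 <= nx < width:
                if abs(frame[ny][nx] - center) <= similar_tol:
                    similar.append((ny, nx))
    if not similar:
        return True  # nothing nearby can explain the pixel as background
    # Assumed vote: the center is background if at least half of the similar
    # neighbors' background samples also match the center value.
    votes = sum(matches_background(center, model[ny][nx], radius, min_matches)
                for ny, nx in similar)
    return votes * 2 < len(similar)
```

Under these assumptions, sampling one frame per group spreads the samples over the whole frame span instead of the first few consecutive frames, and dissimilar neighbors never vote, so a neighboring foreground pixel of a different category cannot pull the central pixel toward the background class.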
