A Visual Method for Interpreting Convolutional Neural Networks

A visualization method for convolutional neural networks, applied in the fields of machine learning and visualization, which addresses the problems that existing interpretation methods are not universal, are complex, and are limited in their scope of use.


Embodiment Construction

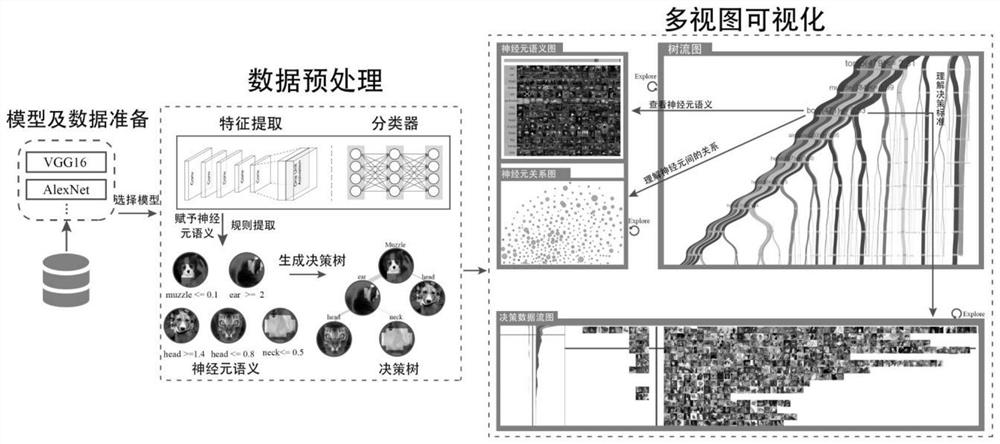

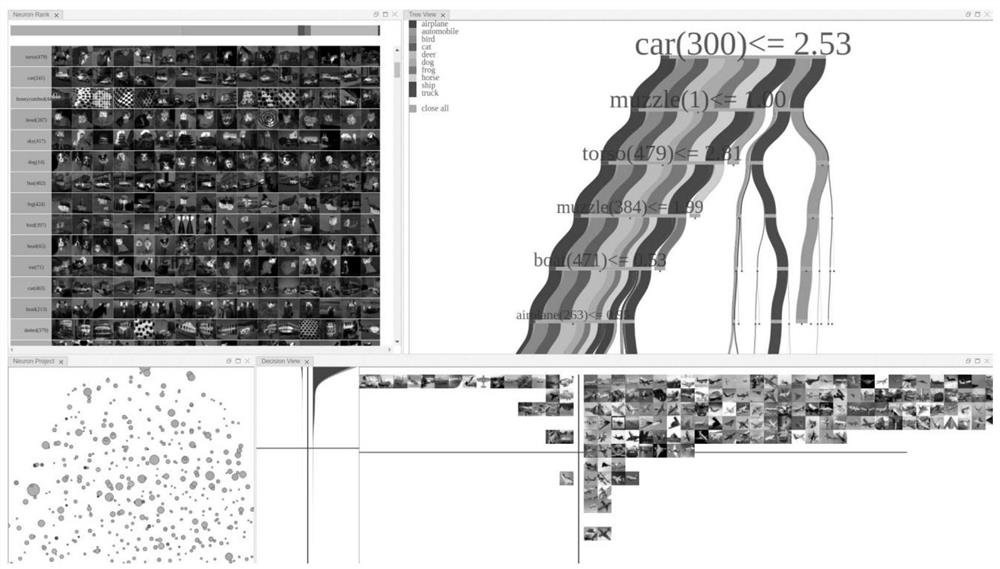

[0047] The processing flow of the proposed method consists of three main steps: model and data preparation, data preprocessing, and multi-view visualization.

[0048] 1. Model and data preparation

[0049] The prepared model and data are the inputs to this visualization method. The model can be AlexNet, a more complex network such as VGG16, or a similar CNN; the data is the data set used to train the model. Such models and data sets are available from open-source libraries such as Caffe and TensorFlow. The model and its training data are then used in the data preprocessing stage.
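To make the hand-off to preprocessing concrete, the sketch below assumes the simplest possible setting: the model is a plain Python sequence of named layers, and preprocessing needs every layer's output for every training sample. The toy layers (`dense`, `relu`), the helper `record_activations`, and the two-sample data set are hypothetical stand-ins for AlexNet or VGG16 and their training data. With a real framework, the same per-layer outputs would come from its own mechanism, such as forward hooks (`register_forward_hook`) in PyTorch or a Keras model whose outputs are the intermediate layers.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Vector = List[float]
# A layer is (name, forward function); hypothetical stand-in for a real CNN layer.
Layer = Tuple[str, Callable[[Vector], Vector]]


def dense(weights: List[Vector], bias: Vector) -> Callable[[Vector], Vector]:
    """Fully connected layer: out[j] = sum_i weights[j][i] * x[i] + bias[j]."""
    def forward(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return forward


def relu(x: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in x]


def record_activations(model: Sequence[Layer], dataset: Sequence[Vector]) -> Dict[str, List[Vector]]:
    """Run every sample through the model and store each named layer's output.

    Returns a mapping layer name -> list of activation vectors, one per sample,
    in the same order as the dataset.
    """
    records: Dict[str, List[Vector]] = {name: [] for name, _ in model}
    for sample in dataset:
        x = sample
        for name, layer in model:
            x = layer(x)
            records[name].append(x)
    return records


# Hypothetical two-layer toy model and data (not AlexNet/VGG16 themselves).
toy_model: List[Layer] = [
    ("fc1", dense([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.5])),
    ("relu1", relu),
]
toy_data: List[Vector] = [[2.0, 1.0], [0.0, 3.0]]

acts = record_activations(toy_model, toy_data)
print(acts["relu1"])  # → [[1.0, 1.0], [0.0, 1.0]]
```

Keeping activations keyed by layer name lets later preprocessing steps analyse any layer without re-running the model.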

[0050] 2. Data preprocessing

[0051] Preprocessing produces the data that the visualization consumes. It mainly consists of three steps: judgment condition extraction, semantic generation, and decision tree generation.
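This excerpt names semantic generation but does not define it. One common interpretation, assumed here and not confirmed by the source, is to give each neuron the class label whose samples activate it most strongly on average. The function `neuron_semantics` and the cat/dog activations below are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence


def neuron_semantics(activations: Sequence[Sequence[float]], labels: Sequence[str]) -> List[str]:
    """Assumed semantic rule: label each neuron with the class of highest mean activation.

    activations[s][k] is neuron k's activation on sample s; labels[s] is that
    sample's class. Assumes at least one sample. Ties go to the
    lexicographically smallest label.
    """
    num_neurons = len(activations[0])
    sums: Dict[str, List[float]] = defaultdict(lambda: [0.0] * num_neurons)
    counts: Dict[str, int] = defaultdict(int)
    for row, label in zip(activations, labels):
        counts[label] += 1
        for k, value in enumerate(row):
            sums[label][k] += value
    semantics: List[str] = []
    for k in range(num_neurons):
        means = {label: sums[label][k] / counts[label] for label in counts}
        # max() keeps the first maximal item, so sorting makes ties deterministic.
        semantics.append(max(sorted(means), key=lambda lab: means[lab]))
    return semantics


# Hypothetical activations of two neurons on four labelled samples.
acts = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.7], [0.2, 0.9]]
labels = ["cat", "cat", "dog", "dog"]
print(neuron_semantics(acts, labels))  # → ['cat', 'dog']
```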

[0052] (1) Judgment condition extraction:

[0053] An appropriate form of judgment condition is chosen according to the complexity of the model. The form can be roughly divide...
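The excerpt is cut off before it lists the condition forms, so the sketch below assumes the simplest one: a threshold on a single neuron's activation, "neuron k > t", chosen to minimise the weighted Gini impurity of the resulting split. The functions `gini` and `best_threshold_condition`, the Gini criterion, and the example data are illustrative assumptions, not the procedure stated in the patent.

```python
from collections import Counter
from typing import Optional, Sequence, Tuple


def gini(labels: Sequence[str]) -> float:
    """Gini impurity 1 - sum(p_c^2) of a list of class labels (0.0 if empty)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_threshold_condition(
    activations: Sequence[Sequence[float]], labels: Sequence[str]
) -> Optional[Tuple[int, float, float]]:
    """Assumed condition form: find 'neuron k > t' with the lowest weighted Gini.

    Candidate thresholds are midpoints between consecutive distinct activation
    values of each neuron. Returns (k, t, impurity), or None if no neuron
    takes two distinct values.
    """
    n = len(labels)
    best: Optional[Tuple[int, float, float]] = None
    for k in range(len(activations[0])):
        values = sorted({row[k] for row in activations})
        for lo, hi in zip(values, values[1:]):
            t = (lo + hi) / 2
            left = [lab for row, lab in zip(activations, labels) if row[k] <= t]
            right = [lab for row, lab in zip(activations, labels) if row[k] > t]
            impurity = (len(left) * gini(left) + len(right) * gini(right)) / n
            if best is None or impurity < best[2]:
                best = (k, t, impurity)
    return best


# Hypothetical activations of two neurons on four labelled samples.
acts = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.7], [0.2, 0.9]]
labels = ["cat", "cat", "dog", "dog"]
condition = best_threshold_condition(acts, labels)
if condition is not None:
    k, t, impurity = condition
    print(f"neuron {k} > {t:.2f} (weighted Gini {impurity:.2f})")  # → neuron 0 > 0.50 (weighted Gini 0.00)
```

Applying the same search recursively to each side of a split is the usual way a decision tree is grown (as in CART); this excerpt does not say whether the method's decision tree generation works that way.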
