Food volume estimation method and device

A food volume estimation technology applied in the field of deep learning. It addresses problems of existing approaches, such as heavy demand for computing resources, high requirements on user input, and poor suitability, and it avoids complex processing.

Examples

Embodiment 1

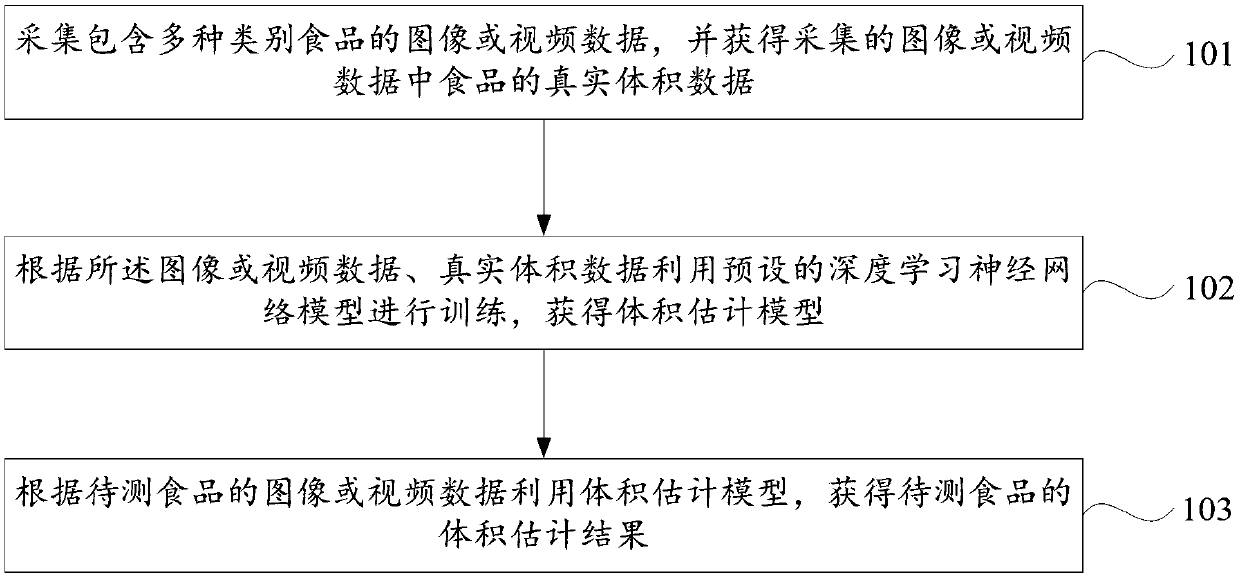

[0061] Figure 1 is a schematic flowchart of the food volume estimation method provided by the embodiment of the present invention. As shown in Figure 1, the method includes the following steps:

[0062] 101. Collect image or video data containing multiple categories of food, and obtain real volume data of the food in the collected image or video data.

[0063] Specifically, image or video data containing various types of food are collected under a variety of backgrounds, scenes, and shooting angles. The backgrounds include, but are not limited to, simple backgrounds (such as a desktop or a solid white background) and complex backgrounds. The scenes include ordinary indoor and outdoor scenes, and the shooting angles include at least a front view and an oblique view with a certain offset. The food image preferably includes a relatively st...
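The collection requirements in [0062] and [0063] can be illustrated with a minimal sketch of a labeled sample record and a coverage check. Everything named here (`FoodSample`, `missing_views`, the file names, and the millilitre unit) is a hypothetical illustration and does not come from the patent text.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FoodSample:
    """One labeled sample: a media file plus its measured (real) volume."""
    media_path: str   # image or video file (hypothetical path)
    category: str     # food category label
    background: str   # "simple" or "complex"
    scene: str        # "indoor" or "outdoor"
    view: str         # "front" or "oblique"
    volume_ml: float  # real volume; the unit is an assumption

# Paragraph [0063] asks for at least a front view and an oblique view.
REQUIRED_VIEWS = {"front", "oblique"}

def missing_views(samples):
    """Map each category to the required views it still lacks."""
    seen = {}
    for s in samples:
        seen.setdefault(s.category, set()).add(s.view)
    return {c: REQUIRED_VIEWS - v for c, v in seen.items() if REQUIRED_VIEWS - v}

samples = [
    FoodSample("rice_001.jpg", "rice", "simple", "indoor", "front", 250.0),
    FoodSample("rice_002.jpg", "rice", "complex", "outdoor", "oblique", 310.0),
    FoodSample("soup_001.jpg", "soup", "simple", "indoor", "front", 400.0),
]
gaps = missing_views(samples)
print(gaps)  # {'soup': {'oblique'}}
```

A check of this kind could be run before training to confirm that each food category has been photographed from every required angle.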

Embodiment 2

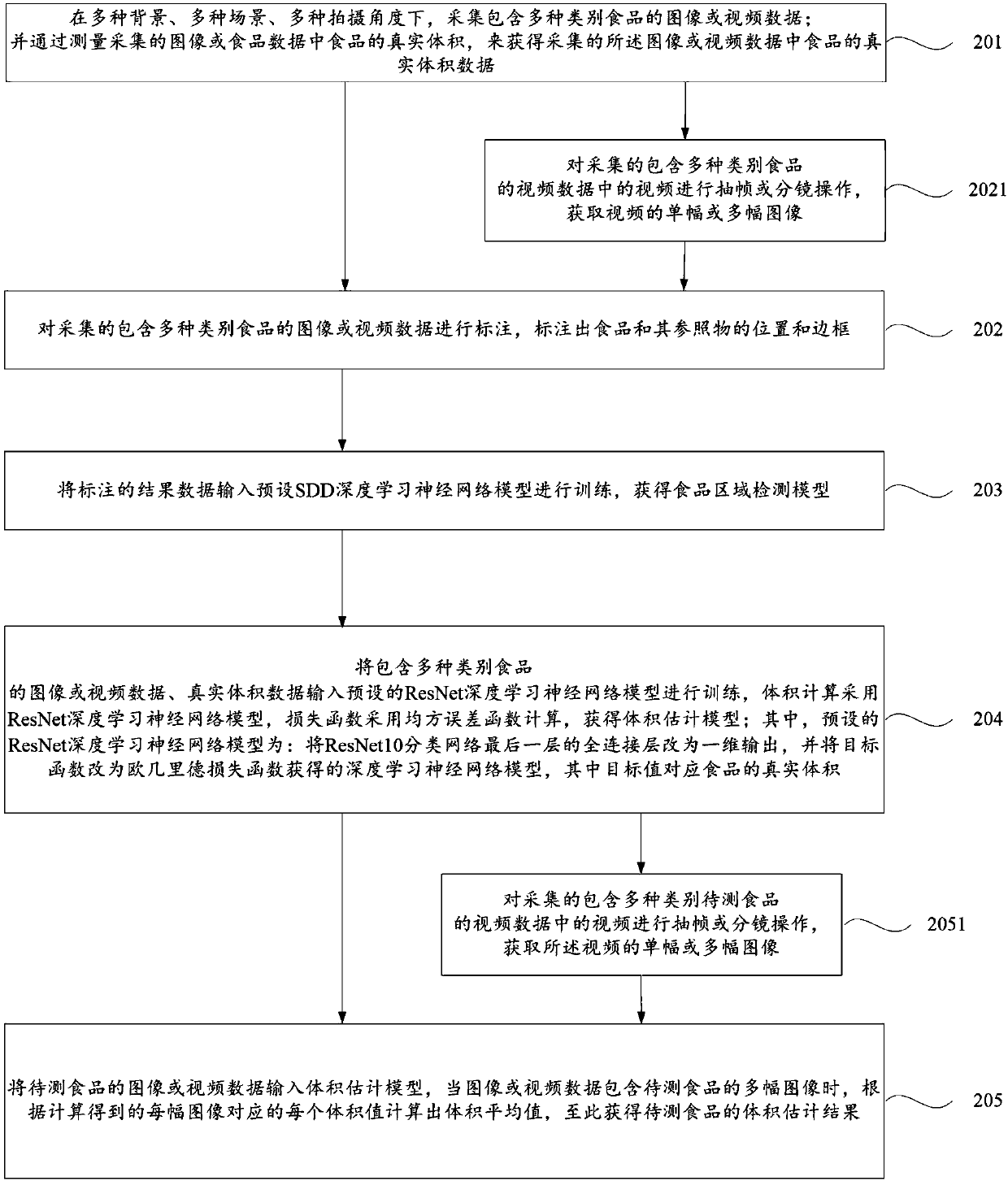

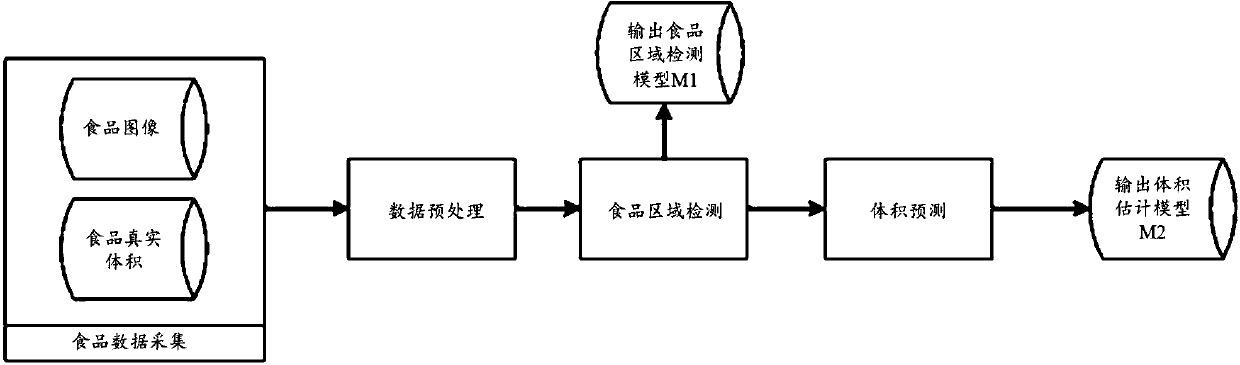

[0072] Figure 2 is a schematic flowchart of the food volume estimation method provided by the embodiment of the present invention. Figure 3 is a schematic flowchart of the first stage of the method, and Figure 4 is a schematic flowchart of the second stage. As shown in Figures 2-4, the method can be divided into two stages: the first stage, in which the food area detection model M1 and the volume estimation model M2 are trained and obtained; and the second stage, in which the volume of the food to be tested is estimated.

[0073] Specifically, the first stage includes the following steps:

[0074] 201. Collect image or video data containing various types of food under various backgrounds, scenes, and shooting angles; an...
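The two-stage split described in [0072] can be sketched roughly as follows for the second (estimation) stage. This sketch assumes M1 returns bounding boxes and M2 is applied to each cropped region. The text available here does not confirm that interface, and `toy_m1` and `toy_m2` are toy stand-ins, not trained models.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (top, left, bottom, right); assumed layout
Image = List[List[float]]        # toy grayscale image as nested lists

def crop(image: Image, box: Box) -> Image:
    """Cut the rectangular region described by box out of the image."""
    top, left, bottom, right = box
    return [row[left:right] for row in image[top:bottom]]

def estimate_volumes(
    image: Image,
    detect_regions: Callable[[Image], Sequence[Box]],  # plays the role of M1
    estimate_volume: Callable[[Image], float],         # plays the role of M2
) -> List[float]:
    """Run the detector, then the volume estimator on each detected region."""
    return [estimate_volume(crop(image, box)) for box in detect_regions(image)]

def toy_m1(image: Image) -> List[Box]:
    """Stand-in detector: one box around all non-zero pixels, if any."""
    rows = [r for r, row in enumerate(image) if any(row)]
    cols = [c for row in image for c, v in enumerate(row) if v]
    if not rows:
        return []
    return [(min(rows), min(cols), max(rows) + 1, max(cols) + 1)]

def toy_m2(region: Image) -> float:
    """Stand-in estimator: pretend each pixel in the region is 10 volume units."""
    return 10.0 * sum(len(row) for row in region)

image = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
volumes = estimate_volumes(image, toy_m1, toy_m2)
print(volumes)  # [40.0]
```

Passing the two models in as callables keeps the estimation stage independent of how M1 and M2 were trained in the first stage.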

Embodiment 3

[0087] Figure 5 is a structural schematic diagram of the food volume estimation device provided by the embodiment of the present invention. As shown in Figure 5, the device includes:

[0088] A collection module 31, configured to collect image or video data containing multiple categories of food;

[0089] An acquisition module 32, configured to acquire the real volume data of the food in the collected image or video data;

[0090] A model training module 33, configured to train a preset deep learning neural network model on the collected image or video data and the real volume data to obtain a volume estimation model. Preferably, the preset deep learning neural network model is a ResNet, VGG, or DenseNet model. Preferab...
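The module structure in [0088] to [0090] can be pictured as three pluggable parts wired together. The sketch below is only an illustration: `FoodVolumeDevice` and its parameter names are hypothetical, and the trainer is a per-category mean used as a plainly named stand-in for the ResNet, VGG, or DenseNet training that the text describes.

```python
from statistics import mean
from typing import Callable, Dict, List, Tuple

Sample = Tuple[str, str]            # (media_path, category); assumed shape
Model = Callable[[Sample], float]   # maps a sample to an estimated volume

class FoodVolumeDevice:
    """Wires together stand-ins for modules 31, 32, and 33."""

    def __init__(
        self,
        collect: Callable[[], List[Sample]],               # collection module 31
        acquire_volume: Callable[[Sample], float],         # acquisition module 32
        train: Callable[[List[Sample], List[float]], Model],  # training module 33
    ) -> None:
        self.collect = collect
        self.acquire_volume = acquire_volume
        self.train = train

    def build_model(self) -> Model:
        """Collect data, look up real volumes, and train a volume estimator."""
        samples = self.collect()
        volumes = [self.acquire_volume(s) for s in samples]
        return self.train(samples, volumes)

def mean_per_category_trainer(samples: List[Sample], volumes: List[float]) -> Model:
    """Placeholder trainer (NOT a neural network): average volume per category."""
    by_category: Dict[str, List[float]] = {}
    for (_, category), volume in zip(samples, volumes):
        by_category.setdefault(category, []).append(volume)
    table = {c: mean(vs) for c, vs in by_category.items()}
    return lambda sample: table[sample[1]]

# Hypothetical measured volumes keyed by file name.
measured = {"rice_001.jpg": 250.0, "rice_002.jpg": 310.0, "soup_001.jpg": 400.0}
device = FoodVolumeDevice(
    collect=lambda: [(path, path.split("_")[0]) for path in measured],
    acquire_volume=lambda sample: measured[sample[0]],
    train=mean_per_category_trainer,
)
model = device.build_model()
print(model(("new_photo.jpg", "rice")))  # 280.0
```

In a real system the `train` argument would be replaced by a routine that fits one of the named network architectures; the surrounding wiring would stay the same.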
