Method for detecting three-dimensional object based on point fusion network

A technology for detecting 3D objects with a fusion network, applied in 3D object recognition, instruments, character and pattern recognition, etc.; it addresses problems such as existing methods not being universal.

Embodiment Construction

[0033] It should be noted that, in the case of no conflict, the embodiments in the present application and the features in the embodiments can be combined with each other. The present invention will be further described in detail below in conjunction with the drawings and specific embodiments.

[0034] Figure 1 is a system framework diagram of the 3D object detection method based on a point fusion network in the present invention. The method mainly comprises a point cloud network, a fusion network, and a dense fusion prediction scoring function.

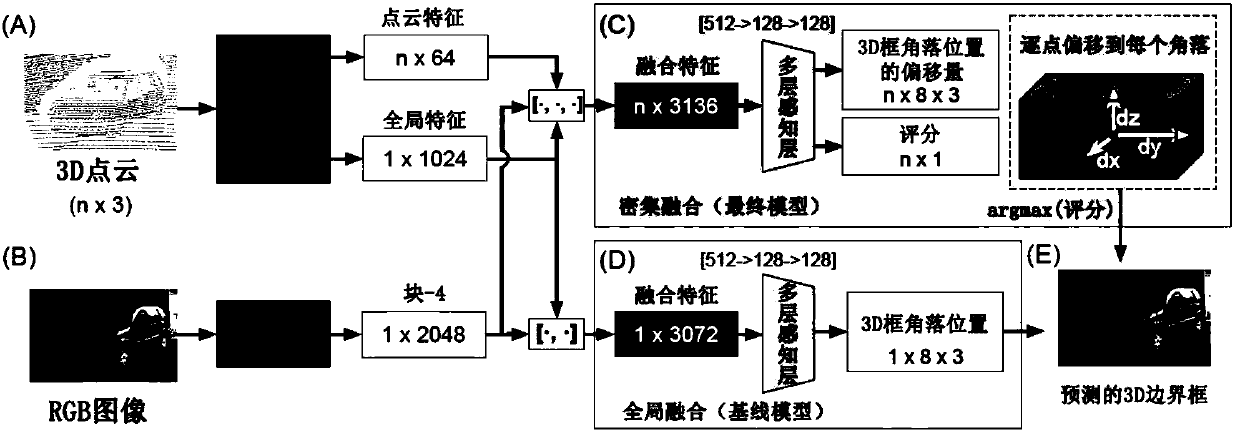

[0035] Point fusion has three main components: a point fusion network variant that extracts point cloud features, a convolutional neural network (CNN) that extracts image appearance features, and a fusion network that combines the two sets of features and outputs a 3D bounding box.

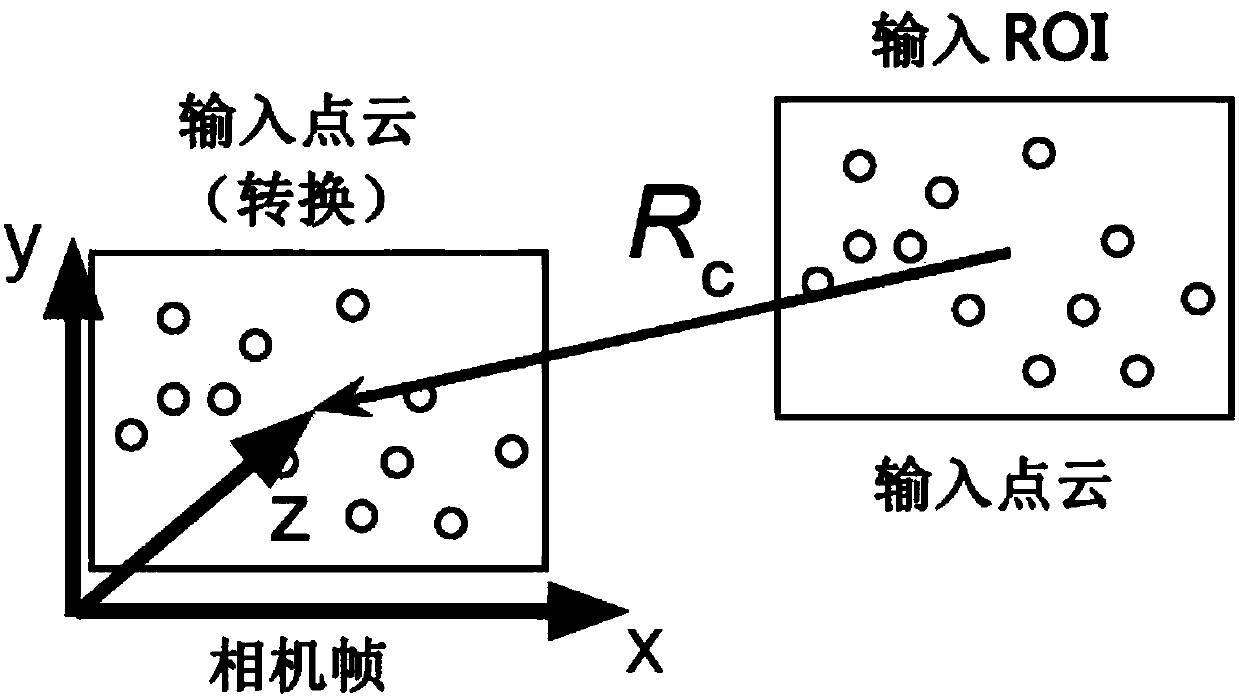
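The data flow described in [0035] can be sketched as follows. This is only an illustrative sketch under stated assumptions, not the patented implementation: the function names (`linear`, `fuse_and_predict`), the feature sizes, the use of plain concatenation followed by a single linear layer, and the choice to represent the 3D bounding box as eight corner points are all hypothetical, and the weights are random rather than trained.

```python
import random


def linear(vec, weights, bias):
    """Apply one fully connected layer: weights @ vec + bias."""
    return [sum(w * x for w, x in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


def fuse_and_predict(point_feat, image_feat, weights, bias):
    """Concatenate the two feature vectors and regress a 3D box.

    Assumption: the box is encoded as 8 corners x 3 coordinates = 24 values.
    """
    fused = point_feat + image_feat          # list concatenation
    flat = linear(fused, weights, bias)      # 24 regression outputs
    return [flat[i:i + 3] for i in range(0, len(flat), 3)]


rng = random.Random(0)
point_feat = [rng.random() for _ in range(8)]   # stand-in for point cloud features
image_feat = [rng.random() for _ in range(6)]   # stand-in for CNN appearance features
weights = [[rng.uniform(-0.1, 0.1) for _ in range(14)] for _ in range(24)]
bias = [0.0] * 24

corners = fuse_and_predict(point_feat, image_feat, weights, bias)
print(len(corners), len(corners[0]))
```

In a real system the two feature vectors would come from the trained point cloud and image branches, and the regression head would typically have several layers.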

[0036] The point fusion network first uses a symmetric function (max pooling) to achieve the invariance processing of unordered 3D point clouds; the model ingests the original p...
