Deep learning-based human body motion recognition method of multi-channel image feature fusion

A human action recognition technique based on image features, applicable to character and pattern recognition, instruments, biological neural network models, and related fields. It addresses the problems of small inter-class differences, large intra-class differences, and the resulting inability to achieve high-precision human action recognition.

Embodiment

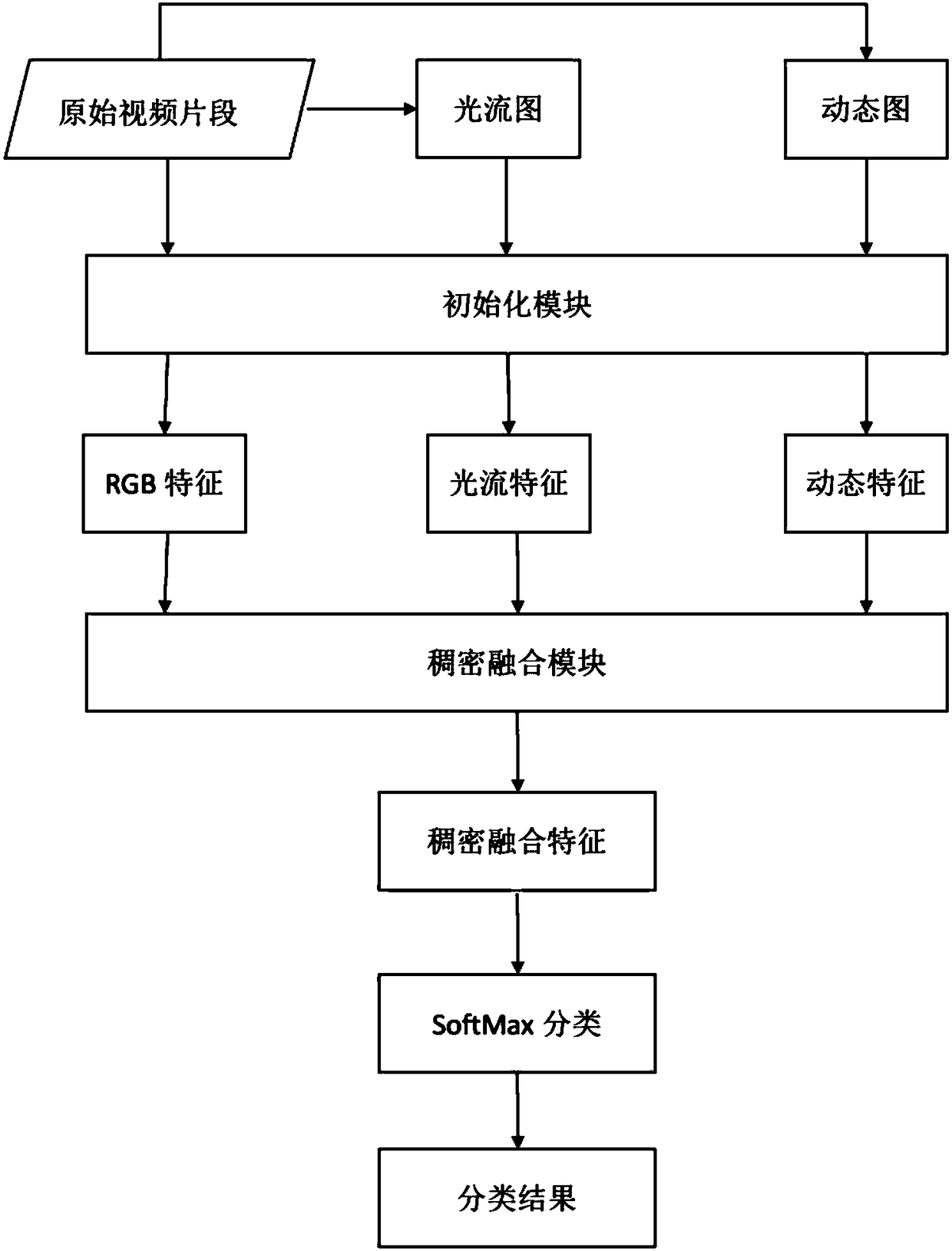

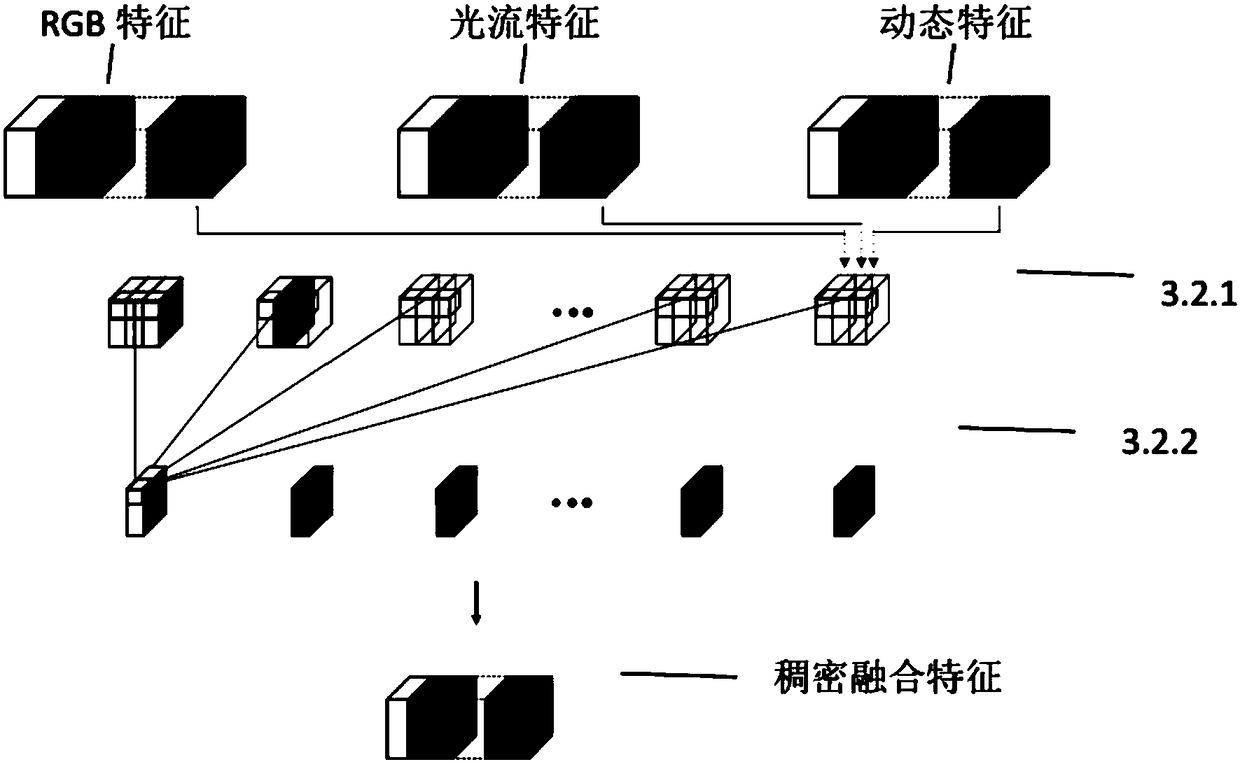

[0052] As shown in Figures 1 and 2, the deep learning-based human action recognition method of the present invention, which fuses multi-channel image features, is used to identify human actions in video. It comprises the following four steps:

[0053] (1) Extract the original RGB frames from the video, and compute the dynamic map and optical flow map of each video segment from the RGB frames;

[0054] (2) Crop the input pictures to augment the training data set;

[0055] (3) Construct a three-channel convolutional neural network, and feed the resulting video clips into it for training to obtain the corresponding network model;

[0056] (4) For the video clip to be recognized, extract the original RGB frames and compute the corresponding dynamic map and optical flow map, use the three-channel convolutional neural network trained in step (3) to extract features, and obtain...
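Steps (1) and (2) can be illustrated with a minimal Python sketch. It is not the patented implementation. The text above does not state how the dynamic map is computed or which cropping scheme is used, so the sketch assumes a simple linear rank-pooling weighting over time (weights 2t - T - 1 for frame t of T) and a four-corner-plus-center crop. The function names `dynamic_map` and `five_crops` are hypothetical.

```python
import numpy as np


def dynamic_map(frames: np.ndarray) -> np.ndarray:
    """Collapse a clip of shape (T, H, W, C) into a single image.

    Assumption: linear rank-pooling weights alpha_t = 2t - T - 1 for
    t = 1..T; the source does not give its own formula.
    """
    t_len = frames.shape[0]
    alphas = 2.0 * np.arange(1, t_len + 1) - t_len - 1
    # Weighted sum over time: later frames get positive weight,
    # earlier frames negative, so the result encodes motion direction.
    pooled = np.tensordot(alphas, frames.astype(np.float64), axes=(0, 0))
    # Rescale to [0, 255] so the result can be treated as an ordinary image.
    lo, hi = pooled.min(), pooled.max()
    if hi == lo:
        return np.zeros(pooled.shape, dtype=np.uint8)
    return ((pooled - lo) / (hi - lo) * 255.0).astype(np.uint8)


def five_crops(image: np.ndarray, size: int) -> list[np.ndarray]:
    """Return four corner crops and one center crop, each size x size.

    Assumption: this fixed-position scheme stands in for the unspecified
    cropping operation of step (2).
    """
    h, w = image.shape[:2]
    if size > h or size > w:
        raise ValueError("crop size exceeds image size")
    cy, cx = (h - size) // 2, (w - size) // 2
    origins = [(0, 0), (0, w - size), (h - size, 0), (h - size, w - size), (cy, cx)]
    return [image[y:y + size, x:x + size] for y, x in origins]
```

Each crop would be treated as one additional training sample. The optical flow map of step (1) is not covered here; one common option is a dense method such as OpenCV's `cv2.calcOpticalFlowFarneback`, though the source does not say which algorithm is used.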
