Three-dimensional reconstruction-based unmanned aerial vehicle image stitching method and system

A UAV image stitching technology based on three-dimensional reconstruction, applied in the field of remote sensing image stitching. It addresses the problems of slow stitching and deformation of the stitched images, and achieves the effect of improving stitching speed.


Embodiment 1

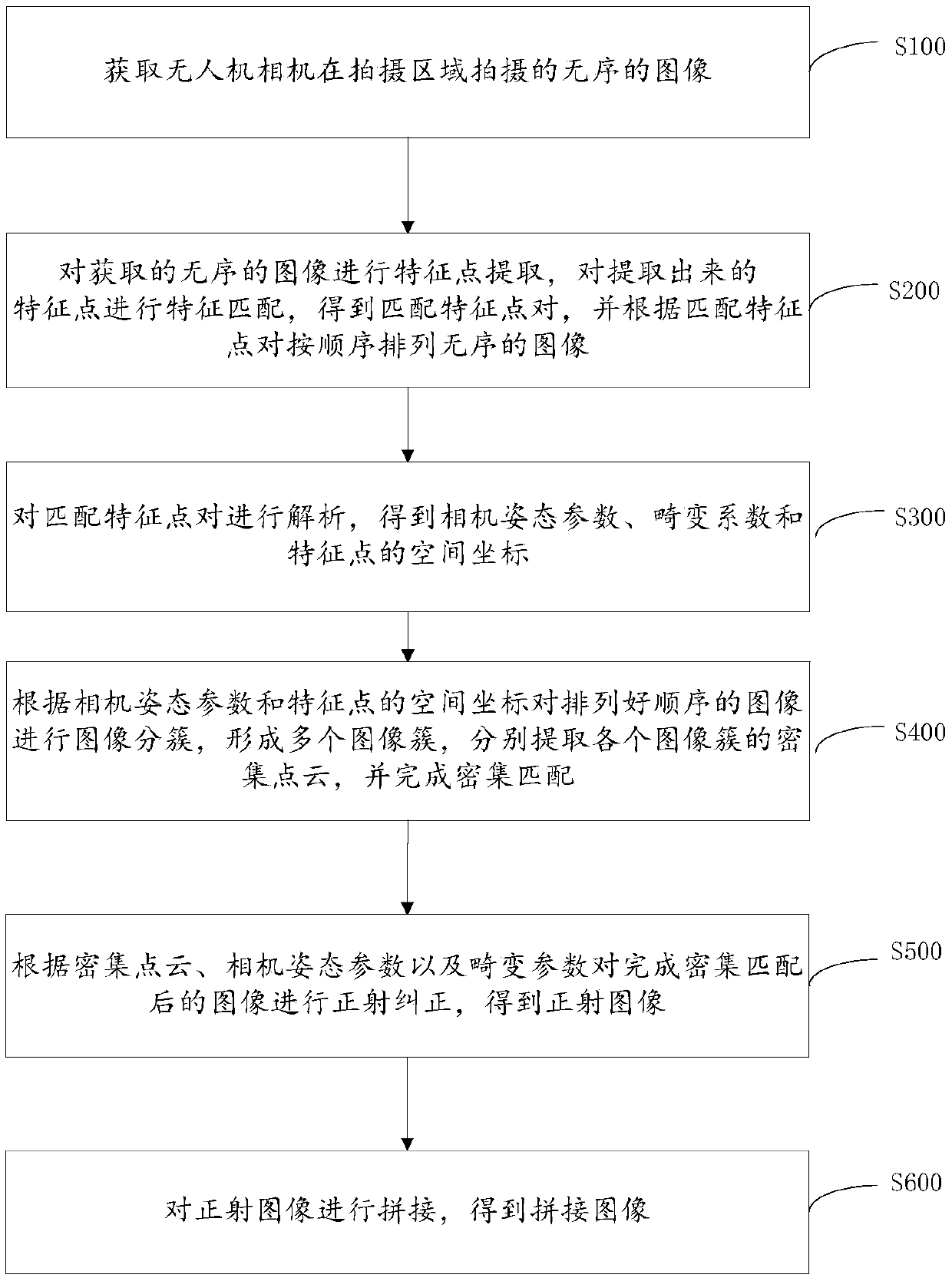

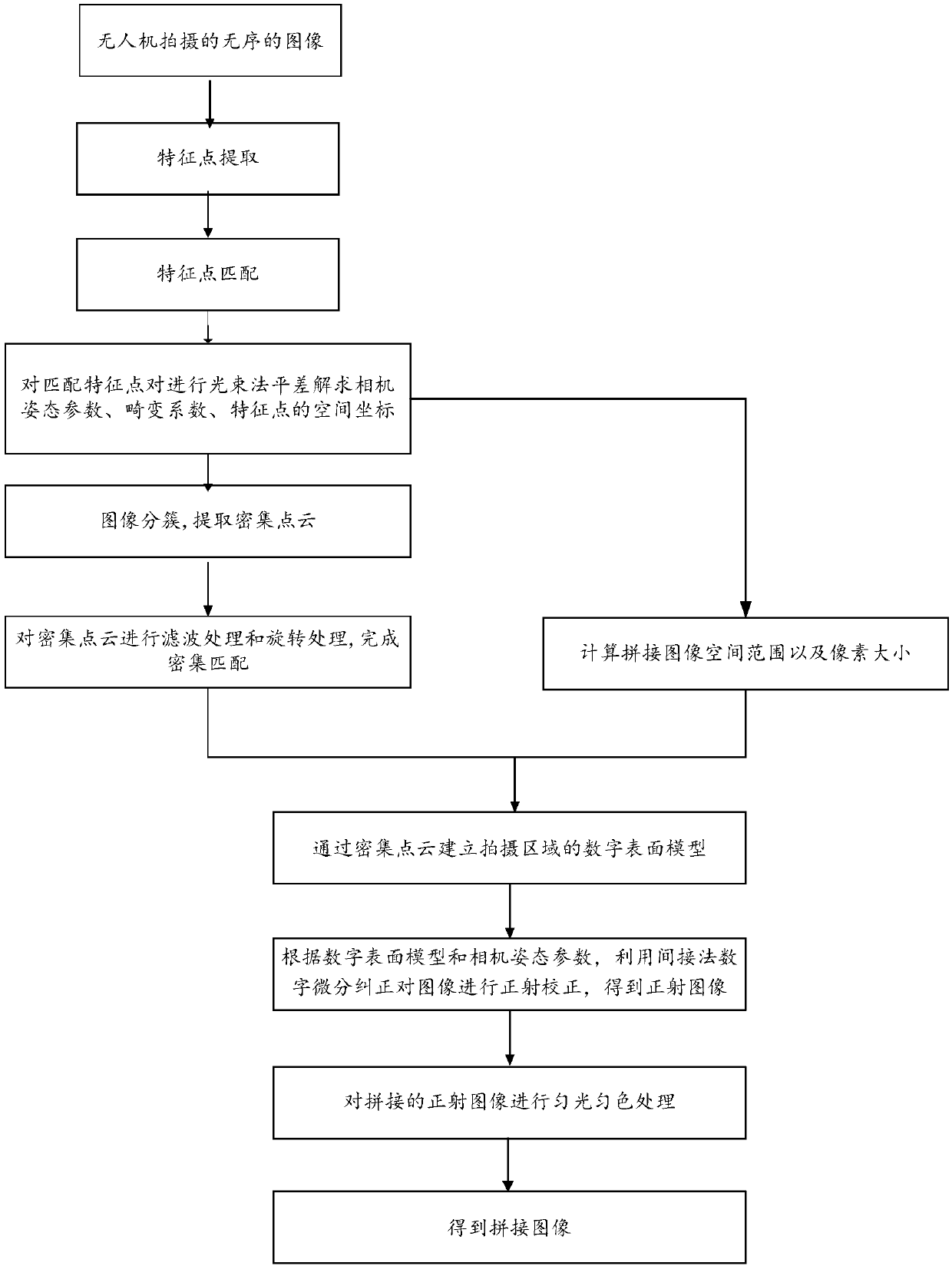

[0064] In a specific embodiment, a UAV image stitching method based on 3D reconstruction is provided, as shown in Figure 1, including:

[0065] Step S100: Obtain unordered images captured by the drone camera in the shooting area.

[0066] Step S200: Extract feature points from the acquired unordered images, perform feature matching on the extracted feature points to obtain matching feature point pairs, and arrange the unordered images in order according to the matching feature point pairs.
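Step S200 can be illustrated with a minimal sketch. The source does not specify how matches are filtered or how the ordering is derived, so everything here is an assumption for illustration only: the function names `match_descriptors` and `order_images` are hypothetical, the nearest-neighbor ratio test is a commonly used matching filter rather than the patent's stated method, and the greedy chaining rule (append the unplaced image that shares the most matches with the last placed one) is just one plausible way to order images from match pairs. Descriptors are plain tuples of numbers standing in for feature vectors.

```python
from itertools import combinations
from math import dist


def match_descriptors(desc_a, desc_b, ratio=0.75):
    """Return (i, j) index pairs matching descriptors of image A to image B.

    Assumption: a nearest-neighbor ratio test is used to reject ambiguous
    matches; the source text does not name a specific matching rule.
    """
    pairs = []
    for i, da in enumerate(desc_a):
        # Rank every descriptor in B by Euclidean distance to descriptor i of A.
        ranked = sorted((dist(da, db), j) for j, db in enumerate(desc_b))
        # Keep the match only if the best candidate is clearly better
        # than the second-best one.
        if len(ranked) >= 2 and ranked[0][0] < ratio * ranked[1][0]:
            pairs.append((i, ranked[0][1]))
    return pairs


def order_images(descriptors, ratio=0.75):
    """Arrange image names so that neighbors in the list share many matches.

    Assumption: a greedy chain starting from the first image; this is a
    hypothetical ordering rule, not the one defined in the patent.
    """
    names = list(descriptors)
    if not names:
        return []

    # Count matching feature point pairs for every image pair.
    counts = {}
    for a, b in combinations(names, 2):
        n = len(match_descriptors(descriptors[a], descriptors[b], ratio))
        counts[(a, b)] = counts[(b, a)] = n

    ordered = [names[0]]
    remaining = names[1:]
    while remaining:
        last = ordered[-1]
        # Pick the unplaced image with the most matches to the last placed one.
        best = max(remaining, key=lambda name: counts[(last, name)])
        ordered.append(best)
        remaining.remove(best)
    return ordered


# Toy data: img_c overlaps img_b, and img_b overlaps img_a.
toy = {
    "img_c": [(10, 10), (20, 20), (100, 100)],
    "img_a": [(0, 0), (10, 0), (0, 10)],
    "img_b": [(10, 0), (0, 10), (10, 10), (20, 20)],
}
sequence = order_images(toy)
```

The pairwise counting step is quadratic in the number of images, which is consistent with the source's remark that the processing workload is large for big image sets.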

[0067] The scale-invariant feature transform (SIFT) operator is used to extract feature points. The feature points include the SIFT feature points themselves and the SIFT feature vectors corresponding to them. Because the amount of acquired image data is large, the amount of computation during processing is also large, so using a graphics processing unit (GPU) for SIFT operator extraction can greatly improve the running s...

Embodiment 2

[0162] The present invention also provides a UAV image stitching system based on three-dimensional reconstruction, including:

[0163] The image acquisition module 10 is used to acquire the unordered images taken by the UAV camera in the shooting area;

[0164] The image arranging module 20 is used to extract feature points from the acquired unordered images, perform feature matching on the feature points to obtain matching feature point pairs, and arrange the unordered images in order according to the matching feature point pairs;

[0165] The feature point coordinate parsing module 30 is used to analyze the matching feature points to obtain the camera pose parameters, the distortion parameters, and the spatial coordinates of the feature points;

[0166] The dense matching module 40 is used to perform image clustering on the images arranged in order according to the camera pose parameters and the spatial coordinates of the feature poin...
