A multi-modal deep network embedding method for fusing structure and attribute information

A technique that fuses attribute information with a deep network, applied in the field of complex network analysis, which can solve problems such as lack of ...

Embodiment Construction

[0102] The following describes several preferred embodiments of the present invention with reference to the accompanying drawings, so as to make the technical content clearer and easier to understand. The present invention can be embodied in many different forms, and its scope of protection is not limited to the embodiments described herein.

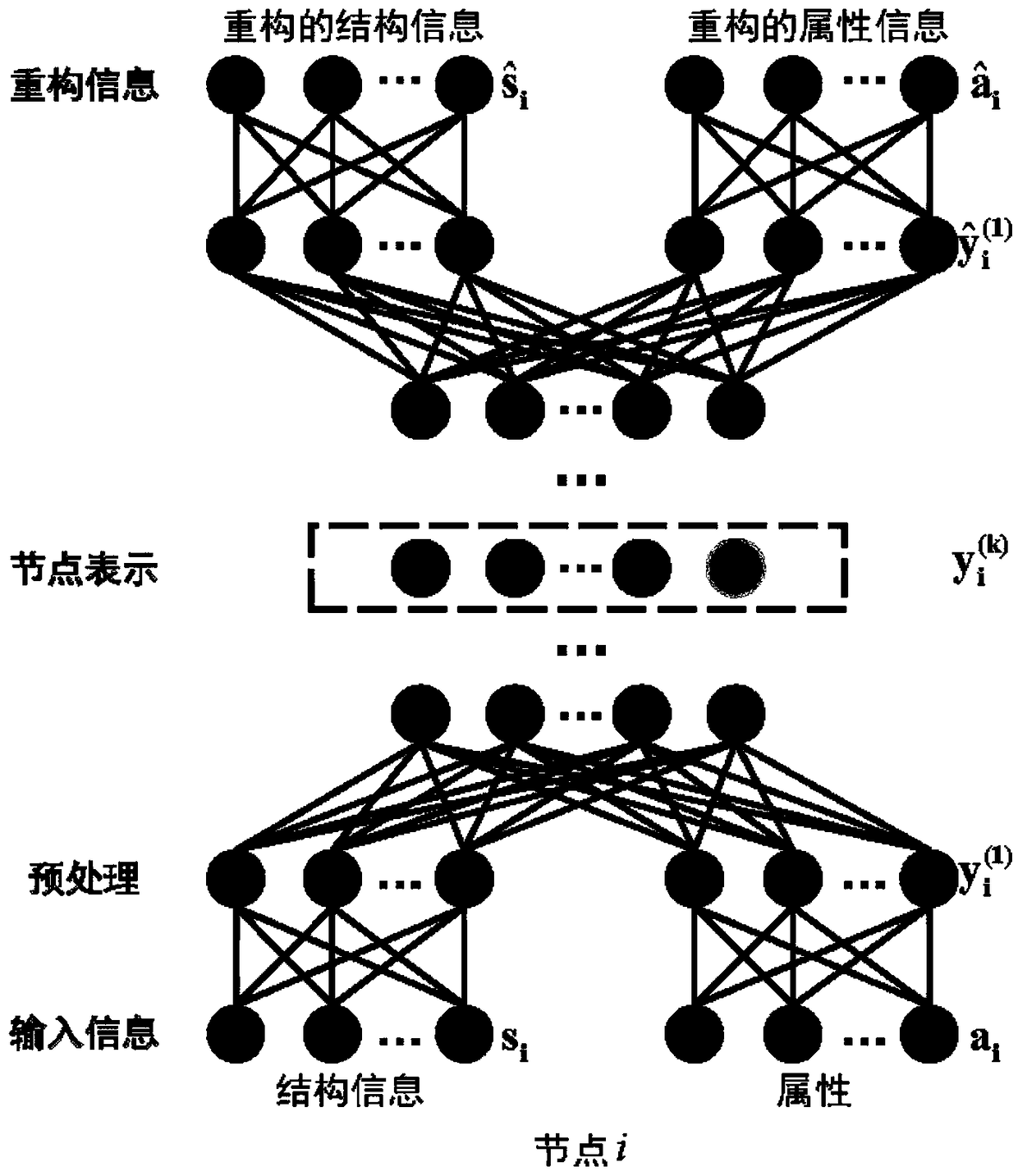

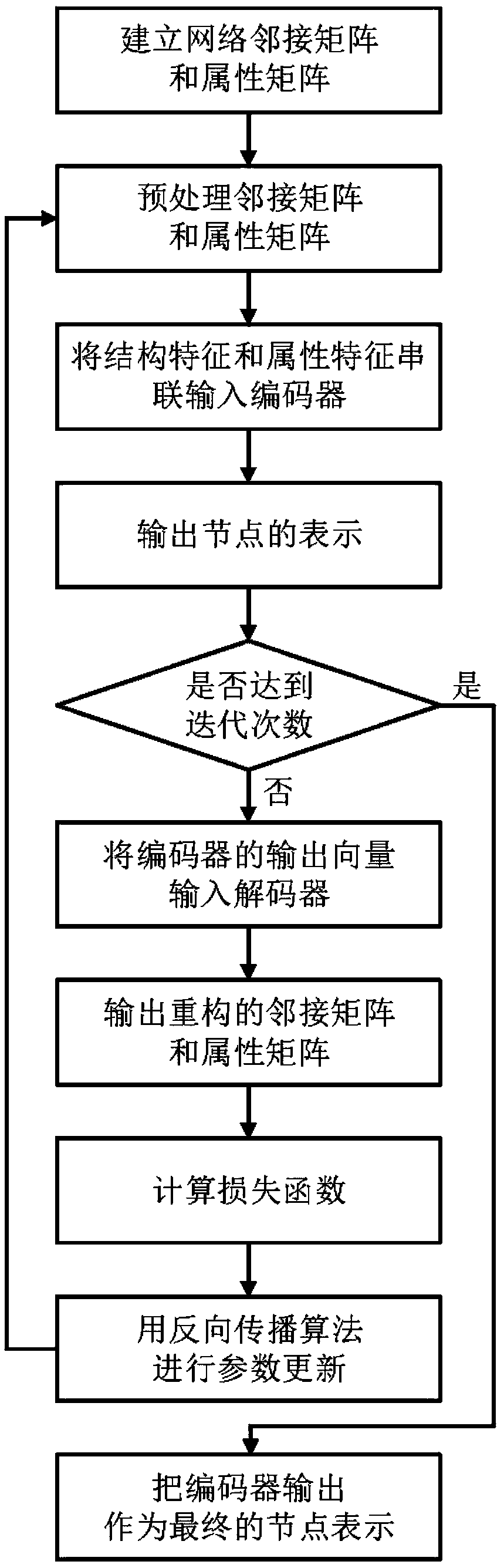

[0103] The invention provides a multi-modal deep network embedding method for fusing structure and attribute information, comprising the following steps:

[0104] Step 1: let t denote the index of the current iteration, with initial value t = 0;

[0105] Step 2: perform preprocessing calculations on the original structure information and the attribute information of each node to obtain the high-order structural feature $y_i^{s(1)}$ and the attribute feature $y_i^{a(1)}$;

[0106] Specifically, step 2 includes:

[0107] Step 2.1. Establish an adjacency matrix describing the original structure information of the network in the ...
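
As an illustration of the adjacency matrix mentioned in Step 2.1, the following minimal sketch builds one from an edge list. The source text is truncated at this point, so everything in the sketch is an assumption rather than the patented procedure: the function name `build_adjacency`, the toy edge list, the choice of an undirected, unweighted graph, and the reading of row i as the raw structure vector of node i are all hypothetical.

```python
from typing import List, Sequence, Tuple


def build_adjacency(num_nodes: int,
                    edges: Sequence[Tuple[int, int]]) -> List[List[int]]:
    """Return a symmetric 0/1 adjacency matrix.

    Assumption: the network is undirected and unweighted; the source
    does not state this in the visible text.
    """
    adj = [[0] * num_nodes for _ in range(num_nodes)]
    for u, v in edges:
        if not (0 <= u < num_nodes and 0 <= v < num_nodes):
            raise ValueError(f"edge ({u}, {v}) references an unknown node")
        adj[u][v] = 1
        adj[v][u] = 1  # mirror the entry because edges are undirected
    return adj


# Hypothetical toy network: 4 nodes connected in a chain.
edges = [(0, 1), (1, 2), (2, 3)]
adjacency = build_adjacency(4, edges)

# Assumption: row i is taken as the original structure vector of node i.
structure_vector_of_node_1 = adjacency[1]
```

Under these assumptions, each row records which nodes a given node is directly linked to; a weighted or directed network would need a different construction.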
