Brain-inspired robot navigation method based on Bayesian multimodal perception fusion

A navigation method in the field of robot technology, applied in navigation, mapping, and navigation computing tools, that achieves high biological fidelity and improves practicality.


Embodiment approach

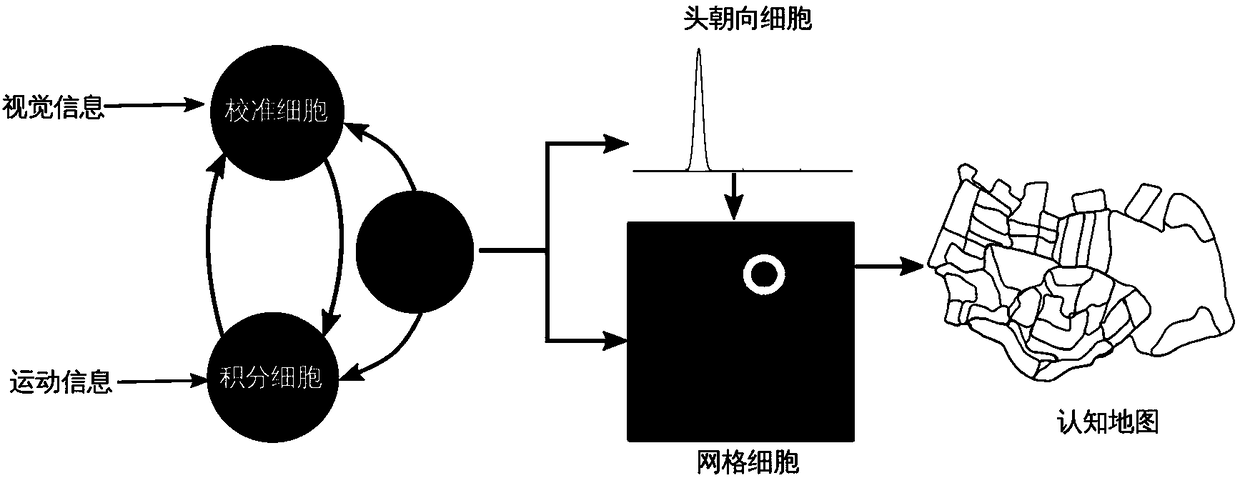

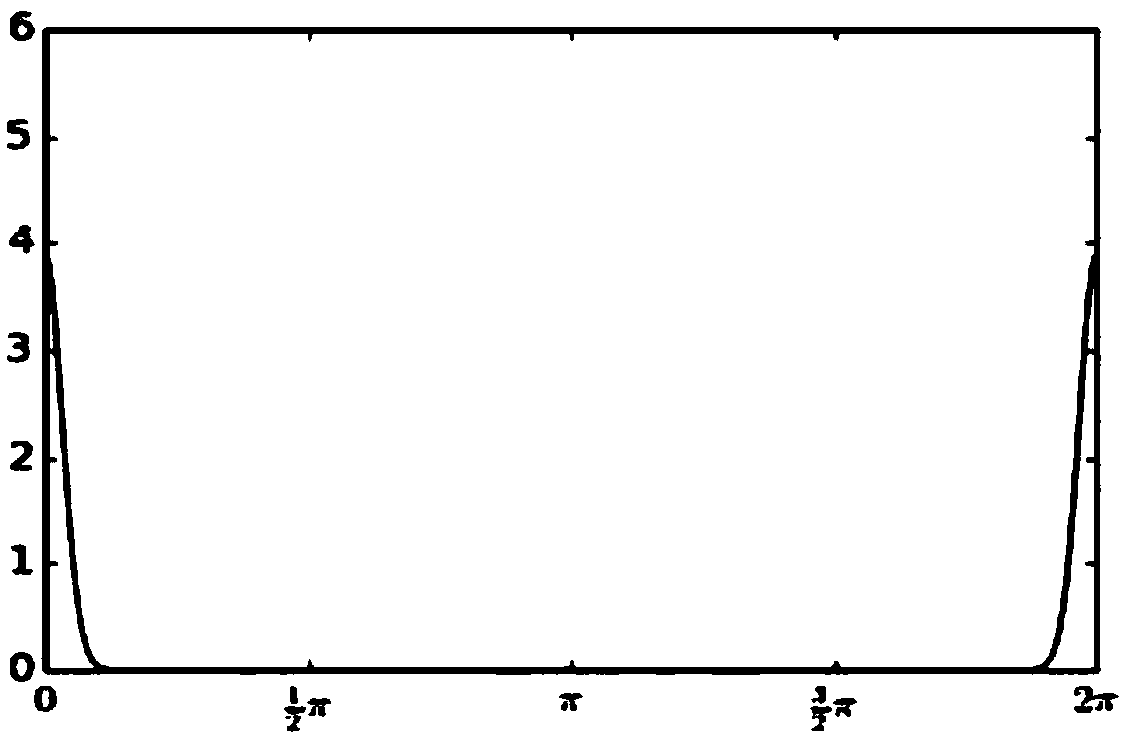

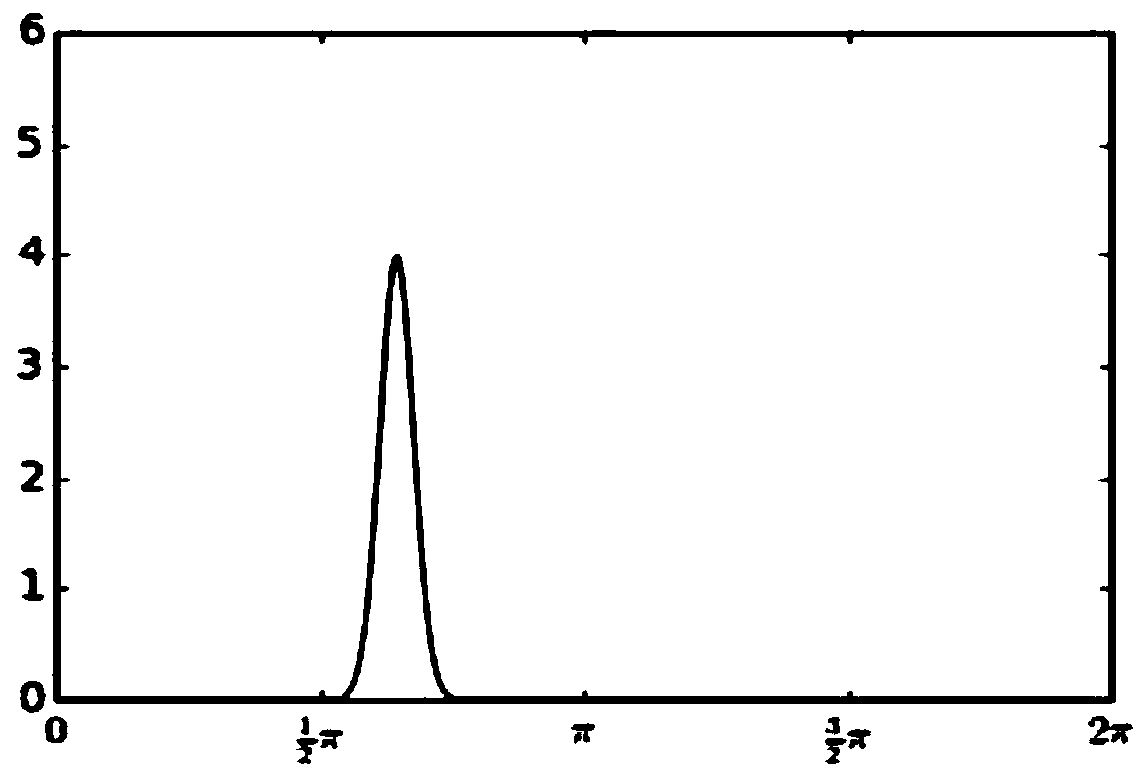

[0110] Step 3: fusing visual information through the calibration cells. When visual information is received, if the current view differs from everything previously observed, a new topological network node is created and no energy is injected into the head-direction cell network or the grid cell network. If the current view is similar to visual features seen at some earlier time and the similarity meets a given threshold, the corresponding visual cells are activated and energy is injected into the grid cell network and the head-direction cell network. Energy is injected into both networks in a similar manner, which changes the reliability (magnitude) and the mean position of the calibration cell probability distribution. Calibration is implemented as follows:

[0111]

[0112]

[0113] where the symbols denote, respectively, the strength of the injected energy and the location of the injected energy on the one-dimensional head-orient...
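The branching logic of Step 3 can be illustrated with a minimal Python sketch. The calibration equations in [0111] and [0112] are not reproduced in this text, so the sketch does not implement them. It substitutes a standard product-of-Gaussians Bayesian update as a stand-in for "energy injection", and it treats the head direction as a plain one-dimensional value without wraparound. All names (`Gaussian1D`, `cosine_similarity`, `fuse`, `calibrate`, `threshold`, `inject_var`) are hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class Gaussian1D:
    mean: float  # position of the distribution peak
    var: float   # variance; a smaller value means a more reliable estimate


def cosine_similarity(a, b):
    """Cosine similarity between two feature vectors (assumed similarity measure)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def fuse(prior, obs):
    """Product of two Gaussians: shifts the mean and reduces the variance."""
    var = 1.0 / (1.0 / prior.var + 1.0 / obs.var)
    mean = var * (prior.mean / prior.var + obs.mean / obs.var)
    return Gaussian1D(mean, var)


def calibrate(view, templates, estimate, threshold=0.9, inject_var=0.5):
    """Return (updated_estimate, created_new_node).

    templates is a list of (feature_vector, stored_position) pairs.
    threshold and inject_var are assumed tuning parameters.
    """
    best = max(templates, key=lambda t: cosine_similarity(view, t[0]), default=None)
    if best is None or cosine_similarity(view, best[0]) < threshold:
        # Unfamiliar view: create a new node and inject nothing.
        templates.append((list(view), estimate.mean))
        return estimate, True
    # Familiar view: treat the stored position as an observation and fuse it.
    return fuse(estimate, Gaussian1D(best[1], inject_var)), False
```

With one stored template at position 2.0 and a current estimate of mean 0.0 and variance 1.0, a matching view fused with `inject_var=1.0` moves the mean to 1.0 and halves the variance to 0.5. A non-matching view leaves the estimate unchanged and appends a new template.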
