Image super-resolution reconstruction method based on a feature-fusion generative adversarial network

A super-resolution reconstruction and feature fusion technique, applied to image reconstruction with generative adversarial networks. It addresses the poor quality and insufficient edge detail of reconstructed images, yielding clearer image edges and detail, a better reconstruction result, and lower computational complexity.


Specific Embodiments

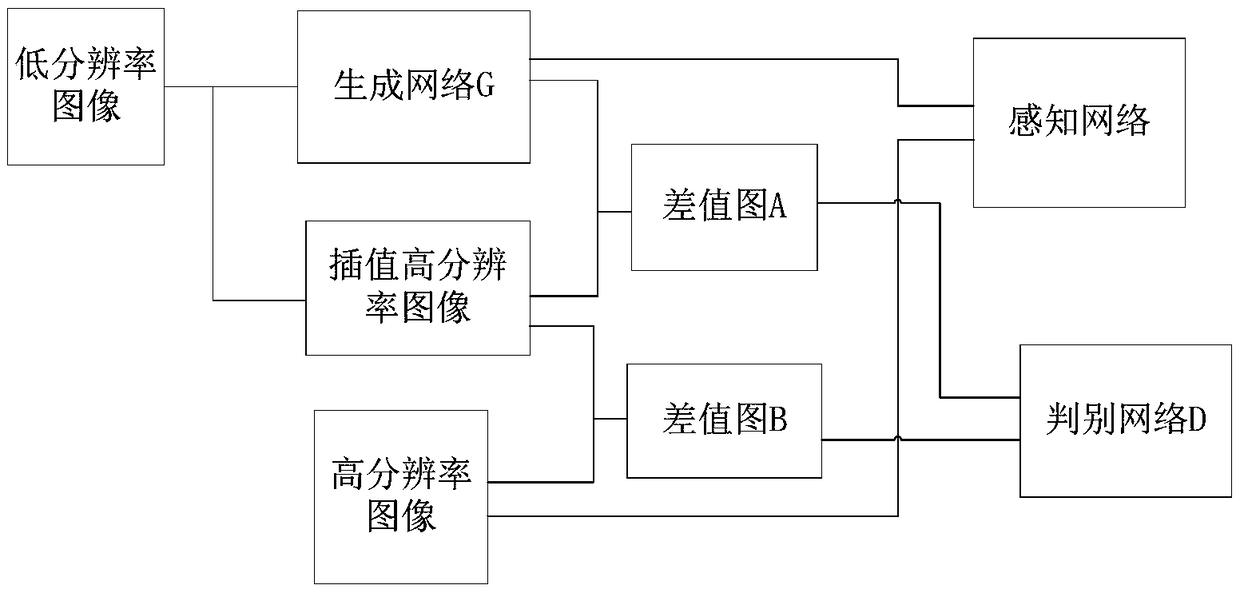

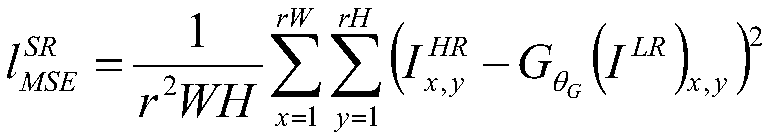

[0036] As shown in Figures 1 and 2, an image super-resolution reconstruction method based on a feature-fusion generative adversarial network includes the following steps:

[0037] A. Preprocess the ImageNet dataset to obtain a reconstruction dataset of corresponding high-resolution and low-resolution image pairs;

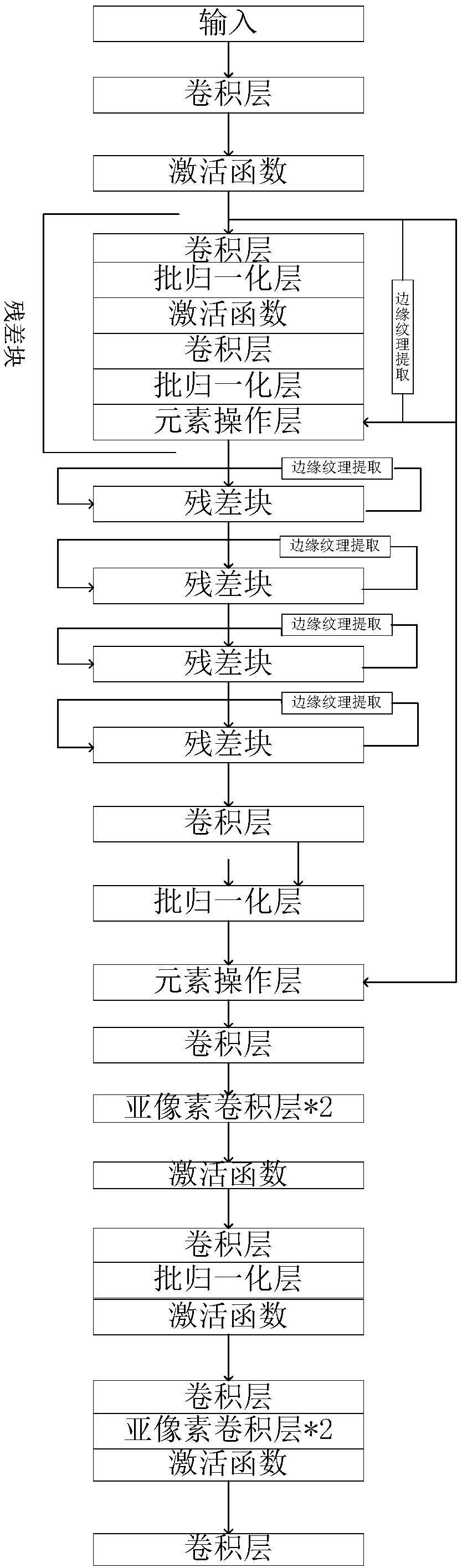

[0038] B. Construct a generative adversarial network model for training, introduce an interpolation reconstruction module into the model, and construct a generation network and a perception network with multi-feature fusion;

[0039] C. Feed the reconstruction dataset obtained in step A into the generative adversarial network in turn to train the model;

[0040] D. Normalize the image to be processed to obtain a low-resolution image, and input it into the trained generation network to obtain the reconstructed high-resolution image.
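The data handling in steps A and D can be illustrated with a minimal sketch. The text available here does not say how the low-resolution images are derived or which normalization range is used, so the average-pooling downsampler, the [0, 1] scaling, and the names `downsample`, `normalize`, and `make_pair` are all assumptions made for illustration, not the patent's procedure.

```python
from typing import List, Tuple

# Assumption: a grayscale image is a list of rows of pixel values in [0, 255].
Image = List[List[float]]


def downsample(hr: Image, scale: int) -> Image:
    """Average-pool an image by `scale` to simulate a low-resolution input.

    Hypothetical choice: the source does not name a downsampling kernel.
    """
    height, width = len(hr), len(hr[0])
    if height % scale or width % scale:
        raise ValueError("image sides must be divisible by scale")
    lr: Image = []
    for i in range(0, height, scale):
        row = []
        for j in range(0, width, scale):
            block = [hr[y][x]
                     for y in range(i, i + scale)
                     for x in range(j, j + scale)]
            row.append(sum(block) / len(block))
        lr.append(row)
    return lr


def normalize(img: Image, max_value: float = 255.0) -> Image:
    """Scale pixel values to [0, 1] (assumed normalization range)."""
    return [[pixel / max_value for pixel in row] for row in img]


def make_pair(hr: Image, scale: int = 4) -> Tuple[Image, Image]:
    """Return a normalized (low-resolution, high-resolution) training pair."""
    return normalize(downsample(hr, scale)), normalize(hr)


if __name__ == "__main__":
    hr_image = [
        [0, 0, 255, 255],
        [0, 0, 255, 255],
        [255, 255, 0, 0],
        [255, 255, 0, 0],
    ]
    lr_image, hr_norm = make_pair(hr_image, scale=2)
    print(len(lr_image), len(lr_image[0]))
```

In this sketch each pair would be one sample of the reconstruction dataset used in step C. At inference time (step D) only `normalize` would apply to the input before it is passed to the trained generation network.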

[0041] Further, the reconstruction dataset described in step A is produced as follows:

[0042] A1. Obtain the ImageNet data set, and r...
