A method for relative positioning of oblique camera based on deep learning

A deep-learning-based relative positioning technology, applied in photogrammetry/video metrology, instruments, surveying and mapping, navigation and related fields. It addresses the problem that positioning accuracy is strongly affected by conversion accuracy, and it improves relative positioning accuracy.

Examples

Embodiment 1

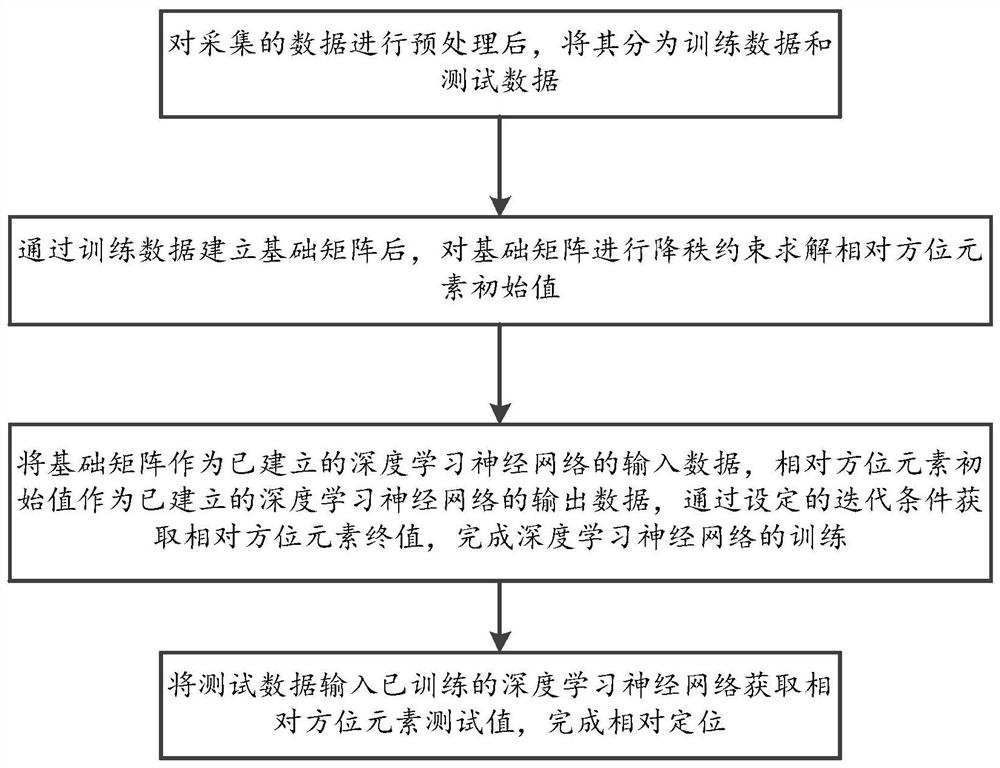

[0078] A deep-learning-based method for relative positioning of an oblique camera, comprising the following steps:

[0079] Step 1: After preprocessing the collected data, divide it into training data and test data;

[0080] Step 2: Establish the fundamental matrix from the training data, then apply the rank-reduction constraint to it to solve for the initial values of the relative orientation elements;

[0081] Step 3: Build a deep learning neural network that takes the fundamental matrix as input data and the initial values of the relative orientation elements as output data; iterate until the set iteration conditions are met to obtain the final values of the relative orientation elements, which completes the training of the network;

[0082] Step 4: Input the test data into the trained deep learning neural network to obtain test values of the relative orientation elements and complete the relative positioning...
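The training step (Step 3) and the inference step (Step 4) can be sketched as follows. This is a minimal illustration under stated assumptions, not the patented network: the source does not specify the architecture, loss or iteration conditions, so the one-hidden-layer MLP, the mean-squared-error loss, the tolerance/maximum-iteration stopping rule, the five output elements, the synthetic data and the names `train_orientation_net` and `predict` are all hypothetical. In the patent the training labels would be the initial orientation values from Step 2.

```python
import numpy as np


def train_orientation_net(F_train, y_train, hidden=16, lr=0.1,
                          max_iter=2000, tol=1e-4, seed=0):
    """Hypothetical Step 3: fit a one-hidden-layer MLP mapping flattened
    3x3 fundamental matrices to relative orientation elements.

    Stops when the mean-squared error falls below `tol` or after
    `max_iter` iterations (assumed iteration conditions).
    Returns (params, loss, iterations_run).
    """
    rng = np.random.default_rng(seed)
    X = np.asarray(F_train, dtype=float).reshape(len(F_train), -1)  # (n, 9)
    Y = np.asarray(y_train, dtype=float)                             # (n, k)
    W1 = rng.normal(0.0, 0.3, (X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.3, (hidden, Y.shape[1]))
    b2 = np.zeros(Y.shape[1])
    for it in range(1, max_iter + 1):
        H = np.tanh(X @ W1 + b1)        # hidden-layer activations
        err = H @ W2 + b2 - Y           # prediction error
        loss = float(np.mean(err ** 2))
        if loss < tol:                  # convergence condition met
            break
        # Backpropagate the MSE loss (using W2 before it is updated).
        g_out = 2.0 * err / err.size
        g_hid = (g_out @ W2.T) * (1.0 - H ** 2)
        W2 -= lr * (H.T @ g_out)
        b2 -= lr * g_out.sum(axis=0)
        W1 -= lr * (X.T @ g_hid)
        b1 -= lr * g_hid.sum(axis=0)
    return (W1, b1, W2, b2), loss, it


def predict(params, F):
    """Hypothetical Step 4: map test fundamental matrices to orientation elements."""
    W1, b1, W2, b2 = params
    X = np.asarray(F, dtype=float).reshape(len(F), -1)
    return np.tanh(X @ W1 + b1) @ W2 + b2


# Synthetic stand-in data: random 3x3 matrices and a made-up smooth target
# with five outputs (not real photogrammetric data).
rng = np.random.default_rng(1)
F_all = rng.normal(size=(200, 3, 3))
y_all = 0.1 * F_all.reshape(200, -1) @ rng.normal(size=(9, 5))
params, loss, iters = train_orientation_net(F_all[:140], y_all[:140])
y_test = predict(params, F_all[140:])   # one row of elements per test pair
```

A small dense network is used only because it keeps the sketch dependency-free; any regressor from a 9-value input to the orientation elements would fit the same input/output roles described in Step 3.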

Embodiment 2

[0085] Based on embodiment 1, step 1 includes the following steps:

[0086] Step 1.1: Set a threshold and use it to filter out and delete the extreme values, which include the minimum and maximum values;

[0087] Step 1.2: Divide the data remaining after extreme-value removal into training data and test data at a ratio of 6:4 or 7:3.
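Steps 1.1 and 1.2 can be sketched as below. The source does not say which quantity is compared against the threshold, so the per-sample `values` array, the inclusive `[low, high]` bounds, the random shuffle before splitting and the function names are assumptions made for illustration.

```python
import numpy as np


def remove_extremes(samples, values, low, high):
    """Step 1.1 sketch: keep only samples whose associated value lies in
    [low, high]. `values` is a hypothetical per-sample quantity; the source
    does not name what is thresholded."""
    samples = np.asarray(samples)
    values = np.asarray(values, dtype=float)
    keep = (values >= low) & (values <= high)
    return samples[keep]


def split_train_test(samples, train_ratio=0.7, seed=0):
    """Step 1.2 sketch: shuffle, then split into training and test sets.
    train_ratio=0.7 gives 7:3; train_ratio=0.6 gives 6:4."""
    samples = np.asarray(samples)
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(samples))
    n_train = int(round(train_ratio * len(samples)))
    return samples[idx[:n_train]], samples[idx[n_train:]]
```

For example, `split_train_test(data, 0.6)` would produce the 6:4 split mentioned in Step 1.2.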

[0088] Step 2 includes the following steps:

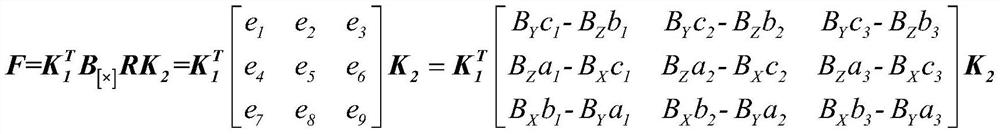

[0089] Step 2.1: Establish the fundamental matrix F from the stereo pairs in the training data. Each stereo pair consists of a left photo and a right photo that share a plurality of homologous (same-name) image points. The equation is as follows:

[0090]

[0091] where $F$ is the fundamental matrix between the two images, $B$ is the photographic baseline vector, $[B]_\times$ is the cross-product matrix of vector $B$, and $m_1$, $m_2$ denote the image-space auxiliary coordinates of the image point w...
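The quantities defined in Step 2.1 can be illustrated with the standard photogrammetric coplanarity condition and the usual SVD-based rank reduction used in Step 2. This is a hedged sketch: the residual form $m_1^\top [B]_\times m_2$, which vanishes when $B$, $m_1$ and $m_2$ are coplanar, and the projection to the nearest rank-2 matrix in the Frobenius norm are common textbook choices assumed here, not the patent's own formulation; the helper names `skew`, `coplanarity_residual` and `enforce_rank2` are hypothetical.

```python
import numpy as np


def skew(b):
    """Cross-product matrix [b]x, so that skew(b) @ v == np.cross(b, v)."""
    bx, by, bz = b
    return np.array([[0.0, -bz, by],
                     [bz, 0.0, -bx],
                     [-by, bx, 0.0]])


def coplanarity_residual(B, m1, m2):
    """Assumed residual m1^T [B]x m2 = m1 . (B x m2).
    It is zero when the baseline and both image rays are coplanar."""
    return float(m1 @ skew(B) @ m2)


def enforce_rank2(F):
    """Assumed rank-reduction step: zero the smallest singular value,
    giving the closest rank-2 matrix to F in the Frobenius norm."""
    U, s, Vt = np.linalg.svd(F)
    s[2] = 0.0
    return U @ np.diag(s) @ Vt


# Example: a ground point P seen from the left centre (origin) and the
# right centre (at B), with both rays in the same auxiliary frame.
B = np.array([1.0, 0.0, 0.0])
P = np.array([0.3, -0.2, -5.0])
r = coplanarity_residual(B, P, P - B)   # ~0 because the rays meet at P
```

Zeroing the smallest singular value is the usual way to impose the rank-2 property a fundamental matrix must have; a least-squares estimate from noisy homologous points generally lacks it.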
