System level testing of entropy encoding

A technique for system-level testing of entropy coding, built around entropy coding and codewords

Embodiment Construction

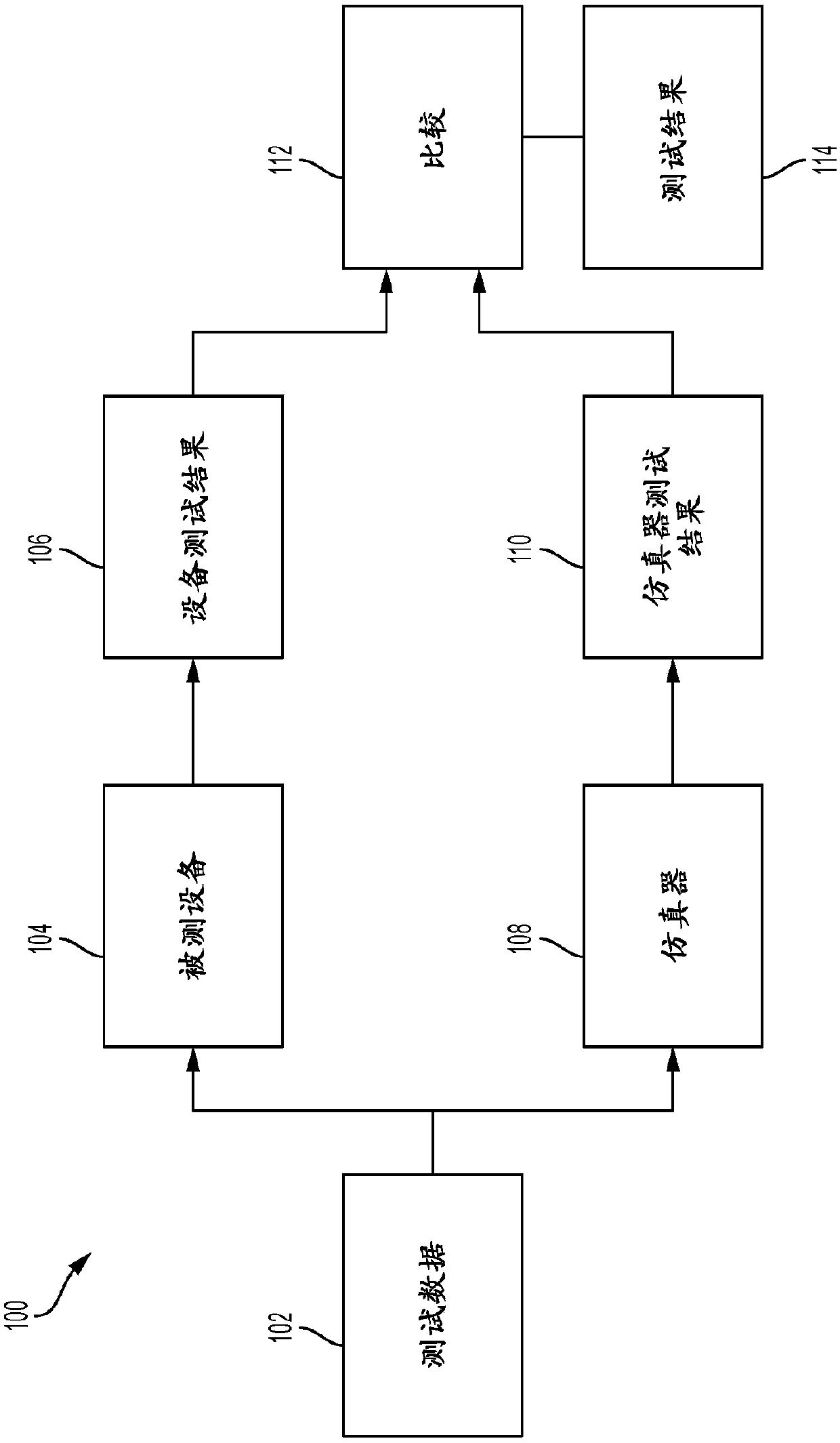

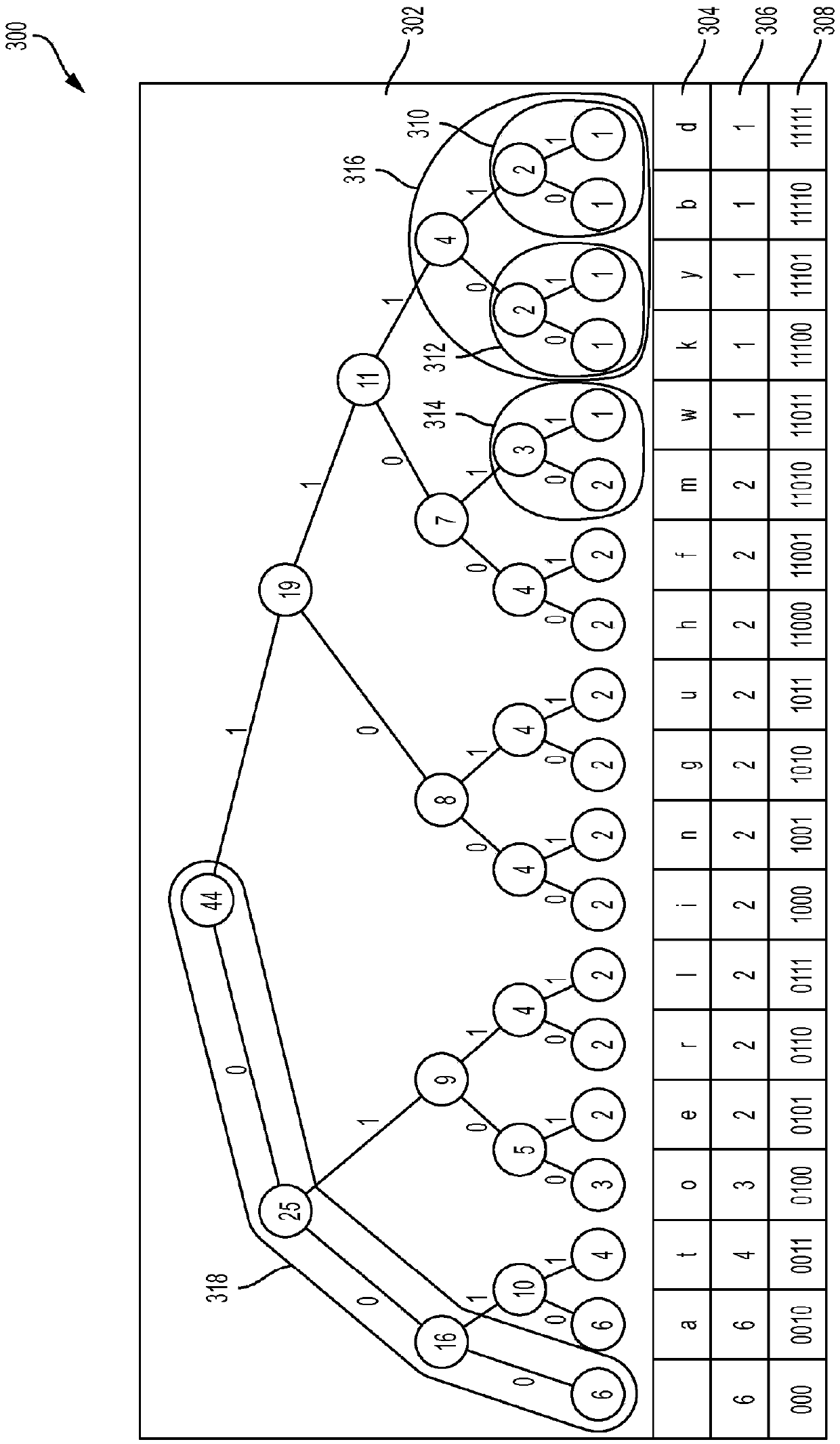

[0016] Embodiments described herein provide system-level testing of entropy encoding in a computer system that implements two-stage compression. One or more embodiments provide a method of testing a two-stage compression process and its corresponding expansion using pseudo-random test generation. The two-stage compression/expansion process may use Lempel-Ziv type coding as the first stage and entropy encoding based on Huffman compression techniques as the second stage. According to one or more embodiments, compression and expansion are the two main components used to test entropy encoding. For compression, a Huffman tree is generated for all possible combinations based on the input data, and a Symbol Translation Table (STT) with left-justified codewords is built. Additionally, an Entropy Encoding Descriptor (EED) that defines the Huffman tree is generated; it includes an indication of the number of bits in the STT (used during compression) and the number of input bits for symbol ind...
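The second-stage flow described above can be illustrated with a minimal Python sketch. It is an illustration under stated assumptions, not the patented implementation: it omits the Lempel-Ziv first stage, it assumes canonical Huffman code assignment, and the table layout (a left-justified integer plus a bit length per symbol) is only a stand-in for the STT, whose actual format, like that of the EED, is not given in this text. All function names (`huffman_code_lengths`, `build_table`, `compress`, `expand`, `round_trip`) are hypothetical.

```python
import heapq
import random
from collections import Counter


def huffman_code_lengths(freqs):
    """Return {symbol: code length in bits} for a Huffman tree over freqs."""
    if len(freqs) == 1:
        return {next(iter(freqs)): 1}
    # Heap entries: (weight, unique tie-breaker, symbols in this subtree).
    heap = [(w, i, [s]) for i, (s, w) in enumerate(sorted(freqs.items()))]
    heapq.heapify(heap)
    lengths = dict.fromkeys(freqs, 0)
    counter = len(heap)
    while len(heap) > 1:
        w1, _, s1 = heapq.heappop(heap)
        w2, _, s2 = heapq.heappop(heap)
        for s in s1 + s2:  # every symbol under the merged node gets one bit deeper
            lengths[s] += 1
        heapq.heappush(heap, (w1 + w2, counter, s1 + s2))
        counter += 1
    return lengths


def build_table(lengths):
    """Assumed table layout: {symbol: (left-justified codeword, length)}, plus entry width."""
    width = max(lengths.values())
    table, code, prev_len = {}, 0, 0
    for sym, n in sorted(lengths.items(), key=lambda kv: (kv[1], kv[0])):
        code <<= n - prev_len                 # canonical code assignment
        table[sym] = (code << (width - n), n)  # left-justify within `width` bits
        code += 1
        prev_len = n
    return table, width


def compress(data, table, width):
    """Encode data as a string of '0'/'1' characters using the table."""
    out = []
    for sym in data:
        code, n = table[sym]
        out.append(format(code >> (width - n), f"0{n}b"))  # keep the top n bits
    return "".join(out)


def expand(bits, table, width):
    """Decode a bit string produced by compress() back into bytes."""
    decode = {format(c >> (width - n), f"0{n}b"): s for s, (c, n) in table.items()}
    out, cur = [], ""
    for b in bits:
        cur += b
        if cur in decode:  # codes are prefix-free, so the first match is the symbol
            out.append(decode[cur])
            cur = ""
    assert cur == "", "trailing bits do not form a codeword"
    return bytes(out)


def round_trip(seed, length=200, alphabet=8):
    """Pseudo-random test case: generate data, compress, expand, compare."""
    rng = random.Random(seed)  # seeded so that a failing case can be reproduced
    weights = [rng.randint(1, 50) for _ in range(alphabet)]
    data = bytes(rng.choices(range(alphabet), weights=weights, k=length))
    table, width = build_table(huffman_code_lengths(Counter(data)))
    return expand(compress(data, table, width), table, width) == data
```

Calling `round_trip(seed)` over a range of seeds gives a simple pseudo-random test loop. Because each case is derived from its seed, any seed that returns `False` can be replayed to reproduce the failing input.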
