A task-oriented dialogue system based on deep network learning

A dialogue system using deep network technology, applied in the field of recommendation systems, that addresses problems such as the inability to capture word position information and the inability to recognize words absent from the vocabulary.

Examples

Embodiment 1

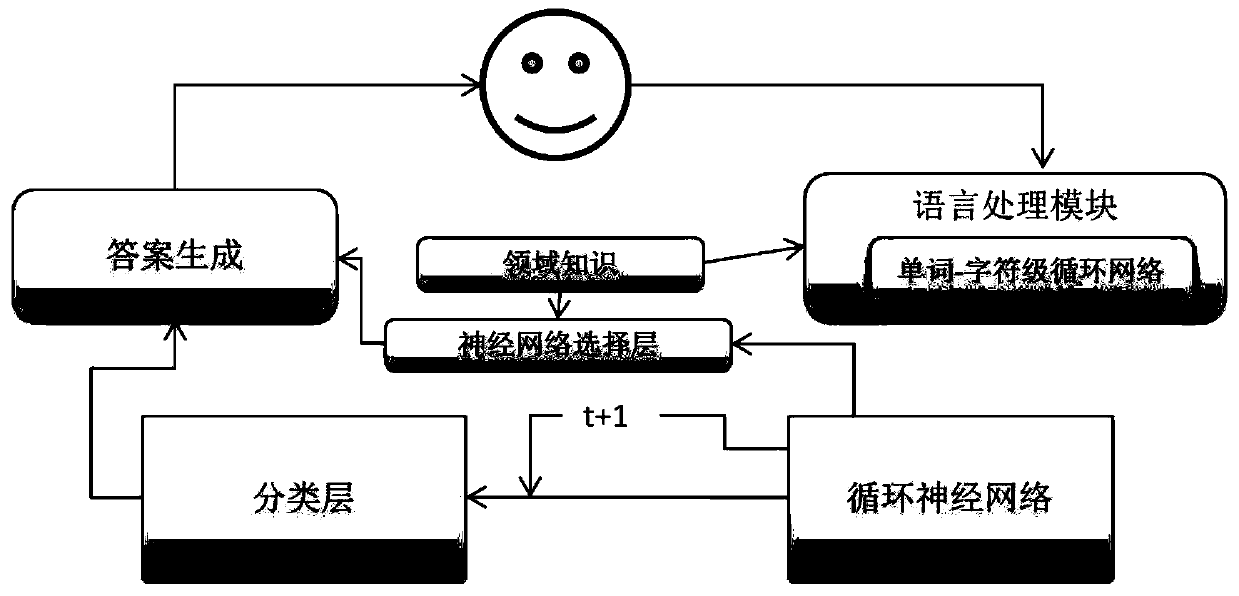

[0041] As shown in Figures 1 and 2, a task-oriented dialogue system based on deep network learning includes a language processing module, a recurrent neural network, a classification layer module, an answer generation module, a domain knowledge module, and a neural network selection layer module. The language processing module includes a word-character-level recurrent network, and the domain knowledge module includes answer templates.

[0042] The workflow of the system is as follows:

[0043] S10. In the language understanding process, the word-character-level recurrent network encodes the user's input $Q_t$ and the dialogue system's final answer $A_t$, yielding the sentence vectors $O_q(t)$ and $O_a(t)$ that correspond to the user's latest input and the system's final answer, respectively. After encoding, the two sentence vectors are concatenated and used as the input of the recurrent neural network: ...
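The splicing step in S10 can be sketched as follows. This is a minimal illustration only: the source text is truncated before it gives the actual formula, so the plain tanh recurrent update, the toy dimensions, the random weights, and the names `concat` and `rnn_step` are all assumptions made for the example.

```python
import math
import random


def concat(o_q, o_a):
    """Splice the two sentence vectors into one input vector."""
    return list(o_q) + list(o_a)


def rnn_step(x, h_prev, w_in, w_hid):
    """One plain tanh recurrent update (assumed cell type, not from the source)."""
    return [
        math.tanh(
            sum(w * xi for w, xi in zip(w_in[i], x))
            + sum(w * hj for w, hj in zip(w_hid[i], h_prev))
        )
        for i in range(len(h_prev))
    ]


rng = random.Random(0)
SENT_DIM, HIDDEN_DIM = 3, 2  # toy sizes (assumption)

# Randomly initialised weights; a real system would learn these.
w_in = [[rng.uniform(-0.5, 0.5) for _ in range(2 * SENT_DIM)]
        for _ in range(HIDDEN_DIM)]
w_hid = [[rng.uniform(-0.5, 0.5) for _ in range(HIDDEN_DIM)]
         for _ in range(HIDDEN_DIM)]

# Made-up sentence vectors for two dialogue turns: (O_q(t), O_a(t)).
turns = [
    ([0.1, 0.2, 0.3], [0.0, 0.0, 0.0]),
    ([0.4, 0.1, 0.0], [0.2, 0.5, 0.1]),
]

h = [0.0] * HIDDEN_DIM  # dialogue state carried across turns
for o_q, o_a in turns:
    x = concat(o_q, o_a)          # input of size 2 * SENT_DIM
    h = rnn_step(x, h, w_in, w_hid)
```

Because the hidden state `h` is carried from one turn to the next, each update sees both the current pair of sentence vectors and a summary of the earlier turns.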

Embodiment 2

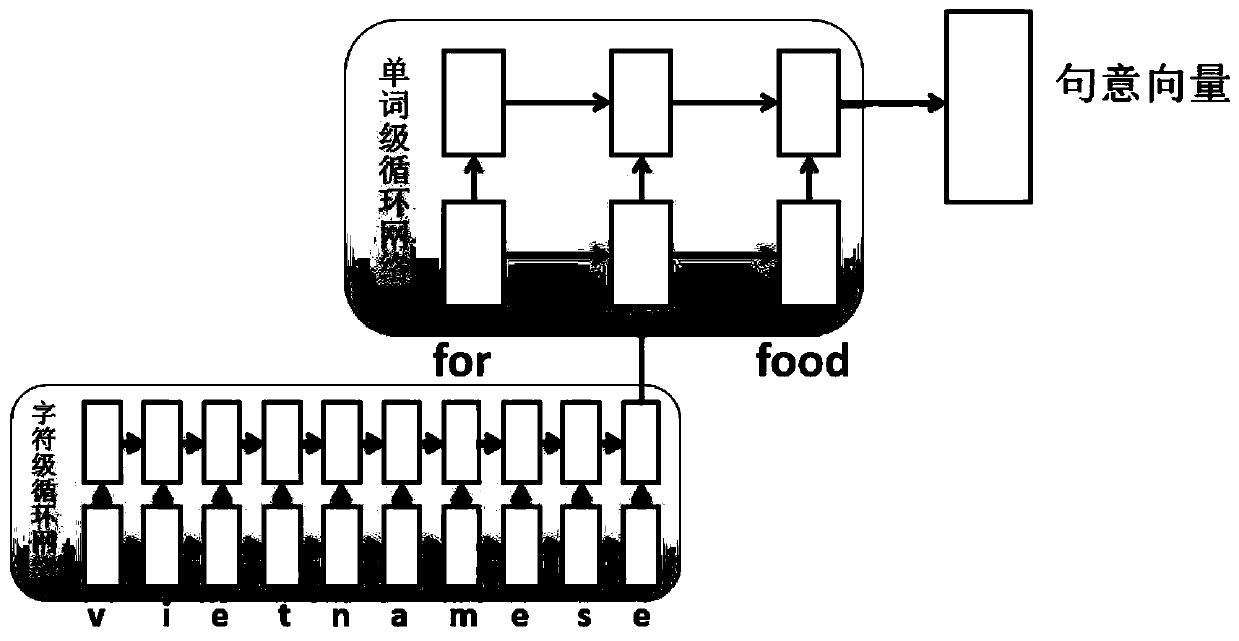

[0067] This embodiment is a specific embodiment of the word-character-level recurrent network, as shown in Figure 2. Some words, such as proper nouns or typos entered by the user, are not in word2vec, so word2vec cannot be used to obtain the corresponding word vector. To address this, we introduce a character-level recurrent neural network to encode unseen words.

[0068] A detailed illustration of this technique can be seen in the figure. Consider the encoding of the sentence "for vietnamese food?". The words "for" and "food" can be found directly in word2vec, so their word vectors are looked up. The word "vietnamese", however, has never been seen before, so it is converted character by character into the corresponding one-hot encodings, which are fed into the character-level recurrent neural network. The final hidden state of that network is then taken as the word vector.
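The lookup-with-fallback behaviour described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the patent's implementation: `WORD_VECTORS` is a made-up stand-in for a pretrained word2vec table, the recurrent cell is a plain tanh cell with untrained random weights, and the alphabet, dimensions, and function names are hypothetical.

```python
import math
import random
import string

EMBED_DIM = 4                      # toy word-vector size (assumption)
ALPHABET = string.ascii_lowercase  # character inventory (assumption)

# Stand-in for a pretrained word2vec table; the values are made up.
WORD_VECTORS = {
    "for": [0.1, 0.2, 0.3, 0.4],
    "food": [0.5, 0.1, 0.0, 0.2],
}

rng = random.Random(0)
# Randomly initialised recurrent weights; a real system would train these.
W_IN = [[rng.uniform(-0.5, 0.5) for _ in ALPHABET] for _ in range(EMBED_DIM)]
W_HID = [[rng.uniform(-0.5, 0.5) for _ in range(EMBED_DIM)]
         for _ in range(EMBED_DIM)]


def one_hot(ch):
    """Return the one-hot encoding of a character over ALPHABET."""
    return [1.0 if ch == c else 0.0 for c in ALPHABET]


def char_rnn_encode(word):
    """Feed the word character by character; return the final hidden state."""
    h = [0.0] * EMBED_DIM
    for ch in word:
        x = one_hot(ch)
        h = [
            math.tanh(
                sum(w * xi for w, xi in zip(W_IN[i], x))
                + sum(w * hj for w, hj in zip(W_HID[i], h))
            )
            for i in range(EMBED_DIM)
        ]
    return h


def encode_word(word):
    """Use the lookup table when the word is known, else the character RNN."""
    if word in WORD_VECTORS:
        return WORD_VECTORS[word]
    return char_rnn_encode(word)


def encode_sentence(sentence):
    """Lower-case, drop the question mark, split on spaces, encode each token."""
    tokens = sentence.lower().strip("?").split()
    return [encode_word(tok) for tok in tokens]


vectors = encode_sentence("for vietnamese food?")
```

Here "for" and "food" come straight from the table, while "vietnamese" is routed through the character-level network. Keeping both paths at the same output dimension lets the downstream sentence encoder treat known and unseen words uniformly.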

Embodiment 3

[0070] This embodiment describes how hand-written code is combined with the neural network, how the neural network is selected, and how domain knowledge is introduced into the hierarchical hybrid code network. Domain knowledge is applied mainly in the following aspects: key entity recognition, action template summarization, and database object recommendation.

[0071] Taking the data set in Table 1 as an example, there are four entities in this task: dishes, unregistered dishes, locations, and prices. We use simple string matching to find the corresponding entities in the user input, and then represent the presence or absence of each entity with a binary vector. Next, we summarize from the training set the templates that the dialogue system uses to answer. For example, "pipasha restaurant is a nice place in the east of town and the prices are expensive" can be abstracted as "___ is a nice place in the ___ of town and the prices are ___", where each blank is a slot for an entity value. In this way, we have summed up a total of 77 t...
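The string matching, binary presence vector, and template abstraction described above can be sketched as follows. This is a hedged illustration: the lexicon values, the slot names, the `<slot>` marker syntax, and all function names are hypothetical, and the sketch covers only three entity types because the source does not say how unregistered dishes are matched.

```python
import re

# Hypothetical entity lexicons; the source does not list the actual values.
ENTITY_LEXICON = {
    "cuisine": ["vietnamese", "italian", "indian"],
    "location": ["east", "west", "north", "south", "centre"],
    "price": ["cheap", "moderate", "expensive"],
}
ENTITY_ORDER = ["cuisine", "location", "price"]  # fixed order of vector positions


def find_entities(utterance):
    """Return {entity_type: matched value} using whole-word string matching."""
    found = {}
    text = utterance.lower()
    for etype, values in ENTITY_LEXICON.items():
        for value in values:
            if re.search(r"\b" + re.escape(value) + r"\b", text):
                found[etype] = value
                break  # keep the first match per entity type
    return found


def presence_vector(utterance):
    """Binary vector: 1 if the entity type occurs in the utterance, else 0."""
    found = find_entities(utterance)
    return [1 if etype in found else 0 for etype in ENTITY_ORDER]


def to_template(answer, slot_values):
    """Replace each known value in a system answer with a <slot> marker."""
    template = answer
    for slot, value in slot_values.items():
        template = template.replace(value, "<" + slot + ">")
    return template


vec = presence_vector("i want cheap vietnamese food")

template = to_template(
    "pipasha restaurant is a nice place in the east of town "
    "and the prices are expensive",
    {"name": "pipasha restaurant", "location": "east", "price": "expensive"},
)
```

With these toy lexicons, `vec` marks the cuisine and price positions and leaves the location position at zero, and `template` keeps the fixed wording of the answer while the restaurant name, location, and price become slots. Answers that differ only in entity values then collapse to the same template.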
