A Video Description Method Based on Object Attribute Relationship Graph

A video description technology based on object attributes, applied in the field of image processing. It addresses problems such as the need for a large amount of labeled data, the lack of semantic perception information, and features that remain at the primary visual level, and it achieves good scalability.

Embodiment Construction

[0035] The present invention will be described in further detail below.

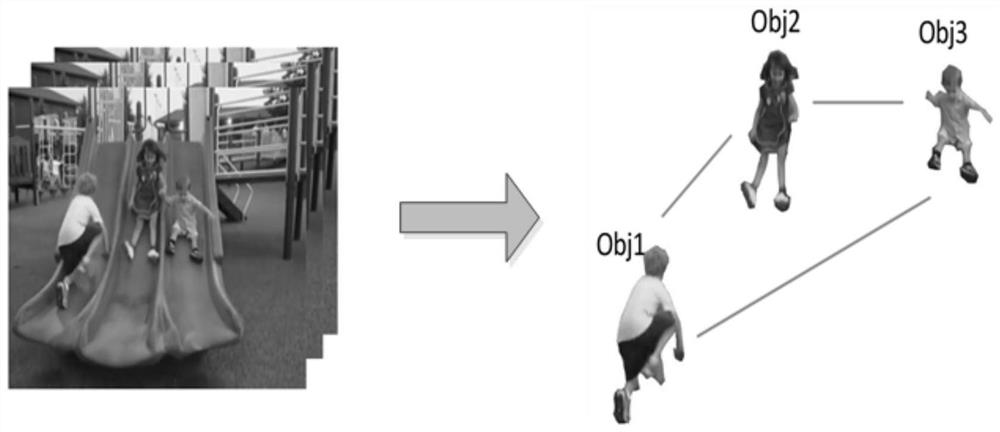

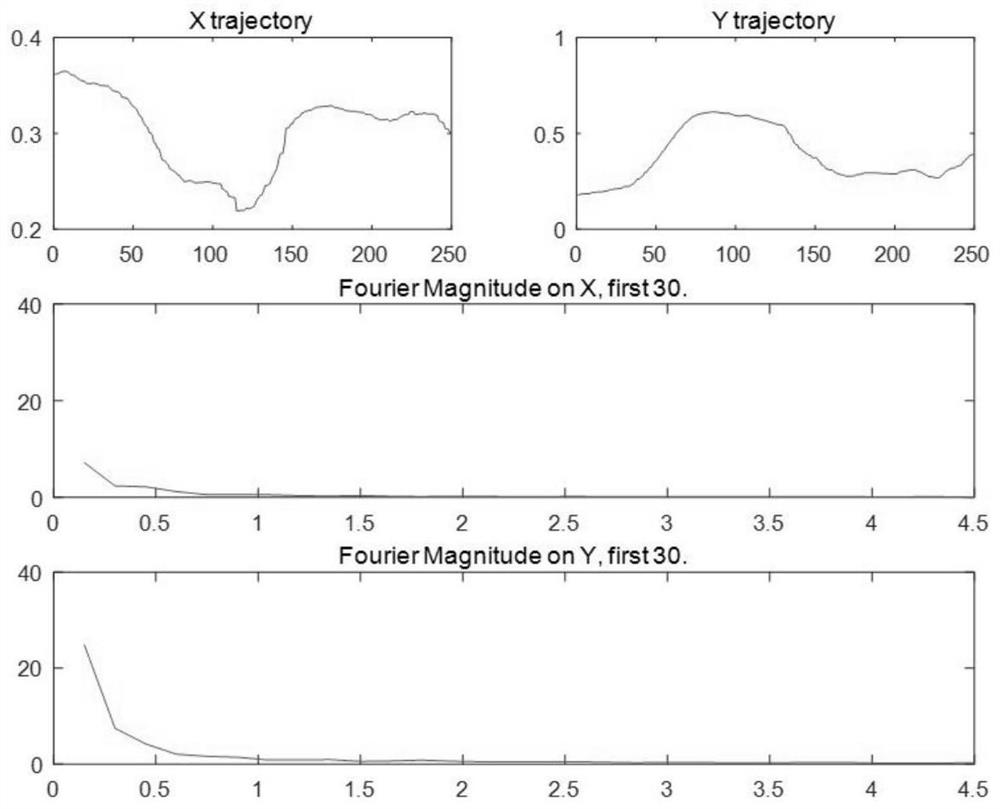

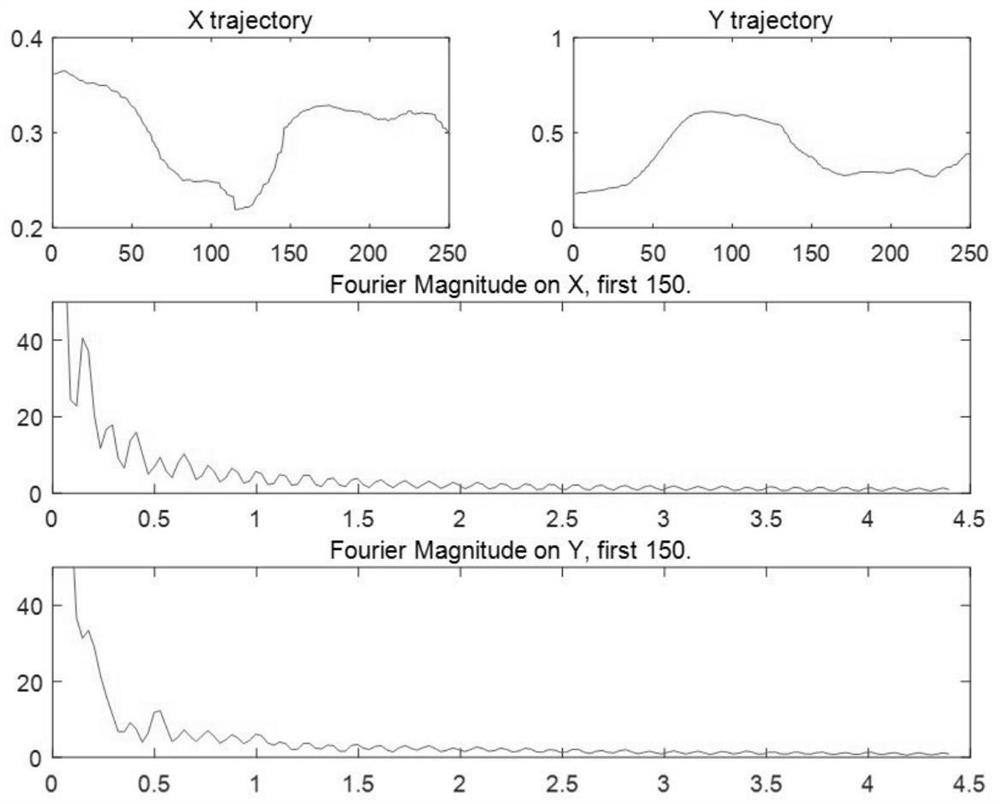

[0036] Human understanding of video content begins with the perception of key objects in the video. By observing the apparent characteristics of the key objects, and by analyzing the movement patterns of individual objects and the relationships between multiple objects, people can easily identify these objects and the context in which an activity is performed. Inspired by the way humans understand video, the present invention proposes a semantic-level video content description method based on an object attribute relationship graph. Following the mechanism by which humans perceive the content of a video scene, the method represents a video as an Object Attribute Relationship Graph (OARG), in which the nodes represent the objects in the video and the edges represent the relationships between objects. The apparent features and motion trajectory features of each object in the video scene are ext...
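The graph structure described in this paragraph can be illustrated with a minimal Python sketch. It is not the patented implementation: the class names `ObjectNode` and `ObjectAttributeRelationGraph`, the field layout, and the `mean_distance` edge attribute are all hypothetical and chosen only for illustration. The source states that nodes carry per-object appearance and trajectory features and that edges carry relationships between objects; it does not say how those relationships are computed, so the average distance between two trajectories is used here purely as a stand-in.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ObjectNode:
    """One object in the video (hypothetical layout)."""
    object_id: int
    appearance: List[float]                 # apparent (appearance) feature vector
    trajectory: List[Tuple[float, float]]   # (x, y) position per frame


def mean_distance(a: ObjectNode, b: ObjectNode) -> float:
    """Placeholder relation feature (an assumption, not from the source):
    average Euclidean distance between two trajectories, frame by frame."""
    pairs = list(zip(a.trajectory, b.trajectory))
    return sum(math.hypot(x1 - x2, y1 - y2) for (x1, y1), (x2, y2) in pairs) / len(pairs)


@dataclass
class ObjectAttributeRelationGraph:
    """Nodes are objects; undirected edges hold relation attributes."""
    nodes: Dict[int, ObjectNode] = field(default_factory=dict)
    edges: Dict[Tuple[int, int], Dict[str, float]] = field(default_factory=dict)

    def add_object(self, node: ObjectNode) -> None:
        self.nodes[node.object_id] = node

    def add_relation(self, id_a: int, id_b: int) -> None:
        # Both endpoints must already be in the graph (KeyError otherwise).
        a, b = self.nodes[id_a], self.nodes[id_b]
        key = (min(id_a, id_b), max(id_a, id_b))  # canonical undirected key
        self.edges[key] = {"mean_distance": mean_distance(a, b)}

    def neighbors(self, object_id: int) -> List[int]:
        """Ids of all objects that share an edge with object_id, sorted."""
        return sorted(b if a == object_id else a
                      for (a, b) in self.edges if object_id in (a, b))


# Toy example: two objects tracked over two frames.
graph = ObjectAttributeRelationGraph()
graph.add_object(ObjectNode(1, [0.2, 0.7], [(0.0, 0.0), (1.0, 0.0)]))
graph.add_object(ObjectNode(2, [0.9, 0.1], [(0.0, 3.0), (1.0, 4.0)]))
graph.add_relation(1, 2)
print(graph.neighbors(1), graph.edges[(1, 2)])
```

Storing each undirected edge under a sorted id pair keeps lookups symmetric, so a relation added as (2, 1) is found under the same key as (1, 2).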

