Action classification method for small-sample videos

A small-sample video technology, applied in the computer field, that addresses problems such as growing computing requirements and improves small-sample video action recognition.


Embodiment Construction

[0027] The present invention is further described below through specific embodiments and accompanying drawings.

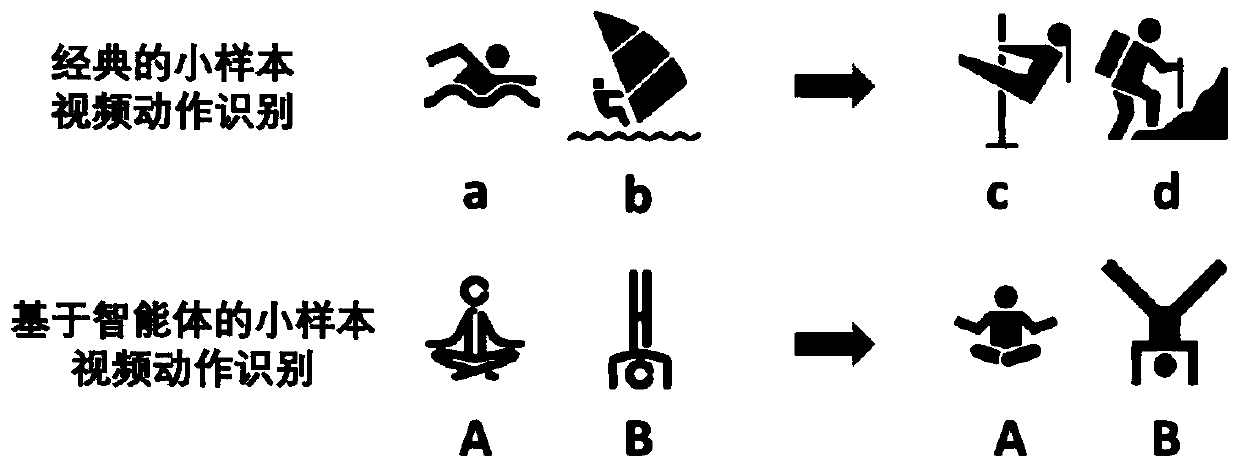

[0028] Figure 1 compares the small-sample video action recognition setting proposed by the present invention, which is based on intelligent human bodies, with the classic setting. Black denotes real-world videos and magenta denotes virtual-world videos. Classic small-sample video action recognition transfers from real training-set videos to real test-set videos of different actions. The small-sample video action recognition proposed here instead transfers from virtual training-set videos, generated from intelligent human bodies, to real test-set videos of the same actions.
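The two settings in Figure 1 differ only in how training and test videos are drawn from the available data. The Python sketch below illustrates this with two split functions. The `Video` record, the `"real"`/`"virtual"` domain tags, and the function names `classic_split` and `virtual_to_real_split` are hypothetical illustrations, not part of the patent, which does not specify a data format here.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Video:
    video_id: str
    action: str  # action label, e.g. "waving"
    domain: str  # hypothetical tag: "real" or "virtual"


Split = Tuple[List[Video], List[Video]]


def classic_split(videos: Iterable[Video],
                  train_actions: Set[str],
                  test_actions: Set[str]) -> Split:
    """Classic setting: real training videos -> real test videos of different actions."""
    if train_actions & test_actions:
        raise ValueError("classic setting requires disjoint train/test action sets")
    videos = list(videos)
    train = [v for v in videos if v.domain == "real" and v.action in train_actions]
    test = [v for v in videos if v.domain == "real" and v.action in test_actions]
    return train, test


def virtual_to_real_split(videos: Iterable[Video], actions: Set[str]) -> Split:
    """Proposed setting: virtual training videos -> real test videos of the same actions."""
    videos = list(videos)
    train = [v for v in videos if v.domain == "virtual" and v.action in actions]
    test = [v for v in videos if v.domain == "real" and v.action in actions]
    return train, test


if __name__ == "__main__":
    pool = [
        Video("r1", "waving", "real"),
        Video("r2", "running", "real"),
        Video("r3", "jumping", "real"),
        Video("v1", "waving", "virtual"),
        Video("v2", "jumping", "virtual"),
    ]
    train, test = classic_split(pool, {"running"}, {"waving", "jumping"})
    print("classic:", [v.video_id for v in train], [v.video_id for v in test])
    train, test = virtual_to_real_split(pool, {"waving", "jumping"})
    print("virtual->real:", [v.video_id for v in train], [v.video_id for v in test])
```

In the classic split, the test actions never appear during training, so the shift is across action classes. In the proposed split, the action classes are shared and the shift is across domains, from generated virtual videos to real footage.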

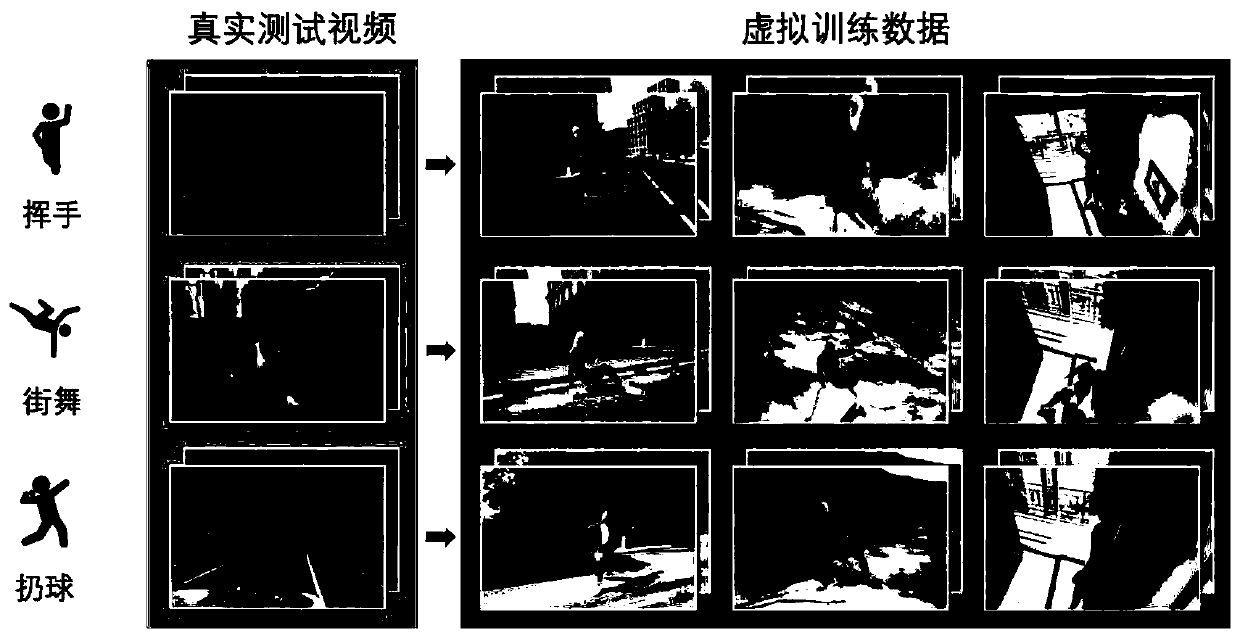

[0029] Figure 2 is a schematic diagram of the real test videos and the corresponding generated virtual training videos of the present invention. The real test videos come from real human actions such as waving, ...
