Human body action recognition method in monitoring based on deep learning and posture estimation

A technology for human action recognition and posture estimation, applied in fields such as character and pattern recognition, computing, and computer components. It addresses the low efficiency of existing monitoring methods, which are time-consuming and labor-intensive, and achieves the effect of improving efficiency and saving costs.

Embodiment Construction

[0042] The specific embodiments of the present invention are described below so that those skilled in the art can understand the present invention. It should be clear, however, that the present invention is not limited to the scope of these specific embodiments. To those of ordinary skill in the art, changes that fall within the spirit and scope of the present invention, as defined and determined by the appended claims, are obvious, and all inventions and creations that use the concept of the present invention fall within the scope of protection.

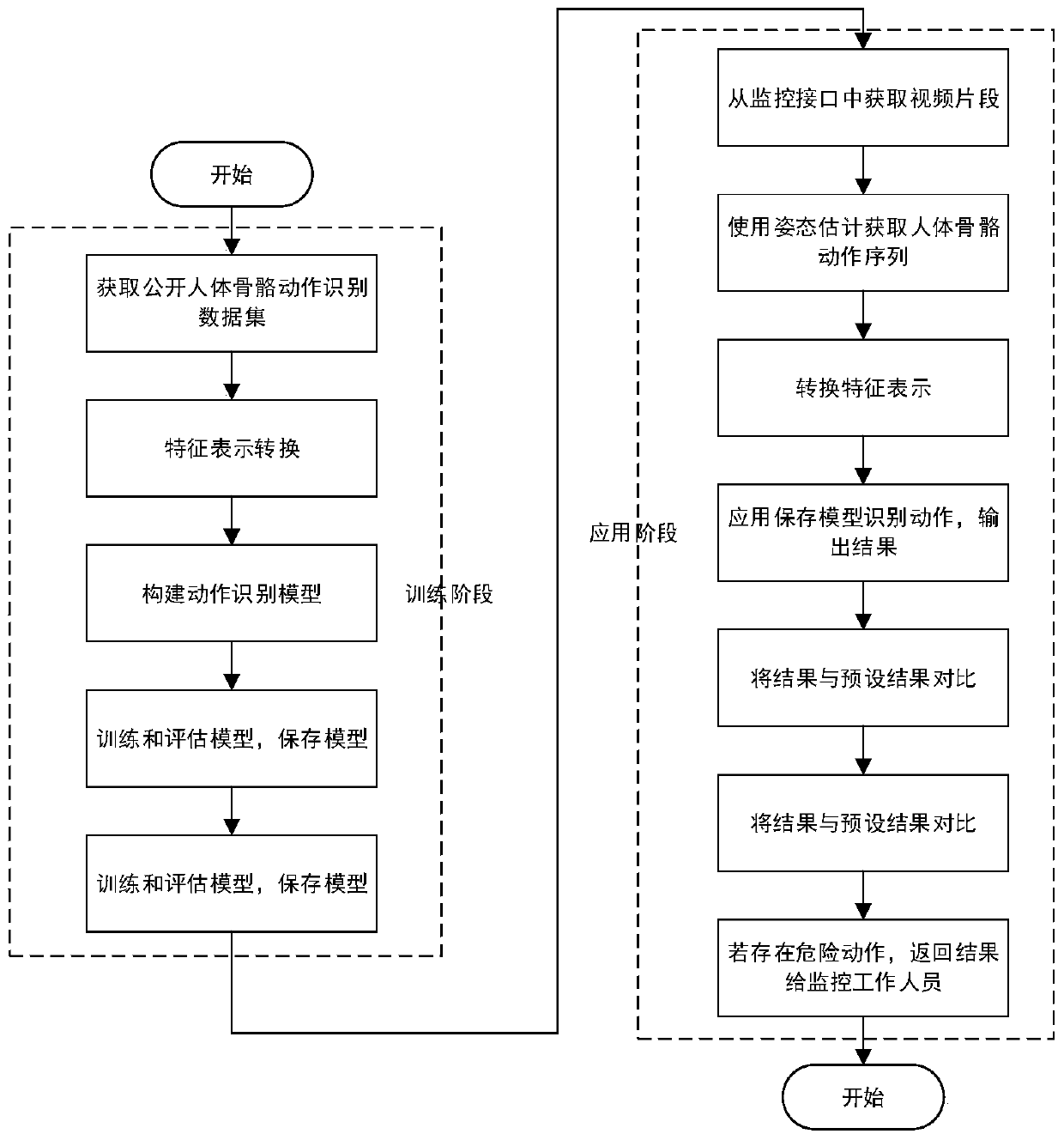

[0043] According to an embodiment of the present application, and referring to Figure 1, the method of this scheme for human action recognition in monitoring based on deep learning and posture estimation includes:

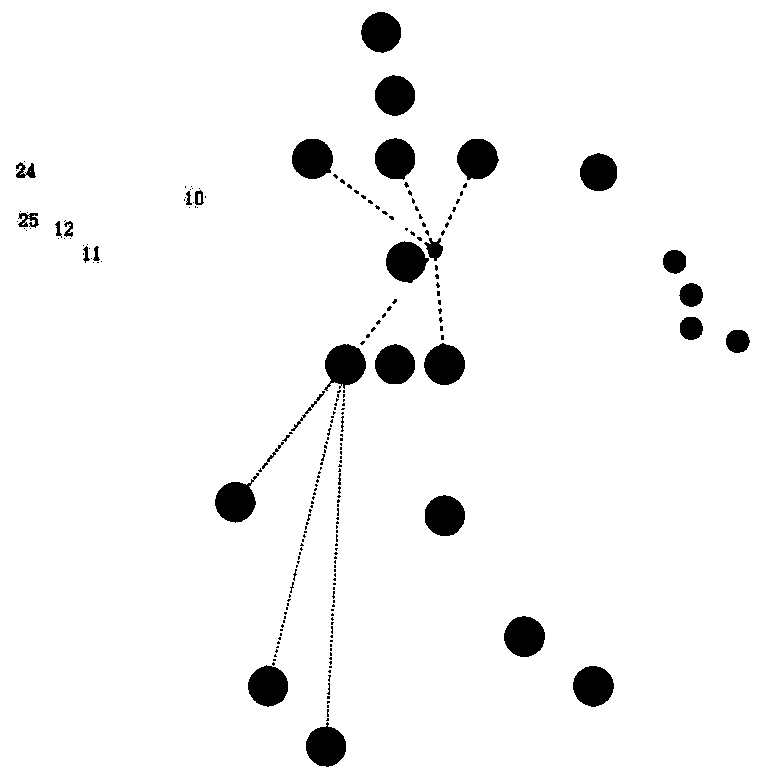

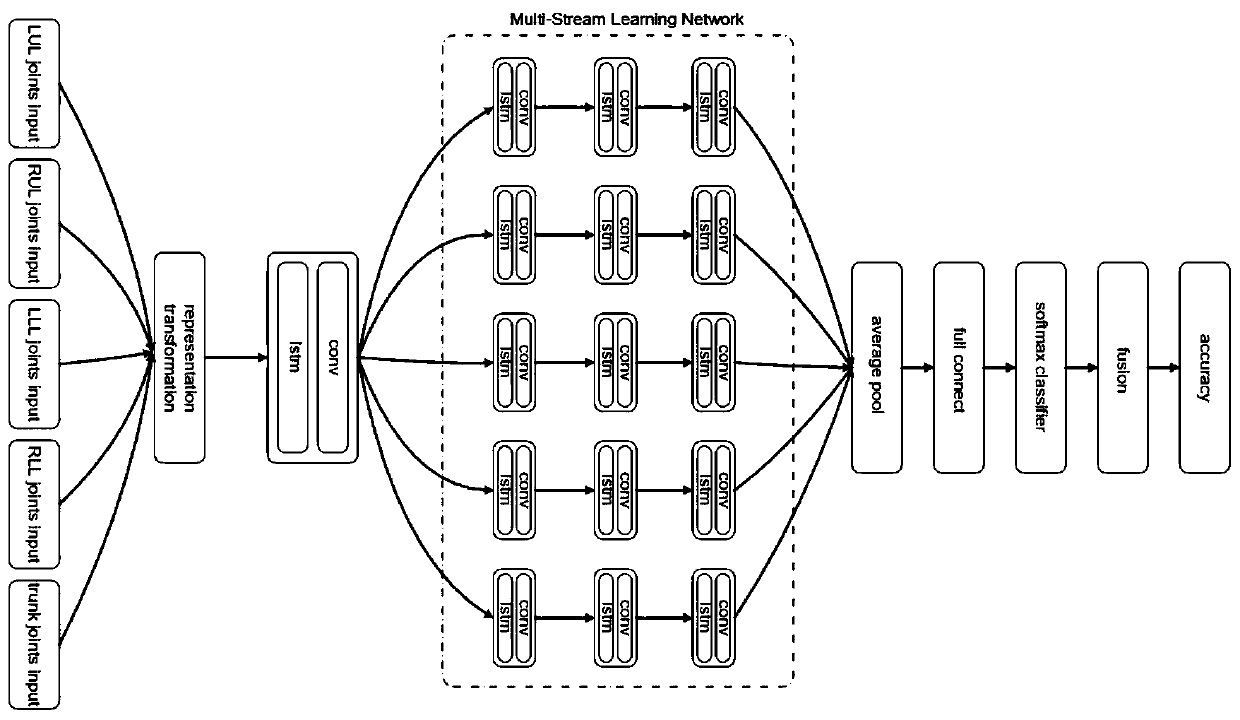

[0044] S1. Construct a multi-stream action recognition model based on a joint feature representation of relative body parts;

[0045] S2. Preprocess the human skeleton movement data and convert the joint feature ...
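The source text is truncated here, so the exact feature conversion is not available. As a rough illustration only, the sketch below shows one possible reading of a "relative part" joint representation: each body part's joints are expressed as offsets from a reference joint of that part, and each part yields its own feature sequence that could feed one stream of a multi-stream model. The joint indices, the `PARTS` grouping, and the function names `relative_part_features` and `to_streams` are all hypothetical and do not come from the patent.

```python
from typing import Dict, List, Sequence, Tuple

Joint = Tuple[float, float]   # (x, y) coordinates of one joint from a pose estimator
Frame = Sequence[Joint]       # one skeleton: joints indexed by joint id

# Hypothetical part grouping: part name -> (reference joint id, member joint ids).
# These indices are illustrative assumptions, not taken from the source.
PARTS: Dict[str, Tuple[int, List[int]]] = {
    "left_arm": (0, [1, 2]),
    "right_arm": (3, [4, 5]),
}


def relative_part_features(frame: Frame) -> Dict[str, List[float]]:
    """Express each part's joints as offsets from that part's reference joint."""
    features: Dict[str, List[float]] = {}
    for part, (ref_id, member_ids) in PARTS.items():
        ref_x, ref_y = frame[ref_id]
        offsets: List[float] = []
        for joint_id in member_ids:
            x, y = frame[joint_id]
            offsets.extend([x - ref_x, y - ref_y])
        features[part] = offsets
    return features


def to_streams(frames: Sequence[Frame]) -> Dict[str, List[List[float]]]:
    """Build one feature sequence (stream) per body part across all frames."""
    streams: Dict[str, List[List[float]]] = {part: [] for part in PARTS}
    for frame in frames:
        for part, vec in relative_part_features(frame).items():
            streams[part].append(vec)
    return streams
```

Under these assumptions, `to_streams` returns one list of per-frame vectors for each part, so every stream sees only the motion of its own part relative to that part's reference joint.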
