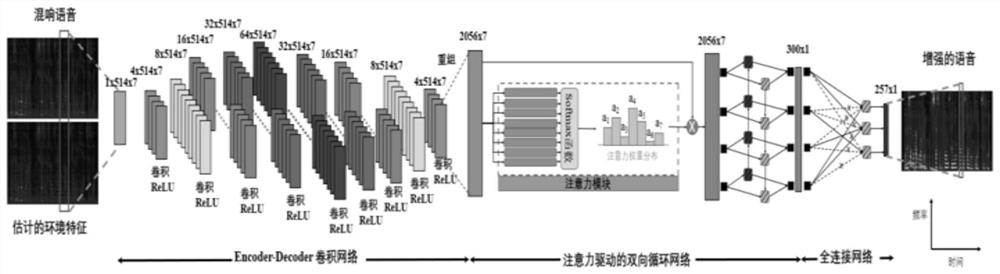

Environment Adaptive Speech Enhancement Algorithm Based on Attention-Driven Recurrent Convolutional Network

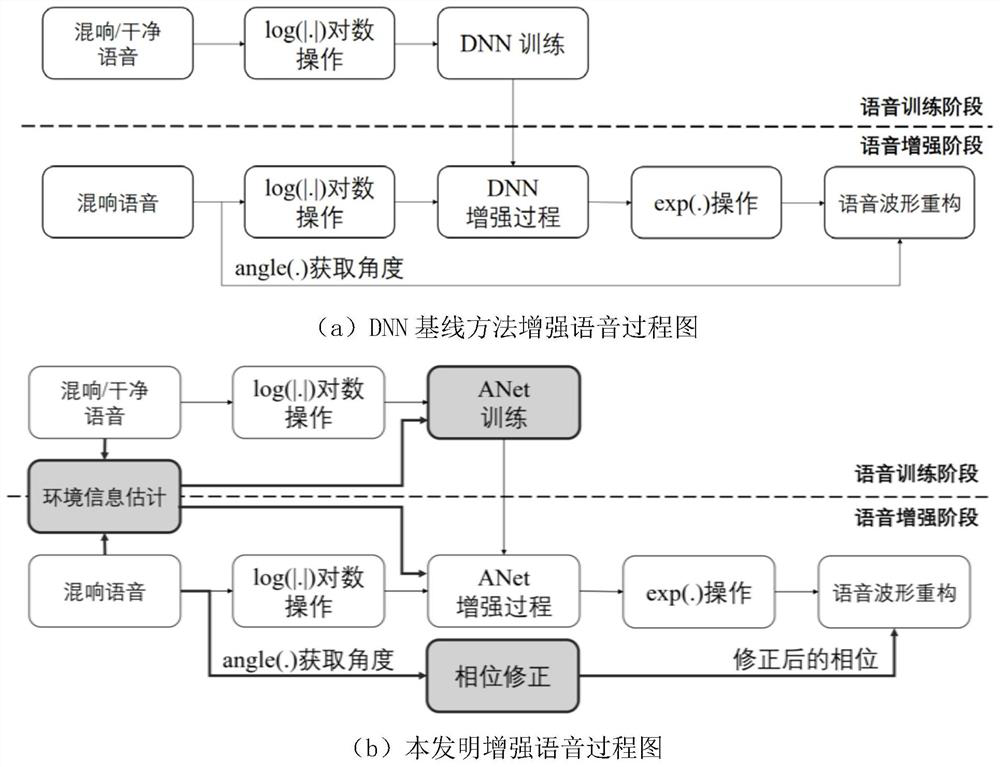

A speech enhancement technology based on a recurrent convolutional network, applied in speech analysis, instruments, etc. It addresses the difficulty speech enhancement models have in adapting to different noise environments, and achieves the effects of enriching the acquired information, improving robustness, and improving speech enhancement performance.


Embodiment Construction

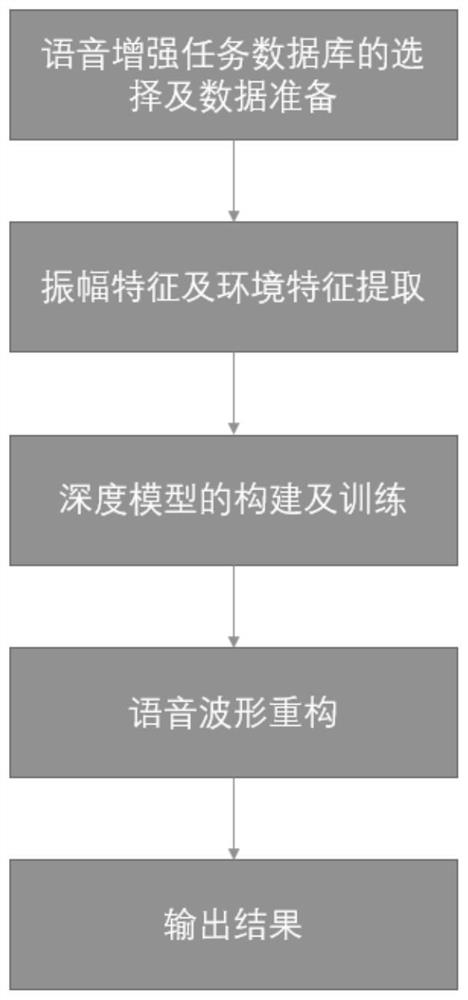

[0042] To aid understanding of the technical solution of the present invention, the invention is described in further detail below with reference to the accompanying drawings and specific embodiments.

[0043] Figure 1 is a framework diagram of the environment-adaptive speech enhancement algorithm based on the attention-driven recurrent convolutional network of the present invention. The algorithm mainly includes the following steps:

[0044] Step 1, input data preparation: to verify the effect of the present invention, a speech enhancement experiment is carried out on the REVERB Challenge 2014 database. All sentences in REVERB Challenge 2014 are sampled at 16 kHz.

[0045] Step 2, amplitude feature and environment feature extraction:

[0046] 1) Amplitude feature extraction: each segment of the speech signal is pre-emphasized, framed, windowed, and transformed by a fast Fourier transform (FFT). The number of FFT points is set to 512, the window length to 512, and the window shift to 256...
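As an illustration, the amplitude feature extraction in 1) can be sketched with NumPy as follows. The FFT size (512), window length (512), and window shift (256) come from the text; the pre-emphasis coefficient (0.97) and the Hamming window are assumptions, because the excerpt is truncated before those details are given, and the function name `amplitude_features` is hypothetical. This is a sketch under those assumptions, not the patent's own implementation.

```python
import numpy as np

# Parameters stated in the text.
N_FFT = 512        # number of FFT points
WIN_LENGTH = 512   # window length in samples
HOP_LENGTH = 256   # window shift in samples
# Assumption: the pre-emphasis coefficient is not given in the excerpt.
PRE_EMPHASIS = 0.97


def amplitude_features(signal: np.ndarray) -> np.ndarray:
    """Hypothetical helper: return a (num_frames, N_FFT // 2 + 1) magnitude spectrogram."""
    x = np.asarray(signal, dtype=np.float64)
    # Pre-emphasis: y[n] = x[n] - a * x[n-1], boosting high frequencies.
    emphasized = np.append(x[0], x[1:] - PRE_EMPHASIS * x[:-1])
    # Zero-pad short signals so that at least one full frame exists.
    if len(emphasized) < WIN_LENGTH:
        emphasized = np.pad(emphasized, (0, WIN_LENGTH - len(emphasized)))
    num_frames = 1 + (len(emphasized) - WIN_LENGTH) // HOP_LENGTH
    # Framing: row i holds samples [i * HOP_LENGTH, i * HOP_LENGTH + WIN_LENGTH).
    idx = np.arange(WIN_LENGTH)[None, :] + HOP_LENGTH * np.arange(num_frames)[:, None]
    frames = emphasized[idx]
    # Windowing (assumption: Hamming window).
    frames = frames * np.hamming(WIN_LENGTH)
    # FFT per frame; rfft keeps the N_FFT // 2 + 1 non-negative frequency bins.
    spectrum = np.fft.rfft(frames, n=N_FFT, axis=1)
    # Amplitude (magnitude) feature.
    return np.abs(spectrum)
```

For a 1 s utterance at 16 kHz (16000 samples), this yields 61 frames of 257 magnitude bins. At this sampling rate each 512-sample window spans 32 ms, the 256-sample shift is 16 ms (50% overlap), and adjacent frequency bins are 16000 / 512 = 31.25 Hz apart.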

