Multimodal fusion human-computer interaction method, device, storage medium, terminal and system

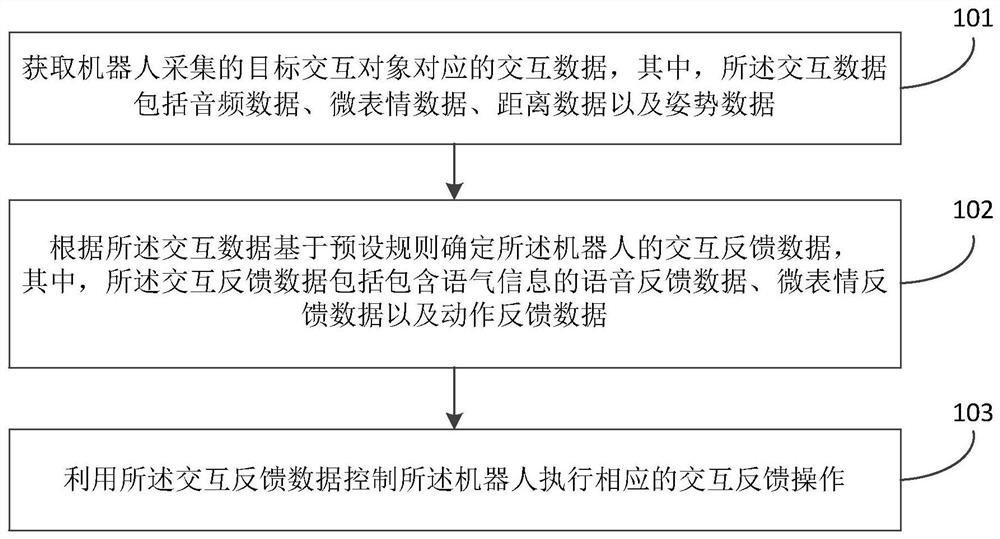

A multi-modal human-computer interaction technology, applied in the computer field, that solves the problems of rigid interaction and monotonous feedback forms. It makes the robot's feedback more reasonable and humanized, enriches the forms of feedback, and improves the human-computer interaction experience.


Examples

Embodiment 2

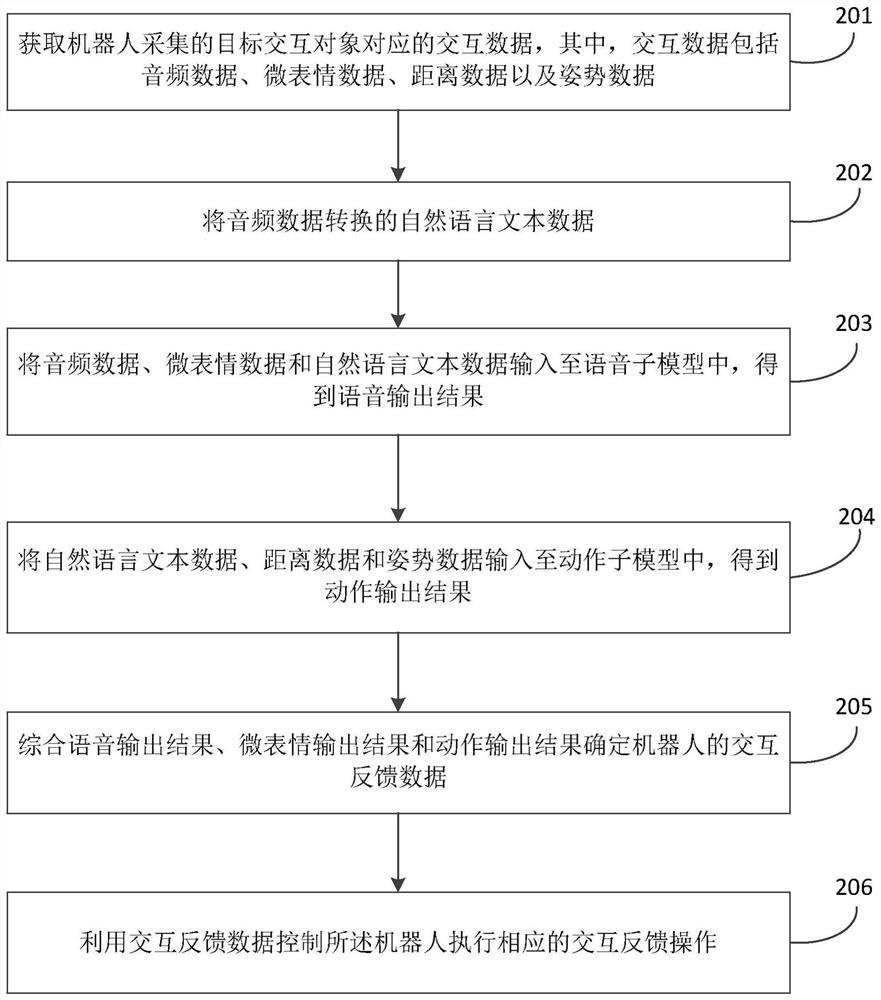

[0047] Figure 2 is a schematic flowchart of a multi-modal fusion human-computer interaction method provided by Embodiment 2 of the present invention. The method is optimized on the basis of the above embodiment, and the preset multi-modal fusion model is divided into multiple sub-models.

[0048] Exemplarily, the preset multimodal fusion model includes multiple sub-models, and inputting the interaction data into the preset multimodal fusion model and determining the interaction feedback data of the robot according to the model's output result includes: extracting, from the interaction data, sub-sample data corresponding to each of the sub-models; inputting each piece of sub-sample data into its corresponding sub-model to obtain a plurality of sub-output results; and determining the interaction feedback data of the robot according to the sub-output results. The advantage of this setting is that the output of each sub-model is more targeted, and, due to th...
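The sub-model flow in [0048] can be illustrated with a minimal Python sketch. It assumes the four modalities listed in Embodiment 4 (audio, micro-expression, distance, posture) as input fields; every sub-model, rule, threshold, and feedback key below (`voice_model`, `proximity_model`, `"step_back"`, and so on) is hypothetical, since the patent does not disclose how its sub-models are implemented. The sketch only shows the structure: split the data into sub-samples, run each through its own sub-model, then merge the sub-output results.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


# Hypothetical container for the four modalities named in Embodiment 4.
# Field types and units are assumptions for illustration.
@dataclass
class InteractionData:
    audio: List[float]        # e.g. per-frame audio energy (assumed)
    micro_expression: str     # e.g. a detected expression label (assumed)
    distance_m: float         # distance to the target object in metres (assumed)
    posture: str              # e.g. a posture label (assumed)


# A hypothetical sub-model maps its own sub-sample to a partial feedback dict.
SubModel = Callable[[object], Dict[str, str]]


def extract_sub_samples(data: InteractionData) -> Dict[str, object]:
    """Split the interaction data into one sub-sample per sub-model."""
    return {
        "voice": data.audio,
        "expression": data.micro_expression,
        "proximity": data.distance_m,
        "posture": data.posture,
    }


def voice_model(audio) -> Dict[str, str]:
    # Stand-in rule: energetic input gets a calm tone, otherwise a cheerful one.
    mean_energy = sum(audio) / len(audio) if audio else 0.0
    return {"tone": "calm" if mean_energy > 0.5 else "cheerful"}


def expression_model(label) -> Dict[str, str]:
    return {"expression": "comforting" if label == "sad" else "smiling"}


def proximity_model(distance_m) -> Dict[str, str]:
    return {"movement": "step_back" if distance_m < 0.5 else "stay"}


def posture_model(label) -> Dict[str, str]:
    return {"gesture": "wave" if label == "facing_robot" else "idle"}


SUB_MODELS: Dict[str, SubModel] = {
    "voice": voice_model,
    "expression": expression_model,
    "proximity": proximity_model,
    "posture": posture_model,
}


def determine_feedback(data: InteractionData) -> Dict[str, str]:
    """Run each sub-model on its sub-sample and merge the sub-output results."""
    sub_samples = extract_sub_samples(data)
    feedback: Dict[str, str] = {}
    for name, model in SUB_MODELS.items():
        feedback.update(model(sub_samples[name]))
    return feedback


if __name__ == "__main__":
    data = InteractionData(audio=[0.8, 0.9], micro_expression="sad",
                           distance_m=0.3, posture="facing_robot")
    print(determine_feedback(data))
    # -> {'tone': 'calm', 'expression': 'comforting', 'movement': 'step_back', 'gesture': 'wave'}
```

Keeping the sub-models in a name-keyed table makes the one-to-one pairing between sub-samples and sub-models explicit, and a sub-model can be replaced without touching the merge step.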

Embodiment 3

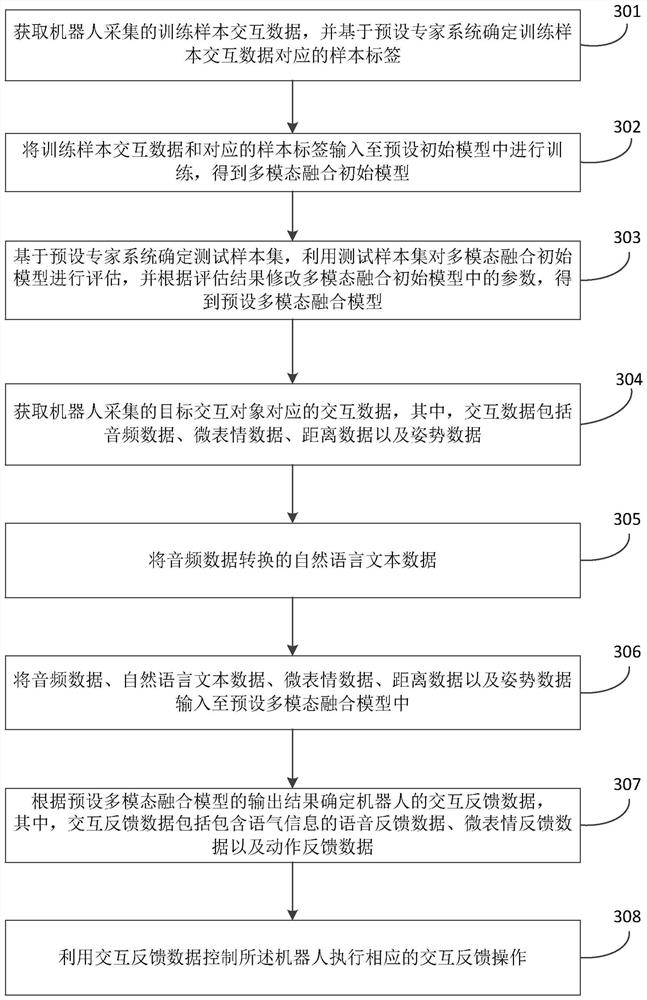

[0068] Figure 3 is a schematic flowchart of a multi-modal fusion human-computer interaction method provided by Embodiment 3 of the present invention. The method is optimized on the basis of the above embodiment, and content related to model training is added.

[0069] Exemplarily, before acquiring the interaction data corresponding to the target interactive object collected by the robot, the method further includes: acquiring training-sample interaction data collected by the robot, and determining, based on a preset expert system, the sample label corresponding to the training-sample interaction data; and inputting the training-sample interaction data and the corresponding sample labels into a preset initial model for training to obtain the preset multi-modal fusion model. The advantage of this setting is that the preset expert system allows the training sample set to be constructed more reasonably.
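A minimal sketch of the training step in [0069], under stated assumptions: the preset expert system is stood in for by a few hand-written rules (`expert_label`), and the preset initial model is replaced by a simple nearest-centroid classifier over two assumed numeric features. Neither choice comes from the patent, which does not specify the expert rules or the model type; the sketch only shows how expert-assigned labels can supervise training.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

# Assumed feature vector per training sample: (mean audio energy, distance in metres).
Features = Tuple[float, float]


def expert_label(sample: Features) -> str:
    """Hypothetical stand-in for the preset expert system: fixed rules assign a label."""
    energy, distance = sample
    if distance < 0.5:
        return "keep_distance"
    if energy > 0.6:
        return "soothe"
    return "engage"


def train_nearest_centroid(samples: Sequence[Features],
                           labels: Sequence[str]) -> Dict[str, Features]:
    """Stand-in for training the preset initial model: one mean feature vector per label."""
    sums: Dict[str, List[float]] = defaultdict(lambda: [0.0, 0.0])
    counts: Dict[str, int] = defaultdict(int)
    for (energy, distance), label in zip(samples, labels):
        sums[label][0] += energy
        sums[label][1] += distance
        counts[label] += 1
    return {lbl: (s[0] / counts[lbl], s[1] / counts[lbl]) for lbl, s in sums.items()}


def predict(model: Dict[str, Features], sample: Features) -> str:
    """Return the label whose centroid is nearest (squared Euclidean distance)."""
    def sq_dist(centroid: Features) -> float:
        return (centroid[0] - sample[0]) ** 2 + (centroid[1] - sample[1]) ** 2
    return min(model, key=lambda lbl: sq_dist(model[lbl]))


if __name__ == "__main__":
    training_samples = [(0.9, 1.5), (0.8, 2.0), (0.2, 1.8), (0.3, 2.5), (0.5, 0.3), (0.1, 0.2)]
    sample_labels = [expert_label(s) for s in training_samples]  # expert-assigned labels
    fusion_model = train_nearest_centroid(training_samples, sample_labels)
    print(predict(fusion_model, (0.85, 1.7)))  # -> soothe
```

Swapping in a neural network or any other classifier would not change the flow: the expert system supplies the labels, and the initial model is fit to them.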

[0070] Further, inputting the training sample int...

Embodiment 4

[0090] Figure 7 is a structural block diagram of a multi-modal fusion human-computer interaction device provided in Embodiment 4 of the present invention. The device can be implemented in software and/or hardware, can generally be integrated in a terminal, and performs human-computer interaction by executing the multi-modal fusion human-computer interaction method. As shown in Figure 7, the device includes:

[0091] The interaction data acquisition module 701 is configured to acquire the interaction data corresponding to the target interactive object collected by the robot, wherein the interaction data includes audio data, micro-expression data, distance data and posture data;

[0092] An interaction feedback data determination module 702, configured to determine the interaction feedback data of the robot based on preset rules according to the interaction data, wherein the interaction feedback data includes voice feedback data including tone information, mic...
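The module structure of Embodiment 4 can be sketched as two Python classes composed into one device. The sensor read-out callable, the preset rules, and the spoken phrases are all hypothetical. Because the list of feedback types in [0092] is cut off after the voice feedback, the sketch models only voice feedback carrying tone information.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class InteractionData:
    # The four modalities named in [0091]; field types are assumptions.
    audio: List[float]
    micro_expression: str
    distance_m: float
    posture: str


@dataclass
class VoiceFeedback:
    text: str
    tone: str  # tone information, as named for module 702


class InteractionDataAcquisitionModule:
    """Module 701 (sketch): wraps a hypothetical robot sensor read-out callable."""

    def __init__(self, read_sensors: Callable[[], InteractionData]):
        self._read_sensors = read_sensors

    def acquire(self) -> InteractionData:
        return self._read_sensors()


class InteractionFeedbackDataDeterminationModule:
    """Module 702 (sketch): applies hypothetical preset rules to the interaction data."""

    def determine(self, data: InteractionData) -> VoiceFeedback:
        if data.micro_expression == "sad":
            return VoiceFeedback(text="Is everything all right?", tone="gentle")
        if data.distance_m > 2.0:
            return VoiceFeedback(text="Come a little closer!", tone="lively")
        return VoiceFeedback(text="Hello!", tone="neutral")


class MultimodalInteractionDevice:
    """Composes modules 701 and 702, mirroring the block diagram of Figure 7."""

    def __init__(self, acquisition: InteractionDataAcquisitionModule,
                 determination: InteractionFeedbackDataDeterminationModule):
        self.acquisition = acquisition
        self.determination = determination

    def step(self) -> VoiceFeedback:
        """Acquire one round of interaction data and return the robot's feedback."""
        return self.determination.determine(self.acquisition.acquire())


if __name__ == "__main__":
    fake_sensors = lambda: InteractionData([0.1], "sad", 1.0, "standing")
    device = MultimodalInteractionDevice(
        InteractionDataAcquisitionModule(fake_sensors),
        InteractionFeedbackDataDeterminationModule(),
    )
    print(device.step().tone)  # -> gentle
```

Injecting the sensor read-out as a callable keeps module 701 independent of any particular robot SDK, so it can be exercised with fake data as in the usage above.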

