Target detection method and system based on lightweight deformable convolution

A lightweight target detection technology, applied in the field of target detection, that addresses the problems of changes in the apparent characteristics of object instances, the resulting difficulty for visual recognition algorithms, and increased computation. Its effects are to reduce the amount of computation, improve recognition accuracy, and reduce the processing burden.


Examples

Embodiment 1

[0039] In one or more embodiments, a new target detection method based on a deep learning framework for feature learning is disclosed, including:

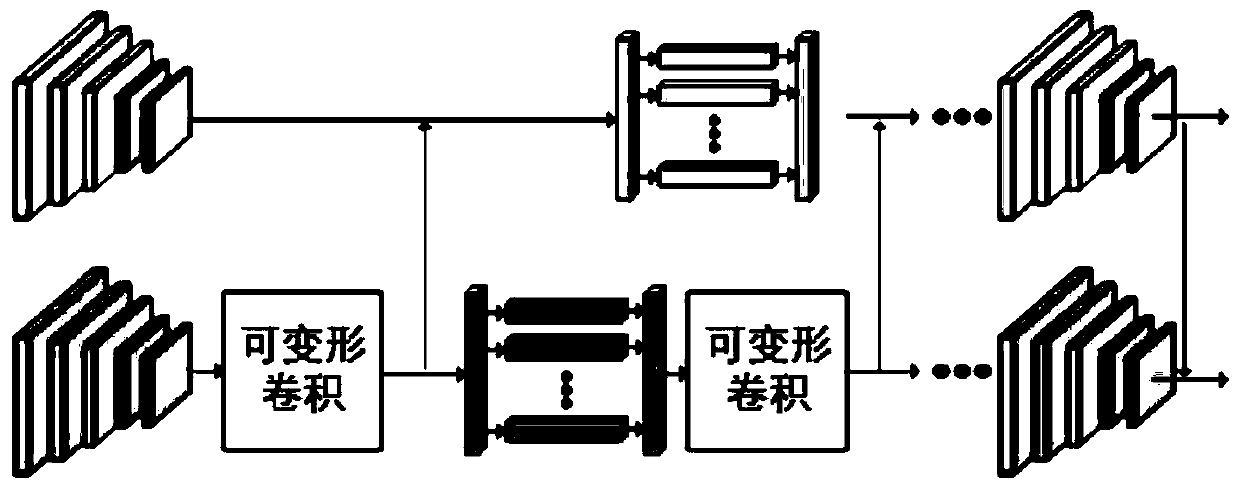

[0040] (1) Two modules, deformable convolution and deformable ROI pooling, are used to replace one or more 3×3 convolutional layers and pooling layers in existing networks. These include traditional feature extraction networks such as VGG, GoogLeNet, ResNet, DenseNet and ResNeXt, and lightweight feature extraction networks such as MobileNet and ShuffleNet. The result is a depthwise-separable feature extraction network that serves as the source network.
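To illustrate what replacing a 3×3 convolution with a deformable one involves, the following is a minimal NumPy sketch of deformable sampling for a single channel. It is not the implementation of this embodiment: the function names `bilinear_sample` and `deformable_conv3x3` are hypothetical, and the offsets are passed in as an array, whereas in a deformable convolution layer they would normally be predicted by an additional convolution over the same input.

```python
import numpy as np

def bilinear_sample(img, y, x):
    """Sample img (H, W) at a fractional position (y, x).
    Positions outside the image contribute 0 (zero padding)."""
    h, w = img.shape
    y0, x0 = int(np.floor(y)), int(np.floor(x))
    value = 0.0
    for yi in (y0, y0 + 1):
        for xi in (x0, x0 + 1):
            if 0 <= yi < h and 0 <= xi < w:
                # Bilinear weight falls off linearly with distance to the pixel.
                weight = (1.0 - abs(y - yi)) * (1.0 - abs(x - xi))
                value += weight * img[yi, xi]
    return value

def deformable_conv3x3(img, kernel, offsets):
    """Single-channel 3x3 deformable convolution, stride 1, zero padding.

    img:     (H, W) input feature map
    kernel:  (3, 3) weights
    offsets: (H, W, 9, 2) per-position, per-tap (dy, dx) shifts
             (assumed given here; hypothetical layout)
    """
    h, w = img.shape
    out = np.zeros((h, w), dtype=float)
    # Regular 3x3 sampling grid, row by row.
    taps = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for k, (dy, dx) in enumerate(taps):
                oy, ox = offsets[i, j, k]
                # Each tap reads from its regular position plus its own offset.
                acc += kernel[dy + 1, dx + 1] * bilinear_sample(
                    img, i + dy + oy, j + dx + ox
                )
            out[i, j] = acc
    return out
```

With all offsets set to zero the sketch reduces to an ordinary 3×3 convolution, which is why a deformable layer can stand in for a standard one at the same position in the network.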

[0041] In this embodiment, the feature extraction network used is MobileNet v1, which serves as the base network of both the source network and the target network. Its specific network structure is as follows:

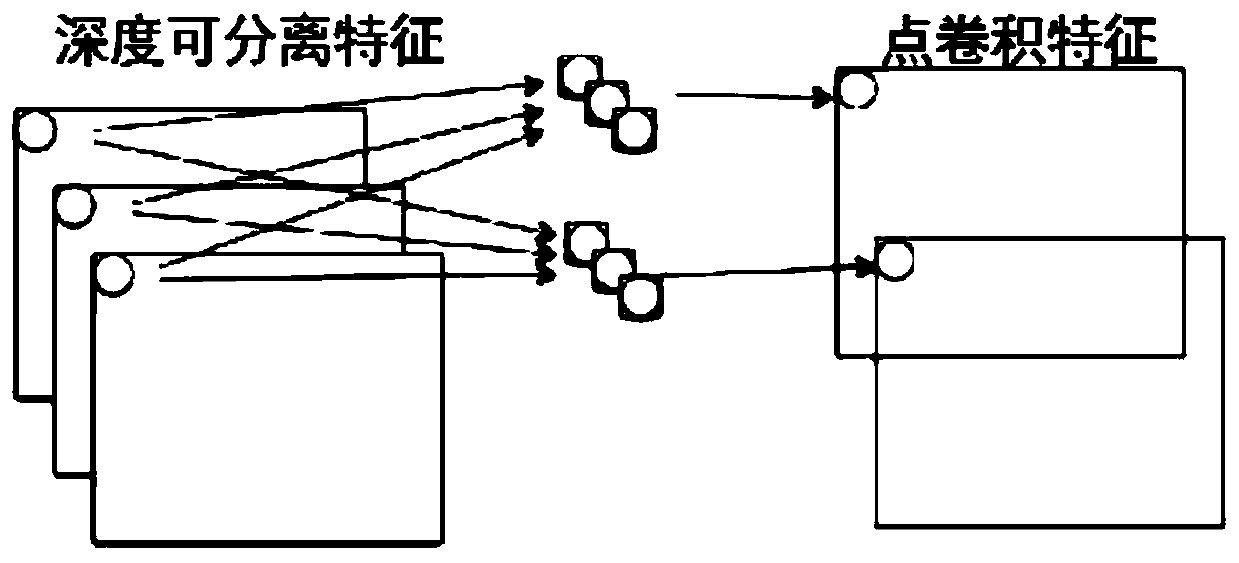

[0042] The core of MobileNet v1 is to split a standard convolution into two parts: a depthwise convolution followed by a pointwise (1×1) convolution.

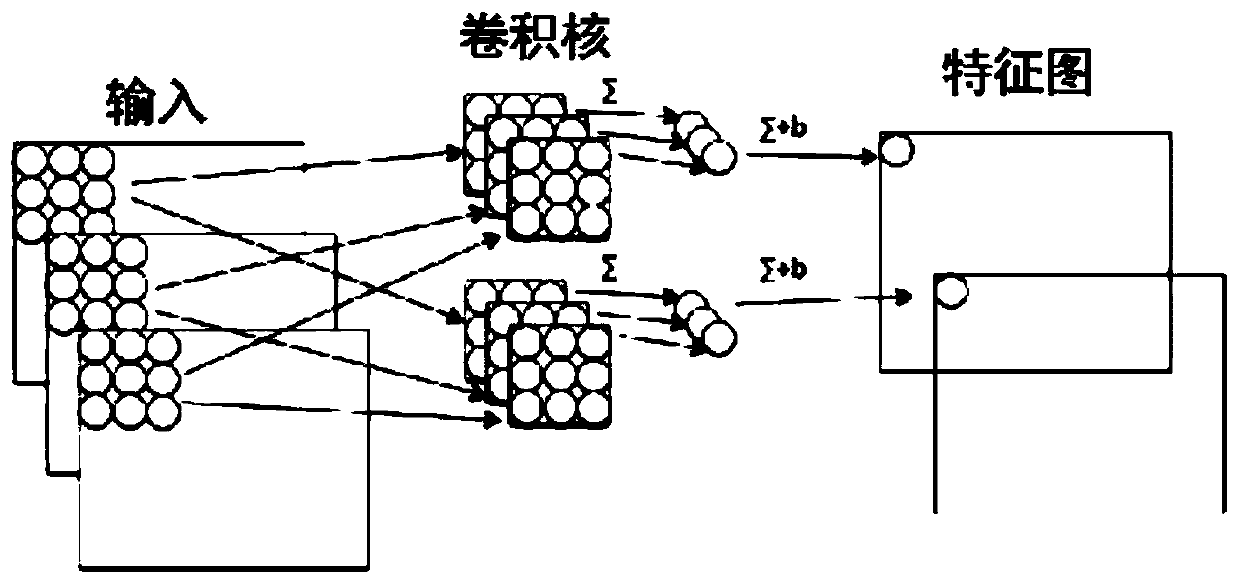
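The depthwise plus pointwise split can be sketched in NumPy as follows. This is an illustrative sketch rather than the network of this embodiment: the function names are hypothetical, a single image of shape (H, W, C) is assumed, stride is 1 with zero padding, and the batch normalization and activation that usually follow each step are omitted.

```python
import numpy as np

def depthwise_conv(x, dw_kernels):
    """Depthwise step: one 3x3 filter per input channel, no channel mixing.
    x: (H, W, C), dw_kernels: (3, 3, C). Zero padding, stride 1."""
    h, w, c = x.shape
    padded = np.pad(x, ((1, 1), (1, 1), (0, 0)))
    out = np.zeros((h, w, c), dtype=float)
    for dy in range(3):
        for dx in range(3):
            # Shifted view of the input, scaled per channel by that tap's weight.
            out += padded[dy:dy + h, dx:dx + w, :] * dw_kernels[dy, dx, :]
    return out

def pointwise_conv(x, pw_weights):
    """Pointwise step: a 1x1 convolution that mixes channels.
    x: (H, W, C), pw_weights: (C, M) -> output (H, W, M)."""
    return x @ pw_weights

def depthwise_separable_conv(x, dw_kernels, pw_weights):
    """Depthwise convolution followed by pointwise convolution."""
    return pointwise_conv(depthwise_conv(x, dw_kernels), pw_weights)

def param_counts(k, c_in, c_out):
    """Weight counts (ignoring biases) for a standard k x k convolution
    versus its depthwise separable replacement."""
    standard = k * k * c_in * c_out
    separable = k * k * c_in + c_in * c_out
    return standard, separable
```

For example, `param_counts(3, 32, 64)` gives 18432 weights for the standard convolution and 2336 for the separable version, which shows where the reduction in computation comes from.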

[0043] In order to explain Mobilenet v1, assume that there is an input of N*H*W*C, N represent...
