Video content identification method and device, storage medium and electronic equipment

A video content identification method, applied in selective content distribution, electrical components, image communication, etc. It addresses the inability to identify video segments that receive low viewer concentration, and achieves the effect of improving learning efficiency.

Examples

Embodiment 1

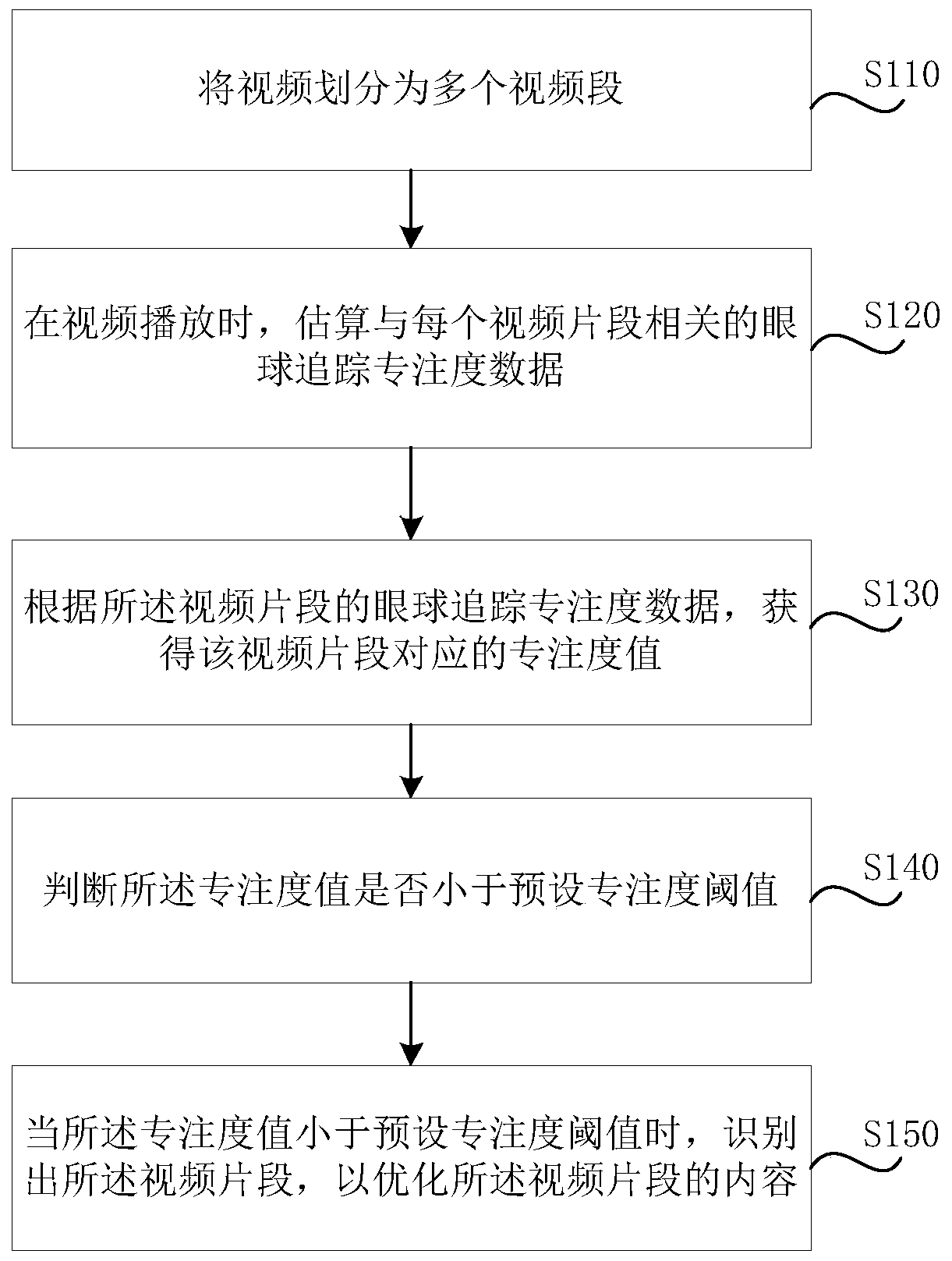

[0043] Figure 1 is a schematic flowchart of a method for identifying video content provided by an embodiment of the present application. As shown in Figure 1, the method includes:

[0044] Step S110: Divide the video into multiple video segments.

[0045] Specifically, the video is divided at equal time intervals into multiple video segments.

[0046] The segment duration is preset manually according to the actual duration of the video.
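Step S110 can be illustrated with a minimal Python sketch, assuming that durations are given in seconds and that a final, shorter segment is kept when the video length is not an exact multiple of the segment duration. The function name `split_into_segments` and both parameters are hypothetical and do not come from the application.

```python
import math


def split_into_segments(video_duration_s, segment_duration_s):
    """Return (start, end) time pairs, in seconds, that cover the whole video.

    Assumption: the last segment may be shorter than the others when the
    video duration is not an exact multiple of the segment duration.
    """
    if video_duration_s <= 0 or segment_duration_s <= 0:
        raise ValueError("durations must be positive")
    count = math.ceil(video_duration_s / segment_duration_s)
    segments = []
    for i in range(count):
        start = i * segment_duration_s
        end = min((i + 1) * segment_duration_s, video_duration_s)
        segments.append((start, end))
    return segments


# A 10-minute video with a manually preset 2-minute segment duration.
segments = split_into_segments(600, 120)
```

Computing each boundary from the segment index, rather than accumulating a running start time, avoids drift when fractional durations are used.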

[0047] Step S120: When the video is playing, acquire eye tracking concentration data related to each video segment.

[0048] Specifically, the display area of the display screen on which the video is played is partitioned into at least two sub-regions; the sub-regions that contain video content are selected as effective sub-regions; eye tracking technology is used to acquire the user's eye tracking information within the effective sub-regions; according to the eye tracking information, t...

Embodiment 2

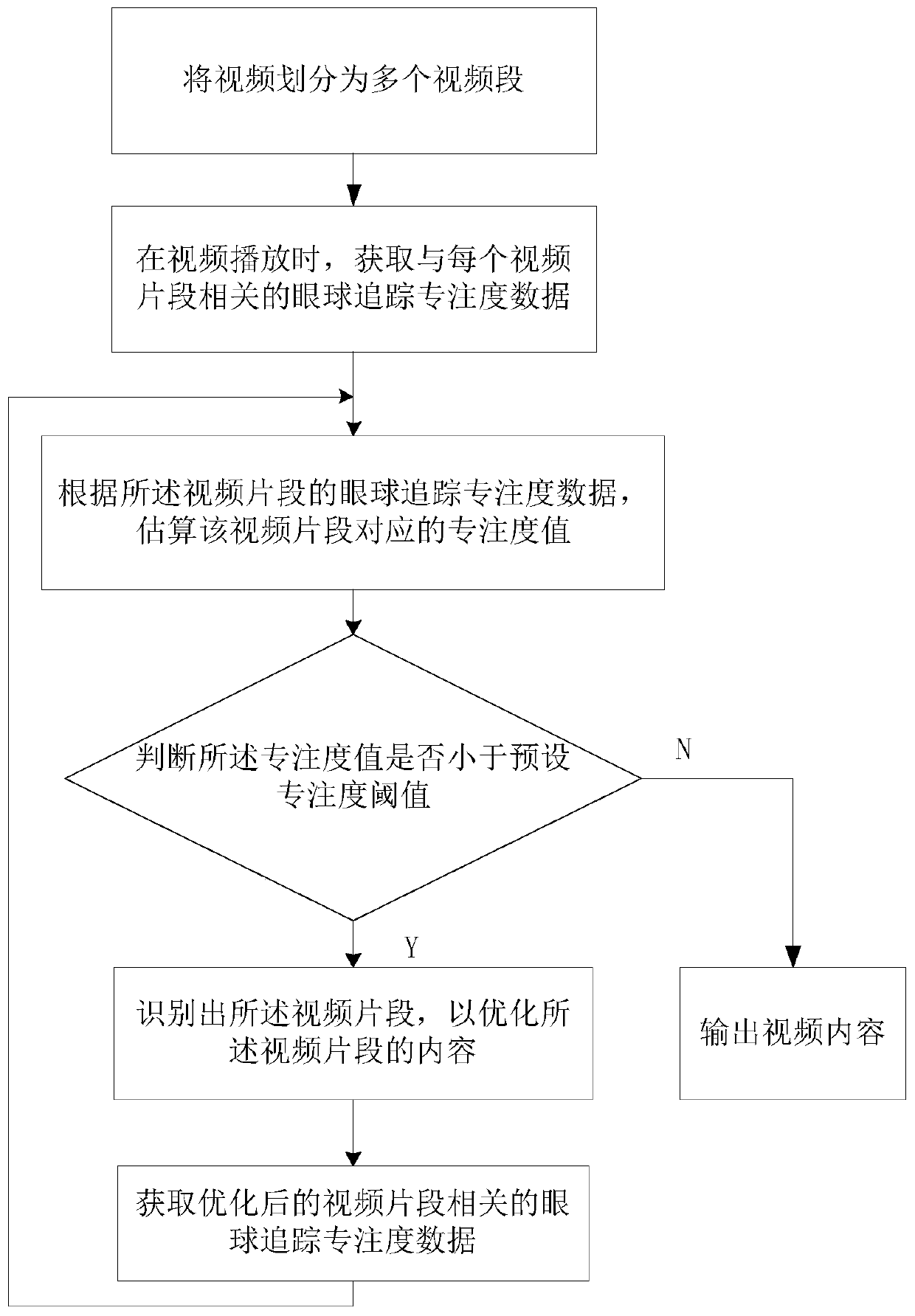

[0070] Figure 3 is another schematic flowchart of a method for identifying video content provided by an embodiment of the present application.

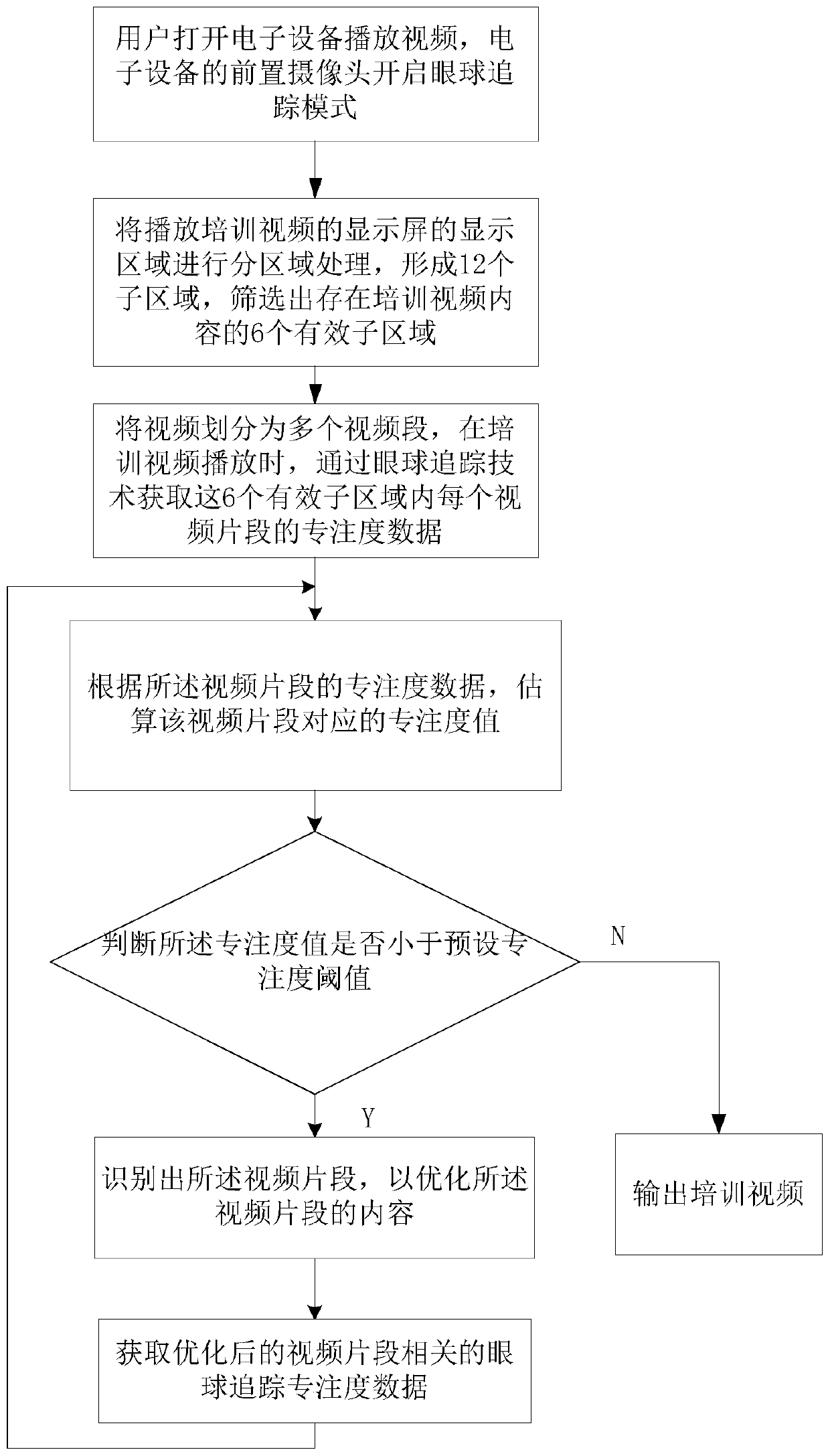

[0071] As shown in Figure 3, the user turns on the electronic device to play the video, and the front camera of the electronic device enters eye tracking mode. The display area of the display screen on which the training video is played is divided into 12 sub-regions, and the 6 sub-regions that contain training video content are selected as effective sub-regions. The video is divided into multiple video segments. When the training video is played, the concentration data of each video segment within the six effective sub-regions is obtained through eye tracking technology.

[0072] The eye tracking concentration data of each video segment undergoes visual processing, and the concentration value corresponding to that video segment is estimated from the result.

[0073] Specifically, visual processing means removing some abnormal data that is too ...
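The sub-region step can be sketched as follows. The application does not state how the 12 sub-regions are laid out or what form the concentration data takes, so this sketch assumes a 3 x 4 row-major grid and uses the fraction of gaze samples that land in an effective sub-region as a stand-in measure. The names `subregion_index` and `gaze_fraction_in_effective`, and the choice of effective indices, are hypothetical.

```python
def subregion_index(x, y, width, height, rows=3, cols=4):
    """Map a gaze point in pixels to a row-major sub-region index.

    Returns None when the point lies outside the display area.
    """
    if not (0 <= x < width and 0 <= y < height):
        return None
    col = int(x * cols / width)
    row = int(y * rows / height)
    return row * cols + col


def gaze_fraction_in_effective(gaze_points, effective, width, height,
                               rows=3, cols=4):
    """Fraction of gaze samples that fall inside the effective sub-regions.

    Assumption: this fraction stands in for the "concentration data";
    the application does not define the measure.
    """
    if not gaze_points:
        return 0.0
    hits = sum(
        1 for x, y in gaze_points
        if subregion_index(x, y, width, height, rows, cols) in effective
    )
    return hits / len(gaze_points)


# Assumed layout: the two middle columns of a 3 x 4 grid hold the video content.
EFFECTIVE = {1, 2, 5, 6, 9, 10}
samples = [(960, 540), (10, 10), (700, 100), (2000, 50)]
fraction = gaze_fraction_in_effective(samples, EFFECTIVE, 1920, 1080)
```

Gaze samples that fall off-screen are counted as misses here, which is one possible design choice; they could equally be discarded before the fraction is computed.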
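The source text is truncated here, so the exact criterion for abnormal data is not known. Purely as an illustration, the sketch below assumes that each concentration sample is a number expected to lie in a fixed range, discards samples outside that range, and takes the mean of the rest as the segment's concentration value. The function name, the range bounds, and the use of the mean are all assumptions, not details from the application.

```python
from statistics import mean


def estimate_concentration(samples, low=0.0, high=1.0):
    """Estimate one segment's concentration value from raw samples.

    Assumptions (not from the application): a sample is abnormal when it
    falls outside [low, high], and the estimate is the mean of the
    remaining samples. Returns None if no sample survives filtering.
    """
    kept = [s for s in samples if low <= s <= high]
    if not kept:
        return None
    return mean(kept)


# The values 5.0 and -1.0 fall outside the assumed range and are dropped.
value = estimate_concentration([0.8, 0.6, 5.0, -1.0])
```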

Embodiment 3

[0077] Figure 4 is a connection block diagram of an apparatus 20 for identifying video content provided by an embodiment of the present application. As shown in Figure 4, the apparatus includes:

[0078] The video division module 21 is configured to divide the video into a plurality of video segments;

[0079] The data collection module 22 is configured to obtain eye tracking concentration data related to each video segment when the video is played;

[0080] The processing module 23 is configured to estimate the concentration value corresponding to each video segment according to the eye tracking concentration data of that video segment;

[0081] The judging module 24 is configured to judge whether the concentration value is less than a preset concentration threshold;

[0082] The identification module 25 is configured to identify the video segment when the concentration value is less than the preset concentration threshold, so as to optimize the content of the video segme...
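The roles of the judging module 24 and the identification module 25 can be sketched together in a few lines of Python. The sketch assumes that the concentration values are already available as a mapping from a segment identifier to a number; the function name `identify_low_concentration` and the sample values are hypothetical.

```python
def identify_low_concentration(concentration_by_segment, threshold):
    """Return the identifiers of segments whose value is below the threshold.

    The comparison is strict (value < threshold), matching the wording
    "less than a preset concentration threshold".
    """
    return [
        segment_id
        for segment_id, value in concentration_by_segment.items()
        if value < threshold
    ]


# Hypothetical concentration values for three segments.
values = {0: 0.9, 1: 0.4, 2: 0.6}
flagged = identify_low_concentration(values, threshold=0.6)
```

A segment whose value equals the threshold is not flagged under this reading of the condition.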
