Human-computer interaction method, control device, controlled device and storage medium

A human-computer interaction technology, applied in the fields of human-computer interaction methods, control devices, controlled devices and storage media, that can solve problems such as falsely triggered control commands and a voice assistant turning on a TV by mistake.

Examples

Embodiment 1

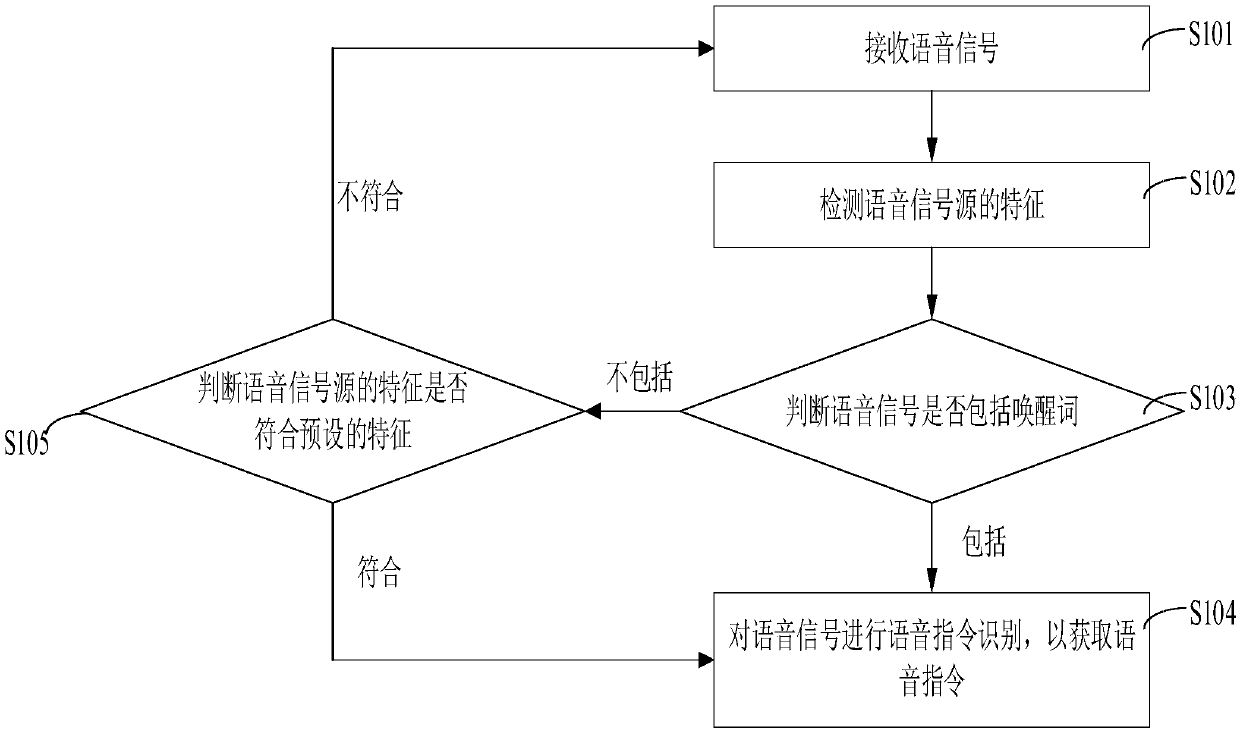

[0027] Figure 1 is a schematic flowchart of the human-computer interaction method provided in Embodiment 1 of the present invention. For a clear description of this method, please refer to Figure 1.

[0028] The human-computer interaction method provided by Embodiment 1 of the present invention includes the following steps:

[0029] S101: Receive a voice signal.

[0030] In one embodiment, before receiving the voice signal, the apparatus/device applying the human-computer interaction method provided in this embodiment is in a silence detection state. Its power consumption at this time is extremely low, so the apparatus/device can remain operational for extended periods of time.

[0031] In one embodiment, step S101 may further include: when the volume of the received voice signal reaches a certain threshold, proceeding to step S102.
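The volume gate in step S101 can be sketched as below. This is a minimal illustration under several assumptions. "Volume" is taken to be the root-mean-square (RMS) level of one frame of normalized samples. `VOLUME_THRESHOLD` is a hypothetical value, since the patent only says "a certain threshold". The function names are also hypothetical.

```python
import math
from typing import Sequence

# Hypothetical threshold (assumption): RMS level below which the device stays
# in the low-power silence detection state. The source only says "a certain threshold".
VOLUME_THRESHOLD = 0.02


def rms_volume(samples: Sequence[float]) -> float:
    """Root-mean-square level of one frame of samples normalized to [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def should_proceed_to_s102(samples: Sequence[float],
                           threshold: float = VOLUME_THRESHOLD) -> bool:
    """True when the received signal's volume reaches the threshold,
    i.e. the device leaves silence detection and proceeds to step S102."""
    return rms_volume(samples) >= threshold


if __name__ == "__main__":
    quiet = [0.001, -0.002, 0.001, -0.001]
    loud = [0.3, -0.25, 0.28, -0.31]
    print(should_proceed_to_s102(quiet))  # False
    print(should_proceed_to_s102(loud))   # True
```

Using a cheap per-frame energy check fits the low-power silence detection state described in [0030]. Heavier processing only starts once the threshold is crossed.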

[0032] S10...

Embodiment 2

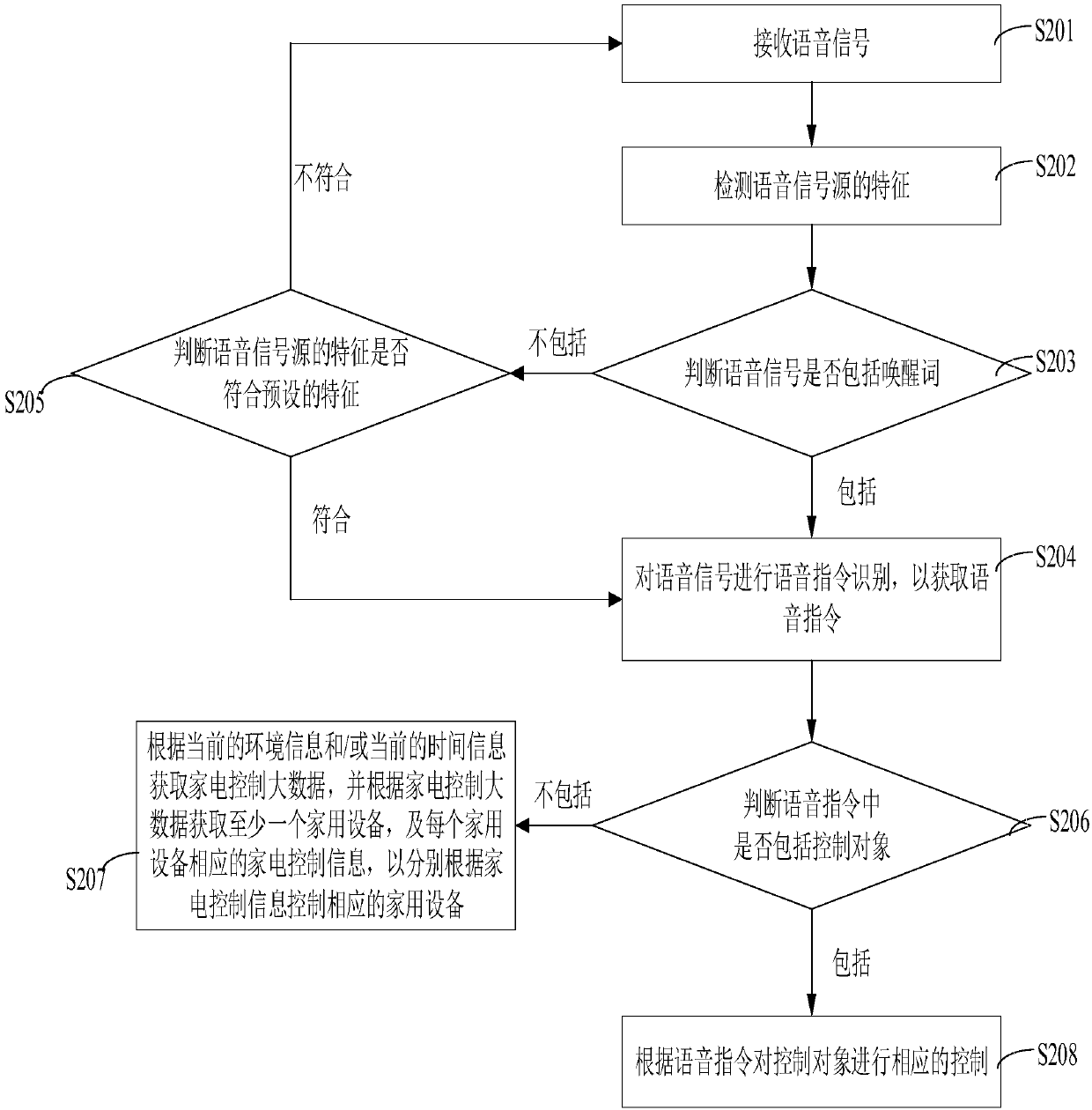

[0052] Figure 2 is a schematic flowchart of the human-computer interaction method provided by Embodiment 2 of the present invention. For a clear description of this method, please refer to Figure 2.

[0053] The human-computer interaction method provided by Embodiment 2 of the present invention is applied to a control device, and includes the following steps:

[0054] S201: Receive a voice signal.

[0055] S202: Detect the features of the voice signal source.

[0056] Specifically, the features of the voice signal source may include the face orientation of the user who uttered the voice signal, or the relative orientation between that user and the controlled device; the voice signal source includes, but is not limited to, the user who uttered the voice signal. Specifically, after the voice signal is received, the features of the voice signal source are detected immed...
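One way to turn the "face orientation" feature of step S202 into a decision is sketched below. It assumes the feature is used to judge whether the speaker is addressing the controlled device; the paragraph is truncated before it says how the feature is used. Several details are hypothetical assumptions, not taken from the source:

- positions and the face direction are 2-D vectors in a shared room frame;
- the "facing" test is an angular tolerance;
- `MAX_ANGLE_DEG` and the function names are illustrative.

```python
import math
from dataclasses import dataclass

# Hypothetical tolerance (assumption): how far, in degrees, the speaker's face
# may be turned away from the controlled device and still count as facing it.
MAX_ANGLE_DEG = 30.0


@dataclass
class Vec2:
    x: float
    y: float


def angle_between_deg(a: Vec2, b: Vec2) -> float:
    """Unsigned angle between two 2-D vectors, in degrees."""
    norm = math.hypot(a.x, a.y) * math.hypot(b.x, b.y)
    if norm == 0.0:
        raise ValueError("zero-length vector")
    cos_theta = (a.x * b.x + a.y * b.y) / norm
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def is_facing_device(user_pos: Vec2, face_dir: Vec2, device_pos: Vec2,
                     max_angle_deg: float = MAX_ANGLE_DEG) -> bool:
    """True if the user's face direction points toward the controlled device,
    i.e. the angle between face_dir and the user-to-device vector is small."""
    to_device = Vec2(device_pos.x - user_pos.x, device_pos.y - user_pos.y)
    return angle_between_deg(face_dir, to_device) <= max_angle_deg


if __name__ == "__main__":
    user, tv = Vec2(0.0, 0.0), Vec2(3.0, 0.0)
    print(is_facing_device(user, Vec2(1.0, 0.2), tv))  # True (about 11.3 degrees)
    print(is_facing_device(user, Vec2(0.0, 1.0), tv))  # False (90 degrees)
```

A check like this could help with the false-trigger problem mentioned in the summary. Speech uttered while the user faces away from the TV would be treated as not addressed to it.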

Embodiment 3

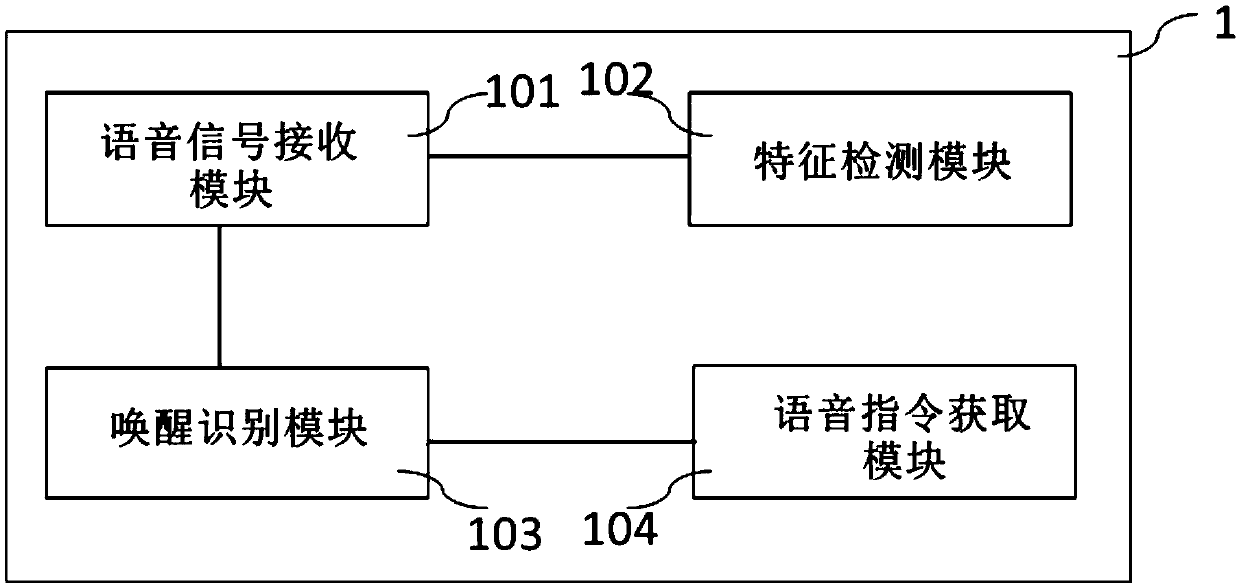

[0082] Figure 3 is a schematic structural diagram of the control device provided by Embodiment 3 of the present invention. For a clear description of the control device 1, please refer to Figure 3.

[0083] Referring to Figure 3, the control device 1 provided by Embodiment 3 of the present invention includes: a voice signal receiving module 101, a feature detection module 102, a wake-up identification module 103, and a voice command acquisition module 104.
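The module structure of control device 1 can be sketched as plain Python classes. The module numbers follow Figure 3, but every interface below is a hypothetical assumption. In particular, this excerpt does not describe how modules 103 and 104 work. The sketch assumes the wake-up identification module wakes the device only when the detected feature suggests the speaker is addressing it, and that the voice command acquisition module then runs some injected recognizer.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Sequence


@dataclass
class SourceFeature:
    """Detected feature of the voice signal source (hypothetical shape)."""
    facing_device: bool  # e.g. derived from the speaker's face orientation


class VoiceSignalReceivingModule:  # module 101
    def receive(self, samples: Sequence[float]) -> Sequence[float]:
        # Placeholder: a real module would read from a microphone.
        return samples


class FeatureDetectionModule:  # module 102
    def __init__(self, detector: Callable[[], SourceFeature]) -> None:
        self._detector = detector

    def detect(self) -> SourceFeature:
        return self._detector()


class WakeUpIdentificationModule:  # module 103
    def should_wake(self, feature: SourceFeature) -> bool:
        # Assumption: wake only if the speaker appears to address the device.
        return feature.facing_device


class VoiceCommandAcquisitionModule:  # module 104
    def __init__(self, recognizer: Callable[[Sequence[float]], str]) -> None:
        self._recognizer = recognizer

    def acquire(self, samples: Sequence[float]) -> str:
        return self._recognizer(samples)


class ControlDevice:  # control device 1
    def __init__(self, receiver: VoiceSignalReceivingModule,
                 detector: FeatureDetectionModule,
                 waker: WakeUpIdentificationModule,
                 acquirer: VoiceCommandAcquisitionModule) -> None:
        self.receiver = receiver
        self.detector = detector
        self.waker = waker
        self.acquirer = acquirer

    def handle(self, samples: Sequence[float]) -> Optional[str]:
        """Return the acquired voice command, or None if the device stays asleep."""
        signal = self.receiver.receive(samples)
        feature = self.detector.detect()
        if not self.waker.should_wake(feature):
            return None  # treated as a likely false trigger
        return self.acquirer.acquire(signal)


if __name__ == "__main__":
    device = ControlDevice(
        VoiceSignalReceivingModule(),
        FeatureDetectionModule(lambda: SourceFeature(facing_device=True)),
        WakeUpIdentificationModule(),
        VoiceCommandAcquisitionModule(lambda s: "turn on TV"),  # stub recognizer
    )
    print(device.handle([0.1, -0.2]))  # turn on TV
```

The detector and recognizer are injected as callables. This keeps the sketch runnable without real sensors or a speech engine.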

[0084] Specifically, the voice signal receiving module 101 is configured to receive voice signals.

[0085] In one embodiment, before the voice signal receiving module 101 receives the voice signal, it is in the silence detection state. The power consumption of the control device 1 is extremely low at this time, so the control device 1 can remain operational for extended periods of time.

[0086] Specifically, the feature detect...
