Saliency map fusion method and system

A saliency map fusion method and system, applied in the field of saliency detection, intended to address problems such as unreasonable emphasis and unsatisfactory recall.


Examples

Embodiment 1

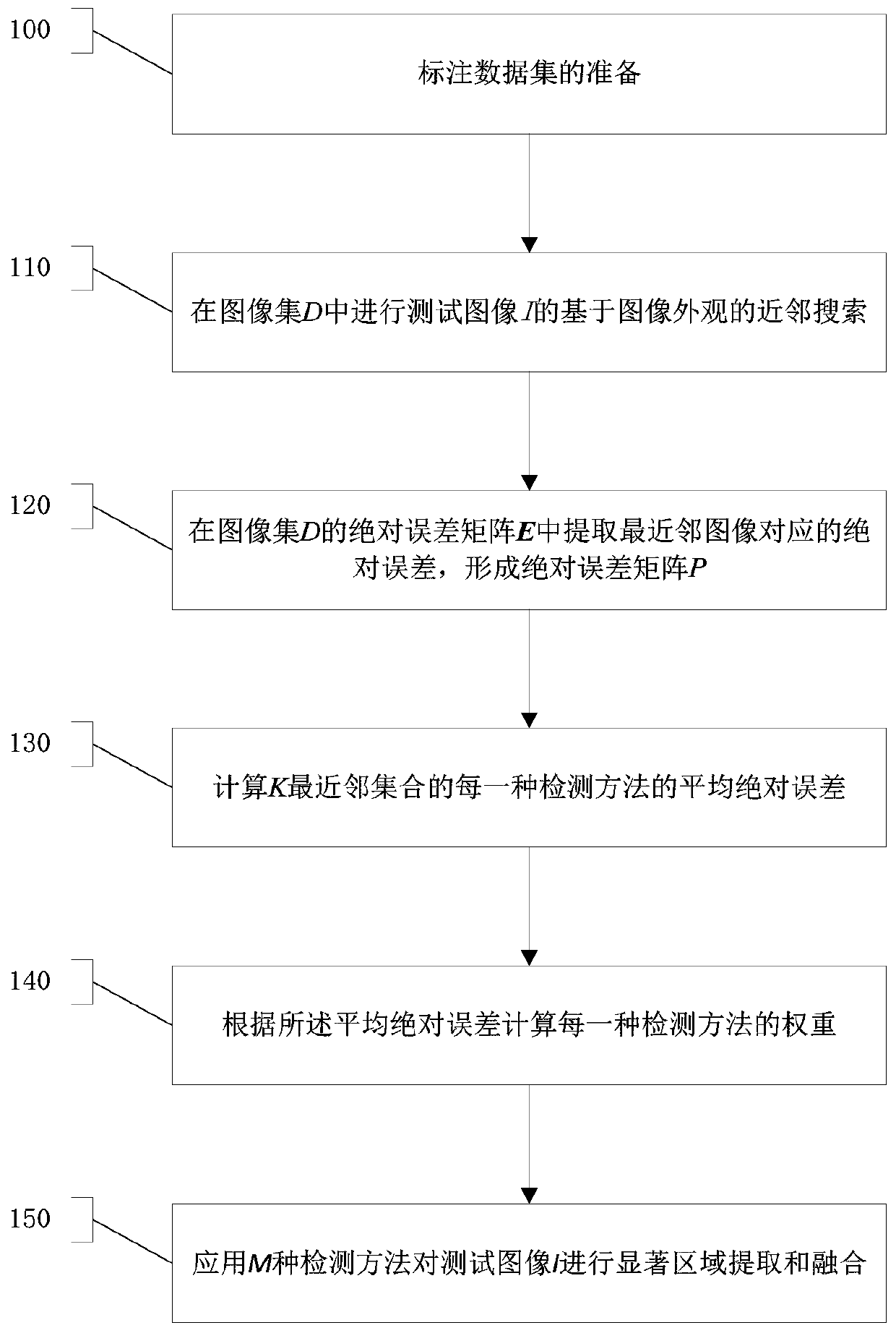

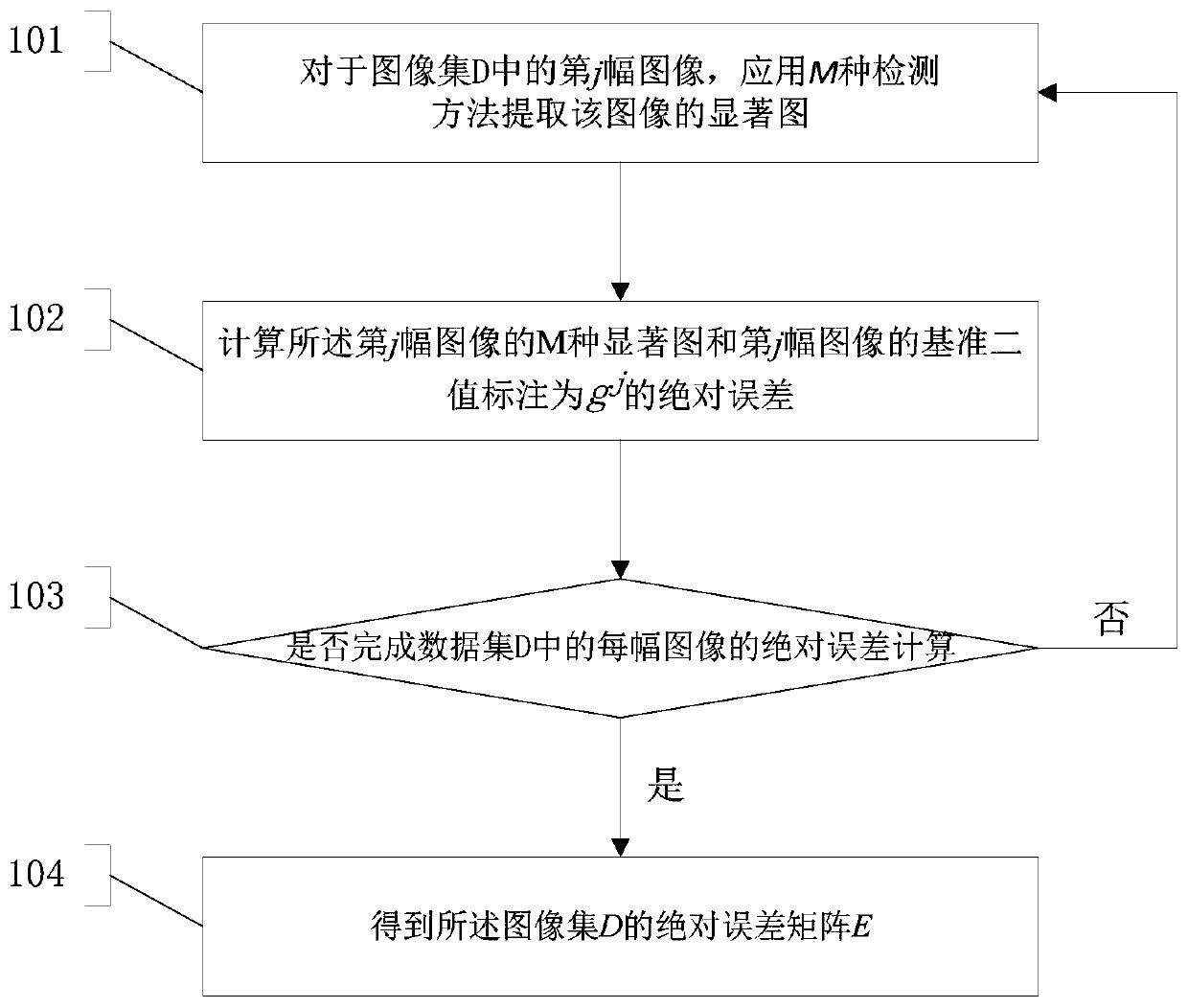

[0060] As shown in Figure 1, step 100 is executed to prepare the labeled data set. As shown in Figure 1A, within step 100, step 101 is executed: for the j-th image in the image set D, M detection methods are applied to extract saliency maps of the image. The extraction results of the various methods are $S = \{S_1, S_2, \ldots, S_i, \ldots, S_M\}$, where $S_i$ denotes the saliency map extracted by the i-th method for the j-th image, $1 \le i \le M$. Step 102 is then executed: the absolute errors between the M saliency maps of the j-th image and the benchmark binary annotation $g_j$ of the j-th image are calculated. Each saliency map $S_i$ is compared with the benchmark binary annotation $g_j$, the absolute error of $S_i$ relative to $g_j$ is calculated, and the absolute error vector is obtained. Step 103 is executed to determine whether the absolute error calculation has been completed for every image in the data set D. If it has not been completed for every image, step 101 is re-executed, and for the jth...

Embodiment 2

[0068] As shown in Figure 2, a saliency map fusion system includes a labeled data set 200 and a testing module 201.

[0069] Preparing the labeled data set 200 involves an image set D, a corresponding benchmark binary annotation set G, and M detection methods, and includes the following sub-steps. Step 01: For an image I in the image set D, apply the M detection methods to extract saliency maps of the image. The extraction results of the various methods are $S = \{S_1, S_2, S_3, \ldots, S_i, \ldots, S_M\}$, where $S_i$ denotes the saliency map extracted by the i-th method, $1 \le i \le M$. Step 02: For the j-th image in the image set D, whose corresponding benchmark binary annotation is $g_j$, compare each saliency map $S_i$ with $g_j$, calculate the absolute error of $S_i$ relative to $g_j$, and obtain the absolute error vector. Step 03: Perform the operations of Step 01 and Step 02 on each ...

Embodiment 3

[0073] This embodiment discloses a saliency map fusion method.

[0074] 1. Preparation of labeled data set

[0075] There is an image set D and a corresponding benchmark binary label set G; there are M detection methods.

[0076] Step 1: For an image I in D, apply the M detection methods to extract saliency maps of this image. The extraction results of the various methods are $S = \{S_1, S_2, \ldots, S_i, \ldots, S_M\}$, where $S_i$ denotes the saliency map extracted by the i-th method.

[0077] Step 2: For the j-th image in the image set D, whose corresponding benchmark binary annotation is $g_j$, compare each saliency map $S_i$ with $g_j$, calculate the absolute error of $S_i$ relative to $g_j$, and obtain the absolute error vector.
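Step 2 can be illustrated with a minimal sketch. The text shown here does not define how the absolute error is computed, so the sketch assumes the mean absolute per-pixel difference that is commonly used to evaluate saliency maps. The names `absolute_error`, `error_vector`, `maps_j`, and `g_j` are hypothetical, and plain Python lists stand in for images to keep the example free of dependencies.

```python
from typing import List, Sequence

# A saliency map or binary annotation as a 2-D grid of values in [0, 1].
Map = Sequence[Sequence[float]]


def absolute_error(saliency: Map, annotation: Map) -> float:
    """Mean absolute per-pixel difference (assumed definition, not from the source)."""
    if len(saliency) != len(annotation):
        raise ValueError("maps must have the same number of rows")
    total = 0.0
    count = 0
    for s_row, g_row in zip(saliency, annotation):
        if len(s_row) != len(g_row):
            raise ValueError("rows must have the same length")
        for s, g in zip(s_row, g_row):
            total += abs(s - g)
            count += 1
    if count == 0:
        raise ValueError("maps must not be empty")
    return total / count


def error_vector(saliency_maps: Sequence[Map], annotation: Map) -> List[float]:
    """One absolute error per detection method, in method order (length M)."""
    return [absolute_error(s, annotation) for s in saliency_maps]


# Toy 2x2 example with M = 2 hypothetical detection methods.
g_j = [[0, 1], [0, 1]]
maps_j = [
    [[0.0, 1.0], [0.0, 1.0]],  # matches the annotation exactly
    [[0.5, 0.5], [0.5, 0.5]],  # uniform grey map
]
e_j = error_vector(maps_j, g_j)
print(e_j)  # [0.0, 0.5]
```

A lower entry indicates that the corresponding method's saliency map is closer to the annotation for this image.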

[0078] Step 3: Perform the operation of step 2 on each image in the image set D, and store the resulting absolute error matrix E of the image set D. This work provides prior knowledge about the quality of the saliency extraction for an image.
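As a rough sketch of Step 3, the per-image error vectors can be stacked into the matrix E. The orientation is an assumption, since the text shown here does not state it: rows correspond to images and columns to detection methods. The error measure is again an assumed mean absolute per-pixel difference, and `mean_abs_error`, `error_matrix`, `D_maps`, and `G` are hypothetical names.

```python
from typing import List, Sequence

Map = Sequence[Sequence[float]]  # 2-D grid of values in [0, 1]


def mean_abs_error(saliency: Map, annotation: Map) -> float:
    """Assumed error measure: mean absolute per-pixel difference."""
    pairs = [
        (s, g)
        for s_row, g_row in zip(saliency, annotation)
        for s, g in zip(s_row, g_row)
    ]
    if not pairs:
        raise ValueError("maps must not be empty")
    return sum(abs(s - g) for s, g in pairs) / len(pairs)


def error_matrix(
    per_image_maps: Sequence[Sequence[Map]], annotations: Sequence[Map]
) -> List[List[float]]:
    """Build E so that E[j][i] is the error of method i on image j (assumed layout)."""
    if len(per_image_maps) != len(annotations):
        raise ValueError("need exactly one annotation per image")
    return [
        [mean_abs_error(s, g) for s in maps]
        for maps, g in zip(per_image_maps, annotations)
    ]


# Toy data: 2 images (1x2 pixels each) and M = 2 hypothetical methods.
G = [
    [[0, 1]],  # annotation of image 1
    [[1, 1]],  # annotation of image 2
]
D_maps = [
    [[[0.0, 1.0]], [[1.0, 1.0]]],  # image 1: outputs of methods 1 and 2
    [[[1.0, 1.0]], [[0.0, 0.0]]],  # image 2: outputs of methods 1 and 2
]
E = error_matrix(D_maps, G)
print(E)  # [[0.0, 0.5], [0.0, 1.0]]
```

Each row of E is the absolute error vector of one image, so the stored matrix records how well each method performed on each labeled image.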

...
