Two-stage semantic word vector generation method

A two-stage semantic word vector generation technique, applied in the field of semantic word vector generation. It addresses problems such as large space requirements, quality degradation, and data sparseness, and produces high-quality word vectors.

Detailed Description of the Embodiments

[0026] The following will clearly and completely describe the technical solutions in the embodiments of the present invention with reference to the accompanying drawings in the embodiments of the present invention. Obviously, the described embodiments are only some, not all, embodiments of the present invention.

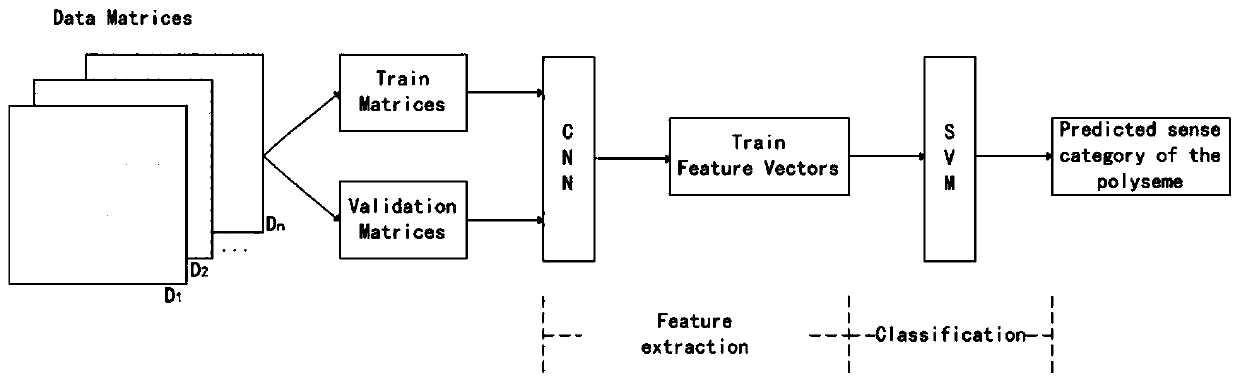

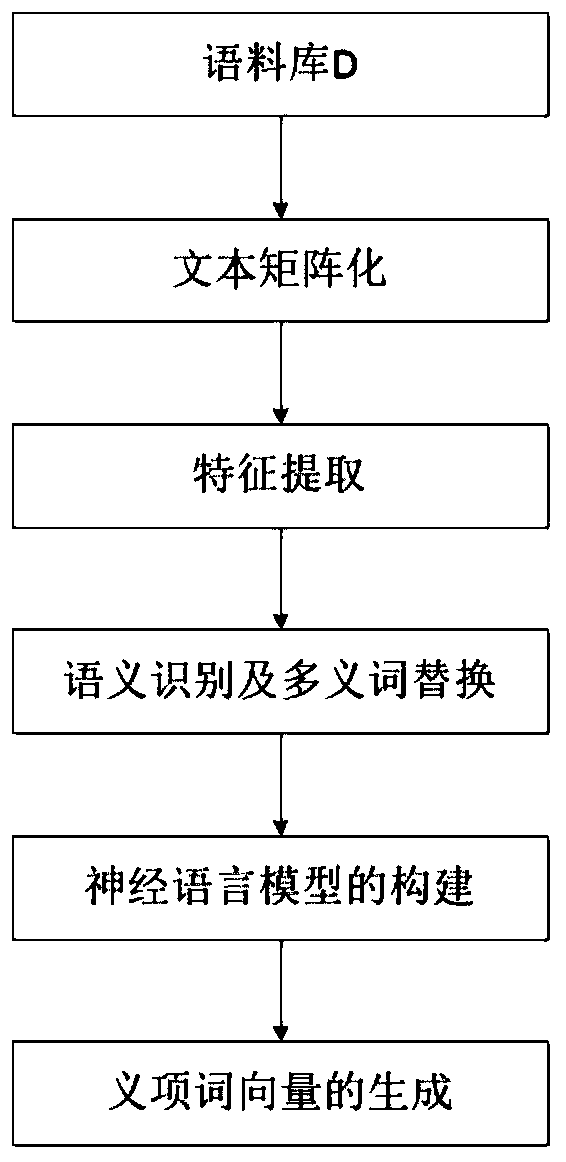

[0027] The two-stage semantic word vector generation method proposed by the present invention comprises three stages and five steps. The first stage is text matrixization. The second stage comprises two steps: feature extractor construction and semantic recognition. The third stage comprises two steps: neural language model construction and semantic word vector generation.
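The stage and step layout in [0027] can be sketched as a sequential pipeline in which each step consumes the output of the one before it. This is a minimal sketch, assuming each step reads and updates a shared context dictionary. The step bodies are hypothetical placeholders, and the names `placeholder`, `build_pipeline`, and `run_pipeline` are illustrative only; the source's actual algorithms for each step are not reproduced here.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

# A step transforms a shared context dict (an assumption of this sketch).
Step = Callable[[Dict[str, Any]], Dict[str, Any]]


@dataclass
class Stage:
    name: str
    steps: List[Tuple[str, Step]] = field(default_factory=list)


def placeholder(step_name: str) -> Step:
    """Hypothetical stand-in: only records that the step ran."""
    def run(ctx: Dict[str, Any]) -> Dict[str, Any]:
        ctx.setdefault("trace", []).append(step_name)
        return ctx
    return run


def build_pipeline() -> List[Stage]:
    # Grouping taken from [0027]: 1 + 2 + 2 = 5 steps across 3 stages.
    layout = [
        ("stage 1", ["text matrixization"]),
        ("stage 2", ["feature extractor construction", "semantic recognition"]),
        ("stage 3", ["neural language model construction",
                     "semantic word vector generation"]),
    ]
    return [Stage(name, [(s, placeholder(s)) for s in steps]) for name, steps in layout]


def run_pipeline(stages: List[Stage], ctx: Dict[str, Any]) -> Dict[str, Any]:
    # Steps run strictly in order; each sees the previous step's output.
    for stage in stages:
        for _, fn in stage.steps:
            ctx = fn(ctx)
    return ctx


if __name__ == "__main__":
    stages = build_pipeline()
    result = run_pipeline(stages, {})
    print(len(stages), sum(len(st.steps) for st in stages))  # 3 5
    for i, name in enumerate(result["trace"], start=1):
        print(f"Step {i}: {name}")
```

Keeping each step behind the same callable signature makes it possible to swap a placeholder for a real implementation without touching the driver loop.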

[0028] Step 1: Text Matrixization

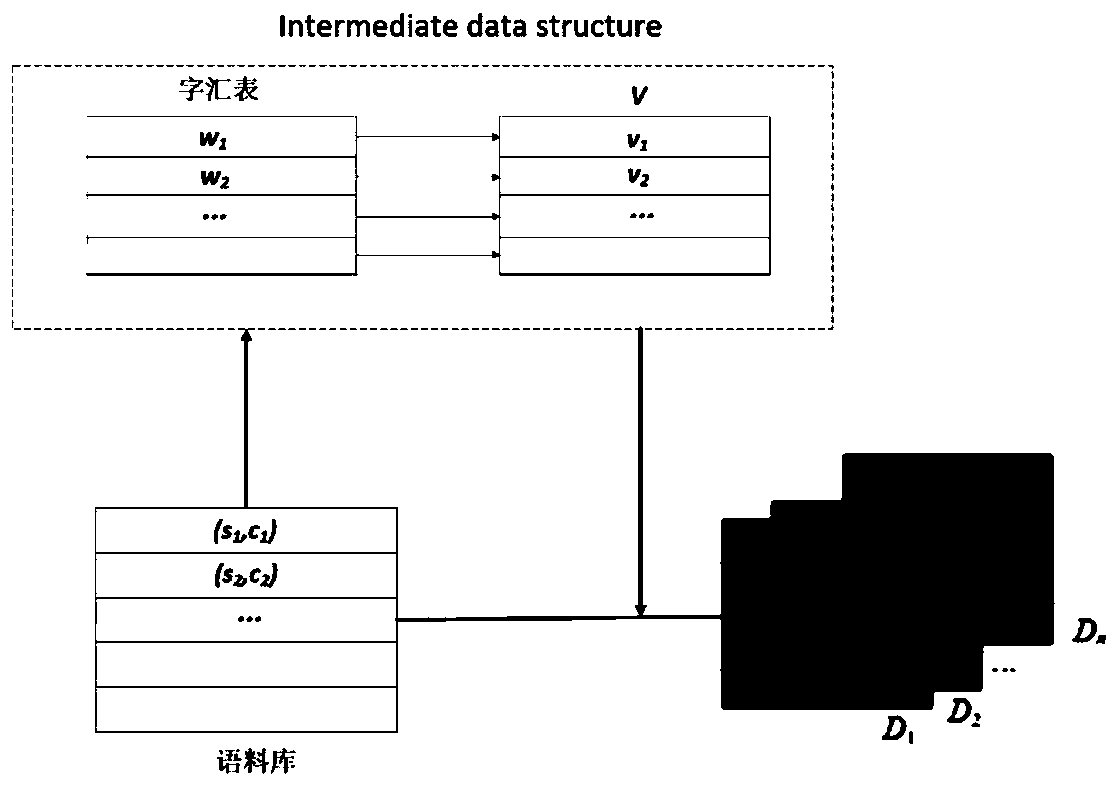

[0029] From the obtained text, select each clause $s$ that contains the polysemous word $w$, forming the set $D_w = \{s_1, s_2, s_3, \ldots\}$ (that is, the set of clauses containing the ambiguous word); this clause $s_i$ and the sense category $c$ of the polysemous word $w$ in this ...
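Building $D_w$ from raw text can be sketched as clause splitting followed by a membership filter on $w$. This is a minimal sketch under several assumptions not stated in the truncated paragraph: clause boundaries are punctuation marks, tokens are whitespace-separated (Chinese text would first need word segmentation), and "matrixization" is modeled as a padded token-id matrix. `build_clause_set` and `to_index_matrix` are hypothetical names; the source's actual matrix representation is not given here.

```python
import re
from typing import Dict, List, Tuple

# Clause delimiters: an assumption (ASCII and full-width CJK punctuation).
CLAUSE_SPLIT = re.compile(r"[,.;:!?，。；：！？]+")


def split_clauses(text: str) -> List[str]:
    """Split raw text into non-empty, stripped clauses."""
    return [c.strip() for c in CLAUSE_SPLIT.split(text) if c.strip()]


def build_clause_set(text: str, w: str) -> List[List[str]]:
    """Return D_w: the tokenized clauses that contain the polysemous word w."""
    d_w = []
    for clause in split_clauses(text):
        tokens = clause.split()  # whitespace tokenization (assumption)
        if w in tokens:
            d_w.append(tokens)
    return d_w


def to_index_matrix(
    clauses: List[List[str]], pad: str = "<pad>"
) -> Tuple[List[List[int]], Dict[str, int]]:
    """Hypothetical matrixization: map tokens to ids, right-pad rows with 0."""
    vocab: Dict[str, int] = {pad: 0}
    for tokens in clauses:
        for tok in tokens:
            vocab.setdefault(tok, len(vocab))
    width = max((len(t) for t in clauses), default=0)
    matrix = [[vocab[t] for t in tokens] + [0] * (width - len(tokens))
              for tokens in clauses]
    return matrix, vocab


if __name__ == "__main__":
    text = ("I sat by the bank of the river. "
            "She deposited cash at the bank; the weather was fine.")
    d_w = build_clause_set(text, "bank")
    print(len(d_w))  # 2
    matrix, vocab = to_index_matrix(d_w)
    print(matrix)  # [[1, 2, 3, 4, 5, 6, 4, 7], [8, 9, 10, 11, 4, 5, 0, 0]]
```

The clause about "the weather" is dropped because it does not contain $w$. The sense category $c$ attached to each $s_i$ would have to come from annotation; in this sketch it could be paired with `zip(d_w, labels)`, where `labels` is a hypothetical list of sense categories.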
