Human-computer interaction method based on user behaviors and sound box

A human-computer interaction technology based on user behavior, applied in fields such as input/output for user-computer interaction, computer components, and mechanical mode conversion. It aims to avoid aesthetic fatigue, improve the human-computer interaction experience, and enrich the effect of expressions or actions.

Examples

Embodiment 1

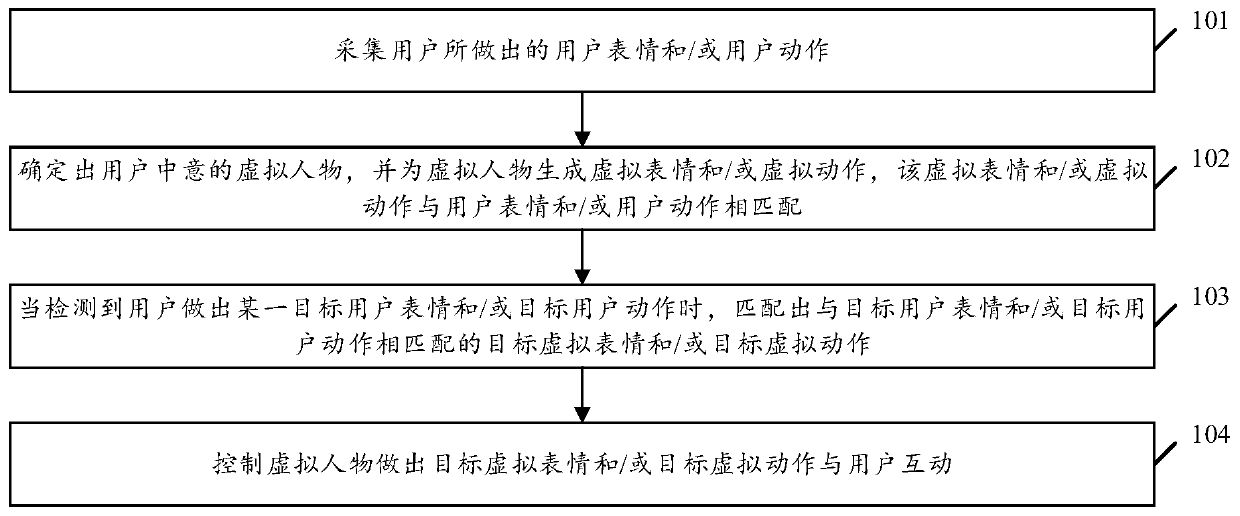

[0069] Referring to Figure 1, Figure 1 is a schematic flowchart of a user-behavior-based human-computer interaction method disclosed in an embodiment of the present invention. As shown in Figure 1, the method may include the following steps:

[0070] 101. Collect user expressions and/or user actions made by the user.

[0071] In this embodiment of the present invention, the execution subject of the user-behavior-based human-computer interaction method may be a sound box, a control center that communicates with the sound box, a tablet computer, or a learning machine. These examples are given for illustration only and should not be construed as limiting the embodiments of the present invention.

[0072] It should be noted that the sound box disclosed in the embodiment of the present invention may include a speaker module, a camera module, a display screen, a light projection module...

Embodiment 2

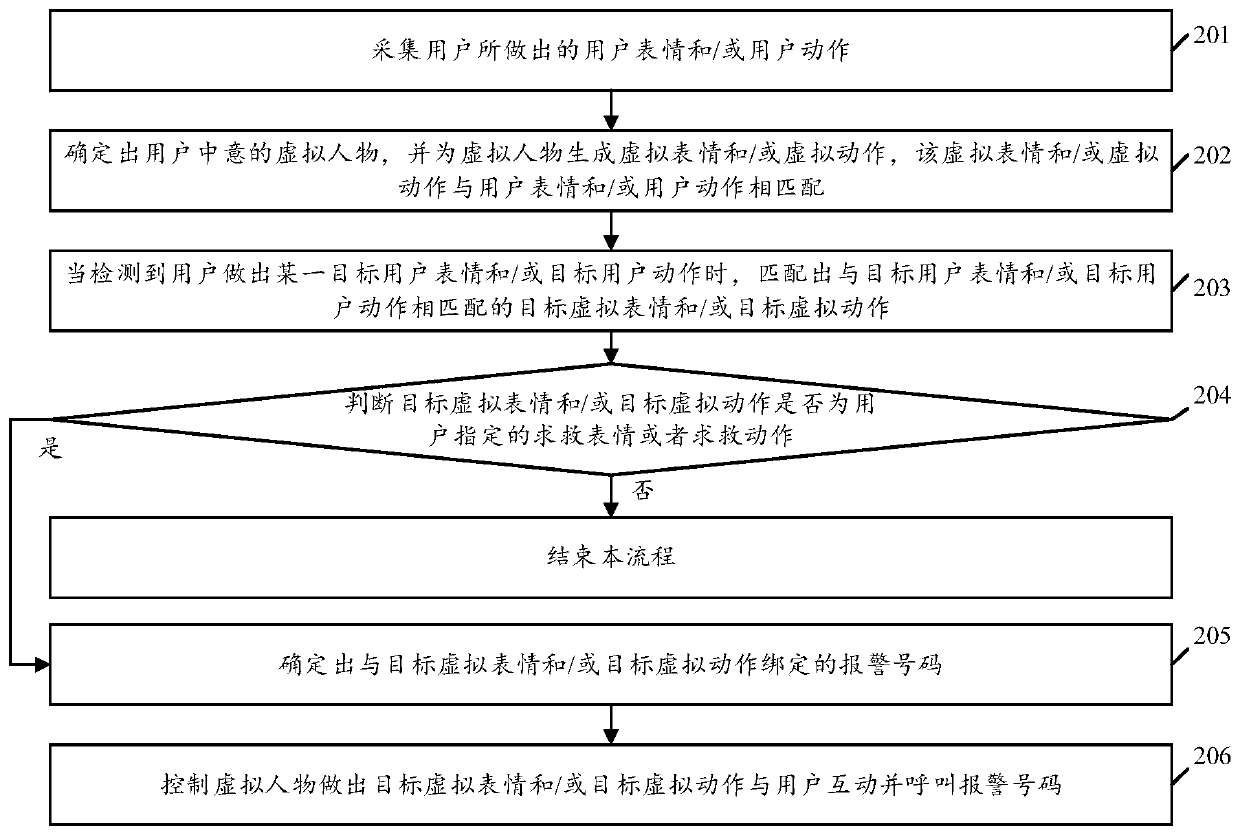

[0094] Referring to Figure 2, Figure 2 is a schematic flowchart of another user-behavior-based human-computer interaction method disclosed in an embodiment of the present invention. As shown in Figure 2, the method may include the following steps:

[0095] 201. Collect user expressions and/or user actions made by the user.

[0096] 202. Determine the avatar that the user likes, and generate a virtual expression and/or virtual action for the avatar, where the virtual expression and/or virtual action matches the user expression and/or user action.

[0097] 203. When it is detected that the user makes a target user expression and/or target user action, find the target virtual expression and/or target virtual action that matches it.

[0098] 204. Determine whether the target virtual expression and/or the target virtual action is a request-for-help expression or a request for ...
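Steps 201 to 203 can be illustrated with a minimal Python sketch. It is not the patent's implementation: the `Behavior` and `Avatar` types, the function names, and the idea that a virtual behavior simply mirrors the user's labels are all assumptions made for illustration. Step 204 is truncated in the source, so it is deliberately left out.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Behavior:
    """A user (or virtual) expression and/or action; either may be absent."""
    expression: Optional[str] = None
    action: Optional[str] = None


@dataclass
class Avatar:
    """Hypothetical avatar holding the virtual behaviors generated for it."""
    name: str
    # Maps each collected user behavior to the virtual behavior generated for it.
    virtual_behaviors: Dict[Behavior, Behavior] = field(default_factory=dict)


def generate_virtual_behaviors(avatar: Avatar, collected: List[Behavior]) -> None:
    """Step 202: generate a matching virtual behavior for each collected one."""
    for user_behavior in collected:
        # Assumption: the virtual behavior mirrors the user's labels one-to-one.
        avatar.virtual_behaviors[user_behavior] = Behavior(
            expression=user_behavior.expression,
            action=user_behavior.action,
        )


def match_target(avatar: Avatar, target: Behavior) -> Optional[Behavior]:
    """Step 203: look up the virtual behavior that matches a detected target."""
    return avatar.virtual_behaviors.get(target)


# Step 201 is stubbed: these would come from the camera in a real device.
collected = [Behavior(expression="smile"), Behavior(action="wave")]
avatar = Avatar(name="favorite_avatar")
generate_virtual_behaviors(avatar, collected)
print(match_target(avatar, Behavior(expression="smile")))
```

A frozen dataclass is used so that a behavior can serve as a dictionary key, which keeps the matching in step 203 to a single lookup.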

Embodiment 3

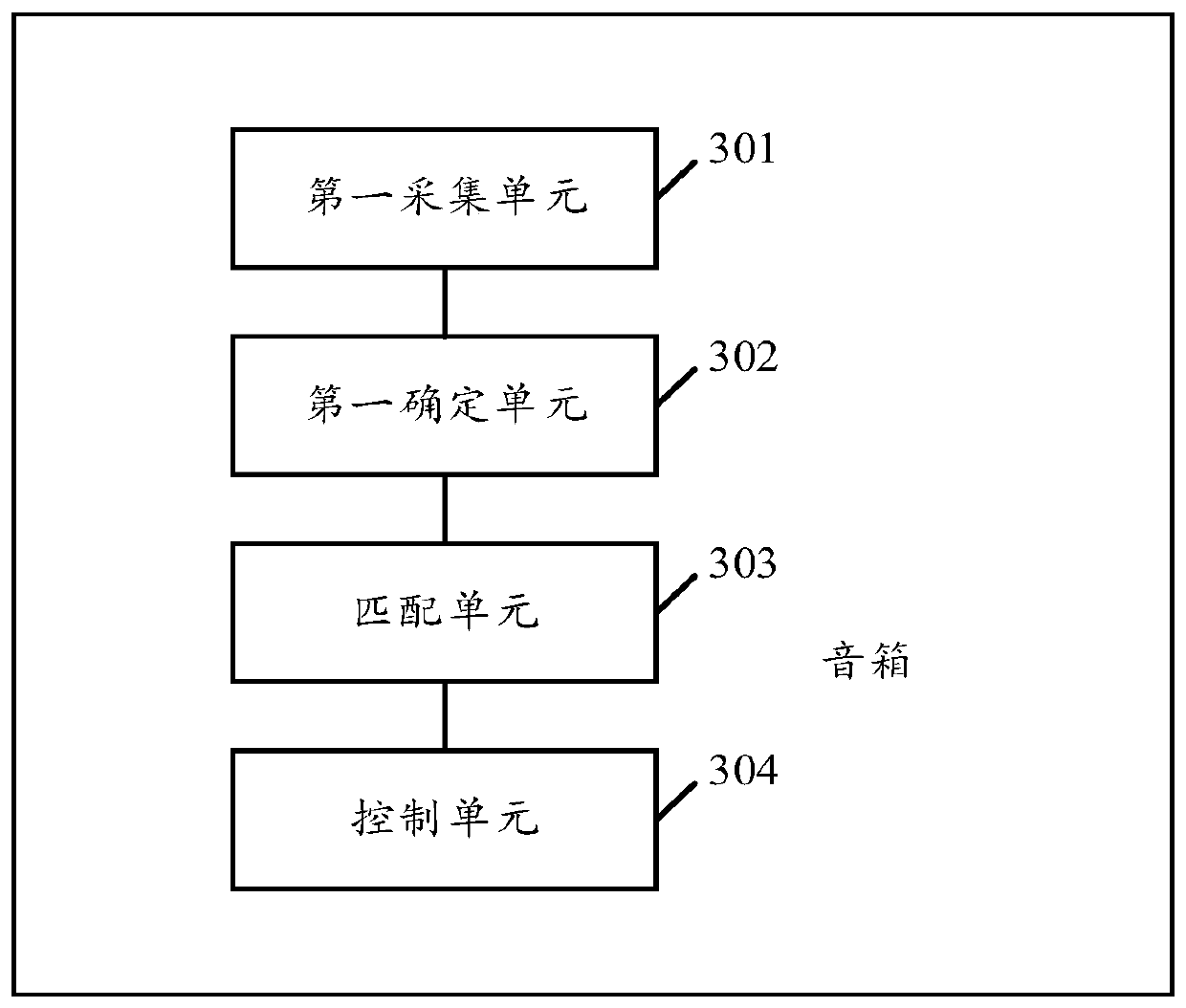

[0118] Referring to Figure 3, Figure 3 is a schematic structural diagram of a sound box disclosed in an embodiment of the present invention. As shown in Figure 3, the sound box may include:

[0119] a first collection unit 301, configured to collect user expressions and/or user actions made by the user;

[0120] a first determining unit 302, configured to determine the avatar that the user likes and to generate virtual expressions and/or virtual actions for the avatar, where the virtual expressions and/or virtual actions match the user expressions and/or user actions;

[0121] a matching unit 303, configured to, when it is detected that the user makes a target user expression and/or target user action, find the target virtual expression and/or target virtual action that matches it;

[0122] a control unit 304, configured to control the avatar to make the target virtual expressions and/or target virtual actions to interact w...
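One way to picture how units 301 to 304 fit together is the Python sketch below. It is only an illustration under stated assumptions: the `SoundBox` class, its field and method names, the use of plain string labels for behaviors, and the stub units are all hypothetical, and the source does not say how the units are actually wired.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

# Assumption: behaviors are plain string labels such as "smile" or "wave".
BehaviorTable = Dict[str, str]  # user behavior -> virtual behavior


@dataclass
class SoundBox:
    """Composes units 301-304; each unit is injected as a callable."""
    collection_unit: Callable[[], List[str]]                      # unit 301
    determining_unit: Callable[[List[str]], BehaviorTable]        # unit 302
    matching_unit: Callable[[BehaviorTable, str], Optional[str]]  # unit 303
    control_unit: Callable[[str], None]                           # unit 304

    def prepare(self) -> BehaviorTable:
        """Run units 301 and 302 to build the avatar's behavior table."""
        return self.determining_unit(self.collection_unit())

    def react(self, table: BehaviorTable, target: str) -> bool:
        """Run units 303 and 304 for one detected target behavior."""
        virtual = self.matching_unit(table, target)
        if virtual is None:
            return False  # nothing matched, so the avatar does nothing
        self.control_unit(virtual)
        return True


# Stub units for demonstration only; real units would use camera and display.
performed: List[str] = []
box = SoundBox(
    collection_unit=lambda: ["smile", "wave"],
    determining_unit=lambda seen: {b: f"avatar_{b}" for b in seen},
    matching_unit=lambda table, target: table.get(target),
    control_unit=performed.append,
)
table = box.prepare()
box.react(table, "wave")
```

Injecting each unit as a callable keeps the sketch independent of any particular camera, recognition, or rendering library, so each unit can be swapped or tested on its own.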

