Low-sight-distance vehicle safe driving control method

A driving control and sight-distance technology for the safe driving control of vehicles whose drivers have a low sight distance. It addresses problems such as congestion, a shortened driver sight distance, and traffic accidents.

Embodiment Construction

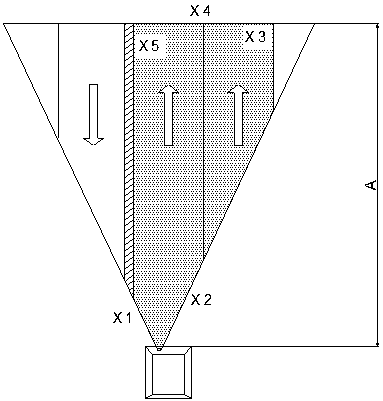

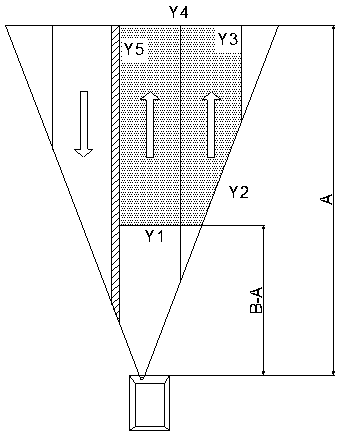

[0030] To help those skilled in the art understand the technical solution of the present invention, the solution is further described below with reference to the accompanying drawings.

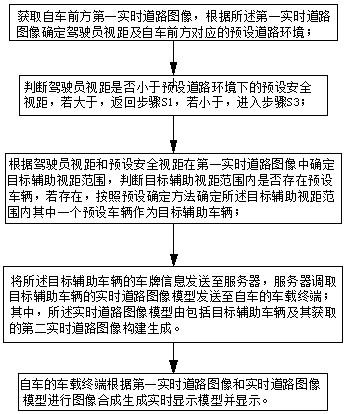

[0031] An embodiment of the present invention provides a method for controlling the safe driving of a low-sight-distance vehicle which, as shown in Figure 1, comprises the following steps:

[0032] S1: Obtain a first real-time road image of the road ahead of the vehicle, and use it to determine the driver's sight distance and the corresponding preset road environment ahead of the vehicle;

[0033] In this embodiment of the present invention, the first real-time road image of the road ahead is captured by the simulated camera of the vehicle terminal, which is installed at a position corresponding to the driver's eyes. [...] value setting. Because of congenital or acquired visual defects, people's eyesight and sight distance...
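Paragraphs [0032] and [0033] do not state how the sight distance or the preset road environment is derived from the first real-time road image. The sketch below shows one hypothetical way to do it and is not the patented method. It assumes a flat road, a pinhole model of the simulated camera at the driver's eye height, a per-row contrast profile already computed from the image, a 5 % contrast threshold (a common choice in visibility estimation), and an invented table of preset environments. The names `CameraModel`, `estimate_sight_distance`, `preset_environment`, `PRESET_ENVIRONMENTS` and all numeric values are illustrative only.

```python
"""Hypothetical sketch: estimate sight distance from a road image and map it to a preset environment.

Assumptions (not from the source): flat road, pinhole camera at the driver's eye height,
a precomputed per-row contrast profile, and an invented table of preset environments.
"""
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple


@dataclass(frozen=True)
class CameraModel:
    """Pinhole model of the simulated camera mounted at the driver's eye position."""
    eye_height_m: float  # camera height above the road, assumed equal to the driver's eye height
    focal_px: float      # focal length expressed in pixels
    horizon_row: int     # image row of the horizon; row indices grow downward

    def row_to_distance(self, row: int) -> Optional[float]:
        """Ground distance (m) imaged by a pixel row on a flat road; None at or above the horizon."""
        offset = row - self.horizon_row
        if offset <= 0:
            return None
        return self.eye_height_m * self.focal_px / offset


def estimate_sight_distance(cam: CameraModel, row_contrast: Sequence[float],
                            threshold: float = 0.05) -> float:
    """Return the farthest road distance still visible in the image.

    row_contrast[i] is an assumed contrast measure for image row i. Rows are scanned from
    the bottom (nearest road) toward the horizon, stopping at the first row whose contrast
    drops below the threshold.
    """
    sight_m = 0.0
    for row in range(len(row_contrast) - 1, cam.horizon_row, -1):
        if row_contrast[row] < threshold:
            break
        distance = cam.row_to_distance(row)
        if distance is not None:
            sight_m = distance
    return sight_m


# Hypothetical preset road environments, ordered by descending minimum sight distance (m).
PRESET_ENVIRONMENTS: List[Tuple[float, str]] = [
    (200.0, "clear"),
    (100.0, "light_haze"),
    (50.0, "fog"),
    (0.0, "dense_fog"),
]


def preset_environment(sight_m: float) -> str:
    """Pick the first preset whose minimum sight distance the estimate satisfies."""
    for min_sight_m, name in PRESET_ENVIRONMENTS:
        if sight_m >= min_sight_m:
            return name
    return PRESET_ENVIRONMENTS[-1][1]


if __name__ == "__main__":
    cam = CameraModel(eye_height_m=1.5, focal_px=800.0, horizon_row=100)
    # Synthetic 480-row profile: rows with index below 112 are washed out (e.g. by fog).
    contrast = [0.01] * 112 + [0.3] * (480 - 112)
    sight = estimate_sight_distance(cam, contrast)
    print(sight, preset_environment(sight))  # 100.0 light_haze
```

Scanning from the nearest row means a single low-contrast band ends the estimate; a practical system would likely smooth the contrast profile, or calibrate the threshold to the individual driver's eyesight, before applying it.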
