CNN text classification method combined with multi-head self-attention mechanism

A text classification technique that uses an attention mechanism, applied in fields such as neural learning methods, semantic analysis, and computer components. It addresses problems such as excessive computation, poor classification performance, and limited applicability.

Embodiment Construction

[0030] Text classification is a common downstream task in NLP. CNN models are well suited to text classification because they require little computation and are easy to accelerate in parallel. However, because the width of the convolution kernel is limited, a CNN model cannot learn the global semantic information of a text, which limits its classification performance.
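The kernel-width limitation can be illustrated with a small NumPy sketch. The helper `conv1d_features`, the toy dimensions, and the all-ones filter are assumptions chosen for illustration and do not come from the patent. The point is only that a feature computed from one window does not change when a token outside that window changes.

```python
import numpy as np

def conv1d_features(x, kernel):
    """Valid 1-D convolution over the token axis (hypothetical helper).

    x:      (seq_len, embed_dim) token embeddings
    kernel: (width, embed_dim) a single convolution filter
    returns (seq_len - width + 1,) one feature per window
    """
    width = kernel.shape[0]
    n_windows = x.shape[0] - width + 1
    return np.array([np.sum(x[i:i + width] * kernel) for i in range(n_windows)])

rng = np.random.default_rng(0)
x = rng.normal(size=(10, 4))   # 10 tokens, 4-dim embeddings (toy sizes)
kernel = np.ones((3, 4))       # kernel width 3, all-ones filter for simplicity

base = conv1d_features(x, kernel)

# Perturb only the last token (index 9).
x_changed = x.copy()
x_changed[9] += 1.0
changed = conv1d_features(x_changed, kernel)

# The first window covers tokens 0..2, so it cannot see the change.
first_window_unchanged = bool(np.isclose(base[0], changed[0]))
# The last window covers tokens 7..9, so its feature shifts.
last_window_changed = not np.isclose(base[-1], changed[-1])
```

Stacking layers or widening the kernel enlarges the window, but each single convolution feature still depends on a fixed-size local span of tokens.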

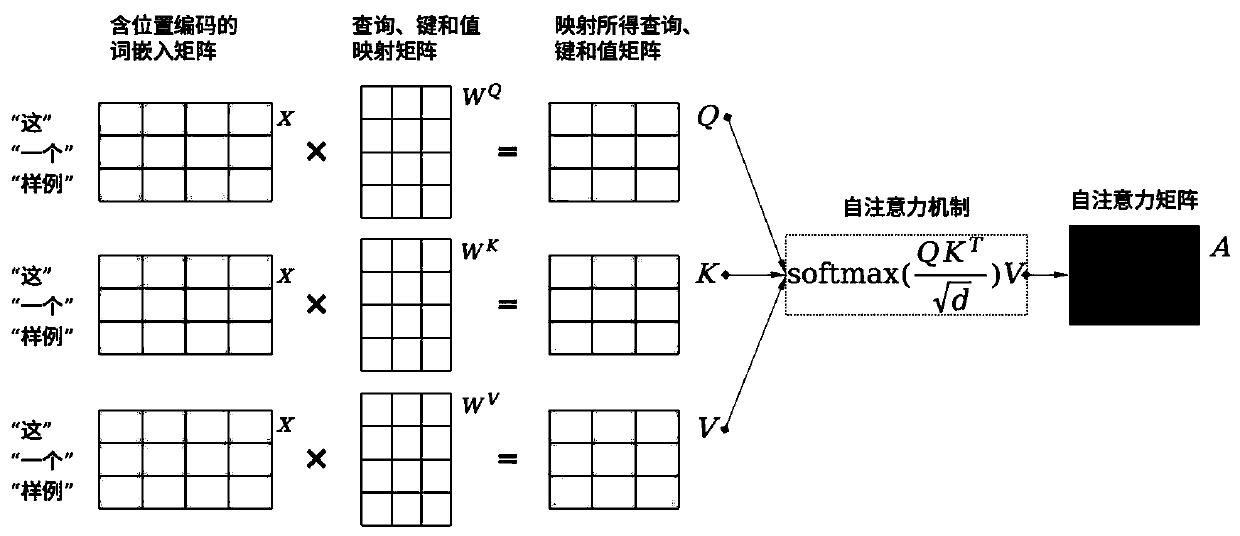

[0031] The present invention proposes a CNN text classification method combined with a multi-head self-attention mechanism. The self-attention mechanism supplies the CNN model with input features that contain global semantic information, which improves the classification performance of the CNN model while keeping the amount of computation low.
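A minimal NumPy sketch of one plausible reading of this idea follows: token embeddings pass through multi-head self-attention, and the attended features are then fed to a CNN with several kernel widths, max-over-time pooling, and a softmax classifier. The function names, layer sizes, kernel widths, random untrained weights, and the absence of residual connections or normalization are all assumptions for illustration; the excerpt does not specify the patented architecture at this level of detail.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, wq, wk, wv, wo, n_heads):
    """x: (seq_len, d_model); wq, wk, wv, wo: (d_model, d_model)."""
    seq_len, d_model = x.shape
    d_head = d_model // n_heads

    def split(t):
        # (seq_len, d_model) -> (n_heads, seq_len, d_head)
        return t.reshape(seq_len, n_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ wq), split(x @ wk), split(x @ wv)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (n_heads, seq_len, seq_len)
    weights = softmax(scores, axis=-1)   # every token attends to every token
    heads = weights @ v                  # (n_heads, seq_len, d_head)
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ wo

def conv_max_pool(x, kernels):
    """kernels: (n_filters, width, d_model) -> (n_filters,) pooled features."""
    width = kernels.shape[1]
    windows = np.stack([x[i:i + width] for i in range(x.shape[0] - width + 1)])
    feats = np.einsum("nwd,fwd->nf", windows, kernels)  # (n_windows, n_filters)
    return np.maximum(feats, 0.0).max(axis=0)           # ReLU, then max over time

def classify(x, params, n_heads=2):
    attended = multi_head_self_attention(x, *params["attn"], n_heads=n_heads)
    pooled = np.concatenate([conv_max_pool(attended, k) for k in params["convs"]])
    return softmax(pooled @ params["w_out"] + params["b_out"])

# Toy setup with random, untrained weights (assumed sizes).
rng = np.random.default_rng(0)
seq_len, d_model, n_classes, n_filters = 12, 8, 3, 4
widths = (2, 3, 4)
params = {
    "attn": [rng.normal(scale=0.1, size=(d_model, d_model)) for _ in range(4)],
    "convs": [rng.normal(scale=0.1, size=(n_filters, w, d_model)) for w in widths],
    "w_out": rng.normal(scale=0.1, size=(n_filters * len(widths), n_classes)),
    "b_out": np.zeros(n_classes),
}
x = rng.normal(size=(seq_len, d_model))  # stand-in for token embeddings
probs = classify(x, params)              # class probabilities, shape (n_classes,)
```

Because the attention step mixes information from all positions before the convolution, each narrow convolution window in this sketch operates on features that already depend on the whole sequence. The only added cost is the single attention layer in front of the CNN.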

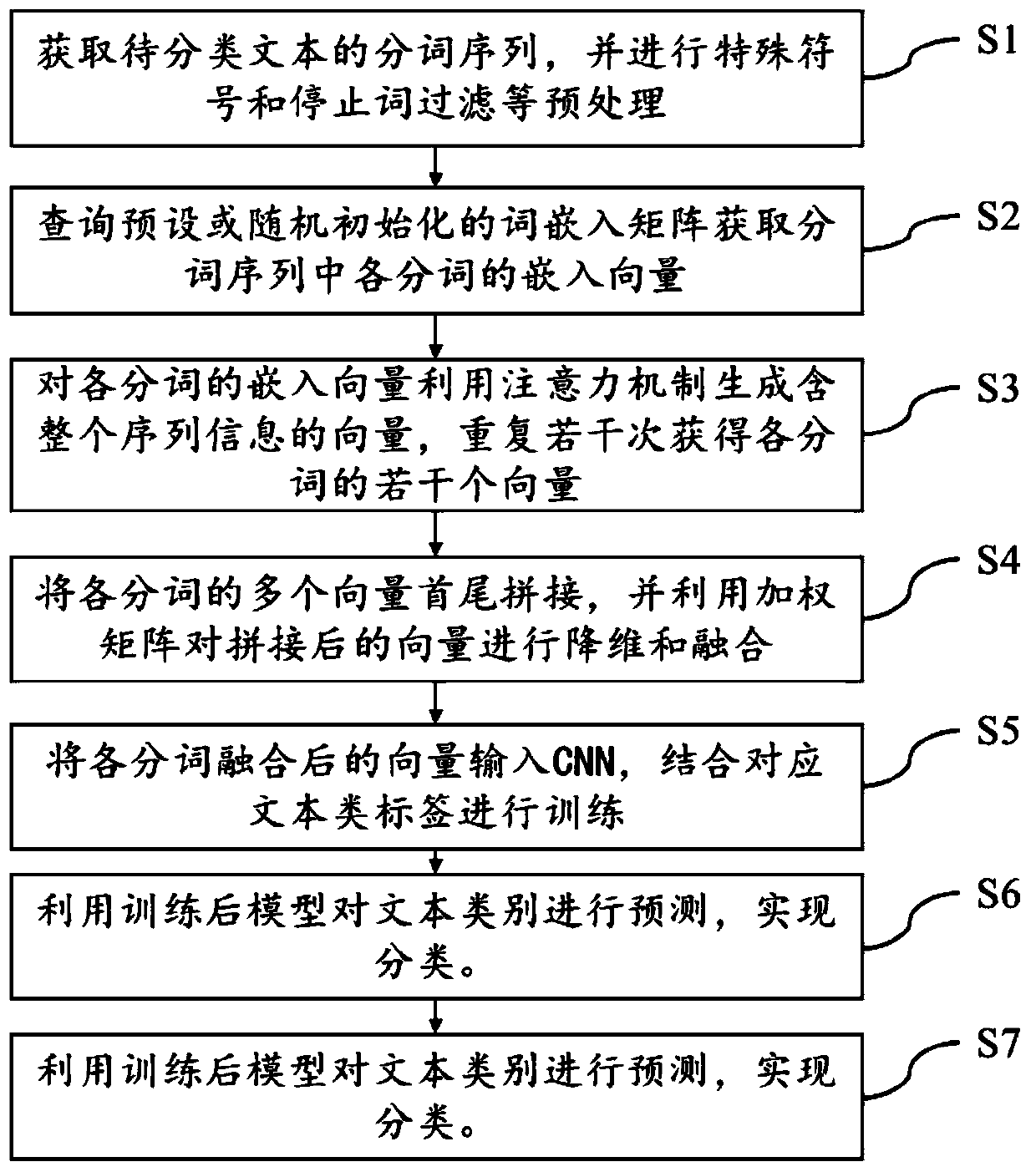

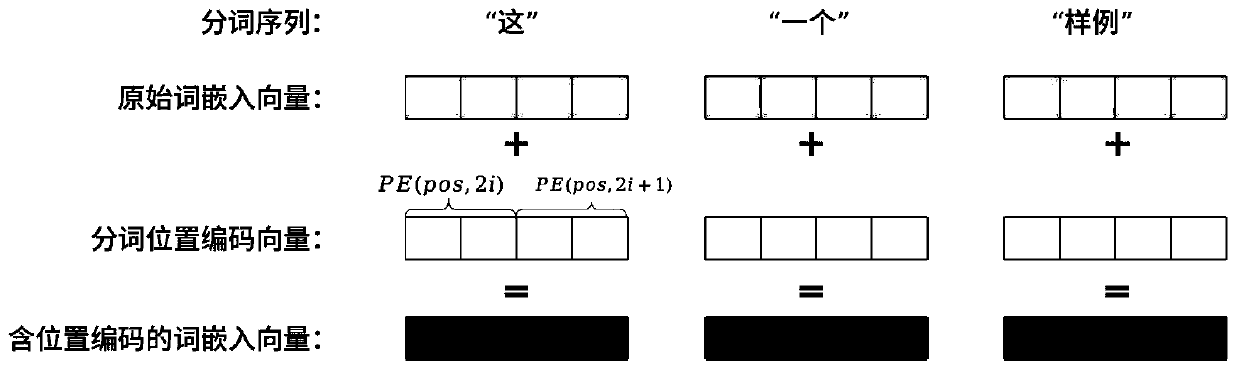

[0032] The technical solutions of the present invention are further described below with reference to specific embodiments and the accompanying drawings. As shown in Figure 1, the present invention provides a ki...

© 2025 PatSnap. All rights reserved.