Video object sound effect searching and matching method, system, device, and readable storage medium

A video object sound effect matching technology, applied in the field of video processing, that addresses slow, time-consuming, and low-accuracy sound effect matching and achieves high matching accuracy.


Example Embodiment

[0052] Example 1:

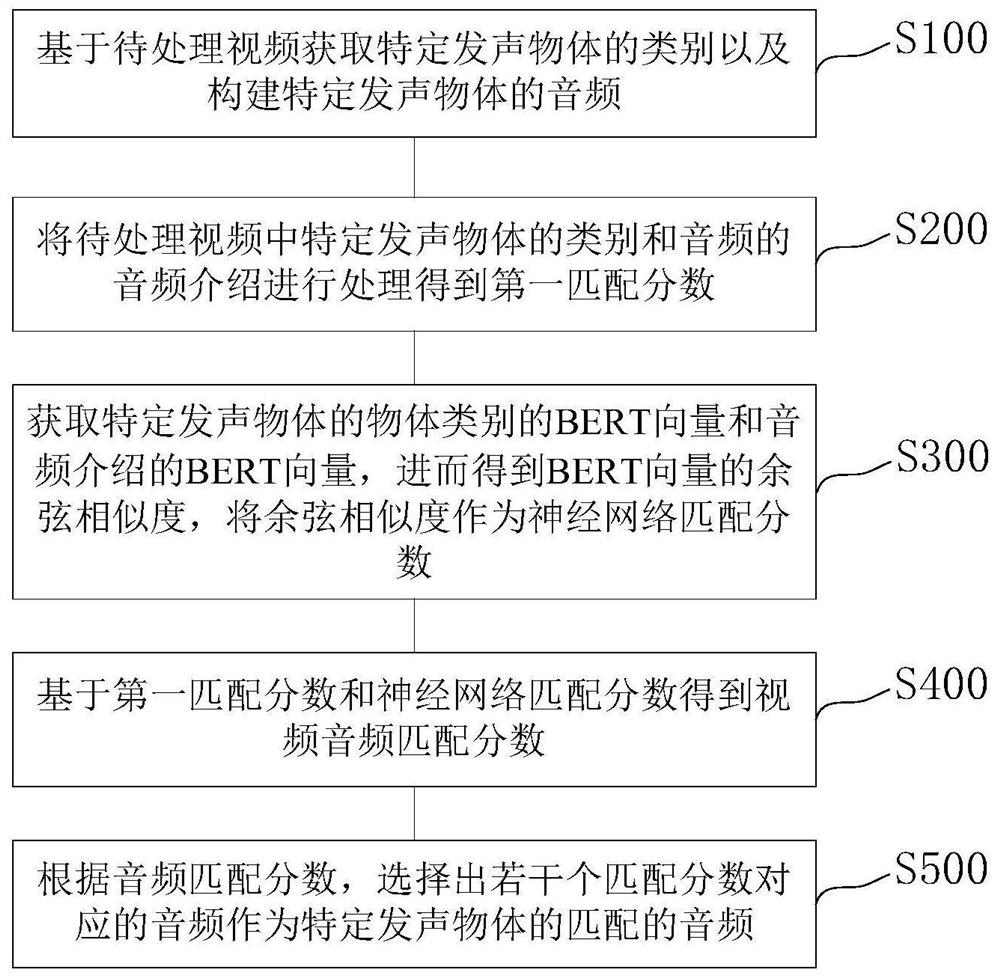

[0053] A video object sound effect search and matching method, as shown in Figure 1, includes the following steps:

[0054] S100: Obtain the category of a specific sound-producing object based on the video to be processed, and construct the audio of the specific sound-producing object;

[0055] S200: Process the category of the specific sound-producing object in the video to be processed and the audio introduction of the audio to obtain a first matching score;

[0056] S300: Obtain the BERT vector of the object category of the specific sound-producing object and the BERT vector of the audio introduction, compute the cosine similarity of the two BERT vectors, and use that cosine similarity as the neural network matching score;

[0057] S400: Obtain a video and audio matching score based on the first matching score and the neural network matching score;

[0058] S500: According to the audio matching scores, select several audios corresponding to the matching scores as the matching audios...
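Steps S300 to S500 can be illustrated with a minimal Python sketch. It is not the patented implementation: the vectors stand in for BERT embeddings that would be computed elsewhere, and the function names `cosine_similarity`, `combined_score`, and `select_top_k` are hypothetical. The excerpt does not say how S400 combines the two scores, so the weighted average used here is purely an assumption.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """S300: cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # convention chosen for this sketch, not from the source
    return dot / (norm_u * norm_v)


def combined_score(first_score: float, nn_score: float, weight: float = 0.5) -> float:
    """S400: ASSUMPTION, a weighted average; the source does not give the formula."""
    return weight * first_score + (1.0 - weight) * nn_score


def select_top_k(scores: Dict[str, float], k: int) -> List[str]:
    """S500: return the ids of the k highest-scoring audios, best first."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [audio_id for audio_id, _ in ranked[:k]]
```

In use, the category vector and each audio introduction vector would be passed to `cosine_similarity`, the result merged with the first matching score via `combined_score`, and the per-audio scores handed to `select_top_k`.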

Example Embodiment

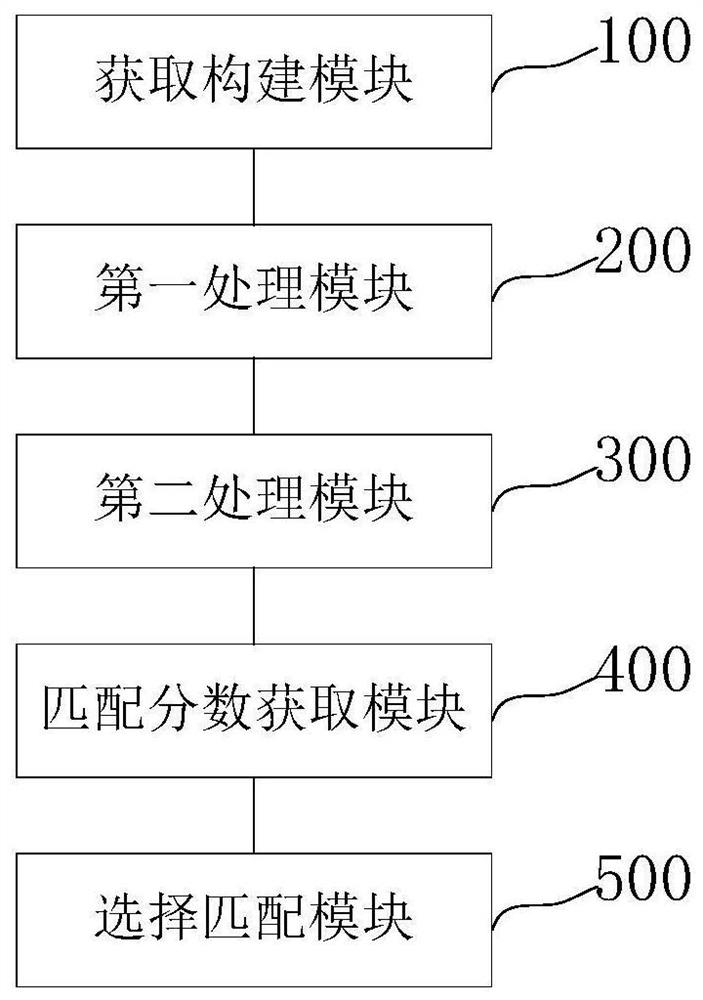

[0103] Embodiment 2: A video object sound effect search and matching system, as shown in Figure 2, includes an acquisition and construction module 100, a first processing module 200, a second processing module 300, a matching score acquisition module 400, and a selection matching module 500;

[0104] The acquisition and construction module 100 is configured to acquire the category of a specific sound-producing object and construct the audio of the specific sound-producing object based on the video to be processed;

[0105] The first processing module 200 is configured to process the category of the specific sound-producing object in the video to be processed and the audio introduction of the audio to obtain a first matching score;

[0106] The second processing module 300 is configured to obtain the BERT vector of the object category of the specific sound-producing object and the BERT vector of the audio introduction, compute the cosine similarity of the two BERT vectors, and use the cosine similarity a...
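One way to picture the five-module layout is as a pipeline of pluggable stages. The sketch below is only an illustration under stated assumptions: the class `SoundEffectMatcher`, its field names, and the callable signatures are hypothetical. The behaviour of modules 400 and 500 is inferred from their names and from steps S400 and S500 of Example 1, since their descriptions are cut off in this excerpt.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class SoundEffectMatcher:
    # Module 100 (assumed shape): video -> (object category, {audio id: audio introduction})
    acquire: Callable[[str], Tuple[str, Dict[str, str]]]
    # Module 200: (category, introduction) -> first matching score
    first_score: Callable[[str, str], float]
    # Module 300: (category, introduction) -> neural network matching score
    neural_score: Callable[[str, str], float]
    # Module 400 (assumed): merges the two scores into one matching score
    combine: Callable[[float, float], float]
    # Module 500 (assumed): picks the matching audio ids from the scores
    select: Callable[[Dict[str, float]], List[str]]

    def run(self, video_path: str) -> List[str]:
        """Chain the five modules for one video and return the selected audio ids."""
        category, introductions = self.acquire(video_path)
        scores = {
            audio_id: self.combine(
                self.first_score(category, intro),
                self.neural_score(category, intro),
            )
            for audio_id, intro in introductions.items()
        }
        return self.select(scores)
```

Passing the stages in as callables keeps the sketch independent of any particular object detector, text matcher, or BERT model, none of which are specified in this excerpt.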

Example Embodiment

[0125] Example 3:

[0126] A computer-readable storage medium storing a computer program; when the computer program is executed by a processor, the following method steps are implemented:

[0127] Obtain the category of a specific sound-producing object based on the video to be processed, and construct the audio of the specific sound-producing object;

[0128] Process the category of the specific sound-producing object in the video to be processed and the audio introduction of the audio to obtain the first matching score;

[0129] Obtain the BERT vector of the object category of the specific sound-producing object and the BERT vector of the audio introduction, compute the cosine similarity of the two BERT vectors, and use that cosine similarity as the neural network matching score;

[0130] Obtain video and audio matching scores based on the first matching score and the neural network matching score;

[0131] According to the audio matching scores, several audios corresponding...
