Unsupervised cross-domain action recognition method based on channel fusion and classifier confrontation

An action recognition technology, applied in the field of computer vision and pattern recognition, that addresses problems such as weak generalization ability.



Embodiment 1

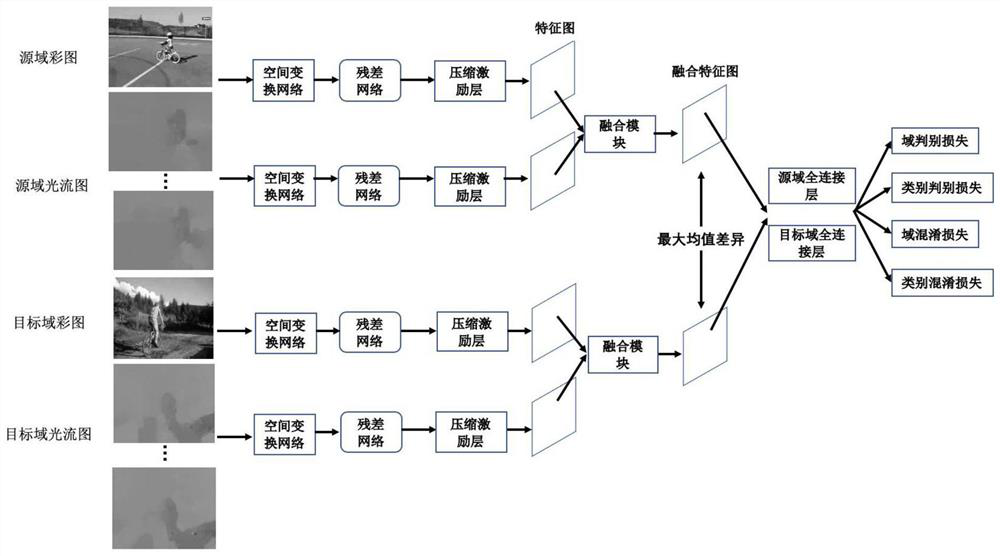

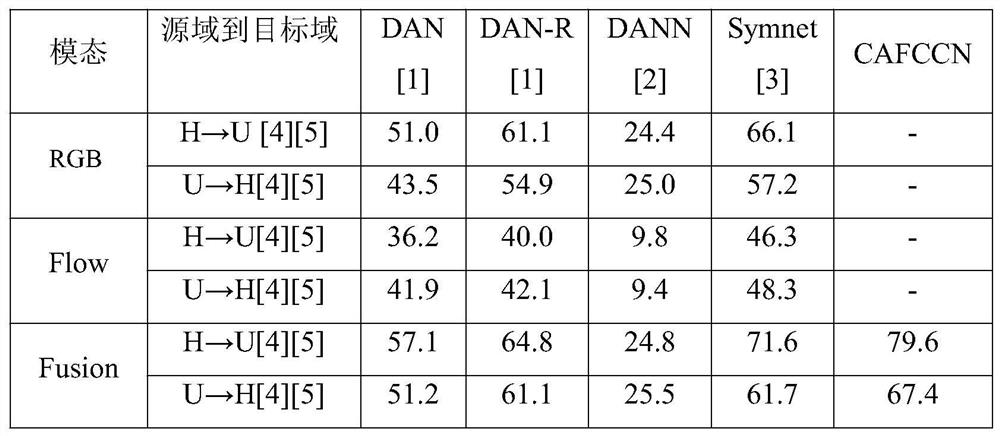

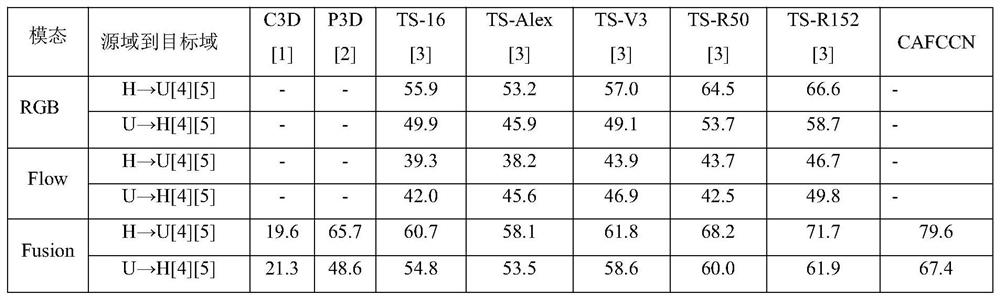

[0039] Figure 1 shows the operation flowchart of the unsupervised cross-domain action recognition method based on channel fusion and classifier confrontation (CAFCCN) of the present invention. The method comprises the following steps:

[0040] Step 10: Selection of the action recognition model

[0041] First, an appropriate model must be selected for the action recognition task.

[0042] In image recognition tasks, methods based on 2D convolution are commonly used, but they cannot be applied directly to action recognition. In action recognition, methods based on 3D convolution model temporal and spatial information simultaneously; however, 3D convolution has a large number of parameters, so a deep network cannot be built and training is difficult. Therefore, the present invention selects a two-stream (dual-stream) method for action recognition, obtains input s...
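To make the parameter argument in [0042] concrete, the following Python sketch counts the learnable weights of a single dense convolution layer. The channel width of 256, the 3×3 and 3×3×3 kernel sizes, and the helper name `conv_params` are illustrative assumptions, not values or names taken from this patent. With the same input and output channels, a 3D kernel adds a temporal extent k_t, which multiplies the weight count by k_t (here 3).

```python
def conv_params(kernel_size, in_channels, out_channels, bias=True):
    """Count learnable parameters of a dense (non-grouped) convolution layer.

    kernel_size is a tuple: (kh, kw) for a 2D kernel, (kt, kh, kw) for a 3D kernel.
    Hypothetical helper for illustration only.
    """
    weights = in_channels * out_channels
    for k in kernel_size:
        weights *= k  # each kernel dimension multiplies the weight count
    return weights + (out_channels if bias else 0)


if __name__ == "__main__":
    c = 256  # assumed channel width, chosen only for illustration
    p2d = conv_params((3, 3), c, c, bias=False)     # 589,824 weights
    p3d = conv_params((3, 3, 3), c, c, bias=False)  # 1,769,472 weights
    print(f"2D 3x3 conv:   {p2d:,}")
    print(f"3D 3x3x3 conv: {p3d:,}")
    print(f"ratio:         {p3d / p2d:.1f}")
```

If every convolution in a network is inflated this way, the factor of k_t applies layer by layer, which is one way to see why deep 3D networks become heavy and hard to train compared with their 2D counterparts.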
