3D human body posture estimation model training method

A technique for pose estimation models and human body pose estimation, applicable to biological neural network models, computing, computer components, and related fields. It addresses problems such as high time complexity and the difficulty of obtaining high-precision 3D coordinates of human joint points.


Embodiment Construction

[0045] Specific implementations of the present invention are described in further detail below with reference to the accompanying drawings and embodiments. The following examples illustrate the present invention but are not intended to limit its scope.

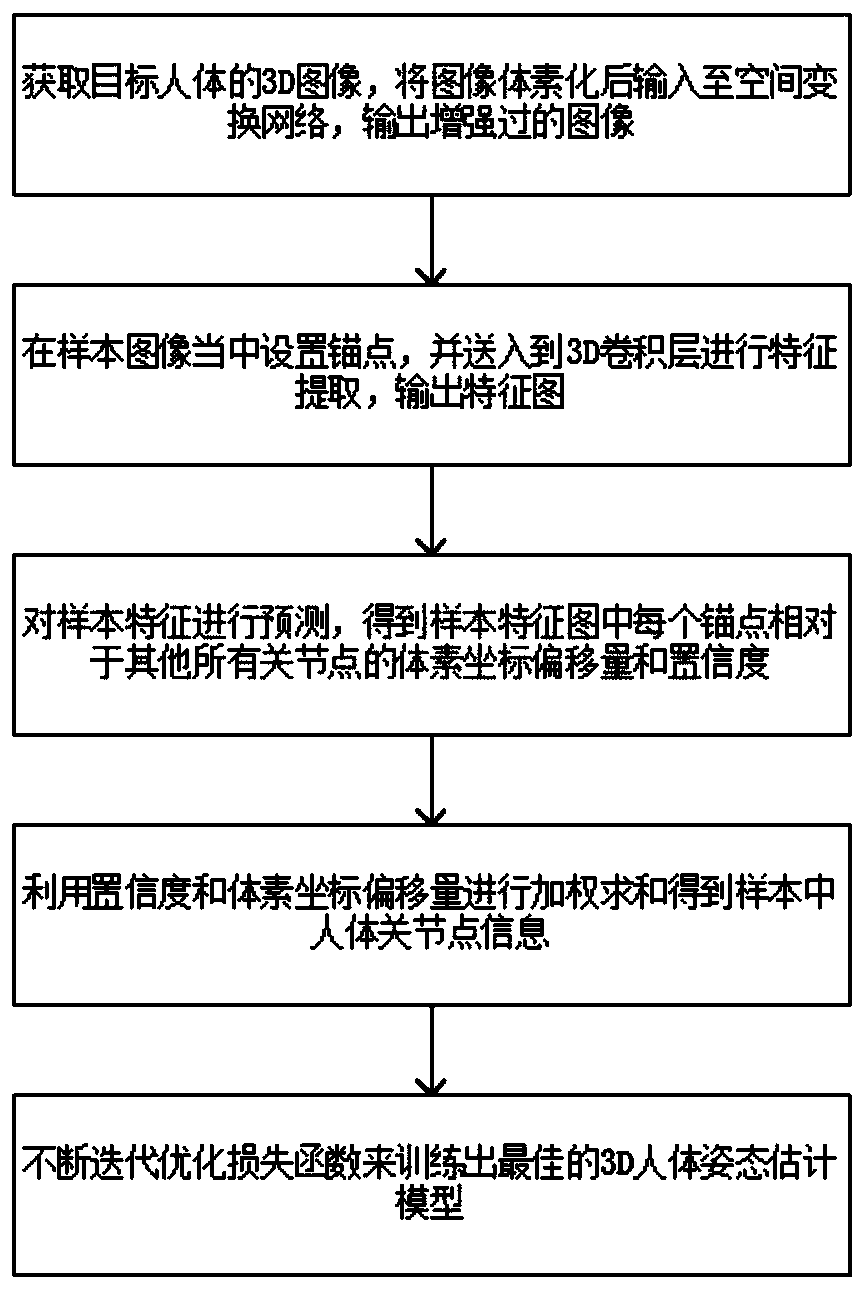

[0046] As shown in Figure 1, a 3D human body pose estimation model training method comprises the following steps:

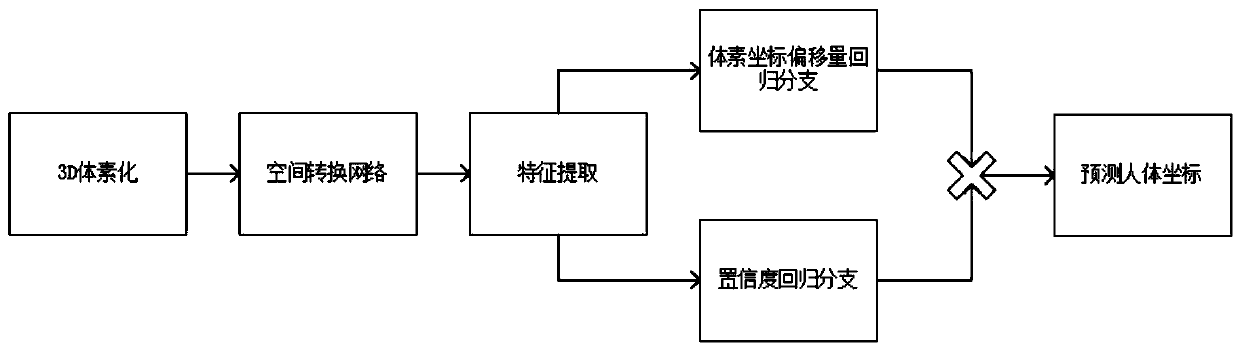

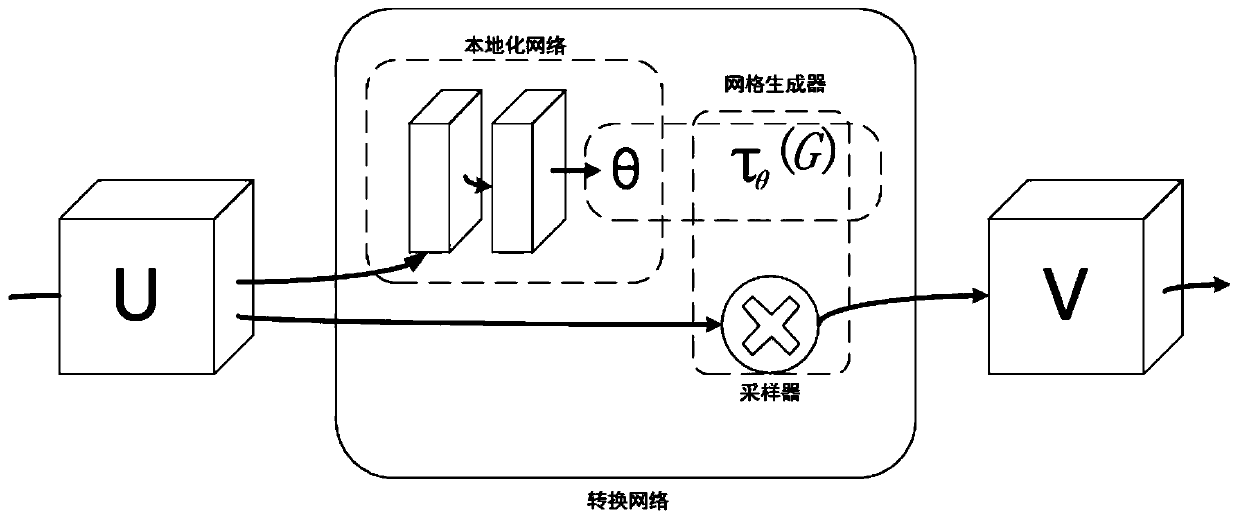

[0047] Step 1: Obtain a 3D image of the target human body, input the image into a spatial transformation network, and output an enhanced image;

[0048] Step 2: Set anchor points in the enhanced image space and input it to the feature extraction layer of the 3D pose estimation network to obtain sample features;

[0049] Step 3: Use the predictor to perform prediction on the sample feature map, obtaining the voxel coordinate offset and confidence of each anchor point in the sample spatial feature map to all other human joint points, and use the confi...
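
The anchor-based prediction in Steps 2 and 3 can be sketched as follows. This is a minimal illustration, not the patented implementation: the source text is truncated before it states how the confidences are used, so the sketch assumes the common anchor-to-joint scheme in which each anchor's confidence is softmax-normalized over all anchors and used as a weight on that anchor's vote (anchor position plus predicted offset). The function names `make_anchor_grid` and `aggregate_joints`, the regular-grid anchor layout, and the stride value are all hypothetical.

```python
import numpy as np


def make_anchor_grid(shape, stride):
    """Place anchors on a regular voxel grid (assumed layout, not from the source).

    shape: (D, H, W) size of the volume in voxels.
    Returns an (A, 3) float array of anchor voxel coordinates.
    """
    axes = [np.arange(stride // 2, s, stride) for s in shape]
    grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1)
    return grid.reshape(-1, 3).astype(np.float64)


def aggregate_joints(anchors, offsets, confidences):
    """Combine per-anchor votes into one 3D coordinate per joint.

    anchors:     (A, 3) anchor voxel coordinates
    offsets:     (A, J, 3) predicted offset from each anchor to each joint
    confidences: (A, J) raw confidence score of each anchor for each joint
    Returns a (J, 3) array of joint coordinates.
    """
    # Assumption: softmax over the anchor axis, so the weights for each
    # joint are positive and sum to 1.
    z = confidences - confidences.max(axis=0, keepdims=True)
    weights = np.exp(z)
    weights /= weights.sum(axis=0, keepdims=True)

    # Each anchor votes for a joint position: its own location plus its offset.
    candidates = anchors[:, None, :] + offsets  # (A, J, 3)

    # Confidence-weighted average of the votes.
    return (weights[:, :, None] * candidates).sum(axis=0)


# Usage: with perfect offsets, any confidence values recover the targets.
anchors = make_anchor_grid((8, 8, 8), stride=4)
targets = np.array([[1.0, 2.0, 3.0], [5.0, 5.0, 5.0]])
offsets = targets[None, :, :] - anchors[:, None, :]
conf = np.random.default_rng(0).normal(size=(len(anchors), len(targets)))
joints = aggregate_joints(anchors, offsets, conf)
```

In a real training setup the offsets and confidences would come from the predictor applied to the sample feature map, and a loss on the aggregated coordinates would drive both outputs. Here they are constructed by hand only to show the shapes involved.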

